Artificial-intelligence systems are increasingly limited by the hardware used to implement them. Now comes a new superconducting photonic circuit that mimics the links between brain cells—burning just 0.3 percent of the energy of its human counterparts while operating some 30,000 times as fast.

In artificial neural networks, components called neurons are fed data and cooperate to solve a problem, such as recognizing faces. The neural net repeatedly adjusts the synapses—the links between its neurons—and determines whether the resulting patterns of behavior are better at finding a solution. Over time, the network discovers which patterns are best at computing results. It then adopts these patterns as defaults, mimicking the process of learning in the human brain.

Although AI systems are increasingly finding real-world applications, they face a number of major challenges stemming from the hardware used to run them. One approach researchers have investigated is to develop brain-inspired “neuromorphic” computer hardware.

For instance, neuromorphic microchip components may “spike,” or generate an output signal, only after they receive a certain number of input signals within a given time, a strategy that more closely mimics how real biological neurons behave. By firing spikes only rarely, these devices shuffle around much less data than typical artificial neural networks and, in principle, require much less power and communication bandwidth.
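The spiking behavior described above can be sketched in a few lines of Python. This is a toy illustration only, not a model of any real neuromorphic chip: the class name `WindowedSpikingNeuron`, the threshold of three inputs, and the two-unit time window are all hypothetical choices made for the example.

```python
from collections import deque


class WindowedSpikingNeuron:
    """Toy neuron: spikes once `threshold` inputs arrive within `window` time units.

    All names and parameter values here are illustrative assumptions.
    """

    def __init__(self, threshold, window):
        self.threshold = threshold
        self.window = window
        self.arrivals = deque()  # arrival times of recent inputs

    def receive(self, t):
        """Register an input at time t; return True if the neuron spikes."""
        self.arrivals.append(t)
        # Forget inputs that arrived more than `window` time units ago.
        while t - self.arrivals[0] > self.window:
            self.arrivals.popleft()
        if len(self.arrivals) >= self.threshold:
            self.arrivals.clear()  # reset after firing
            return True
        return False


# Six inputs arrive, but only one cluster is dense enough to trigger a spike.
input_times = [0.0, 1.0, 9.0, 9.5, 10.0, 30.0]
neuron = WindowedSpikingNeuron(threshold=3, window=2.0)
spike_times = [t for t in input_times if neuron.receive(t)]
print(f"{len(input_times)} inputs produced {len(spike_times)} spike(s) at {spike_times}")
```

In this run, six input events yield a single output event, which is the sense in which sparse spiking reduces the amount of data that has to be moved around.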

However, neuromorphic hardware typically uses conventional electronics, which ends up limiting its complexity and speed. For example, biological neurons can each possess tens of thousands of synapses, but neuromorphic devices struggle to connect their artificial neurons to even a few others. One solution is multiplexing, in which a single data channel carries many signals at the same time. However, as chips become larger and more intricate, computations may slow down.

In a new study, researchers explored using optical transmitters and receivers to connect neurons instead. Optical links, or waveguides, can in principle connect each neuron with thousands of others at light-speed communication rates.

The scientists used superconducting nanowire devices capable of detecting single photons. A single photon is the smallest possible optical signal, which makes it the physical limit of energy efficiency.

Performing photonic neural computations is often tricky because optical cavities that can entrap light for long spans of time are usually needed. Creating such cavities, and linking them with many waveguides, is very challenging to accomplish on an integrated microchip.

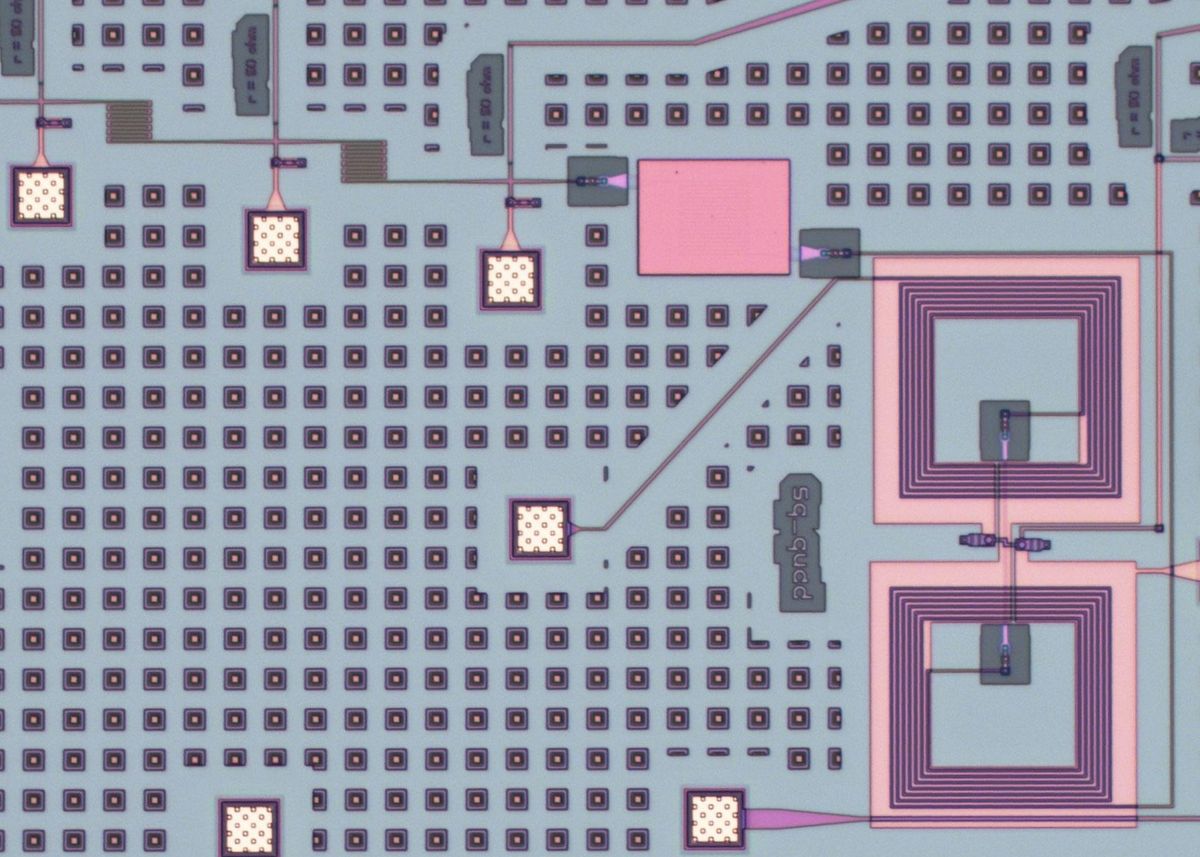

Instead, the researchers developed hybrid circuitry, in which the output signals from each detector were converted to ultrafast electrical pulses roughly 2 picoseconds long. These pulses each consisted of a single quantum of magnetic flux, or fluxon, within a network of superconducting quantum-interference devices, or SQUIDs.

“We’ve been doing theoretical work for years to try to identify the principles that will enable technology that can achieve the physical limits of neuromorphic computing,” says Jeffrey Shainline, a researcher at NIST. “Pursuit of that objective has delivered us to this concept—combine optical communication at the single-photon level with neural computation performed by Josephson junctions.”

SQUIDs consist of one or more Josephson junctions, each a sandwich of superconducting materials separated by a thin insulating film. If the current through the Josephson junction exceeds a certain threshold value, the SQUID begins to produce fluxons.

Upon sensing a photon, the single-photon detector yields fluxons, which collect as current in the SQUID’s superconducting loop. This stored current serves as a form of memory, providing a record of how many times a neuron produced a spike.
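As a rough intuition for how a stored current can act as memory, the following Python sketch treats the loop as a simple accumulator. It is a toy under stated assumptions, not the researchers' circuit model: the class `ToySynapticLoop`, the one-fluxon-per-photon default, and the exponential decay with a 1-microsecond time constant are all hypothetical, and the stored quantity is tracked in arbitrary "fluxon" units.

```python
import math


class ToySynapticLoop:
    """Toy accumulator standing in for current stored in a superconducting loop.

    Assumptions (not from the study): each detected photon adds a fixed
    number of fluxons, and the stored amount decays exponentially.
    """

    def __init__(self, fluxons_per_photon=1.0, decay_time_s=1e-6):
        self.fluxons_per_photon = fluxons_per_photon  # hypothetical value
        self.decay_time_s = decay_time_s              # hypothetical value
        self.stored = 0.0
        self.last_t = 0.0

    def _decay_to(self, t):
        # Apply exponential decay for the time elapsed since the last update.
        # Times are assumed to be non-decreasing.
        self.stored *= math.exp(-(t - self.last_t) / self.decay_time_s)
        self.last_t = t

    def detect_photon(self, t):
        """A photon arrives at time t (seconds) and adds to the stored amount."""
        self._decay_to(t)
        self.stored += self.fluxons_per_photon

    def read(self, t):
        """Return the stored amount at time t (seconds)."""
        self._decay_to(t)
        return self.stored


loop = ToySynapticLoop(decay_time_s=1e-6)
for t in (0.0, 1e-7, 2e-7):       # three photons, 100 ns apart
    loop.detect_photon(t)
soon = loop.read(3e-7)             # shortly after the burst
later = loop.read(1e-5)            # ten microseconds in
print(f"stored shortly after burst: {soon:.3f}, much later: {later:.6f}")
```

Shortly after the burst the stored value still reflects recent activity, while much later it has faded. How long that record persists in the real devices depends on circuit details not covered here.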

“It was surprising that it was pretty easy to get the circuits to work,” Shainline says. “The fabrication and experiments took quite a bit of time in the design phase, but the circuits actually worked the first time we fabricated them. That bodes well for the future scalability of such systems.”

The scientists integrated the single-photon detector with the Josephson junction, creating a superconducting synapse. They calculated that the synapses are capable of spike rates exceeding 10 million hertz while consuming roughly 33 attojoules of energy per synaptic event (an attojoule is 10⁻¹⁸ of a joule). In contrast, human neurons have a maximum average spike rate of about 340 hertz and consume roughly 10 femtojoules per synaptic event (a femtojoule is 10⁻¹⁵ of a joule).
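The headline comparison follows directly from these four figures. A quick Python check, using only the numbers quoted in this article (the variable names are ours), reproduces the "some 30,000 times as fast" and "0.3 percent of the energy" claims:

```python
# Figures quoted in the article.
synapse_rate_hz = 10e6       # superconducting synapse: more than 10 million hertz
neuron_rate_hz = 340         # human neuron: about 340 hertz maximum average
synapse_energy_j = 33e-18    # about 33 attojoules per synaptic event
neuron_energy_j = 10e-15     # about 10 femtojoules per synaptic event

speedup = synapse_rate_hz / neuron_rate_hz
energy_fraction = synapse_energy_j / neuron_energy_j

print(f"speed ratio: about {speedup:,.0f} times")
print(f"energy per event: about {energy_fraction * 100:.2f} percent of the biological figure")
```

The speed ratio comes out just under 30,000, and the energy per synaptic event is about a third of one percent of the biological value.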

“When I look around at all the concepts that have been unearthed, I really feel like we’re onto something,” Shainline says. “It could be profoundly transformative.”

In addition, the scientists can vary the timescale of the output from their devices, from hundreds of nanoseconds to milliseconds. This means the hardware can interface with a range of systems, from high-speed electronics to more leisurely interactions with humans.

In the future, the researchers will combine their new synapses with on-chip sources of light to create fully integrated superconducting neurons.

“There are big remaining challenges there, but if we can get that last part integrated, there are many reasons to think the result could be a computational platform of immense power for artificial intelligence,” Shainline says.

The scientists recently detailed their findings online in the journal Nature Electronics.

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.