A brain-inspired chip from IBM, dubbed NorthPole, is more than 20 times as fast as—and roughly 25 times as energy efficient as—any microchip currently on the market when it comes to artificial intelligence tasks. According to a study from IBM, applications for the new silicon chip may include autonomous vehicles and robotics.

Brain-inspired computer hardware aims to mimic a human brain’s exceptional ability to rapidly perform computations in an extraordinarily energy-efficient manner. These machines are often used to implement neural networks, which similarly imitate the way a brain learns and operates.

One strategy often pursued with brain-inspired electronics is copying the way in which biological neurons both compute and store data. Uniting processors and memory can dramatically reduce the energy and time that computers lose in shuttling data between those components.

“The brain is vastly more energy-efficient than modern computers, in part because it stores memory with compute in every neuron,” says study lead author Dharmendra Modha, IBM’s chief scientist for brain-inspired computing.

“NorthPole merges the boundaries between brain-inspired computing and silicon-optimized computing, between compute and memory, between hardware and software,” Modha says.

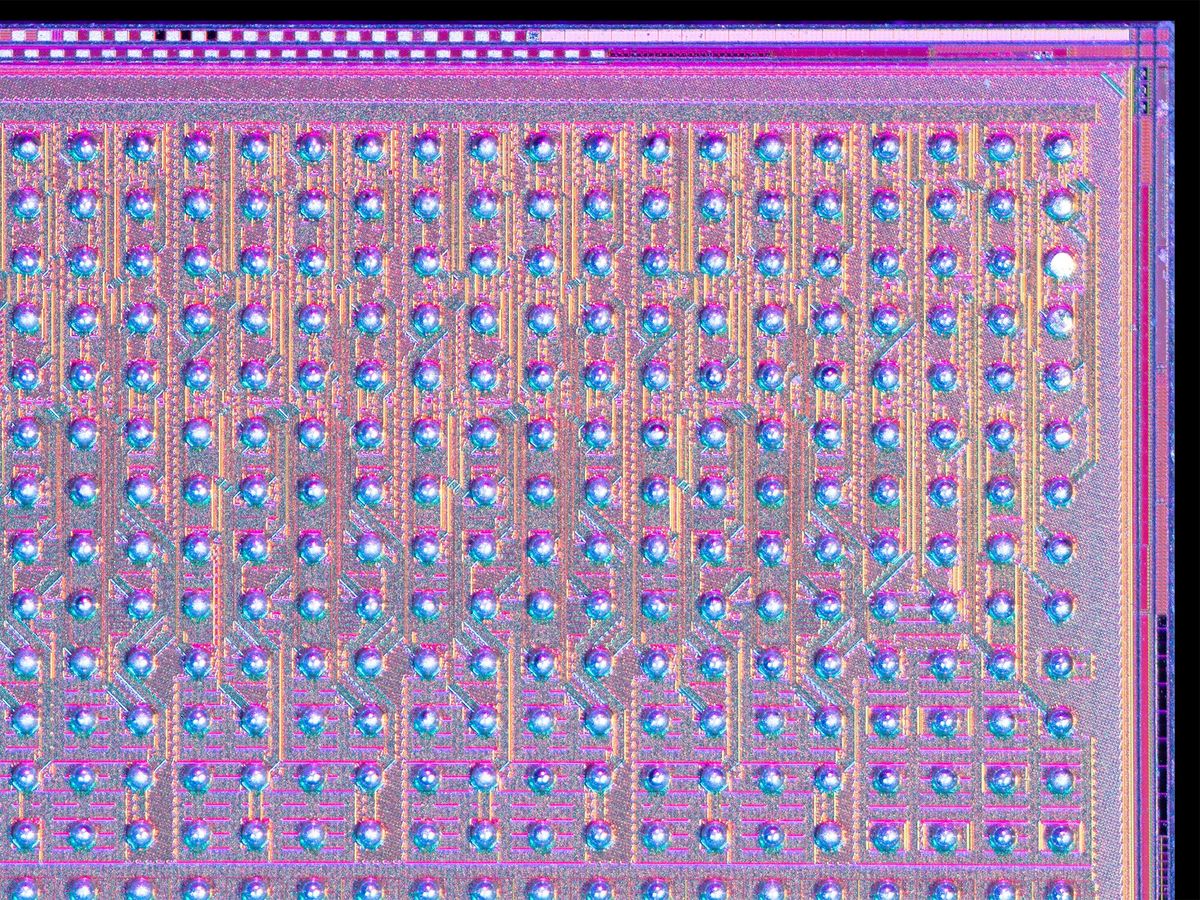

The new chip is optimized for 2-bit, 4-bit, and 8-bit low-precision operations. This is enough to achieve state-of-the-art accuracy on many neural networks while dispensing with the high precision required for training, the researchers say. Operating at a frequency range of 25 to 425 megahertz, the research prototype can perform 2,048 operations per core per cycle at 8-bit precision, and 8,192 operations at 2-bit precision.
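To put those per-cycle figures in perspective, the short Python sketch below converts them into per-core operations per second at the top of the stated frequency range. It uses only the numbers quoted above. The function name is illustrative, and the results are theoretical peaks, not measured performance.

```python
# Peak per-core throughput derived from the figures quoted in the article.
# These are theoretical upper bounds, not benchmark results.

OPS_PER_CORE_PER_CYCLE = {8: 2_048, 2: 8_192}  # precision in bits -> ops per cycle
MAX_CLOCK_HZ = 425_000_000  # top of the 25 to 425 MHz range


def peak_ops_per_second(bits: int, clock_hz: int = MAX_CLOCK_HZ) -> int:
    """Return the peak operations per second for one core at a given precision."""
    return OPS_PER_CORE_PER_CYCLE[bits] * clock_hz


if __name__ == "__main__":
    for bits in (8, 2):
        giga_ops = peak_ops_per_second(bits) / 1e9
        print(f"{bits}-bit: {giga_ops:.1f} billion ops/s per core")
```

At 425 MHz this works out to roughly 870 billion 8-bit operations per second per core, and four times that at 2-bit precision.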

NorthPole, developed over the past eight years, builds on TrueNorth, IBM’s last brain-inspired chip. TrueNorth, which debuted in 2014, boasted a power consumption four orders of magnitude lower than that of conventional microprocessors of its time.

“The main motivation for NorthPole was to dramatically reduce the potential capital cost of TrueNorth,” Modha says.

The scientists tested NorthPole with two AI systems: the ResNet-50 image classification network and the YOLOv4 object detection network. When compared with Nvidia’s V100 GPU, made using a comparable 12-nanometer node, NorthPole was 25 times as energy efficient and 22 times as fast, while taking up one-fifth of the area.

NorthPole also outperformed all other chips on the market, even ones made with more advanced nodes. For example, compared with Nvidia’s H100 GPU, implemented using a 4-nm node, NorthPole was five times more energy efficient. And NorthPole proved roughly 4,000 times as fast as TrueNorth.

“The paper represents a tour de force of engineering,” says Vwani Roychowdhury, a computing and AI scientist at the University of California, Los Angeles, who was not involved with the study.

The new chip’s speed and efficiency stem from the fact that all of its memory sits on the chip itself. As a result, each core can access memory anywhere on the chip with almost equal ease.

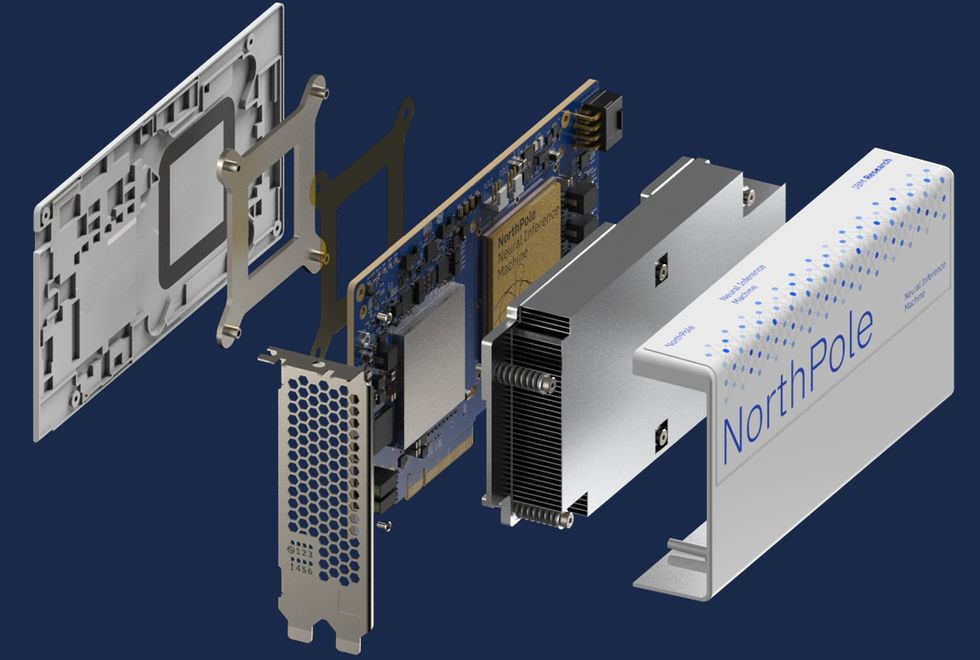

In addition, from outside the device, NorthPole appears as an active memory chip, Modha says. This helps integrate NorthPole into systems.

Potential applications for NorthPole may include image and video analysis, speech recognition, and the neural networks known as transformers that underlie the large language models (LLMs) powering chatbots such as ChatGPT, Modha says. IBM suggests these AI tasks might find use in autonomous vehicles, robotics, digital assistants, and satellite observations, among other possibilities.

Some applications demand neural networks too large to fit on a single NorthPole chip. In such cases, these networks can be broken down into smaller parts that can be spread across multiple NorthPole chips, Modha says.
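The article does not describe how IBM divides a network among chips. As a rough illustration of the general idea only, the Python sketch below packs consecutive layers onto chips until a per-chip memory budget is reached. The function name, the layer sizes, and the 200-megabyte budget are all hypothetical values chosen for the example.

```python
def split_layers(layer_sizes_mb: list[float], chip_memory_mb: float) -> list[list[int]]:
    """Greedily pack consecutive layers onto chips; return layer indices per chip."""
    chips: list[list[int]] = []
    current: list[int] = []
    used = 0.0
    for index, size in enumerate(layer_sizes_mb):
        if size > chip_memory_mb:
            raise ValueError(f"layer {index} does not fit on a single chip")
        # Start a new chip when the next layer would exceed the budget.
        if current and used + size > chip_memory_mb:
            chips.append(current)
            current, used = [], 0.0
        current.append(index)
        used += size
    if current:
        chips.append(current)
    return chips


if __name__ == "__main__":
    # Hypothetical layer sizes (MB) and a hypothetical 200 MB per-chip budget.
    print(split_layers([60, 80, 50, 120, 40], 200))  # [[0, 1, 2], [3, 4]]
```

A real partitioning scheme would also have to weigh how much data moves between chips, which this sketch ignores.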

NorthPole’s efficiency means that it does not need bulky liquid-cooling systems to run. Fans and heat sinks are more than enough, IBM notes, which means the chip could be deployed in much smaller spaces.

The scientists note that IBM fabricated NorthPole with a 12-nm node process. The current state of the art for CPUs is 3 nm, and IBM has spent years researching 2-nm nodes. This suggests further gains with this brain-inspired strategy may prove readily available, the company says.

NorthPole’s type of architecture is often called compute-in-memory, which can be either digital or analog. In digital compute-in-memory systems such as NorthPole, many circuits are required to run the multiply-accumulate (MAC) operations that are the most basic computations in neural networks. In contrast, analog compute-in-memory systems possess components that are far better suited to performing these operations.
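For readers unfamiliar with the term, a multiply-accumulate operation multiplies two numbers and adds the product to a running total. The Python sketch below shows the idea for a single artificial neuron with 8-bit integer inputs and weights. The function name and sample values are made up for illustration, and the code says nothing about how NorthPole’s circuits are actually built.

```python
def mac_dot_product(inputs: list[int], weights: list[int]) -> int:
    """Compute a neuron's weighted sum as a chain of multiply-accumulate steps."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    accumulator = 0
    for x, w in zip(inputs, weights):
        # Each operand must fit in a signed 8-bit integer.
        if not (-128 <= x <= 127 and -128 <= w <= 127):
            raise ValueError("values must fit in signed 8 bits")
        accumulator += x * w  # one multiply-accumulate (MAC)
    return accumulator


if __name__ == "__main__":
    print(mac_dot_product([3, -2, 7], [10, 4, -1]))  # 30 - 8 - 7 = 15
```

A neural network layer repeats this pattern once per neuron, which is why hardware that performs many MACs at once matters so much for AI workloads.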

Analog compute-in-memory systems need less power and space than their digital counterparts. However, these analog systems often require new materials and manufacturing techniques, whereas NorthPole was fabricated using conventional semiconductor manufacturing techniques.

“Given that analog systems are yet to reach technological maturity, this work presents a near-term option for AI to be deployed close to where it is needed,” Roychowdhury says.

The scientists detailed their findings in the 21 October issue of the journal Science.

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.