Brain-inspired “neuromorphic” microchips from IBM and Intel might be good for more than just artificial intelligence; they may also prove ideal for a class of computations useful in a broad range of applications including analysis of medical X-rays and financial economics, a new study finds.

Scientists have long sought to mimic how the brain works using software programs known as neural networks and hardware known as neuromorphic chips. So far, neuromorphic computing has mostly focused on implementing neural networks. It was unclear whether this hardware might prove useful beyond AI applications.

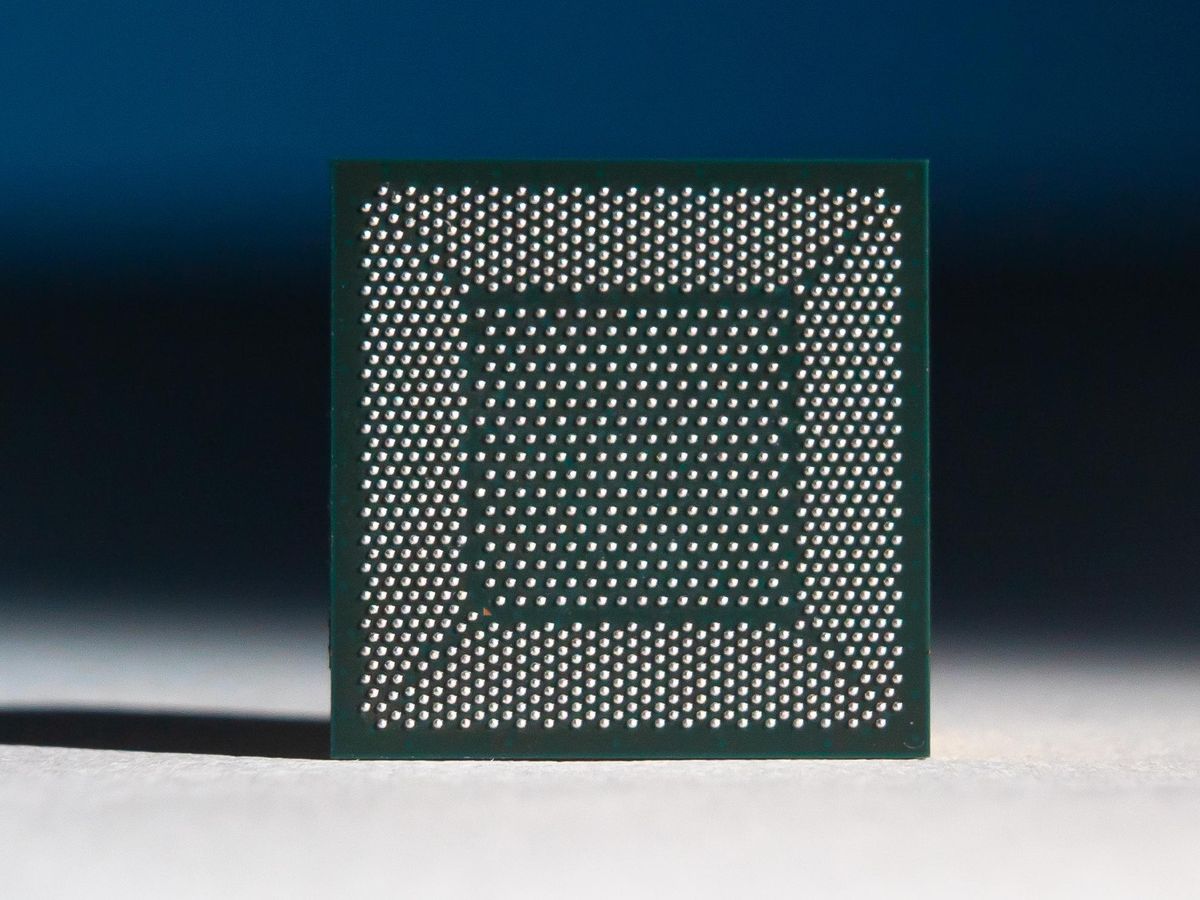

Neuromorphic chips typically imitate the workings of neurons in a number of different ways, such as running many computations in parallel. In addition, just as biological neurons both compute and store data, neuromorphic hardware often seeks to unite processors and memory, potentially reducing the energy and time that conventional computers lose in shuttling data between those components. Furthermore, whereas conventional microchips use clock signals fired at regular intervals to coordinate the actions of circuits, the activity in neuromorphic architecture often acts in a spiking manner, triggered only when an electrical charge reaches a specific value, much like what happens in brains like ours.

Until now, the main advantage envisioned for neuromorphic computing was power efficiency: Features such as spiking and the uniting of memory and processing gave IBM’s TrueNorth chip a power density four orders of magnitude lower than that of conventional microprocessors of its time.

“We know from a lot of studies that neuromorphic computing is going to have power-efficiency advantages, but in practice, people won’t care about power savings if it means you go a lot slower,” says study senior author James Bradley Aimone, a theoretical neuroscientist at Sandia National Laboratories in Albuquerque.

Now scientists find neuromorphic computers may prove well suited to what are called Monte Carlo methods, where problems are essentially treated as games and solutions are arrived at via many random simulations or “walks” of those games.

“We came to random walks by considering problems that do not scale very well on conventional hardware,” Aimone says. “Typically Monte Carlo solutions require a lot of random walks to provide a good solution, and while individually what each walker does over time is not difficult to compute, in practice, having to do a lot of them becomes prohibitive.”

In contrast, “instead of modeling a bunch of random walkers in parallel, each doing its own computation, we can program a single neuromorphic mesh of circuits to represent all of the computations a random walk may do, and then by thinking of each random walk as a spike moving over the mesh, solve the whole problem at one time,” Aimone says.

Specifically, just as previous research has found quantum computing can display a “quantum advantage” over classical computing on a large set of problems, the researchers discovered a “neuromorphic advantage” may exist when it comes to random walks via discrete-time Markov chains. If you imagine a problem as a board game, here “chain” means playing the game by moving through a sequence of states or spaces. “Markov” means the next space you can move to in the game depends only on your current space, and not on your previous history, as is the case in board games such as Monopoly or Candy Land. “Discrete-time” simply means “that a fixed time interval happens between changing spaces: a turn,” says study lead author Darby Smith, an applied mathematician at Sandia.
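To make the board-game picture concrete, here is a minimal Python sketch of a Monte Carlo estimate built from a discrete-time Markov chain. It is an illustration only, not the researchers' code: the ring-shaped board of eight spaces, the move probabilities, and the function names `step`, `simulate_walkers`, and `exact_distribution` are all assumptions chosen for the example. Many independent walkers each take a fixed number of turns, and the fraction that ends on each space approximates the exact probability.

```python
import random

# Hypothetical move rule for this example: step back, stay, or step forward.
MOVES = [-1, 0, 1]
WEIGHTS = [0.25, 0.5, 0.25]

def step(state, n_spaces, rng):
    """Take one turn. The next space depends only on the current space,
    which is the Markov property."""
    move = rng.choices(MOVES, weights=WEIGHTS)[0]
    return (state + move) % n_spaces

def simulate_walkers(n_walkers, n_turns, n_spaces=8, start=0, seed=0):
    """Monte Carlo estimate: run many independent walkers and return the
    fraction that ends on each space after n_turns turns."""
    rng = random.Random(seed)
    counts = [0] * n_spaces
    for _ in range(n_walkers):
        state = start
        for _ in range(n_turns):  # discrete time: one move per turn
            state = step(state, n_spaces, rng)
        counts[state] += 1
    return [c / n_walkers for c in counts]

def exact_distribution(n_turns, n_spaces=8, start=0):
    """Exact answer for comparison, found by pushing probability mass
    through the same move rule one turn at a time."""
    dist = [0.0] * n_spaces
    dist[start] = 1.0
    for _ in range(n_turns):
        new = [0.0] * n_spaces
        for space, p in enumerate(dist):
            for move, w in zip(MOVES, WEIGHTS):
                new[(space + move) % n_spaces] += w * p
        dist = new
    return dist

if __name__ == "__main__":
    estimate = simulate_walkers(n_walkers=20000, n_turns=5)
    exact = exact_distribution(n_turns=5)
    for space, (e, x) in enumerate(zip(estimate, exact)):
        print(f"space {space}: estimated {e:.3f}, exact {x:.3f}")
```

The estimate tightens only as the number of walkers grows, which is why the cost of simulating many walkers dominates on conventional hardware.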

In experiments with IBM’s TrueNorth and Intel’s Loihi neuromorphic chips, a server-class Intel Xeon E5-2662 CPU, and an Nvidia Titan Xp GPU, the scientists found that when it comes to solving this class of problems at large scales, neuromorphic chips proved more efficient than the conventional semiconductors in terms of energy consumption, and competitive, if not better, in terms of time.

“I very much believe that neuromorphic computing for AI is very exciting, and that brain-inspired hardware will lead to smarter and more powerful AI,” says Aimone. “But at the same time, by showing that neuromorphic computing can be impactful at conventional computing applications, the technology has the potential to have much broader impact on society.”

One way the neuromorphic chips achieved their advantages in performance and energy efficiency was a high degree of parallelism. Compounding that was the ability to represent each random walk as a single spike of activity instead of a more complex set of activities.

“The big limitation on these Monte Carlo methods is that we have to model a lot of walkers,” Aimone says. “Because spikes are such a simple way of representing a random walk, adding an extra random walker is just adding one extra spike to the system that will run in parallel with all of the others. So the time cost of an extra walker is very cheap.”
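A rough software analogy for the mesh idea is sketched below in Python. It is an assumption-laden illustration, not how TrueNorth or Loihi are actually programmed: the ring of eight nodes, the move probabilities, and the names `mesh_step` and `run_mesh` are hypothetical. Rather than tracking each walker separately, the state is simply the number of spikes sitting on each node, so adding a walker means incrementing one count.

```python
import random

# Hypothetical move rule for this example: step back, stay, or step forward.
MOVES = [-1, 0, 1]
WEIGHTS = [0.25, 0.5, 0.25]

def mesh_step(counts, rng):
    """Advance every node of the mesh by one turn.
    counts[i] is the number of spikes (walkers) currently on node i."""
    n_nodes = len(counts)
    new_counts = [0] * n_nodes
    for node, n_spikes in enumerate(counts):
        if n_spikes == 0:
            continue  # a node with no spikes does no work this turn
        # Each spike on this node independently picks its next node.
        moves = rng.choices(MOVES, weights=WEIGHTS, k=n_spikes)
        for move in moves:
            new_counts[(node + move) % n_nodes] += 1
    return new_counts

def run_mesh(n_walkers, n_turns, n_nodes=8, start=0, seed=0):
    """Place all walkers on the start node as spikes, then update the mesh."""
    rng = random.Random(seed)
    counts = [0] * n_nodes
    counts[start] = n_walkers  # one extra walker is just one extra spike
    for _ in range(n_turns):
        counts = mesh_step(counts, rng)
    return counts

if __name__ == "__main__":
    print(run_mesh(n_walkers=1000, n_turns=10))
```

On an ordinary CPU this loop still does work in proportion to the number of spikes. The advantage Aimone describes comes from hardware in which the nodes update in parallel, which this sketch does not reproduce.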

The ability to efficiently tackle this class of problems has a wide range of potential applications, such as modeling stocks and options in financial markets, better understanding how X-rays interact with bone and soft tissue, tracking how information moves on social networks, and modeling how diseases travel through population clusters, Smith notes. “Applications of this approach are everywhere,” Aimone says.

But just because a neuromorphic advantage may exist for some problems “does not mean that brain-inspired computers can do everything better than normal computers,” Aimone cautions. “The future of computing is likely a mix of different technologies. We are not trying to say neuromorphic will supplant everything.”

The scientists are now investigating ways to handle interactions between multiple “walkers” or participants in these scenarios, which will enable applications such as molecular dynamics simulations, Aimone notes. They are also developing software tools to help other developers work on this research, he adds.

The scientists detailed their findings in the 14 February online edition of the journal Nature Electronics.

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.