Achieving the edge computing envisioned for a host of applications, including 5G networks and the Internet of Things (IoT), means packing a lot of processing power into comparatively small devices.

The way forward for that idea will be to leverage artificial intelligence (AI) computing techniques—for so-called AI at the edge. While some are concerned about how technologists will tackle AI for applications beyond traditional computing—and some are wringing their hands over which country will have the upper hand in this new frontier—the technology is still pretty early in its development cycle.

But that early-stage status appears to be about to change. Researchers at the Université de Sherbrooke in Québec, Canada, have managed to equip a microelectromechanical system (MEMS) device with a form of artificial intelligence, marking the first time that any type of AI has been included in a MEMS device. The result is a kind of neuromorphic computing that operates like the human brain but in a microscale device. The combination makes it possible to process data on the device itself, thus improving the prospects for edge computing.

“We had already written a paper last year showing theoretically that MEMS AI could be done,” said Julien Sylvestre, a professor at Sherbrooke and coauthor of the research paper detailing the advance. “Our latest breakthrough was to demonstrate a device that could do it in the lab.”

The AI method the researchers demonstrated, which is described in the Journal of Applied Physics, is called “reservoir computing.” Sylvestre explains that to understand reservoir computing, you first need to understand a bit about how artificial neural networks (ANNs) operate. ANNs take in data through an input layer, transform that data in a hidden layer of computational units often called neurons, and then give the final interpretation of that data in an output layer. Reservoir computing is most often used on inputs that depend on time (as opposed to inputs such as images, which are static).

So reservoir computing uses a dynamical system driven by the time-dependent input. The dynamical system is chosen to be relatively complex, so its response to the input can be fairly different from the input itself.

Also, the system is chosen to have multiple degrees of freedom for responding to the input. As a result, the input is “mapped” to a trajectory in a high-dimensional space, each dimension corresponding to one of the degrees of freedom. This creates a lot of information “richness,” meaning that there have been many different transformations of the input.

“The special trick used by reservoir computing is to combine all the dimensions linearly to get an output that corresponds to what we want the computer to give as an answer for a given input,” said Sylvestre. “That’s what we call ‘training’ the reservoir computing. The linear combination is very simple to compute, unlike other approaches to AI, where one would attempt to modify the inner working of the dynamical system to get the desired output.”
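To make that idea concrete, here is a minimal software sketch of reservoir computing. It is not the researchers' MEMS device or their code: the reservoir is a small random recurrent network with a tanh nonlinearity (a common software stand-in), and the function names, node count, scaling values, and the toy sine-wave task are all assumptions chosen for illustration. The point it shows is the one Sylvestre describes: the dynamical system is left fixed, and only a linear combination of its states is fitted.

```python
import numpy as np

def run_reservoir(u, n_nodes=50, spectral_radius=0.9, seed=0):
    """Drive a fixed, random nonlinear dynamical system with the input u.

    Returns one row of reservoir states per input sample. All sizes and
    scalings here are illustrative assumptions, not values from the paper.
    """
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=n_nodes)          # input coupling
    w = rng.uniform(-0.5, 0.5, size=(n_nodes, n_nodes))  # internal coupling
    # Rescale so the largest eigenvalue magnitude equals spectral_radius,
    # which keeps the dynamics from blowing up.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))

    x = np.zeros(n_nodes)
    states = np.empty((len(u), n_nodes))
    for t, u_t in enumerate(u):
        x = np.tanh(w @ x + w_in * u_t)  # nonlinear response to the input
        states[t] = x
    return states

def train_readout(states, target, ridge=1e-6):
    """Fit the linear output weights by ridge regression.

    This is the only 'training' step; the reservoir itself is never modified.
    """
    a = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(a, states.T @ target)

# Toy time-dependent task (an assumption): predict the next sample of a sine wave.
signal = np.sin(0.1 * np.arange(600))
u, y = signal[:-1], signal[1:]

states = run_reservoir(u)
washout = 50  # discard the initial transient before fitting
w_out = train_readout(states[washout:], y[washout:])

pred = states[washout:] @ w_out  # output = linear combination of the states
mse = float(np.mean((pred - y[washout:]) ** 2))
print("training mean squared error:", mse)
```

Because the fit reduces to solving one linear system, training is cheap compared with approaches that adjust the internal weights of a network, which is the property that makes the method attractive when the "network" is a physical device that cannot easily be changed.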

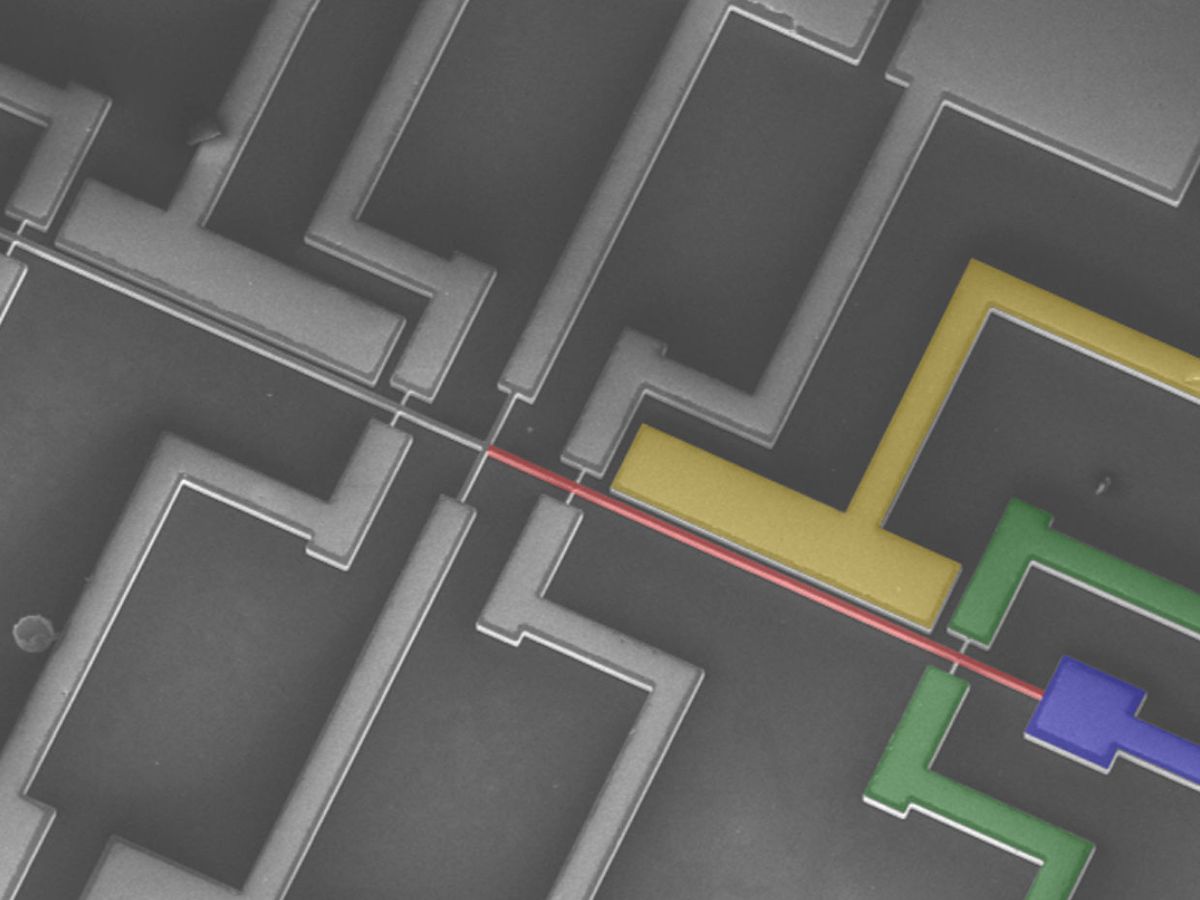

In most reservoir computing systems, the dynamical system is implemented in software. In this work, the dynamical system is the MEMS device itself. To achieve this dynamical system, the device uses the nonlinear dynamics of how a very thin silicon beam oscillates in space. These oscillations create a kind of neural network that transforms the input signal into the higher-dimensional space required for neural network computing.

Sylvestre explained that it’s hard to modify the inner workings of a MEMS device, but it’s not necessary in reservoir computing, which is why they used this approach to do AI in MEMS.

“Our work shows that it’s possible to use the nonlinear resources in MEMS to do AI,” said Sylvestre. “It’s a novel way to build ‘artificially smart’ devices that can be really small and efficient.”

It’s difficult to compare the processing power of this MEMS device with a known quantity, like a desktop computer, according to Sylvestre. “The computer and our MEMS work very differently,” he explained. “While a computer is big and consumes lots of power (tens of watts), our MEMS could fit on the tip of a human hair, and runs on microwatts of power. Still, they can do fancy tricks, like classifying spoken words—a task that would probably use 10 percent [of the resources] of a desktop computer.”

A possible application for this AI-equipped MEMS could be an accelerometer MEMS in which all the data the device is collecting is processed within the device without the need for sending that data back to a computer, according to Sylvestre.

While the researchers have not yet focused on how they would power these MEMS, it’s assumed that the devices’ miserly power use would allow them to run solely on energy harvesters, without the need for batteries. With that in mind, the researchers are looking to apply their AI MEMS approach to applications in sensing and robot control.

Dexter Johnson is a contributing editor at IEEE Spectrum, with a focus on nanotechnology.