For several years now, smartphones have been able to listen nonstop for wake words, like “Hey Siri” and “OK Google,” without excessive battery usage. These wake-up systems run in special, low-power processors embedded within a phone’s larger chipset. They rely on neural networks trained to recognize a broad spectrum of voices, accents, and speech patterns. But they recognize only their wake words; more generalized speech recognition algorithms require the involvement of a phone’s more powerful processors.

Today, Qualcomm announced that the Snapdragon 888 5G, its latest chipset for mobile devices, will incorporate an extra piece of software in the bit of semiconductor real estate that houses the wake word recognition engine. Created by Cambridge, U.K., startup Audio Analytic, the ai3-nano will use the Snapdragon’s low-power AI processor to listen for sounds beyond speech. Depending on the applications made available by smartphone manufacturers, the phones will be able to react to such sounds as a doorbell, water boiling, a baby’s cry, and fingers tapping on a keyboard. The library of some 50 sounds is expected to grow to 150 to 200 in the near future.

The first application available for this sound recognition system will be what Audio Analytic calls Acoustic Scene Recognition AI. Instead of listening for just one sound, the scene recognition technology listens for the characteristics of all the ambient sounds to classify an environment as chaotic, lively, boring, or calm. Audio Analytic CEO and founder Chris Mitchell explains.

“There are two aspects to an environment,” he says, “eventfulness, which refers to how many individual sounds are going on, and how pleasant we find it. Say I went for a run, and there were lots of bird sounds. I would likely find that pleasant, so that would be categorized as ‘lively.’ You could also have an environment with a lot of sounds that are not pleasant. That would be ‘chaotic.’”
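The two-axis scheme Mitchell describes can be sketched in a few lines of Python. This is only an illustration, not Audio Analytic's method: the 0-to-1 scores, the 0.5 threshold, and the function name `classify_scene` are all assumptions. The article confirms only that eventful and pleasant means "lively" and eventful and unpleasant means "chaotic"; placing "calm" and "boring" in the two uneventful quadrants is also an assumption.

```python
from enum import Enum


class Scene(Enum):
    CHAOTIC = "chaotic"
    LIVELY = "lively"
    BORING = "boring"
    CALM = "calm"


def classify_scene(eventfulness: float, pleasantness: float,
                   threshold: float = 0.5) -> Scene:
    """Map two hypothetical 0-to-1 scores onto the four scene labels.

    The scores and the threshold are illustrative assumptions; a real
    system would derive its decision from a trained neural network.
    """
    eventful = eventfulness >= threshold
    pleasant = pleasantness >= threshold
    if eventful:
        # Stated in the article: many sounds, pleasant or not.
        return Scene.LIVELY if pleasant else Scene.CHAOTIC
    # Assumed placement of the two uneventful quadrants.
    return Scene.CALM if pleasant else Scene.BORING


# A run with lots of birdsong: many sounds, generally pleasant.
print(classify_scene(eventfulness=0.9, pleasantness=0.8).value)
```

A hard threshold is the simplest possible choice here; it only makes the four-quadrant structure explicit.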

Mitchell’s team selected those four categories after reviewing studies about perceptions of sound. The team then used the company’s custom-created dataset of 30 million audio recordings to train the neural network.

What a mobile device will do with its newfound awareness of ambient sounds will be up to the manufacturers that use the Qualcomm platform. But Mitchell has a few ideas.

“A train, for example, is boring,” he says. “So you might want to increase the active noise cancellation on your headphones to remove the typical low hum. But when you get off the tube, you want more transparency—so you can hear bike messengers, so noise cancellation should be reduced. On a smartphone you could also adjust notifications based on the type of environment, whether it vibrates or rings, or what sort of ring tone is used.”
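What a manufacturer might build on top of the scene label can be sketched as a simple lookup table. Everything in this example is hypothetical: the `DeviceSettings` fields, the numeric noise-cancellation levels, and the alert modes are invented for illustration, and the article does not say which scene label a busy street would receive. Only the "boring" entry, with stronger noise cancellation on a train, follows directly from Mitchell's example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceSettings:
    noise_cancellation: float  # 0.0 = fully transparent, 1.0 = maximum
    alert_mode: str            # hypothetical values: "ring" or "vibrate"


# Hypothetical policy table; every value except the direction of the
# "boring" entry is an assumption made for this sketch.
POLICY = {
    "boring": DeviceSettings(noise_cancellation=0.9, alert_mode="ring"),
    "calm": DeviceSettings(noise_cancellation=0.5, alert_mode="vibrate"),
    "lively": DeviceSettings(noise_cancellation=0.3, alert_mode="ring"),
    "chaotic": DeviceSettings(noise_cancellation=0.1, alert_mode="vibrate"),
}

# Fallback used when the scene label is missing or unrecognized.
DEFAULT = DeviceSettings(noise_cancellation=0.5, alert_mode="ring")


def settings_for(scene: str) -> DeviceSettings:
    """Return the settings for a scene label, or the default."""
    return POLICY.get(scene, DEFAULT)


# On a train (classified as "boring"), cancel more of the low hum.
print(settings_for("boring"))
```

Keeping the policy in a table rather than in branching code reflects the point that these choices belong to the device maker, not to the recognition engine.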

I first met Mitchell two years ago, when the company was demonstrating prototypes of how its audio analysis technology would work in smart speakers. Since then, Mitchell reports, products using the company’s technology have become available in some 150 countries. Most are security and safety systems that recognize the sound of breaking glass, a smoke alarm, or a baby’s cry.

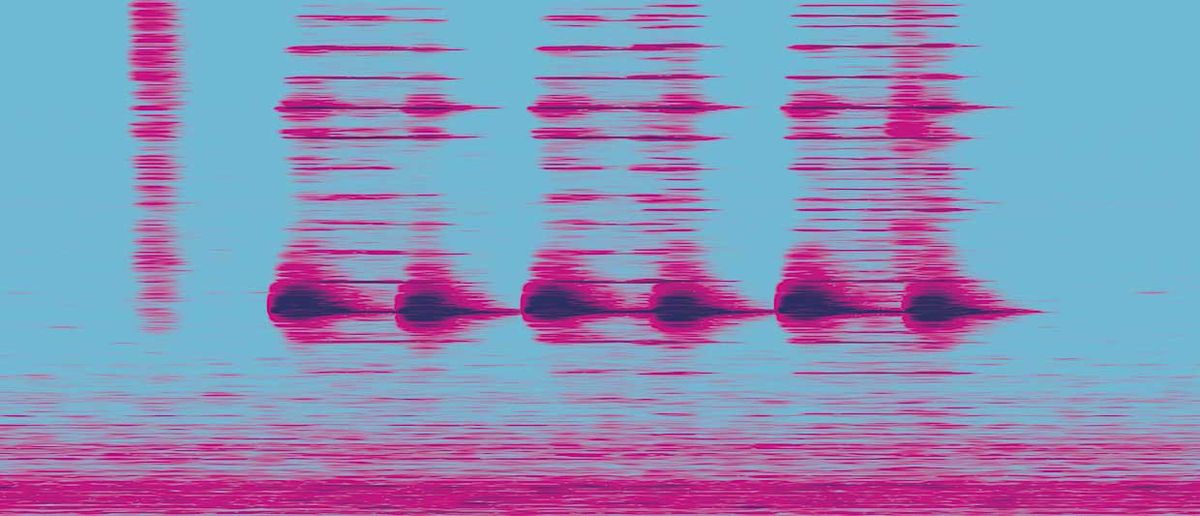

Audio Analytic’s approach, Mitchell explained to me, involves using deep learning to break sounds into standard components. He uses the word “ideophones” to refer to these components. The term also refers to the representation of a sound in speech, like “quack.” Once sounds are coded as ideophones, each can be recognized just as digital assistants’ systems recognize their wake words. This approach allows the ai3-nano engine to take up just 40 KB and run completely on the phone without connecting to a cloud-based processor.

Once the technology is established in smartphones, Mitchell expects its applications will grow beyond security and scene recognition. Early instances, he expects, will include media tagging, games, and accessibility.

For media tagging, he says, the system can search phone-captured video by sound, so a parent, for example, can easily find a clip of a child laughing. Or children could use the technology in a game that has them make the sounds of an animal, say a duck or a pig; when they complete the task, the display could put a virtual costume on them.

As for accessibility, Mitchell sees the technology as a boon to the hard of hearing, who already rely on mobile phones as assistive devices. “This can allow them to detect [and specifically identify] a knock on the door, a dog barking or a smoke alarm,” he says.

After rolling out additional sound recognition capabilities, the company expects to work next on identifying context beyond specific events or scenes. “We have started doing early stage research in that area,” Mitchell says. “So our system can say ‘It sounds like you are making breakfast’ or ‘It sounds like you are getting ready to leave the house.’” Apps could then use that information to arm a security system or adjust lights or heat.

Tekla S. Perry is a senior editor at IEEE Spectrum. Based in Palo Alto, Calif., she's been covering the people, companies, and technology that make Silicon Valley a special place for more than 40 years. An IEEE member, she holds a bachelor's degree in journalism from Michigan State University.