According to the best measures we’ve got, a set of benchmarks called MLPerf, machine-learning systems can be trained nearly twice as quickly as they could last year. It’s a figure that outstrips Moore’s Law, but also one we’ve come to expect. Most of the gain is thanks to software and systems innovations, but this year also gave the first peek at what some new processors, notably from Graphcore and Intel subsidiary Habana Labs, can do.
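Here is "outstrips Moore's Law" in numbers. Suppose Moore's Law means transistor counts double about every two years. Then it alone would deliver roughly a 1.4-fold gain in one year. The sketch below makes that comparison. The two-year doubling period and rounding "nearly twice" up to exactly 2 are our simplifying assumptions, not MLPerf figures.

```python
# Assumption: Moore's Law modeled as a doubling every 2 years.
MOORE_DOUBLING_YEARS = 2.0

def moore_factor(years: float) -> float:
    """Improvement expected from Moore's Law alone over `years` years."""
    return 2.0 ** (years / MOORE_DOUBLING_YEARS)

# "Nearly twice as quickly" as last year, rounded up to 2x for illustration.
observed_speedup = 2.0
expected = moore_factor(1.0)            # about 1.41x after one year
residual = observed_speedup / expected  # what software and systems must supply

print(f"Moore's Law alone: {expected:.2f}x per year")
print(f"Left to explain:   {residual:.2f}x")
```

Under this toy model, roughly half of the yearly doubling (a factor of about 1.4) has to come from something other than transistor scaling.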

The once-crippling time it took to train a neural network to do its task is the problem that launched startups like Cerebras and SambaNova and drove companies like Google to develop machine-learning accelerator chips in house. But the new MLPerf data shows that training time for standard neural networks has gotten a lot less taxing in a short period of time. And that speedup has come from much more than just the advance of Moore’s Law.

Faster training has only encouraged machine-learning experts to dream bigger, so the size of new neural networks continues to outpace computing power.

Called by some “the Olympics of machine learning,” MLPerf consists of eight benchmark tests: image recognition, medical-imaging segmentation, two versions of object detection, speech recognition, natural-language processing, recommendation, and reinforcement learning, which is tested by learning to play the game Go. (One of the object-detection benchmarks was updated for this round to a neural net that is closer to the state of the art.) Computers and software from 21 companies and institutions compete on any or all of the tests. This time around, in the round officially called MLPerf Training 2.0, they collectively submitted 250 results.

Very few commercial and cloud systems were tested on all eight, but Nvidia director of product development for accelerated computing Shar Narasimhan gave an interesting example of why systems should be able to handle such breadth: Imagine a person with a smartphone snapping a photo of a flower and asking the phone: “What kind of flower is this?” It seems like a single request, but answering it would likely involve 10 different machine-learning models, several of which are represented in MLPerf.

To give a taste of the data, for each benchmark we’ve listed the fastest results for commercially available computers and cloud offerings (Microsoft Azure and Google Cloud), grouped by how many machine-learning accelerators (usually GPUs) were involved. Keep in mind that some of these will be a category of one. For instance, there really aren’t many places that can devote thousands of GPUs to a task. Likewise, on some benchmarks systems beat their nearest competitor by a matter of seconds, or five or more entries landed within a few minutes of each other. So if you’re curious about the nuances of AI performance, check out the complete list.


Image classification (ResNet)

Medical imaging segmentation (3D U-net)

Object detection, lightweight (RetinaNet)

Object detection, heavy (Mask R-CNN)

Speech recognition (RNN-T)

Natural language processing (BERT)

Recommender systems (DLRM)

Reinforcement learning (Minigo)

As usual, systems built using Nvidia A100 GPUs dominated the results. Nvidia’s new GPU architecture, Hopper, was designed with features aimed at speeding training, but it was too new for this set of results. Look for systems based on the Hopper H100 in upcoming contests. For Nvidia’s take on the results, see this blog post.

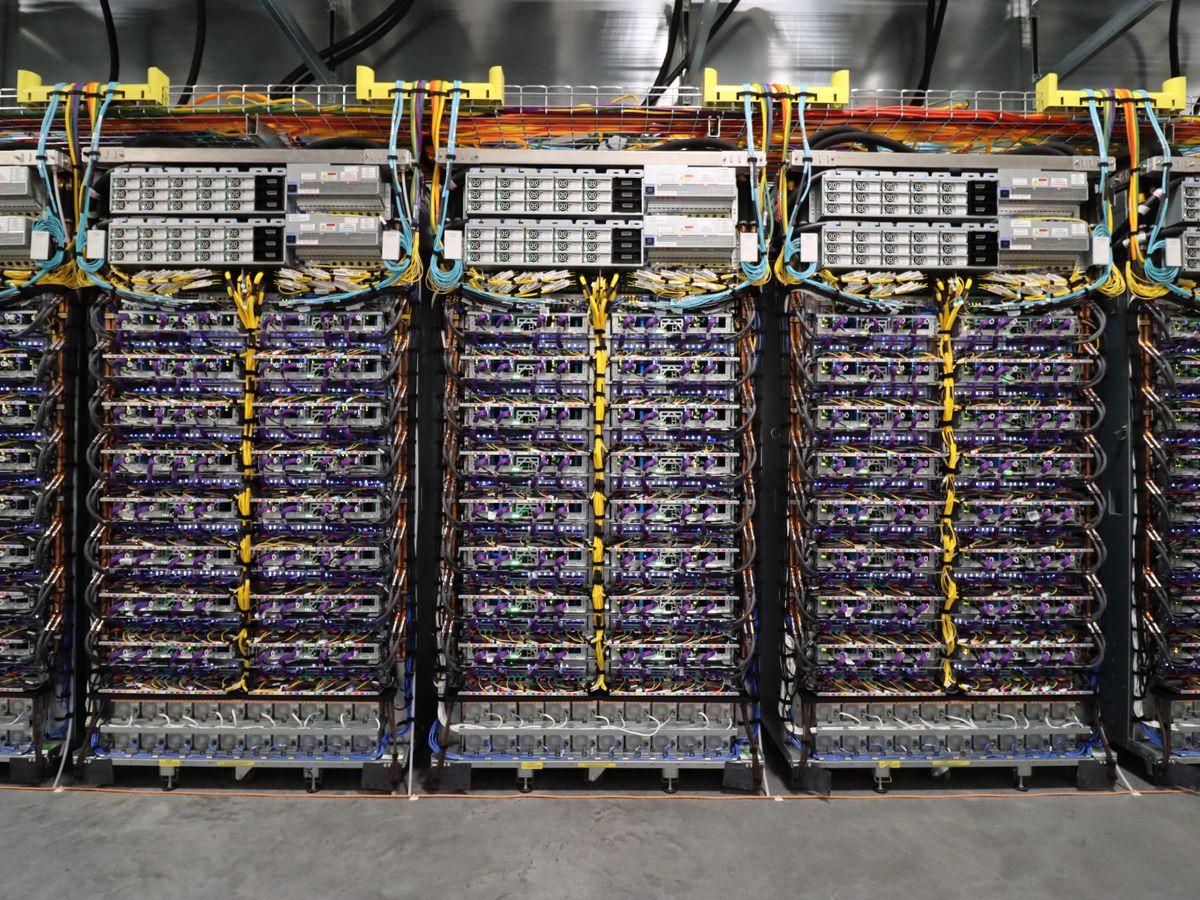

Google’s TPU v4 offers a threefold improvement in computations per watt over its predecessor, the company says. Google noted that two of its tests were done using what it calls a “full TPU v4 pod,” a system of 4,096 chips delivering up to 1.1 billion billion operations per second. At that scale, the system ripped through the image-recognition and natural-language-processing training runs in just over 10 seconds each. Because it’s a cloud service, Google’s machine-learning system is available around the world, but the company wants you to know that the machines are actually located in Oklahoma. Why? Because that’s where it has built a data center that operates on 90 percent carbon-free energy. For Google’s take on its results, see the Google Cloud blog post.
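To put the pod figures in perspective, divide the quoted peak throughput by the chip count. That gives the share each TPU v4 must supply. Multiplying by the run time gives a ceiling on the work done in one training run. Both numbers are simply implied by the article’s figures and are not Google specifications. Treating “just over 10 seconds” as 10 seconds is our rounding.

```python
# Figures from the text: a "full TPU v4 pod" of 4,096 chips delivering
# up to 1.1 billion billion (1.1e18) operations per second at peak.
POD_CHIPS = 4096
POD_PEAK_OPS_PER_S = 1.1e18
RUN_SECONDS = 10  # "just over 10 seconds", approximated as 10

# Peak throughput each chip must contribute for the pod total to add up.
per_chip = POD_PEAK_OPS_PER_S / POD_CHIPS

# Rough ceiling on the work in one run; real jobs never sustain peak.
max_ops_per_run = POD_PEAK_OPS_PER_S * RUN_SECONDS

print(f"Implied peak per chip: {per_chip / 1e12:.0f} trillion ops/s")
print(f"Work ceiling for a ~10 s run: {max_ops_per_run:.1e} operations")
```

That works out to roughly 269 trillion operations per second per chip. Actual training runs use only a fraction of peak, so the real work done is lower than this ceiling.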

Graphcore presented the first performance results from computers built with its new Bow IPU. Bow is the first commercial processor built by stacking two silicon wafers atop each other. In its current iteration, one of the chips in the stack does no computing; instead, it delivers power in a way that lets the chip run up to 40 percent faster than its predecessor while using as much as 16 percent less energy.

MLPerf 2.0 was the first opportunity to see how that translated to real neural nets. The chips didn’t disappoint, showing a 26 to 31 percent speedup for image recognition and a 36 to 37 percent boost at natural-language processing. Graphcore executives say to expect more. The company is planning a future IPU in which both chips in the 3D stack do computing. It would combine such processors into an 8,192-IPU supercomputer called the Good Computer, capable of handling neural networks 1,000 times or more as large as today’s biggest language models.

Graphcore also touted what’s essentially a beneficial nonresult. Neural networks are constructed using frameworks that make the development job much easier. PyTorch and TensorFlow are commonly used open-source frameworks in North America and Europe, but in China Baidu’s PaddlePaddle is popular, according to Graphcore executives. Seeking to satisfy clients and potential customers there, they showed that using PaddlePaddle or Graphcore’s in-house framework PopART makes essentially no difference to training time.

Intel subsidiary Habana Labs’ Gaudi2 put up some winning systems in this set of results, and Habana’s Eitan Medina says to expect a lot more from Gaudi2 in future tests. The company didn’t manage to put all of Gaudi2’s architectural features through their paces for this round. One possibly important feature is the use of low-precision numbers in parts of the training process, similar to what Nvidia’s H100 promises. “With Gaudi2, there’s still lots of performance to squeeze out,” says Medina.
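Low-precision numbers trade accuracy for memory and speed. This is a rough illustration only. We use IEEE half precision (FP16) as a stand-in for the even narrower 8-bit floating-point formats Nvidia’s H100 supports. The sketch rounds a few values through 16-bit floats with Python’s standard `struct` module. The helper name `to_fp16` and the sample values are ours and hypothetical. This is not how Gaudi2 or H100 implement their arithmetic.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a 64-bit Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

# Illustrative values only; not taken from any real model.
for x in (1.0001, 0.1, 3.14159265):
    print(f"{x!r:>12} -> {to_fp16(x)!r}")

# Storage per value: 2 bytes in half precision versus 8 in double.
print(struct.calcsize("<e"), "bytes vs", struct.calcsize("<d"), "bytes")
```

The small change in 1.0001 disappears entirely, and pi keeps only about three significant digits. That is why mixed-precision training typically keeps some quantities, such as master copies of the weights, in higher precision.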

Performing MLPerf benchmarks is no easy task and often involves the work of many engineers. But a single graduate student, with some consultation, can do it, too. Tri Dao was that graduate student. He’s a member of Hazy Research, the nom de guerre of Chris Re’s laboratory at Stanford. (Re is one of the founders of AI giant SambaNova.) Dao, Re, and other colleagues came up with a way to speed up the training of so-called attention-based networks, also called transformer networks.

Among the MLPerf benchmarks, the natural-language-processing network BERT is the only transformer, but the concept of “attention” is at the heart of very large language models such as GPT-3. And it’s starting to show up in other machine-learning applications, such as machine vision.

In attention networks, the length of the sequence of data the network works on is crucial to its accuracy. (Think of it as how many words a natural-language processor is aware of at once, or how large an image a machine-vision system can look at.) However, that length doesn’t scale up well. Double it and you quadruple the size of the network’s attention layer, Dao explains. And this scaling problem shows up in training time because of all the times the network needs to write intermediate results out to memory.
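The quadrupling comes from the attention scores. Every position in a sequence is scored against every other position, so a layer holds a matrix with sequence-length × sequence-length entries per attention head. The sketch below counts the memory for those scores. It assumes 16 heads (the count in BERT-large), 2-byte half-precision values, and a single example. These parameters and the function name are our illustrative choices, not MLPerf settings.

```python
def attention_scores_bytes(seq_len: int, heads: int = 16, bytes_per_elem: int = 2) -> int:
    """Bytes for one layer's seq_len x seq_len score matrices (one example).
    Hypothetical helper; heads and element size are assumed defaults."""
    return heads * seq_len * seq_len * bytes_per_elem

for n in (512, 1024, 2048, 4096):
    mib = attention_scores_bytes(n) / 2**20
    print(f"seq_len={n:5d}: {mib:6.0f} MiB per layer")
```

Each doubling of the sequence length multiplies the memory by four: 8, 32, 128, then 512 MiB per layer in this toy setup. All of that has to be written out and read back.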

Dao and his colleagues came up with an algorithm that makes the training process aware of this time penalty and gives it a way to minimize it. Dao applied the lab’s “flash attention” algorithm to BERT using an 8-GPU system in the Microsoft Azure cloud, shaving almost 2 minutes (about 10 percent) off Microsoft’s best effort. “Chris [Re] calls MLPerf ‘the Olympics of machine-learning performance,’ ” says Dao. And “even on the most competitive benchmark we were able to give a speedup.”
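Flash attention is generally described as computing exact attention in tiles small enough to stay in fast on-chip memory. Partial softmax results are combined along the way, so the full attention matrix is never written out. This pure-Python sketch shows only the arithmetic that makes such tiling exact: the "online softmax" rescaling. It is not Hazy Research’s GPU code. The function names, block size, and sample data are hypothetical and were chosen for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]

def _scores(qi: List[float], keys: Matrix) -> List[float]:
    """Scaled dot-product scores of one query against a list of keys."""
    scale = 1.0 / math.sqrt(len(qi))
    return [scale * sum(a * b for a, b in zip(qi, kj)) for kj in keys]

def naive_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Reference version: materialize each full row of scores, then softmax."""
    out = []
    for qi in q:
        s = _scores(qi, k)
        m = max(s)
        w = [math.exp(x - m) for x in s]
        z = sum(w)
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v)) / z
                    for c in range(len(v[0]))])
    return out

def tiled_attention(q: Matrix, k: Matrix, v: Matrix, block: int = 2) -> Matrix:
    """Same result, but keys/values are visited one block at a time.
    Only a running max, running denominator, and running output are kept
    per query (the "online softmax" trick), never the full score row."""
    out = []
    for qi in q:
        m = -math.inf            # largest score seen so far
        z = 0.0                  # softmax denominator, relative to m
        acc = [0.0] * len(v[0])  # unnormalized weighted sum of values
        for start in range(0, len(k), block):
            s = _scores(qi, k[start:start + block])
            m_new = max(m, max(s))
            rescale = math.exp(m - m_new)  # shift old sums to the new max
            z *= rescale
            acc = [a * rescale for a in acc]
            for sj, vj in zip(s, v[start:start + block]):
                w = math.exp(sj - m_new)
                z += w
                acc = [a + w * x for a, x in zip(acc, vj)]
            m = m_new
        out.append([a / z for a in acc])
    return out

# Tiny made-up example: 2 queries, 5 keys/values, blocks of 2 (last block has 1).
q = [[1.0, 0.0], [0.5, -1.0]]
k = [[1.0, 2.0], [0.0, 1.0], [-1.0, 0.5], [2.0, -0.5], [0.3, 0.3]]
v = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0], [0.5, 0.5]]

ref = naive_attention(q, k, v)
tiled = tiled_attention(q, k, v, block=2)
err = max(abs(a - b) for r, t in zip(ref, tiled) for a, b in zip(r, t))
print(f"max difference: {err:.2e}")
```

The two versions agree to within floating-point rounding. Tiling changes where the work is done, not the answer. On a GPU, the payoff comes from keeping each tile in fast on-chip memory instead of repeatedly writing the full score matrix out and reading it back.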

Look for Re’s group to put flash attention to use in other transformer models soon. For more on the algorithm, see their blog post about it.


Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.