The machine-learning consortium MLCommons released the latest set of benchmark results last week, offering a glimpse at the capabilities of new chips and old as they tackled executing lightweight AI on the tiniest systems and training neural networks at both server and supercomputer scales. The benchmark tests saw the debut of new chips from Intel and Nvidia as well as speed boosts from software improvements and predictions that new software will play a role in speeding the new chips in the years after their debut.

Training Servers

Training AI has been a problem that’s driven billions of dollars in investment, and it seems to be paying off. “A few years ago we were talking about training these networks in days or weeks, now we’re talking about minutes,” says Dave Salvator, director of product marketing at Nvidia.

There are eight benchmarks in the MLPerf training suite, but here I’m showing results from just two—image classification and natural-language processing—because although they don’t give a complete picture, they’re illustrative of what’s happening. Not every company puts up benchmark results every time; in the past, systems from Baidu, Google, Graphcore, and Qualcomm have made marks, but none of these were on the most recent list. And there are companies whose goal is to train the very biggest neural networks, such as Cerebras and SambaNova, that have never participated.

Another note about the results I’m showing: they are incomplete. To keep the eye glazing to a minimum, I’ve listed only the fastest system of each configuration. There are four categories in the main “closed” contest: cloud (self-evident), on-premises (systems you could buy and install in-house right now), preview (systems you can buy soon but not now), and R&D (interesting but odd, so I excluded them). For each category, I then listed the fastest training result for each configuration, meaning the number of accelerators in a computer. If you want to see the complete list, it’s at the MLCommons website.
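The filtering described above amounts to a group-by followed by a minimum. Here is a minimal sketch, assuming each published result is a record with a system name, a category, an accelerator count, and a training time in minutes. The `Result` type and its field names are hypothetical and do not reflect the format MLCommons actually publishes.

```python
from typing import Dict, Iterable, NamedTuple, Tuple


class Result(NamedTuple):
    """One training result (hypothetical layout, not the MLCommons format)."""
    system: str
    category: str       # "cloud", "on-premises", "preview", or "R&D"
    accelerators: int   # the "configuration": accelerators in the computer
    minutes: float      # time to train


def fastest_per_configuration(
    results: Iterable[Result],
) -> Dict[Tuple[str, int], Result]:
    """Keep only the fastest result for each (category, accelerator count),
    skipping the R&D category as the tables here do."""
    best: Dict[Tuple[str, int], Result] = {}
    for r in results:
        if r.category == "R&D":
            continue
        key = (r.category, r.accelerators)
        # Replace the stored entry only if this run trained faster.
        if key not in best or r.minutes < best[key].minutes:
            best[key] = r
    return best
```

Keying on the (category, accelerator count) pair rather than on system name is what lets a single vendor appear several times, once per machine size.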

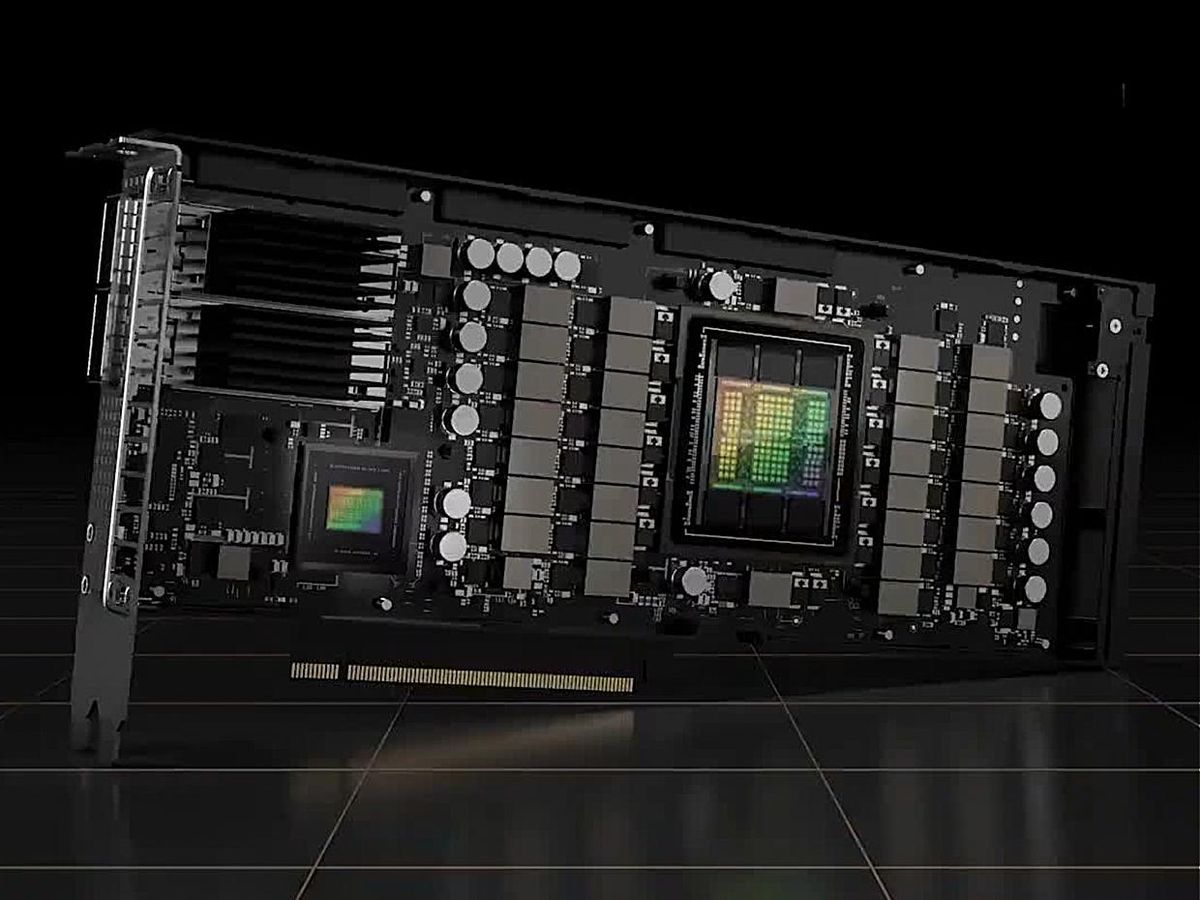

A casual glance shows that machine-learning training is still very much Nvidia’s house. It can bring a supercomputer-scale number of GPUs to the party to smash through training problems in mere seconds. Its A100 GPUs have dominated the MLPerf list for several iterations now, and they power Microsoft’s Azure cloud AI offerings as well as systems large and small from partners including Dell, HPE, and Fujitsu. But even among the A100 gang there’s real competition, particularly between Dell and HPE.

But perhaps more important was Azure’s standing. On image classification, the cloud systems were essentially a match for the best A100 on-premises computers. The results strengthen Microsoft’s case that renting resources in the cloud is as good as buying your own. And that case might be even stronger soon. This week Nvidia and Microsoft announced a multiyear collaboration that will bring Nvidia’s upcoming GPU, the H100, to the Azure cloud.

This was the first peek at training abilities for the H100. And Nvidia’s Dave Salvator emphasized how much progress happens in the years after a new chip comes out, largely due to software improvements. On a per-chip basis, the A100 delivers 2.5 times the average performance today versus its first run at the MLPerf benchmarks in 2020. Compared with the A100’s debut scores, the H100 delivered 6.7 times the speed. But compared with the A100 running today’s software, the gain is only 2.6-fold.
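Because both of Nvidia’s speedup figures are measured against the same baseline (the A100’s 2020 debut scores), dividing one by the other should give the H100’s advantage over today’s A100. A quick check, using only the two averages quoted above:

```python
# Per-chip speedups quoted by Nvidia, both relative to the A100's 2020 MLPerf debut.
a100_today_vs_debut = 2.5
h100_vs_a100_debut = 6.7

# With a shared baseline, the ratios divide: H100 versus today's A100.
h100_vs_a100_today = h100_vs_a100_debut / a100_today_vs_debut
print(f"H100 vs. today's A100: {h100_vs_a100_today:.2f}x")
```

The quotient comes out near 2.7, slightly above the 2.6-fold figure Nvidia gave. The small gap presumably reflects rounding in the quoted averages and the way performance was averaged across benchmarks; the check shows only that the three numbers hang together, not how Nvidia computed them.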

In a way, H100 seems a bit overpowered for the MLPerf benchmarks, tearing through most of them in minutes using a fraction of the A100 hardware needed to match it. And in truth, it is meant for bigger things. “H100 is our solution for the most advanced models where we get into the millions, even billions of hyperparameters,” says Salvator.

Salvator says a lot of the gain is from the H100’s “transformer engine.” Essentially, it’s the intelligent use of low-precision computations, which are efficient but less accurate, whenever possible. The scheme is designed specifically for neural networks called transformers, of which the natural-language-processing benchmark BERT is an example. Transformers are in the works for many other machine-learning tasks as well. “Transformer-based networks have been literally transformative to AI,” says Salvator. “It’s a horrible pun.”
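The efficiency-versus-accuracy trade-off behind that idea can be seen in miniature with NumPy. This is a toy, not Nvidia’s mechanism, which works with 8-bit floating-point formats in hardware. It assumes float16 as the “cheap” format and float32 as the fallback, and it picks the lowest precision whose matrix-multiply error stays under a chosen tolerance. The function names and tolerances are illustrative assumptions.

```python
import numpy as np


def matmul_error(a: np.ndarray, b: np.ndarray, dtype) -> float:
    """Relative error of a @ b computed in `dtype`, versus a float64 reference.

    The reference is computed only to make the error visible; a real
    system would not run the full-precision product just to check.
    """
    reference = a.astype(np.float64) @ b.astype(np.float64)
    low = (a.astype(dtype) @ b.astype(dtype)).astype(np.float64)
    return float(np.linalg.norm(low - reference) / np.linalg.norm(reference))


def pick_precision(a, b, tolerance, candidates=(np.float16, np.float32)):
    """Return the cheapest candidate dtype whose error is within `tolerance`."""
    for dtype in candidates:  # ordered from cheapest to most precise
        if matmul_error(a, b, dtype) <= tolerance:
            return dtype
    return np.float64  # fall back to full precision


rng = np.random.default_rng(0)
a = rng.standard_normal((64, 64))
b = rng.standard_normal((64, 64))
print(pick_precision(a, b, tolerance=1e-2))  # loose tolerance
print(pick_precision(a, b, tolerance=1e-5))  # tight tolerance
```

With the loose tolerance the toy settles on float16; tighten it and it falls back to float32, trading speed for accuracy.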

Memory is a bottleneck for all sorts of AI, but it’s particularly limiting in BERT and other transformer models. Such neural networks rely on a quality called “attention.” You can think of it as how many words a language processor is aware of at once. It doesn’t scale up well: standard attention computes a score for every pair of words and writes those scores out to memory, so memory traffic grows with the square of the number of words in view. Earlier this year Hazy Research (the name for Chris Ré’s lab at Stanford) deployed an algorithm to an Azure cloud system that shaved 10 percent off the training time of Microsoft’s best effort. For this round, Azure and Hazy Research worked together to demonstrate the algorithm, called FlashAttention.
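The memory problem, and the core trick of Hazy Research’s algorithm, can be sketched in a few lines. Standard attention builds an N-by-N matrix of scores, one for every pair of words, before normalizing it. The tiled version below processes the keys in blocks and keeps a running (“online”) softmax, so only an N-by-block slice of scores exists at any moment, yet the output is the same. This is a simplified single-head NumPy illustration: the block size and function names are assumptions, and it is not the lab’s GPU kernel, which also tiles the queries and keeps each tile in fast on-chip memory.

```python
import numpy as np


def naive_attention(q, k, v):
    """Standard attention: materializes the full N x N score matrix."""
    scores = q @ k.T / np.sqrt(q.shape[1])            # N x N
    scores -= scores.max(axis=1, keepdims=True)       # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v


def tiled_attention(q, k, v, block=16):
    """Same result, but keys/values are visited in blocks with a running
    softmax, so at most an N x block slice of scores exists at once."""
    n, d = q.shape
    out = np.zeros((n, v.shape[1]))
    row_max = np.full((n, 1), -np.inf)  # running max score per query
    row_sum = np.zeros((n, 1))          # running softmax denominator
    for start in range(0, k.shape[0], block):
        kb, vb = k[start:start + block], v[start:start + block]
        s = q @ kb.T / np.sqrt(d)                              # N x block
        new_max = np.maximum(row_max, s.max(axis=1, keepdims=True))
        scale = np.exp(row_max - new_max)  # rescale earlier partial sums
        p = np.exp(s - new_max)
        row_sum = row_sum * scale + p.sum(axis=1, keepdims=True)
        out = out * scale + p @ vb
        row_max = new_max
    return out / row_sum


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((128, 32)) for _ in range(3))
print(np.allclose(naive_attention(q, k, v), tiled_attention(q, k, v)))  # True
```

Rescaling the earlier partial sums whenever a larger score appears is what makes block-by-block processing exact rather than an approximation.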

Both the image-classification and natural-language-processing tables show Intel’s competitive position. The company showed results for the Habana Gaudi2, its second-generation AI accelerator, and the Sapphire Rapids Xeon CPU, which will be commercially available in the coming months. For the latter, the company was out to prove that you can do a lot of machine-learning training without a GPU.

A setup with 32 CPUs landed well behind a Microsoft Azure cloud-based system with only four GPUs on image classification, but it still finished in less than an hour and a half, and on natural-language processing it nearly matched that Azure system. In fact, none of the CPU-only training runs took longer than 90 minutes, even on much more modest computers.

“This is for customers for whom training is part of the workload, but it’s not the workload,” says Jordan Plawner, an Intel senior director and AI product manager. Intel’s reasoning is that if a customer retrains only once a week, the difference between 30 minutes and 5 minutes matters too little to justify buying a GPU accelerator that would sit idle for the rest of the week.
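Intel’s argument is easy to put in numbers. Here is a back-of-the-envelope sketch using the 30-minute and 5-minute figures from Intel’s example and assuming a single retraining run per week. The function name is illustrative.

```python
MINUTES_PER_WEEK = 7 * 24 * 60  # 10,080


def weekly_busy_fraction(job_minutes: float, runs_per_week: int = 1) -> float:
    """Fraction of a week an accelerator bought only for this job is busy."""
    return job_minutes * runs_per_week / MINUTES_PER_WEEK


cpu_minutes, gpu_minutes = 30, 5  # the two durations in Intel's example
print(f"Time saved per week: {cpu_minutes - gpu_minutes} minutes")
print(f"GPU busy {weekly_busy_fraction(gpu_minutes):.2%} of the week")
```

A GPU bought only for that job would be busy about 0.05 percent of the week, in exchange for 25 minutes of saved wall-clock time.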

Habana Gaudi2 is a different story. As the company’s dedicated machine-learning accelerator, the 7-nanometer chip goes up against Nvidia’s A100 (another 7-nm chip) and soon will face the 5-nm H100. In that light, it performed well on certain tests. On image classification, an eight-chip Gaudi2 system landed only a couple of minutes behind an eight-chip H100 system. The gap with the H100 was much wider on the natural-language-processing task, though Gaudi2 still narrowly bested an equal-size A100 system enhanced by Hazy Research.

“We’re not done with Gaudi 2,” says Habana’s Eitan Medina. Like others, Habana hopes to speed up training by strategically using low-precision computations on certain layers of neural networks. The chip has 8-bit floating-point capabilities, but so far the lowest precision the company has used on the chip for MLPerf training is bfloat16.
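bfloat16 keeps float32’s 8-bit exponent, and therefore its range, but only 7 explicit mantissa bits, so it is essentially the top half of a float32. Below is a minimal Python sketch of rounding a value to bfloat16 with round-to-nearest-even. It is a generic illustration of the format, not anything Habana-specific, and it assumes finite inputs within float32 range (NaN is not handled).

```python
import struct


def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value and return it as a Python float.

    bfloat16 is the upper 16 bits of an IEEE float32 (1 sign bit, 8 exponent
    bits, 7 mantissa bits). Assumes x is finite and within float32 range.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # float32 bit pattern
    # Round to nearest, ties to even, on the 16 low bits about to be dropped.
    bits += 0x7FFF + ((bits >> 16) & 1)
    bits &= 0xFFFF0000                                   # keep the top 16 bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


for value in (1.0, 3.14159265, 1e30):
    print(value, "->", to_bfloat16(value))
```

Pi comes out as 3.140625, good to only about three significant digits, while 1e30, far beyond float16’s range, survives with well under 1 percent error.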

Training Supercomputers

MLCommons released results for AI training on high-performance computers (supercomputers and other big systems) at the same time as those for training servers. The HPC benchmarks are not as established and have fewer participants, but they still give a snapshot of how machine learning is done in the supercomputing space and what the goals are. There are three benchmarks: CosmoFlow estimates physical quantities from cosmological image data; DeepCAM spots hurricanes and atmospheric rivers in climate simulation data; and OpenCatalyst predicts the energy levels of molecular configurations.

There are two ways to measure systems on these benchmarks. One is to run many instances of the same neural network on the supercomputer at once; the other is to throw a bunch of resources at a single instance of the problem and see how long it takes. (MLPerf HPC calls these weak scaling and strong scaling, respectively.) The table below shows the latter, and only for CosmoFlow, because it’s much simpler to read. (Again, feel free to view the whole schemozzle at MLCommons.)
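The two measurements reduce to different arithmetic on the same kind of timing data: one rewards raw speed on a single problem, the other rewards total output. Here is a minimal sketch, assuming each training run is recorded as a (start, end) pair in minutes. The function names are hypothetical, the timings in the example are made up, and the many-instance figure here is simply models completed per minute from the first start to the last finish, which may differ in detail from MLCommons’ official rules.

```python
from typing import Sequence, Tuple

Run = Tuple[float, float]  # (start, end) of one training run, in minutes


def single_instance_minutes(run: Run) -> float:
    """Time to train when one model instance gets the whole machine."""
    start, end = run
    return end - start


def many_instance_throughput(runs: Sequence[Run]) -> float:
    """Models trained per minute when many instances share the machine,
    timed from the first start to the last finish."""
    first_start = min(start for start, _ in runs)
    last_end = max(end for _, end in runs)
    return len(runs) / (last_end - first_start)


# Made-up timings, for illustration only.
print(single_instance_minutes((0.0, 12.5)))
print(many_instance_throughput([(0.0, 30.0), (0.0, 28.0), (1.0, 30.0)]))
```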

The CosmoFlow results show four supercomputers powered by four different CPU architectures and two types of GPU. Three of the four were accelerated by Nvidia GPUs, but Fugaku, the second-most-powerful computer in the world, used only its own custom-built processor, the Fujitsu A64FX.

The MLPerf HPC benchmarks came out only the week before Supercomputing 2022, in Dallas, one of the two conferences at which new Top500 rankings of supercomputers are announced.

A separate benchmark for supercomputing AI, HPL-MxP, has also been developed. Instead of training particular neural networks, it solves “a system of linear equations using novel, mixed-precision algorithms that exploit modern hardware.” Although results from the two benchmarks don’t line up, several machines appear both on the HPL-MxP list and in the CosmoFlow results, including Nvidia’s Selene, Riken’s Fugaku, and Germany’s JUWELS.
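The idea behind mixed-precision linear solvers is to do the expensive work in low precision and then cheaply correct the answer in high precision. Below is a minimal NumPy sketch of classic iterative refinement, assuming float32 as the low precision and float64 as the target. HPL-MxP allows lower precisions and more elaborate refinement schemes, so treat this as an illustration of the principle rather than the benchmark’s algorithm.

```python
import numpy as np


def mixed_precision_solve(a: np.ndarray, b: np.ndarray, iterations: int = 5) -> np.ndarray:
    """Solve a @ x = b: solves run in float32, residuals are computed in float64.

    For brevity each pass calls np.linalg.solve on the float32 matrix again;
    a real implementation would factor the matrix once and reuse the factors.
    """
    a32 = a.astype(np.float32)
    x = np.linalg.solve(a32, b.astype(np.float32)).astype(np.float64)
    for _ in range(iterations):
        residual = b - a @ x  # computed in float64
        correction = np.linalg.solve(a32, residual.astype(np.float32))
        x += correction.astype(np.float64)
    return x


rng = np.random.default_rng(0)
n = 200
a = rng.standard_normal((n, n)) + n * np.eye(n)  # well-conditioned test matrix
x_true = rng.standard_normal(n)
b = a @ x_true

x32 = np.linalg.solve(a.astype(np.float32), b.astype(np.float32))
x = mixed_precision_solve(a, b)
print("float32 only:", np.linalg.norm(x32 - x_true) / np.linalg.norm(x_true))
print("refined:     ", np.linalg.norm(x - x_true) / np.linalg.norm(x_true))
```

A few cheap refinement passes take the error from single-precision levels down to roughly double-precision levels, which is why low-precision hardware can still produce 64-bit-quality answers.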

Tiny ML Systems

The latest addition to the MLPerf effort is a suite of benchmarks designed to test the speed and energy efficiency of microcontrollers and other small chips that run neural networks for low-power, always-on tasks such as spotting keywords. MLPerf Tiny, as it’s called, is too new for real trends to have emerged in the data. But the results released so far show a couple of standouts. The table here shows the fastest “visual wakewords” results for each type of processor, and it reveals that Syntiant and GreenWaves Technologies have an edge over the competition.
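Speed and energy efficiency are related but distinct: energy per inference is average power multiplied by latency, so a faster chip is not automatically the more frugal one. Here is a minimal sketch of the unit arithmetic (milliwatts times milliseconds gives microjoules). The two chips and their numbers are invented for illustration and are not MLPerf Tiny results.

```python
def energy_per_inference_uj(avg_power_mw: float, latency_ms: float) -> float:
    """Energy for one inference in microjoules.

    1 mW x 1 ms = 1e-3 W x 1e-3 s = 1e-6 J = 1 microjoule.
    """
    return avg_power_mw * latency_ms


# Hypothetical chips, NOT benchmark data: one fast but power-hungry, one slow but frugal.
fast_chip = energy_per_inference_uj(avg_power_mw=50.0, latency_ms=2.0)
slow_chip = energy_per_inference_uj(avg_power_mw=5.0, latency_ms=10.0)
print(f"fast chip: {fast_chip:.0f} uJ, slow chip: {slow_chip:.0f} uJ")
```

In this made-up case the slower chip uses half the energy per inference, which is why tiny, always-on devices are judged on both numbers.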

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.