Whether it’s designing microchips or dreaming up new proteins, sometimes it seems like neural networks can do anything. Infamously, however, these brain-inspired artificial intelligence (AI) systems work in mysterious ways, raising concerns that what they are doing might not make any sense.

New research suggests that 200-year-old math could help shed light on how neural networks perform complex tasks such as predicting climate or modeling turbulence. This in turn could help boost the accuracy of neural networks and the speed at which they learn, researchers say.

In artificial neural networks, components known as neurons (so called because, like neurons in the human brain, they are the basic building blocks of their system) are fed data and cooperate to solve a problem, such as recognizing faces. Neural nets are dubbed “deep” if they possess multiple layers of these neurons.

The way in which neural networks reach conclusions has long been considered a mysterious black box; that is, a network cannot explain how it arrived at a given conclusion. Although researchers have developed ways to examine the inner workings of neural networks, these methods have often shown little success with the networks used in many science and engineering applications, says study senior author Pedram Hassanzadeh, a fluid dynamicist at Rice University, in Houston.

To analyze a neural network designed to model physical systems, Hassanzadeh and his colleagues experimented with a math technique often employed in physics. The method, known as Fourier analysis, breaks data down into a sum of sine waves of different frequencies, and is used to identify regular patterns in data across space and time.

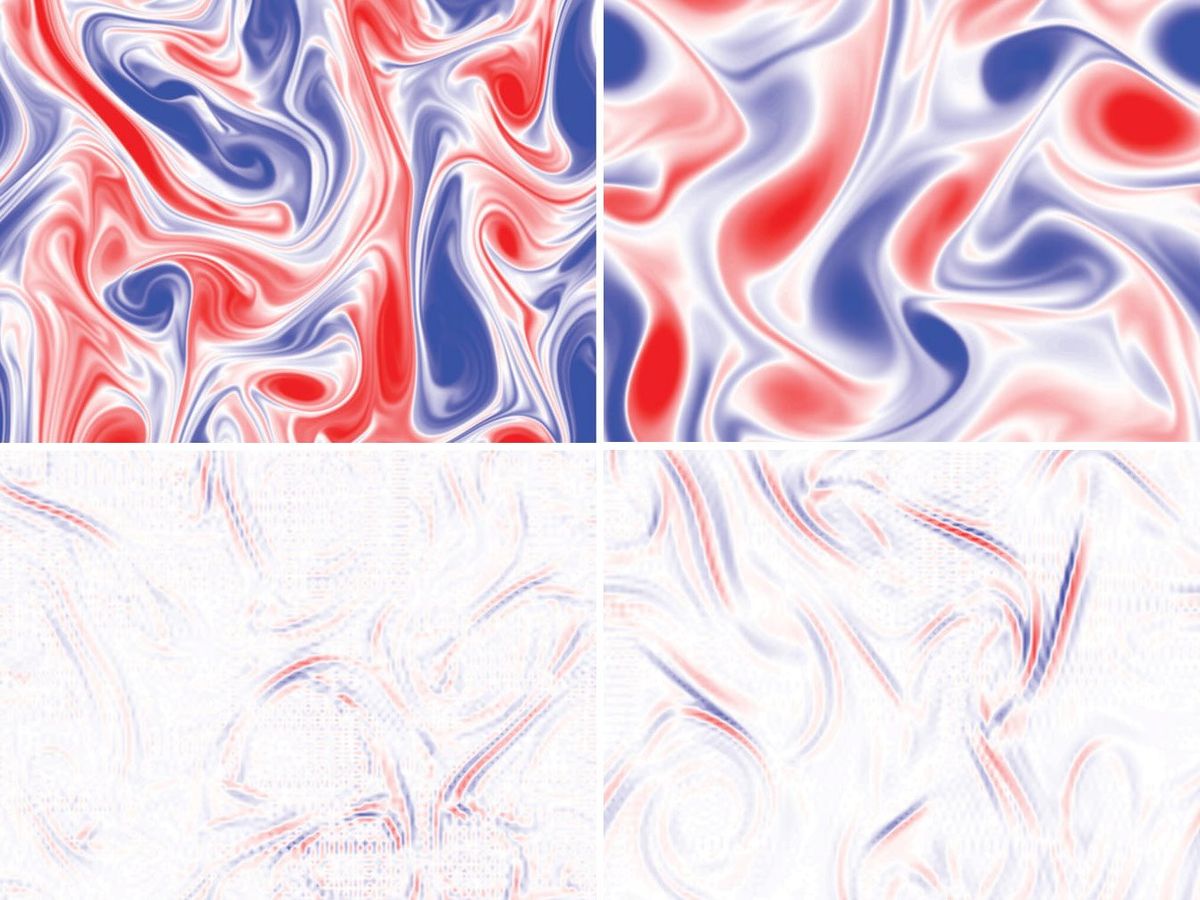

In the new study, the researchers experimented on a deep neural network trained to analyze the kind of complex turbulence seen in air in the atmosphere or water in the ocean, and to predict how those flows might change over time. A better understanding of the concepts that neural networks have learned in order to analyze these complex systems could help lead to more accurate models that need less data for training, Hassanzadeh says.

The scientists performed a Fourier analysis on the deep neural network’s governing equations. Each of the model’s roughly 1 million parameters (the connections between the neurons) acts like a multiplier that adjusts specific operations in these equations during the model’s calculations. These parameters were grouped into about 40,000 five-by-five matrices called kernels.
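The article does not detail the study’s exact computation. As a minimal sketch, assuming each trained kernel is simply a 5x5 grid of weights, its frequency response can be read off by zero-padding the kernel to a larger grid and taking the magnitude of its 2D discrete Fourier transform. The function name `kernel_spectrum`, the padding size `n=16`, and the two test kernels are illustrative choices, not values from the study. Plain Python keeps the example dependency-free; with NumPy, `numpy.abs(numpy.fft.fft2(kernel, s=(16, 16)))` computes the same quantity.

```python
import cmath
import math


def kernel_spectrum(kernel, n=16):
    """Magnitude of the 2D discrete Fourier transform of a small kernel,
    zero-padded to an n x n grid. Entry [u][v] is the kernel's gain at
    wavenumbers (u, v); [0][0] is the gain for a uniform (constant) field."""
    kh, kw = len(kernel), len(kernel[0])
    spec = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0j
            for a in range(kh):
                for b in range(kw):
                    # Same sign convention as numpy.fft.fft2.
                    s += kernel[a][b] * cmath.exp(-2j * math.pi * (u * a + v * b) / n)
            spec[u][v] = abs(s)
    return spec


# Illustrative kernels (hypothetical, not taken from the study).
box = [[1 / 25] * 5 for _ in range(5)]  # 5x5 moving average: a smoothing kernel
laplacian = [[0.0] * 5 for _ in range(5)]  # 5-point discrete Laplacian, centered
laplacian[2][2] = -4.0
for a, b in [(1, 2), (3, 2), (2, 1), (2, 3)]:
    laplacian[a][b] = 1.0

box_spec = kernel_spectrum(box)
lap_spec = kernel_spectrum(laplacian)
print(round(box_spec[0][0], 3), round(box_spec[8][8], 3))  # 1.0 0.04
print(round(lap_spec[0][0], 3), round(lap_spec[8][8], 3))  # 0.0 8.0
```

The moving average passes the uniform component untouched and strongly damps the finest checkerboard pattern, while the Laplacian does the reverse. Signatures like these in a kernel’s spectrum are what distinguish low-pass from high-pass behavior.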

The parameters of untrained neural networks generally have random values. During training, a neural network’s parameters are modified and honed as it gradually learns to calculate solutions that are closer and closer to the known outcomes in training cases. Researchers can then use a fully trained neural network to analyze data it has not previously seen.

Since Fourier analysis first emerged roughly 200 years ago, researchers have also developed other tools to analyze patterns in data, such as low-pass filters that smooth out rapid, small-scale fluctuations such as noise, high-pass filters that strip away slowly varying background signals to highlight fine-scale detail, and Gabor filters, which are often used in image processing. The Fourier analysis of the kernels revealed that the neural network’s parameters were behaving like a combination of low-pass, high-pass, and Gabor filters.
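The article does not say how each kernel was labeled. One simple heuristic, assumed here purely for illustration, is to look at where a filter’s gain peaks: at zero frequency (low-pass), at the highest frequency the grid can represent (high-pass), or somewhere in between (band-pass, which is how a Gabor filter, a Gaussian envelope multiplied by a cosine, behaves). To keep the sketch short it uses 1D five-tap filters, whereas the study worked with 2D 5x5 kernels; the function names, `sigma`, and the cosine frequency are hypothetical choices.

```python
import cmath
import math


def frequency_response(taps, n=32):
    """Gain |H(k)| of a 1D filter at discrete wavenumbers k = 0 .. n/2."""
    return [
        abs(sum(t * cmath.exp(-2j * math.pi * k * m / n) for m, t in enumerate(taps)))
        for k in range(n // 2 + 1)
    ]


def classify(taps, n=32):
    """Label a filter by where its gain peaks (illustrative rule, not the study's)."""
    gains = frequency_response(taps, n)
    peak = max(range(len(gains)), key=gains.__getitem__)
    if peak == 0:
        return "low-pass"
    if peak == len(gains) - 1:
        return "high-pass"
    return "band-pass"


def gaussian(sigma=1.0, size=5):
    """Unnormalized Gaussian window centered in `size` taps."""
    c = size // 2
    return [math.exp(-((m - c) ** 2) / (2 * sigma**2)) for m in range(size)]


g = gaussian()
low = [v / sum(g) for v in g]  # smoothing kernel, gain 1 at zero frequency
high = [w / 16 for w in (1, -4, 6, -4, 1)]  # fourth difference, zero gain at zero frequency
gabor = [v * math.cos(math.pi / 2 * (m - 2)) for m, v in enumerate(g)]  # Gaussian x cosine

for name, taps in [("Gaussian", low), ("fourth difference", high), ("Gabor", gabor)]:
    print(name, classify(taps))
# Expected labels: low-pass, high-pass, band-pass
```

Real trained kernels rarely fall cleanly into one bin, which is consistent with the study’s finding that they act like combinations of these filter types; a fuller analysis would look at the whole spectrum rather than a single peak.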

“For years, we heard that neural networks are black boxes and there are too many parameters to understand and analyze. And sure, when we just looked at some of these parameters, they did not make much sense, and they all looked different,” Hassanzadeh says. However, after Fourier analysis of all these kernels, he says, “we realized they are these spectral filters.”

Scientists have for years tried combining these filters to analyze climate and turbulence. However, these combinations often did not prove successful in modeling these complex systems. Neural networks learned ways to combine these filters correctly, Hassanzadeh says.

“Many groups of climate scientists and machine-learning scientists are working together in the United States and around the world to develop neural-network-enhanced climate models—that is, hybrid models that use traditional partial-differential-equation solvers and neural networks together to do faster, better climate projections,” Hassanzadeh explains. Fourier analysis may help scientists design better neural networks for such goals and help them better understand the underlying physics of climate and turbulence, he adds.

Beyond models of climate and turbulence, Fourier analysis may help investigate neural networks designed to analyze a wide range of other complex systems, Hassanzadeh says. These include “combustion inside a jet engine, flow in a wind farm, many materials, the atmosphere of Jupiter and other planets, plasma, convection in the interior of the sun and Earth, and so on,” he says. The researchers have developed a general framework to help apply this approach “to any physical system and for any neural network architecture,” Hassanzadeh says.

In addition, this approach may potentially help analyze “neural networks for image classification or in neuroscience,” Hassanzadeh adds. However, he says, “to what degree our work sheds light on those fields remains to be studied.”

A major concern when it comes to neural networks is how generalizable they are—whether they can analyze systems different from the ones on which they were trained. One approach used to help neural networks extrapolate from one system to another is known as transfer learning. This method focuses on retraining a small number of key neurons in a neural network to help it analyze other systems. These new findings may identify the best neurons to retrain.

Specifically, the conventional wisdom in transfer learning is that it is better to retrain the neurons in the deepest layers closest to the output the model produces, Hassanzadeh says. However, the new study suggests that when it comes to complex systems that involve patterns in data across time and space, retraining the shallowest layers close to the input the model receives may result in better performance, while retraining the deepest layers may prove completely ineffective, he says.
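The article describes transfer learning only at a high level. As a minimal sketch, assuming a hypothetical toy network built from a stack of 1D convolution layers (the `ConvLayer` class, the kernel values, and the sine-wave “new system” data are all illustrative inventions), the code freezes every layer except the ones chosen for retraining and takes gradient steps only on those. Gradients are estimated by finite differences to keep the example dependency-free; a real framework would use automatic differentiation, and in PyTorch, for instance, frozen parameters are typically excluded by setting their `requires_grad` attribute to `False`.

```python
import math
from dataclasses import dataclass


@dataclass
class ConvLayer:
    kernel: list  # 1D convolution weights of a hypothetical toy layer
    trainable: bool = True


def conv1d_same(x, k):
    """Same-length 1D correlation of signal x with kernel k, zero-padded."""
    r = len(k) // 2
    out = []
    for i in range(len(x)):
        s = 0.0
        for j, kj in enumerate(k):
            idx = i + j - r
            if 0 <= idx < len(x):
                s += kj * x[idx]
        out.append(s)
    return out


def forward(layers, x):
    # layers[0] is the shallowest layer (closest to the input).
    for layer in layers:
        x = conv1d_same(x, layer.kernel)
    return x


def mse(layers, x, y):
    pred = forward(layers, x)
    return sum((p - t) ** 2 for p, t in zip(pred, y)) / len(y)


def freeze_all_but(layers, retrain):
    """Mark only the layers whose indices are in `retrain` as trainable."""
    for i, layer in enumerate(layers):
        layer.trainable = i in retrain


def train_step(layers, x, y, lr=0.05, eps=1e-6):
    """One gradient-descent step on trainable layers; frozen layers are untouched.
    All gradients are estimated first, then applied, so they share one baseline."""
    base = mse(layers, x, y)
    updates = []
    for layer in layers:
        if not layer.trainable:
            continue
        for j in range(len(layer.kernel)):
            old = layer.kernel[j]
            layer.kernel[j] = old + eps
            updates.append((layer, j, (mse(layers, x, y) - base) / eps))
            layer.kernel[j] = old
    for layer, j, grad in updates:
        layer.kernel[j] -= lr * grad
    return base


# Usage: adapt a "pretrained" stack to a new, phase-shifted signal by
# retraining only the shallowest layer.
layers = [ConvLayer([0.1, 0.8, 0.1]), ConvLayer([0.0, 1.0, 0.0]), ConvLayer([0.2, 0.6, 0.2])]
freeze_all_but(layers, retrain={0})
deep_before = [layer.kernel[:] for layer in layers[1:]]
x = [math.sin(0.3 * i) for i in range(32)]
y = [math.sin(0.3 * i + 0.5) for i in range(32)]
losses = [train_step(layers, x, y) for _ in range(50)]
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Passing `retrain={len(layers) - 1}` instead gives the conventional choice of retraining the deepest layer. Which option works better for a given physical system is an empirical question that this toy does not settle.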

All in all, “as much as neural networks are called black boxes, we can actually take them apart and try to understand what they do, and connect their inner workings with the physics and math we know about physical systems,” Hassanzadeh says. “There is a major need for this in scientific machine learning.”

In the future, the researchers aim to identify how neural networks learn to combine filters in order to reach the best results, Hassanzadeh says. He and his colleagues detailed their findings 23 January in the journal PNAS Nexus.


Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.