A brain-imitating neural network that employs photons instead of electrons could rapidly analyze vast amounts of data by running many computations simultaneously using thousands of wavelengths of light, a new study finds.

Artificial neural networks are increasingly finding use in applications such as analyzing medical scans and supporting autonomous vehicles. In these artificial-intelligence systems, components (also known as neurons) are fed data and cooperate to solve a problem, such as recognizing faces. A neural network is dubbed “deep” if it possesses multiple layers of neurons.

As neural networks grow in size and power, they are becoming more energy hungry when run on conventional electronics. That is why some scientists have been investigating optical computing as a promising next-generation AI medium. This approach uses light instead of electricity to perform computations more quickly and with less power than its electronic counterparts.

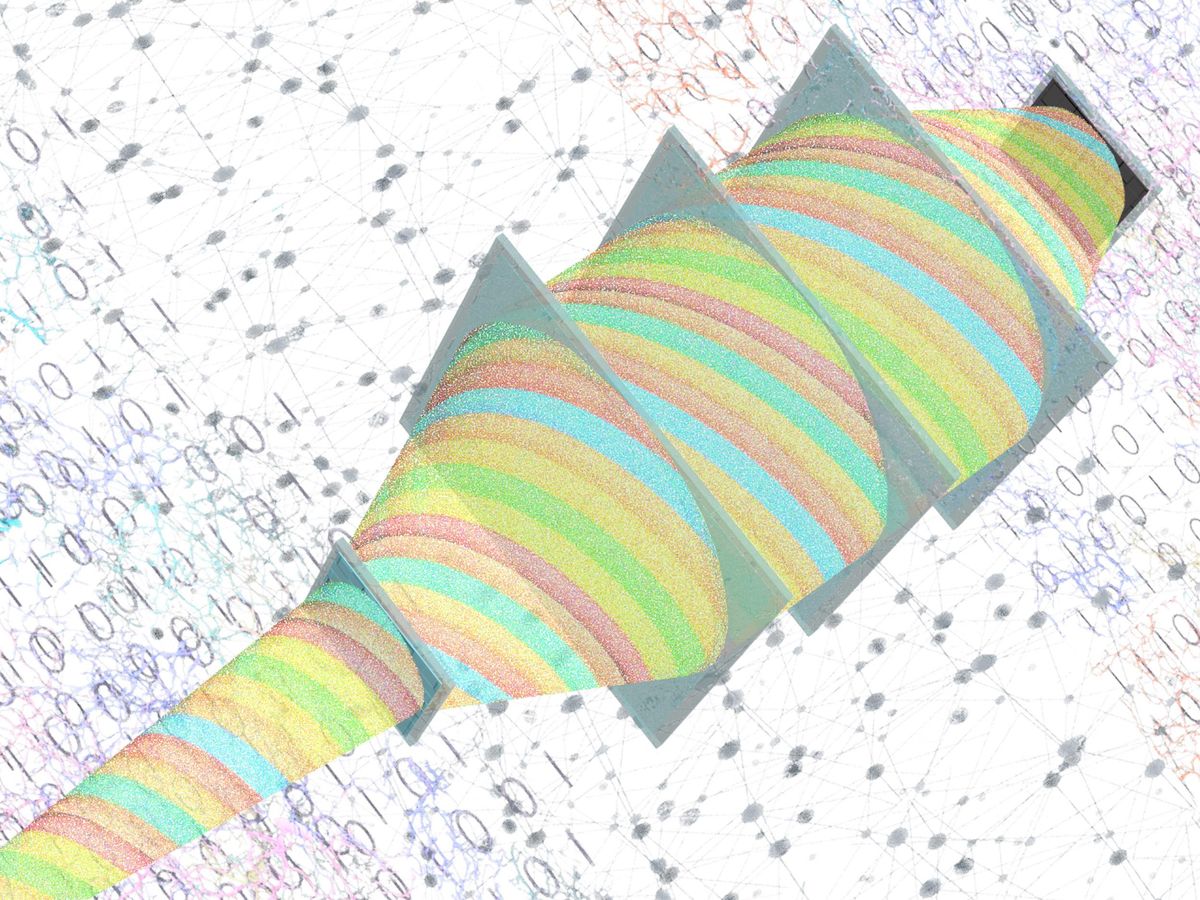

For example, a diffractive optical neural network is composed of a stack of layers, each possessing thousands of pixels that can diffract, or scatter, light. These diffractive features serve as the neurons in a neural network. Deep learning is used to design each layer so that when input in the form of light shines on the stack, the output light encodes the results of complex tasks such as image classification or image reconstruction. All this computing “does not consume power, except for the illumination light,” says study senior author Aydogan Ozcan, an optical engineer at the University of California, Los Angeles.

Such diffractive networks could analyze large amounts of data at the speed of light to perform tasks such as identifying objects. For example, they could help autonomous vehicles instantly recognize pedestrians or traffic signs, or help medical diagnostic systems quickly identify evidence of disease. Conventional electronics must first image those items, then convert those signals to data, and finally run programs to figure out what the objects are. In contrast, diffractive networks only need to receive light reflected off or otherwise arriving from those items; they can identify an object because the light from it gets mostly diffracted toward a single pixel assigned to that kind of object.
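To make the readout idea concrete, here is a minimal sketch. It assumes the trained diffractive stack can be modeled as a fixed linear map (the complex matrix `transfer` below, with made-up values) from input field samples to output detector pixels, and that each output pixel is assigned to one object class. A detector measures only intensity, the squared magnitude of the field, so the predicted class is whichever assigned pixel collects the most light. The matrix, input field, and class labels are hypothetical illustrations, not values from the study.

```python
# Toy model of a diffractive classifier's readout (all values hypothetical).
# Assumption: the trained optical stack acts as a fixed complex matrix that maps
# input field samples to the field arriving at each output detector pixel.

def propagate(transfer, field):
    """Return the output field as a complex matrix-vector product."""
    return [sum(t * f for t, f in zip(row, field)) for row in transfer]

def classify(transfer, field, labels):
    """Pick the class whose assigned detector pixel collects the most light."""
    out = propagate(transfer, field)
    intensities = [abs(e) ** 2 for e in out]  # detectors measure |E|^2, not phase
    best = max(range(len(intensities)), key=intensities.__getitem__)
    return labels[best], intensities

# Three detector pixels (one per class) and four input field samples.
labels = ["pedestrian", "stop sign", "car"]
transfer = [
    [0.9, 0.1j, 0.0, 0.2],
    [0.1, 0.8, 0.3j, 0.0],
    [0.0, 0.2, 0.1, 0.9j],
]
field = [1.0, 0.2, 0.0, 0.1]  # light arriving from the object (hypothetical)

label, intensities = classify(transfer, field, labels)
print(label)  # → pedestrian
```

In the physical device no software runs at all: the "matrix-vector product" happens as light passes through the layers, and picking the brightest pixel is the only step a detector needs.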

Previously, Ozcan and his colleagues designed a monochromatic diffractive network using a series of thin 64-square-centimeter polymer wafers fabricated using 3D printing. When illuminated with a single wavelength or color of light, this diffractive network could implement a single matrix multiplication operation. These calculations, which involve multiplying grids of numbers known as matrices, are key to many computational tasks, including operating neural networks.
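Why a stack of layers amounts to a single matrix multiplication at one wavelength can be sketched in a few lines. In this simplified toy model (an assumption, not the study's simulation code), each layer multiplies every pixel's field by a phase factor exp(iφ), which is a diagonal matrix, and free-space propagation between layers mixes pixels through a fixed complex matrix. Because every step is linear, the whole stack collapses into one matrix, so shining light through it computes one matrix-vector product. The 2-pixel size, the phase values, and the `propagation` matrix are invented for illustration; real layers have thousands of features and are modeled with diffraction theory.

```python
import cmath

def matmul(a, b):
    """Multiply two complex matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def matvec(m, v):
    """Multiply a complex matrix by a complex vector."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]

def phase_layer(phases):
    """Diagonal matrix: each diffractive feature delays its pixel's light by a phase."""
    n = len(phases)
    return [[cmath.exp(1j * phases[i]) if i == j else 0 for j in range(n)]
            for i in range(n)]

# Hypothetical free-space propagation coupling the two pixels
# (a 2x2 unitary "beam splitter" matrix stands in for real diffraction).
s = 1 / 2 ** 0.5
propagation = [[s, 1j * s], [1j * s, s]]

# Three layers, echoing the three printed layers in the study (phases are made up).
layers = [phase_layer([0.3, 1.2]), phase_layer([2.0, 0.5]), phase_layer([0.7, 1.9])]

# Collapse the stack into one end-to-end matrix, in the order light travels.
system = [[1, 0], [0, 1]]
for layer in layers:
    system = matmul(propagation, matmul(layer, system))

x = [1.0, 0.5]  # input field encoding the vector to be multiplied

# Step-by-step propagation through every layer...
field = x
for layer in layers:
    field = matvec(propagation, matvec(layer, field))

# ...matches a single matrix-vector product with the collapsed matrix.
direct = matvec(system, x)
print(all(abs(a - b) < 1e-12 for a, b in zip(field, direct)))  # → True
```

The design choice worth noting is that deep learning only chooses the phases; once they are fixed, the optical hardware is just one linear transformation of the incoming light.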

Now the researchers have developed a broadband diffractive optical processor that can accept many input wavelengths of light at once, allowing up to thousands of matrix multiplication operations to be “executed simultaneously at the speed of light,” Ozcan says.

In the new study, the scientists 3D-printed three diffractive layers, each with 14,400 diffractive features. Their experiments showed the diffractive network could successfully operate using two submillimeter-wavelength terahertz-frequency channels. Their computer models suggested these diffractive networks could accept up to roughly 2,000 wavelength channels simultaneously.

“We demonstrated the feasibility of massively parallel optical computing by employing a wavelength multiplexing scheme,” Ozcan says.
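The wavelength-multiplexing scheme can be illustrated with the same kind of toy model. Because diffraction depends on wavelength, one physical structure presents a different effective matrix to each color of light, so each wavelength channel can carry its own input vector and come out with its own matrix-vector product. In this sketch the wavelengths, per-channel matrices, and inputs are all hypothetical, and the matrices are real-valued only for readability; in the actual device they would follow from the layers' design, and the channels pass through the hardware at the same time rather than in a software loop.

```python
# Hypothetical per-wavelength transfer matrices of ONE diffractive stack.
# Keys are wavelengths in millimeters (terahertz light is submillimeter).
# Real-valued for readability; physical transfer matrices are complex.
channels = {
    0.60: [[1, 2], [3, 4]],
    0.75: [[0, 1], [1, 0]],
}

# Each wavelength channel carries a different input vector.
inputs = {
    0.60: [1, 1],
    0.75: [5, 7],
}

def matvec(m, v):
    """Multiply a matrix by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

# In hardware every channel propagates at once; this loop only emulates that.
outputs = {wl: matvec(channels[wl], inputs[wl]) for wl in channels}
print(outputs)  # → {0.6: [3, 7], 0.75: [7, 5]}
```

Scaling the dictionary to roughly 2,000 entries mirrors the channel count the researchers' models suggested, with each entry standing for a separate matrix multiplication done in the same pass of light.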

The scientists note it should prove possible to build diffractive networks that use visible light and other frequencies besides terahertz. Such optical neural nets can also be manufactured from a wide variety of materials and techniques.

All in all, they “may find applications in various fields, including, for example, biomedical imaging, remote sensing, analytical chemistry, and material science,” Ozcan says.

The scientists detailed their findings 9 January in the journal Advanced Photonics.


Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.