An artificial intelligence system from Google’s sibling company DeepMind stumbled on a new way to solve a foundational math problem at the heart of modern computing, a new study finds. A modification of the company’s game engine AlphaZero (famously used to defeat chess grandmasters and legends in the game of Go) outperformed an algorithm that had not been improved on for more than 50 years, researchers say.

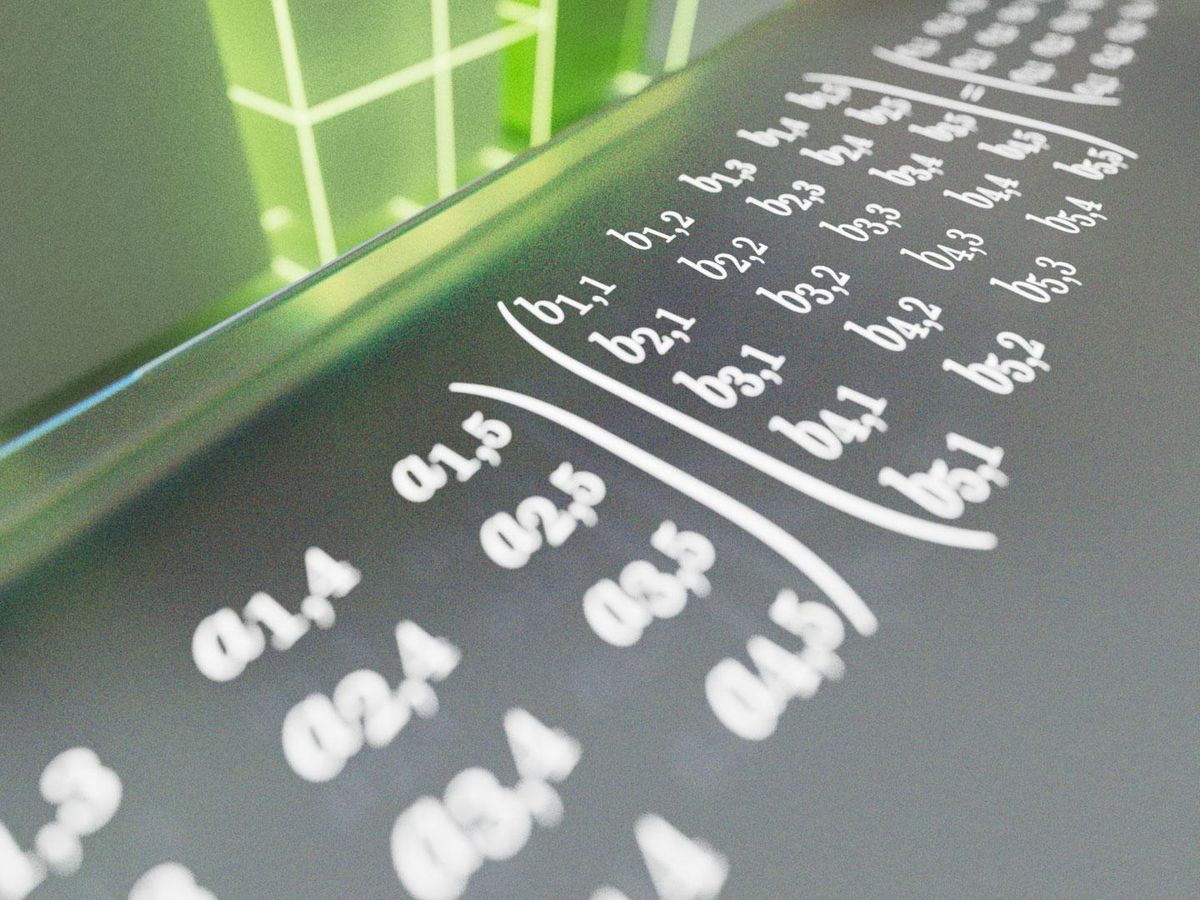

The new research focused on multiplying grids of numbers known as matrices. Matrix multiplication is an operation key to many computational tasks, such as processing images, recognizing speech commands, training neural networks, running simulations to predict the weather, and compressing data for sharing on the Internet.

“Finding new matrix-multiplication algorithms could help speed up many of these applications,” says study lead author Alhussein Fawzi, a research scientist at London-based DeepMind, a subsidiary of Google’s parent company Alphabet.

The standard technique for multiplying two matrices is to multiply the rows of one with the columns of the other. However, in 1969, the German mathematician Volker Strassen surprised the math world by discovering a more efficient method. For a pair of two-by-two matrices (ones that each have two rows and two columns), the standard algorithm takes eight multiplications. In contrast, Strassen’s takes only seven.
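To make the eight-versus-seven comparison concrete, here is a minimal Python sketch of both approaches for two-by-two matrices. The function names and the nested-list representation are illustrative choices, not something taken from the study; the seven products follow the standard textbook statement of Strassen’s scheme.

```python
def standard_2x2(A, B):
    """Row-by-column product of two 2x2 matrices: 8 scalar multiplications."""
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B
    return [
        [a11 * b11 + a12 * b21, a11 * b12 + a12 * b22],
        [a21 * b11 + a22 * b21, a21 * b12 + a22 * b22],
    ]


def strassen_2x2(A, B):
    """Strassen's scheme: 7 scalar multiplications, at the cost of extra additions."""
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B
    # The seven products; each one multiplies a sum of A entries by a sum of B entries.
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)
    # Recombine the products into the four entries of the result.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(standard_2x2(A, B))  # [[19, 22], [43, 50]]
print(strassen_2x2(A, B))  # [[19, 22], [43, 50]]
```

The saving looks small at this size, but the entries can themselves be matrix blocks, so one fewer multiplication compounds when the scheme is applied recursively to large matrices.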

However, despite decades of research since Strassen’s breakthrough, larger versions of this problem remain unsolved. For example, it is still unknown how efficiently one can multiply a pair of matrices as small as three by three, Fawzi says.

“I felt from the very beginning that machine learning could help a lot in this field, by finding the best patterns—that is, which entries to combine in the matrices and how to combine them—to get the right result,” Fawzi says.

In the new study, Fawzi and his colleagues explored how AI might help automatically discover new matrix-multiplication algorithms. They built on Strassen’s research, which focused on ways to break down 3D arrays of numbers called matrix-multiplication tensors into their elementary components.

The scientists developed an AI system dubbed AlphaTensor based on AlphaZero, which they earlier developed to master chess, Go, and other games. They converted the problem of breaking down tensors into a single-player game and trained AlphaTensor to find efficient ways to win the game.
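The idea of breaking a matrix-multiplication tensor into elementary components can be sketched in a few lines of Python. The sketch below builds the tensor for two-by-two matrices and checks that Strassen’s seven products, written as coefficient vectors, add up to it exactly. The flattening order, the function names, and the `U`, `V`, `W` layout are assumptions made for illustration, and may differ from the conventions used in the study.

```python
def matmul_tensor(n):
    """Build the n x n matrix-multiplication tensor as a nested list.

    T[a][b][c] is 1 when entry a of A times entry b of B contributes to
    entry c of C (entries flattened row by row), and 0 otherwise.
    """
    size = n * n
    T = [[[0] * size for _ in range(size)] for _ in range(size)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                T[i * n + k][k * n + j][i * n + j] = 1
    return T


# Strassen's seven products as coefficient vectors over (x11, x12, x21, x22).
U = [  # combination of A entries used in each product
    [1, 0, 0, 1], [0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 0, 1],
    [1, 1, 0, 0], [-1, 0, 1, 0], [0, 1, 0, -1],
]
V = [  # combination of B entries used in each product
    [1, 0, 0, 1], [1, 0, 0, 0], [0, 1, 0, -1], [-1, 0, 1, 0],
    [0, 0, 0, 1], [1, 1, 0, 0], [0, 0, 1, 1],
]
W = [  # how each product is added into (c11, c12, c21, c22)
    [1, 0, 0, 1], [0, 0, 1, -1], [0, 1, 0, 1], [1, 0, 1, 0],
    [-1, 1, 0, 0], [0, 0, 0, 1], [1, 0, 0, 0],
]


def sum_of_rank_one_terms(U, V, W):
    """Add up the elementary components u (x) v (x) w, one per product."""
    size = len(U[0])
    S = [[[0] * size for _ in range(size)] for _ in range(size)]
    for u, v, w in zip(U, V, W):
        for a in range(size):
            for b in range(size):
                for c in range(size):
                    S[a][b][c] += u[a] * v[b] * w[c]
    return S


# Seven elementary components reproduce the 2x2 tensor exactly.
print(len(U), sum_of_rank_one_terms(U, V, W) == matmul_tensor(2))  # 7 True
```

Under this framing, each elementary component corresponds to one multiplication, so a decomposition with fewer components is a faster algorithm. That is what makes the problem expressible as a game scored by the number of moves.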

The researchers noted that this game proved extraordinarily challenging. The number of possible algorithms that AlphaTensor has to consider is much greater than the number of atoms in the universe, even for small cases of matrix multiplication. In one scenario, there were more than 10³³ possible moves at each step of the game.

“The search space was gigantic,” Fawzi says.

When AlphaTensor began, it had no knowledge about existing algorithms for matrix multiplication. By playing the game repeatedly and learning from the outcomes, it gradually improved.

“AlphaTensor is the first AI system for discovering novel, efficient, and provably correct algorithms for fundamental tasks such as matrix multiplication,” Fawzi says.

AlphaTensor eventually discovered up to thousands of matrix-multiplication algorithms for each size of matrix it examined, revealing that the realm of matrix-multiplication algorithms was richer than previously thought. These included algorithms faster than any previously known. For example, AlphaTensor discovered an algorithm for multiplying four-by-four matrices in just 47 steps, improving on Strassen’s 50-year-old algorithm, which uses 49.
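The figure of 49 comes from applying Strassen’s two-by-two scheme recursively: a four-by-four matrix can be treated as a two-by-two grid of two-by-two blocks, so it needs seven block products of seven multiplications each. The short Python sketch below reproduces that count next to the row-by-column count; the function names are illustrative, and the sketch only counts multiplications rather than performing them.

```python
def standard_multiplications(n):
    """Scalar multiplications used by the row-by-column method on n x n matrices."""
    return n ** 3


def strassen_multiplications(n):
    """Scalar multiplications used by recursive Strassen on n x n matrices.

    Assumes n is a power of two so the matrix splits evenly into 2x2 blocks.
    """
    if n < 1 or n & (n - 1) != 0:
        raise ValueError("n must be a positive power of two")
    if n == 1:
        return 1
    # Seven block products, each on matrices of half the size.
    return 7 * strassen_multiplications(n // 2)


for n in (2, 4, 8):
    print(n, standard_multiplications(n), strassen_multiplications(n))
# 2 8 7
# 4 64 49
# 8 512 343
```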

“The first time we saw that we were able to improve over existing known algorithms was very exciting,” Fawzi recalls.

These new algorithms possess a variety of mathematical properties with a range of potential applications. The scientists modified AlphaTensor to find algorithms that are fast on a given hardware device, such as the Nvidia V100 GPU and Google TPU v2. They discovered algorithms that multiply large matrices 10 to 20 percent faster than the commonly used algorithms on the same hardware.

“AlphaTensor provides an important proof of concept that with machine learning, we can go beyond existing state-of-the-art algorithms, and therefore that machine learning will play a fundamental role in the field of algorithmic discovery going forward,” Fawzi says. “I believe that in the next few years, many new algorithms for fundamental computational tasks that we use every day will be discovered with the help of machine learning.”

AlphaTensor started with no knowledge about the problem it tackled. This suggests that an interesting future direction might be to combine it with approaches that embed mathematical knowledge about the problem, “which will potentially allow the system to scale further,” Fawzi says.

In addition, “we are also looking to apply AlphaTensor to other fundamental operations used in computer science,” Fawzi says. “Many problems in computer science and math have similarities to the way we framed the problem in our research, so we believe that our paper will spur new results in mathematics and computer science with the help of machine learning.”

The scientists detailed their findings on 5 October 2022 in the journal Nature.

This article appears in the January 2023 print issue as “DeepMind Plays Math and Wins.”

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.