Neural networks can achieve superhuman performance in many tasks, but these AI systems can suddenly and completely forget what they have learned if asked to absorb new memories. Now a new study reveals a novel way for neural networks to undergo sleeplike phases and help prevent such amnesia.

A major challenge that artificial neural networks face is “catastrophic forgetting.” When they learn a new task, they have an unfortunate tendency to abruptly and entirely forget what they previously learned. Essentially, the new knowledge overwrites the old.

In contrast, the human brain is capable of lifelong learning of new tasks without interfering with its ability to perform ones it previously committed to memory. Prior work suggests the human brain learns best when rounds of learning are interspersed with periods of sleep. Sleep apparently helps recent experiences get incorporated into the pool of long-term memories.

“Reorganization of memory might be actually one of the major reasons why organisms need to go through the sleep stage,” says study coauthor Erik Delanois, a computational neuroscientist at the University of California, San Diego.

Previous research had sought to solve catastrophic forgetting by having AIs practice a semblance of sleep. For example, when neural networks learn a new task, a strategy known as interleaved training simultaneously feeds the machines old data they had previously learned from in order to help them preserve their knowledge of the past. This approach was previously thought to mimic how the brain works during sleep—old memories get replayed.
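The batching idea behind interleaved training can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the method of any particular study: the function name `interleaved_batches`, the `old_fraction` parameter, and the toy data are all hypothetical.

```python
import random

def interleaved_batches(old_data, new_data, batch_size, old_fraction=0.5, seed=0):
    """Yield training batches that mix new-task samples with replayed old-task samples.

    Hypothetical helper: every new sample is used exactly once, and each batch
    is topped up with randomly drawn samples from the old task.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    if not 0 <= old_fraction < 1:
        raise ValueError("old_fraction must be in [0, 1)")
    rng = random.Random(seed)
    old_pool = list(old_data)
    new_pool = list(new_data)
    # Always leave room for at least one new sample per batch.
    n_new = max(1, batch_size - int(batch_size * old_fraction))
    n_old = batch_size - n_new
    rng.shuffle(new_pool)
    for start in range(0, len(new_pool), n_new):
        new_part = new_pool[start:start + n_new]
        old_part = rng.sample(old_pool, min(n_old, len(old_pool)))
        batch = new_part + old_part
        rng.shuffle(batch)  # avoid a fixed new-then-old ordering inside a batch
        yield batch

# Toy data: each sample is tagged with the task it came from.
old_task = [("old", i) for i in range(10)]
new_task = [("new", i) for i in range(8)]
batches = list(interleaved_batches(old_task, new_task, batch_size=4))
```

Note that the sketch needs the full `old_task` list to be kept around, which is exactly the storage cost that makes this approach expensive.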

However, scientists had assumed interleaved training required feeding a neural network all the data it originally used to learn old skills every time it wanted to learn something new. Not only does this require a lot of time and data, it also does not appear to be what actual brains do during real sleep—they neither keep all the data they needed to learn old tasks, nor would they have time to replay all that data while sleeping.

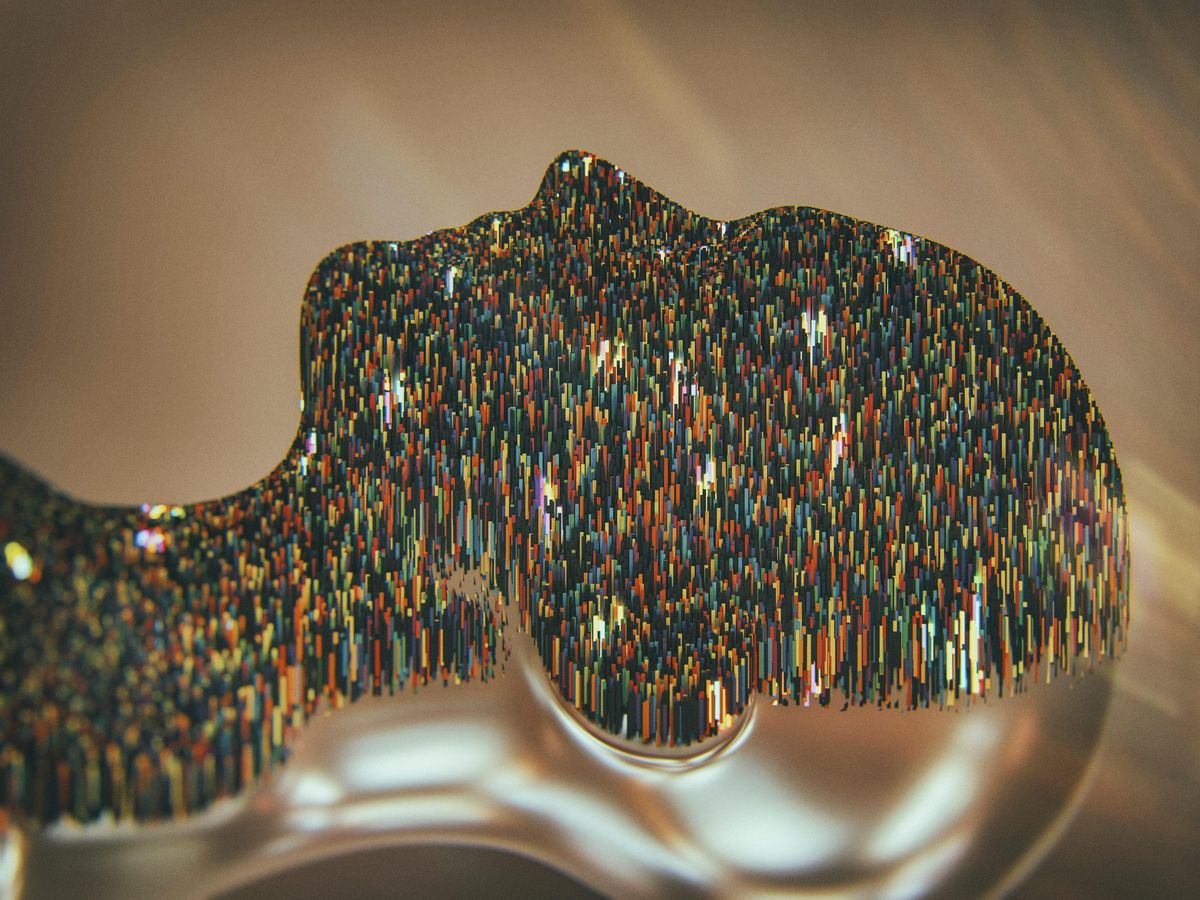

In the new study, the researchers analyzed the mechanisms behind catastrophic forgetting and the role of sleep in preventing it. Instead of using conventional neural networks, they used a “spiking neural network” that more closely mimics the human brain.

In artificial neural networks, components dubbed neurons are fed data and cooperate to solve a problem, such as recognizing faces. The neural net repeatedly adjusts the synapses—the links between its neurons—and sees if the resulting patterns of behavior are better at finding a solution. Over time, the network discovers which patterns are best at computing results. It then adopts these patterns as defaults, mimicking the process of learning in the human brain.
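As a rough illustration of learning by repeatedly adjusting connection strengths, the sketch below trains a single artificial neuron to compute the logical AND of two inputs. It swaps in the classic perceptron learning rule, which is far simpler than the training used for modern networks; the function names and the toy task are assumptions made for this example.

```python
def train_perceptron(samples, epochs=20, lr=1):
    """Train one neuron with the perceptron rule (illustrative sketch only)."""
    weights = [0, 0]  # the two "synapses"
    bias = 0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            output = 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0
            error = target - output  # 0 when the neuron is already right
            # Nudge each connection in the direction that reduces the error.
            weights[0] += lr * error * x1
            weights[1] += lr * error * x2
            bias += lr * error
    return weights, bias

def predict(weights, bias, x1, x2):
    """Return the trained neuron's 0/1 answer for one input pair."""
    return 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0

# Toy task: output 1 only when both inputs are 1.
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
```

After training, `predict(weights, bias, 1, 1)` returns 1 and the other three input pairs return 0.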

In most artificial neural networks, a neuron’s output is a number that varies continuously as the input it is fed changes. This is roughly analogous to the number of signals a biological neuron might fire over a span of time.

In contrast, in a spiking neural network, a neuron “spikes,” or generates an output signal, only after it receives a certain number of input signals over a given time, copying how real biological neurons behave. Since spiking neural networks only rarely fire spikes, they shuffle around much less data than typical artificial neural networks and in principle require much less power and communication bandwidth.
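The difference between the two kinds of neuron can be sketched in Python. The first function is a conventional neuron with a continuous output. The second is a leaky integrate-and-fire neuron, a common textbook model of spiking behavior that is used here as an assumption, since the article does not say which neuron model the study relied on. The threshold, leak factor, and input values are likewise illustrative.

```python
import math

def rate_neuron(inputs, weights):
    """Conventional artificial neuron: a continuous output between 0 and 1."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1.0 / (1.0 + math.exp(-total))  # sigmoid activation

def integrate_and_fire(input_train, threshold=1.0, leak=0.9):
    """Leaky integrate-and-fire neuron: outputs 1 (a spike) or 0 at each time step.

    Input accumulates in `potential`, which decays by `leak` every step.
    The neuron spikes only when the potential reaches `threshold`, then resets.
    """
    potential = 0.0
    spikes = []
    for current in input_train:
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes

continuous_output = rate_neuron([1.0, 0.5], [0.8, -0.4])
spike_train = integrate_and_fire([0.4, 0.4, 0.4, 0.0, 0.0, 0.4])
```

With these assumed values the spiking neuron stays silent for two steps, fires once on the third when enough input has accumulated, and is silent again afterward, so `spike_train` is `[0, 0, 1, 0, 0, 0]`. Most time steps carry no signal at all, which is where the potential savings in power and bandwidth come from.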

As expected, the spiking neural network displayed catastrophic forgetting when it first learned to spot horizontal pairs of particles in a grid and was then trained to look for vertical pairs. Then, between rounds of learning, the researchers had the spiking neural network go through intervals where the ensembles of neurons involved in learning the first task were reactivated. This more closely imitates what neuroscientists currently think occurs during sleep.

“The nice part is that we do not explicitly store data associated with earlier memories to artificially replay them during sleep in order to keep forgetting at bay,” says study coauthor Pavel Sanda, a computational neuroscientist at the Institute of Computer Science of the Czech Academy of Sciences.

The scientists found their strategy helped prevent catastrophic forgetting. The spiking neural network was capable of performing both tasks after undergoing sleeplike phases. They suggested their strategy helped preserve the patterns of synapses associated with both old and new tasks.

“Our work highlights the utility in developing biologically inspired solutions,” Delanois says.

The researchers note that their findings are not limited to spiking neural networks. Upcoming work suggests that sleeplike phases can help “overcome catastrophic forgetting in standard artificial neural networks,” Sanda says.

The scientists detailed their findings 18 November in the journal PLOS Computational Biology.

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.