If you’re going to let a car drive itself, it had better have an exquisitely detailed picture of its surroundings. So far, the industry has favored the laser-powered precision of lidar. But startup Nodar, based in Somerville, Mass., says camera-based systems could do better.

Lidar, which is short for light detection and ranging, scans the environment with laser beams and then picks up the reflections. Measuring how long it takes for the light to bounce back makes it possible to judge the distance and use that information to construct a 3D image. Most of today’s autonomous vehicles, including those made by Waymo and Cruise, rely heavily on lidar, which can cost tens of thousands of dollars for just a single unit. Nodar says its alternative would cost far less.
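
The time-of-flight principle comes down to one line of arithmetic. The pulse travels to the target and back, so the range is half the round-trip distance. The sketch below is illustrative only. `tof_range_m` is a hypothetical helper, not part of any lidar vendor's software, and it ignores the small slowdown of light in air.

```python
# Speed of light in vacuum, m/s (light in air is only ~0.03% slower; ignored here)
SPEED_OF_LIGHT = 299_792_458.0


def tof_range_m(round_trip_s: float) -> float:
    """Range to a target from a lidar pulse's round-trip time (hypothetical helper).

    The pulse covers the distance twice (out and back), hence the division by 2.
    """
    return SPEED_OF_LIGHT * round_trip_s / 2.0


# A return arriving 1 microsecond after emission comes from roughly 150 m away.
print(f"{tof_range_m(1e-6):.1f} m")
```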

Camera-based 3D vision systems have been considerably worse at judging distances than lidar, and they often struggle in low light or inclement weather. But thanks to advances in automotive camera technology and Nodar’s proprietary software, CEO Leaf Jiang says that’s no longer the case.

“Camera-based systems, in general, have always gotten a bad rap,” he says. “We’re hoping to dispel those myths with our new results.”

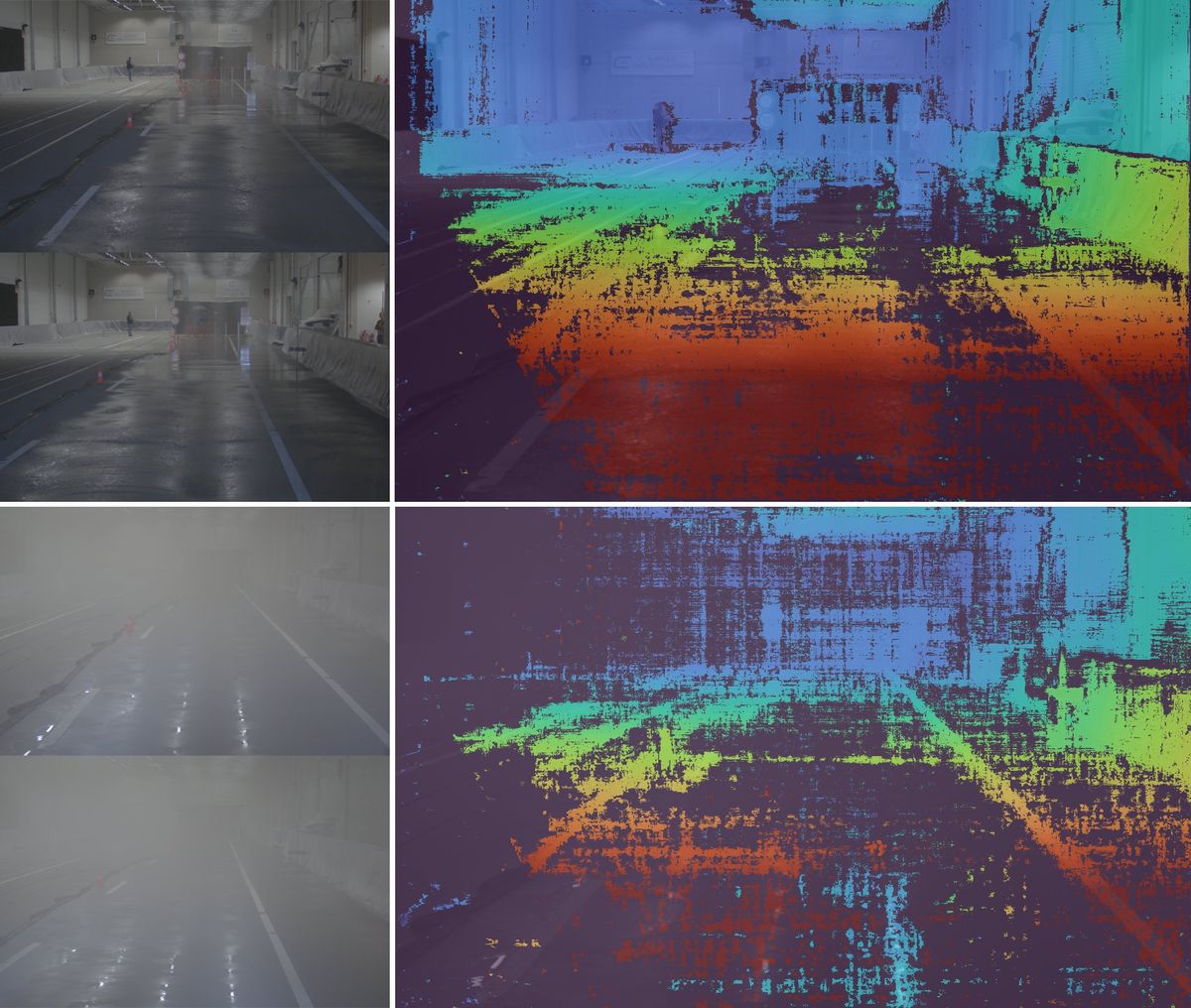

In recent testing, he says, the company’s technology consistently outperformed lidar on both resolution and range in a variety of scenarios, including night driving and heavy fog. In particular, it was able to detect small objects, like pieces of lumber or traffic cones, at twice the distance of lidar, which Jiang says is important for highway driving at higher speeds.

Nodar takes images from two cameras spaced well apart and then compares their views to construct a triangle, with the object at the far apex. It then calculates how far away an object is. Such stereo camera setups are well known; several automotive suppliers incorporate them into advanced driver-assistance systems (ADAS).
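
For a rectified stereo pair, both images are aligned so that matching points lie on the same pixel row. In that case the triangle reduces to the textbook pinhole-camera relation Z = f·B/d. Here f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity, meaning the horizontal pixel shift of the object between the two images. The following is a minimal sketch under those assumptions. The function name and numbers are hypothetical, and this is the standard relation rather than Nodar's implementation.

```python
def stereo_depth_m(x_left_px: float, x_right_px: float,
                   focal_px: float, baseline_m: float) -> float:
    """Depth of a point seen in a rectified stereo pair (textbook pinhole model).

    x_left_px / x_right_px: column of the same point in the left and right images.
    """
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    return focal_px * baseline_m / disparity


# Hypothetical numbers: 5,000 px focal length, 1.2 m baseline, 30 px disparity.
print(stereo_depth_m(1030.0, 1000.0, 5000.0, 1.2))  # 200.0 m
```

For the same distance, a wider baseline produces a larger disparity. That is why camera spacing matters so much for long-range triangulation.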

However, the approach faces two challenges. The two cameras have to be precisely calibrated, which is tricky to do on a vibrating car exposed to a wide range of environmental conditions. Normally this is achieved using exquisitely engineered mounts that keep the cameras stable, says Jiang, but this requires them to be close together. That’s a problem because the smaller the baseline distance between the cameras, the harder it is to triangulate to distant objects.
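
Why a short baseline hurts at long range can be seen by differentiating the same pinhole stereo relation, Z = fB/d (a textbook model, assumed here). A fixed disparity-matching error Δd turns into a depth error that grows with the square of distance and shrinks in proportion to the baseline B:

$$
Z = \frac{fB}{d}
\;\Longrightarrow\;
\left|\frac{\partial Z}{\partial d}\right| = \frac{fB}{d^{2}} = \frac{Z^{2}}{fB}
\;\Longrightarrow\;
\Delta Z \approx \frac{Z^{2}}{fB}\,\Delta d
$$

Doubling B therefore halves the depth error at any given distance. The range at which the error reaches a fixed tolerance grows as the square root of fB.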

To get around this, Nodar has developed patented auto-calibration software that allows you to place cameras much farther apart while making the system much less sensitive to instabilities. Normally camera calibration is done in carefully controlled environments using specially designed visual targets, but Nodar’s software uses cues in real-world scenes and is able to sync the two cameras up on every frame. This is computationally complex, says Jiang, but Nodar has developed highly efficient algorithms that can run in real time on off-the-shelf automotive chips.
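
Nodar's auto-calibration algorithm is patented and not described in detail. The sketch below therefore shows only the general idea of calibrating from scene content rather than from a target, and it uses a deliberately simplified model (an assumption, not Nodar's method). It assumes the images are already nominally rectified and that a feature matcher (not shown) has paired up points seen by both cameras. In a perfectly calibrated rig, each pair would sit on the same pixel row. A small relative roll θ (radians) and a vertical offset t (pixels) instead leave a residual of roughly dy ≈ θ·x + t, with x measured from the image center, and an ordinary least-squares line fit recovers both values. The name `estimate_roll_and_offset` is hypothetical.

```python
from statistics import fmean


def estimate_roll_and_offset(matches):
    """Least-squares fit of dy = theta * x + t (hypothetical, simplified model).

    matches: iterable of (x, y_left, y_right) tuples for one scene point seen by
    both cameras, with x in pixels measured from the image center.
    Returns (theta, t): small relative roll in radians, vertical offset in pixels.
    """
    matches = list(matches)
    xs = [x for x, _, _ in matches]
    dys = [y_right - y_left for _, y_left, y_right in matches]
    x_mean, dy_mean = fmean(xs), fmean(dys)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need matches at two or more distinct x positions")
    sxy = sum((x - x_mean) * (dy - dy_mean) for x, dy in zip(xs, dys))
    theta = sxy / sxx              # slope of the row residual: relative roll
    t = dy_mean - theta * x_mean   # intercept: vertical offset
    return theta, t


# Synthetic check: simulate a 0.002 rad roll and a 1.5 px vertical offset.
true_theta, true_t = 0.002, 1.5
fake_matches = [(x, 400.0, 400.0 + true_theta * x + true_t)
                for x in range(-1200, 1201, 200)]
theta, t = estimate_roll_and_offset(fake_matches)
print(f"roll ~ {theta:.4f} rad, offset ~ {t:.2f} px")
```

The fit could be re-run on every frame, with θ·x + t subtracted from the right image's row coordinates, so that the correction follows slow drift from vibration or temperature. A production system would also have to handle pitch, yaw, lens distortion, and outlier matches, all of which this sketch ignores.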

By allowing the cameras to be placed much farther apart, Nodar’s system makes it possible to triangulate to objects as far out as 1,000 meters, says Jiang, which is substantially farther than most lidar sensors can manage.
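
To get a feel for the numbers, the sketch below plugs in the test rig described later in the article (1.2-meter baseline, 30-degree lenses). It computes the disparity an object produces at several ranges, again using the textbook pinhole relation d = fB/Z. The article gives only the cameras' resolution (5.4 megapixels). The 2,880-pixel image width and the reading of 30 degrees as the horizontal field of view are therefore illustrative assumptions. A hypothetical 0.3-meter baseline is included for comparison.

```python
import math


def focal_length_px(image_width_px: float, hfov_deg: float) -> float:
    """Pinhole focal length in pixels: half the width subtends half the field of view."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def disparity_px(range_m: float, focal_px: float, baseline_m: float) -> float:
    """Horizontal pixel shift of a point at range_m between the two cameras."""
    return focal_px * baseline_m / range_m


# ASSUMED: 2,880-px-wide images and a 30-degree horizontal field of view.
f = focal_length_px(2880, 30.0)

for baseline in (1.2, 0.3):  # the article's rig vs. a hypothetical compact rig
    for z in (50, 200, 1000):
        d = disparity_px(z, f, baseline)
        print(f"B = {baseline} m, Z = {z:>4} m: disparity {d:7.2f} px")
```

Under these assumptions, at 1,000 meters the 1.2-meter baseline still yields a disparity of about 6.4 pixels. The 0.3-meter baseline yields only about 1.6 pixels, so each pixel of matching error is a far larger fraction of the signal.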

The other challenge for cameras is that, unlike lidar, which has its own light source, they rely on ambient light. That’s why they often struggle at night or in bad weather.

To see how its system performed in these situations, Nodar conducted a series of tests on a remote airstrip in Maine with almost zero light pollution. The company also worked with an automotive environmental-simulation chamber in Germany that can recreate conditions like rain and fog. The team collected data using a pair of 5.4-megapixel cameras with 30-degree field-of-view lenses spaced 1.2 meters apart and compared the results against a high-end 1,550-nanometer automotive lidar.

In broad daylight, Nodar’s setup generated 40 million 3D data points per second, compared with the lidar’s 600,000. In extremely heavy rain, the number of valid data points dropped by only around 30 percent, while for lidar the drop was roughly 60 percent. And in fog with visibility of roughly 45 meters, 70 percent of Nodar’s distance measurements were still accurate, compared with just 20 percent for lidar.
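
Putting those figures side by side is simple arithmetic. The snippet below assumes that the rain-induced drops apply to the daylight point rates, which the article does not state explicitly.

```python
stereo_pts_per_s = 40_000_000  # Nodar's setup, daylight
lidar_pts_per_s = 600_000      # high-end lidar, daylight

print(f"daylight density ratio: {stereo_pts_per_s / lidar_pts_per_s:.0f}x")

# ASSUMPTION: the heavy-rain drops (30% and 60%) apply to the daylight rates above.
stereo_rain = stereo_pts_per_s * (1 - 0.30)
lidar_rain = lidar_pts_per_s * (1 - 0.60)
print(f"heavy rain: stereo ~{stereo_rain:,.0f}/s, lidar ~{lidar_rain:,.0f}/s")
```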

At night, using high-beam headlights, Nodar’s system could detect a 12-centimeter-high piece of lumber from 130 meters away, compared with less than 50 meters for lidar. Lidar’s range was similarly limited for a 70-centimeter-high traffic cone, but Nodar’s technology could spot it from 200 meters away.
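
One way to appreciate the lumber result is to estimate how many pixel rows each object occupies. The sketch below uses the pinhole model with the rig's 30-degree lenses, read as the horizontal field of view. It also assumes a 2,880-pixel image width with square pixels, since the article gives only the 5.4-megapixel resolution. From these it estimates each test object's apparent height as h_px = f·H/Z.

```python
import math


def focal_length_px(image_width_px: float, hfov_deg: float) -> float:
    """Pinhole focal length in pixels from image width and horizontal field of view."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def apparent_height_px(object_height_m: float, range_m: float, focal_px: float) -> float:
    """Approximate number of pixel rows an upright object spans at a given range."""
    return focal_px * object_height_m / range_m


# ASSUMED: 2,880-px-wide images, 30-degree horizontal FOV, square pixels.
f = focal_length_px(2880, 30.0)
lumber_px = apparent_height_px(0.12, 130, f)  # 12 cm lumber at 130 m
cone_px = apparent_height_px(0.70, 200, f)    # 70 cm cone at 200 m
print(f"lumber: ~{lumber_px:.1f} px tall, cone: ~{cone_px:.1f} px tall")
```

By this estimate, the lumber spans only about 5 pixel rows at 130 meters and the cone about 19 rows at 200 meters. This suggests how heavily the result depends on matching fine detail.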

The capabilities of automotive cameras are improving rapidly, says Jiang. Today’s devices are able to operate in very low light levels, he says, and can pick out fine details in a foggy scene not visible to the naked eye.

That hardware is complemented by the company’s proprietary stereo matching algorithm, which Jiang says can sync up images even when they’re blurry. This lets Nodar use longer exposure times to collect more light at night, and it also makes it possible to triangulate on fuzzy visual cues in fog or rain.

If Nodar’s technology works as the company says, the advantages would be “lower cost, longer range, better resolution, and easy integration, as they use off-the-shelf cameras,” says Gaurav Gupta, an analyst at Gartner. But the only people who can really validate the claims are the automotive companies Nodar is working with, he adds.

It’s also important to note that automotive lidar provides a 360-degree view around the vehicle, says Steven Waslander, director of the Toronto Robotics and AI Laboratory at the University of Toronto. It’s probably not fair to compare that performance against forward-facing stereo cameras, he says. If you wanted to replicate that 360-degree view with multiple stereo systems, he adds, it would cost more in terms of both money and computational resources.
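
Waslander's point about coverage can be roughly quantified. Tiling a full 360 degrees with pairs like the 30-degree rig Nodar tested would take at least 12 pairs, or 24 cameras, even with no overlap between neighbors. The helper below is a hypothetical back-of-the-envelope sketch. A real design could instead use wider lenses on the sides and rear and give up some range there.

```python
import math


def stereo_pairs_for_full_ring(hfov_deg: float, overlap_deg: float = 0.0) -> int:
    """Minimum number of stereo pairs to tile 360 degrees horizontally (hypothetical).

    overlap_deg: angular overlap between neighboring pairs' fields of view.
    """
    effective = hfov_deg - overlap_deg
    if effective <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    return math.ceil(360 / effective)


pairs = stereo_pairs_for_full_ring(30.0)
print(pairs, "pairs,", 2 * pairs, "cameras")
```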

Jiang says that Nodar’s improved range and resolution could be particularly important for highway driving, where higher speeds and longer braking distances make detecting distant objects crucial. But Mohit Sharma, a research analyst at Counterpoint Research, points out that emerging lidar sensors using optical phased arrays, such as the lidar-on-a-chip made by Analog Photonics, will allow much faster scanning speeds suitable for highway driving.
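
The importance of range at highway speed can be seen from the usual stopping-distance estimate: reaction distance plus braking distance, s = v·t_r + v²/(2a). The reaction time and deceleration below are assumed round numbers, not figures from the article or from any standard.

```python
def stopping_distance_m(speed_kmh: float, reaction_s: float, decel_mps2: float) -> float:
    """Distance covered while reacting plus distance to brake to a stop."""
    v = speed_kmh / 3.6                  # km/h -> m/s
    reaction = v * reaction_s            # travel before the brakes engage
    braking = v ** 2 / (2 * decel_mps2)  # constant-deceleration stop
    return reaction + braking


# ASSUMED: 1.0 s total perception/reaction latency and 6 m/s^2 braking.
for kmh in (50, 90, 120):
    print(f"{kmh:>3} km/h: ~{stopping_distance_m(kmh, 1.0, 6.0):.0f} m")
```

Under these assumptions, a car at 120 km/h needs roughly 126 meters to stop. That is well beyond the sub-50-meter range at which the lidar detected the lumber in Nodar's night test.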

Ultimately, Sharma thinks no one technology is going to be a silver bullet for autonomous vehicles. “I believe sensor fusion is the best way to deal with complexities of autonomous driving and innovation in both lidar and camera technology will be helpful in reaching full self driving,” he says.

Edd Gent is a freelance science and technology writer based in Bengaluru, India. His writing focuses on emerging technologies across computing, engineering, energy and bioscience. He's on Twitter at @EddytheGent and email at edd dot gent at outlook dot com. His PGP fingerprint is ABB8 6BB3 3E69 C4A7 EC91 611B 5C12 193D 5DFC C01B. His public key is here. DM for Signal info.