If robots are ever going to drive us through crowded cities, they will need an accurate three-dimensional model of the world around them. The iconic spinning lidars—using pulses of laser light to gather depth information—that festooned early robo-taxis are slowly giving way to smaller and cheaper solid-state devices. But lidars remain bulky, power hungry and too expensive for all but high-end applications.

Now researchers at Stanford University have developed an innovative system that can integrate with almost any mass-produced CMOS image sensor to capture 3D data at potentially a fraction of the price of today’s lidars. It relies on the piezoelectric effect, the process by which deforming some materials generates electricity, and vice versa.

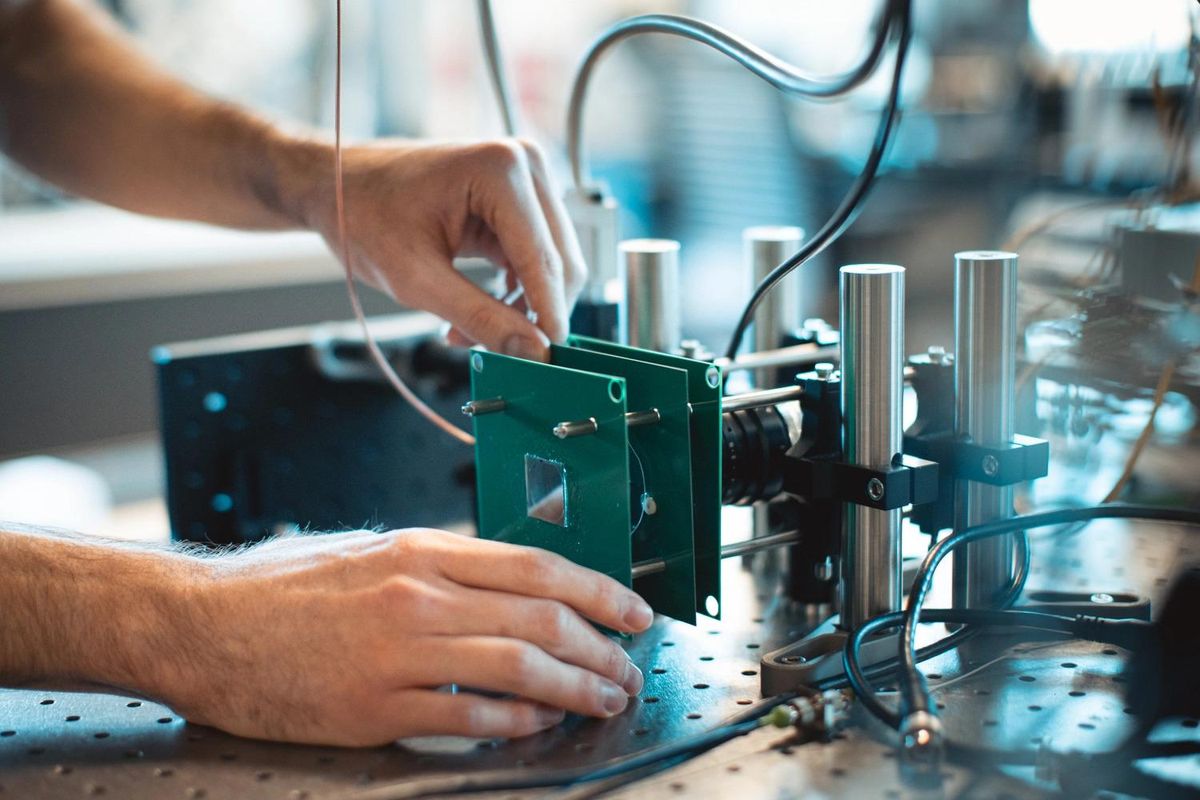

Okan Atalar and Amin Arbabian in Stanford’s electrical engineering department started with thin-film lithium niobate, a material often used for waveguides and optical switches in cellphones and communications systems. Coating the film with transparent electrodes enabled them to excite the crystal, setting up an acoustic standing wave that modulates the intensity of light passing through it.

This allowed them to perform a modulated time-of-flight (MToF) calculation that captures the distance to objects in the scene. Time of flight works as you might expect: Measure the time from when a pulse of light was emitted to when it returns and from that calculate the distance to the object it bounced off. The problem with plain time of flight is that light covers about 30 centimeters every nanosecond, so even the briefest pulses of laser light (a few nanoseconds) stretch across tens of centimeters or more, which limits the system’s accuracy.
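The arithmetic behind plain time of flight can be sketched in a few lines of Python. This is a minimal illustration, not code from the Stanford work: the function names and the example timings are assumptions chosen for clarity.

```python
# Minimal sketch of pulsed time-of-flight arithmetic.
# Function names and example values are illustrative assumptions.

C = 299_792_458.0  # speed of light in vacuum, meters per second


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to a target given the pulse's round-trip time in seconds.

    The light travels out and back, so the one-way distance is half
    the total path length.
    """
    return C * round_trip_s / 2.0


def pulse_length(duration_s: float) -> float:
    """Physical length in meters of a light pulse lasting duration_s seconds."""
    return C * duration_s


if __name__ == "__main__":
    # A pulse that returns after 100 nanoseconds came from a target
    # about 15 meters away.
    print(f"{distance_from_round_trip(100e-9):.2f} m")
    # A 1-nanosecond pulse is itself about 0.3 meters long.
    print(f"{pulse_length(1e-9):.2f} m")
```

Because a pulse lasting a single nanosecond already spans roughly 30 centimeters, the pulse duration sets a floor on how finely a simple pulsed system can resolve distance.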

In MToF, the laser signal is modulated and the detector decodes that modulation, measuring the phase shift of the return signal to determine the distance. Atalar’s optoacoustic modulator requires less than a watt of power, which is orders of magnitude less than traditional electro-optic devices.
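The phase-to-distance step can be illustrated with a short Python sketch. It assumes a single sinusoidal modulation frequency and an ideal phase measurement; the 10-megahertz value and the function names are hypothetical and are not taken from the Stanford paper.

```python
# Minimal sketch of phase-based (modulated) time-of-flight ranging.
# Assumes one sinusoidal modulation frequency and a noise-free phase
# measurement; names and the 10 MHz example are hypothetical.
import math

C = 299_792_458.0  # speed of light in vacuum, meters per second


def phase_from_distance(distance_m: float, mod_freq_hz: float) -> float:
    """Phase shift in radians (wrapped to [0, 2*pi)) seen at the detector.

    The round trip of 2*d delays the modulation by 2*d/c seconds,
    which corresponds to a phase of 2*pi*f*(2*d/c).
    """
    return (4.0 * math.pi * mod_freq_hz * distance_m / C) % (2.0 * math.pi)


def distance_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Invert the relation above: distance in meters from measured phase."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2*pi."""
    return C / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    f_mod = 10e6  # hypothetical modulation frequency, Hz
    phase = phase_from_distance(3.0, f_mod)
    print(f"phase for 3 m target: {phase:.4f} rad")
    print(f"recovered distance:   {distance_from_phase(phase, f_mod):.4f} m")
    print(f"unambiguous range:    {unambiguous_range(f_mod):.2f} m")
```

In this simplified model, a higher modulation frequency maps the same phase precision onto a smaller distance step, at the cost of a shorter unambiguous range. Targets beyond that range wrap around and appear closer than they are.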

In tests described in a recent paper in Nature Communications, the modulator was paired with a standard CMOS digital camera with 4-megapixel resolution. It constructed a relatively high-resolution depth map of several metal targets, locating them to within just a few centimeters. “It has a very low incremental cost because you’re only adding one layer and some electronics on top of the CMOS sensor, which still gets the color image and wide dynamic range you get today,” says Arbabian. “But then you turn on this other mode and you can do time of flight with the same electronics.”

CMOS cameras are made by the billions each year and typically cost just a few U.S. dollars each, as compared to specialized lidars with price tags of thousands (or tens of thousands) of dollars. Even if adding an optical modulator doubled the price of the CMOS camera, it still could open up new markets for depth-sensing systems, thinks Arbabian. “Think about applications for security cameras, or for virtual- and augmented-reality headsets,” he says. “3D perception could add hand-gesture detection for smartphones.”

The Stanford team is now building on its proof-of-concept demonstration, aiming to increase the frequency of the system’s operation and improve the modulation to boost its accuracy. Atalar estimates the technology is still one or two years away from being spun out for commercial development.

It could find the lidar market a little different when it finally gets there. Last year, Sony announced that it was building the optical switching for a time-of-flight lidar system directly onto a single imaging chip. Single-photon avalanche diode (SPAD) pixels are stacked above a distance-measuring processing circuit, giving an effective resolution of 0.1 megapixel.

That’s not terribly detailed, but the chip is expected to cost only around US $120 and is likely to benefit from the price and performance pathway of Moore’s Law. “Silicon is relatively cheap,” says Ralf Muenster, vice president of business development and marketing at SiLC Technologies, a startup building its own compact, low-power lidar. “So putting things on silicon is quite attractive nowadays, especially with imagers. Manufacturing Stanford’s modulator is not going to be as simple as silicon.”

SiLC is pursuing a fully integrated vision chip using frequency modulated continuous wave (FMCW) lidar. This highly accurate depth-sensing technology has the advantage of also capturing the velocity of each object in a scene, which could smooth its use in safety-critical applications like automated driving.

As lidars slim down and scale up, the days of pure two-dimensional image sensing seem numbered. You probably saw your first lidar system on a so-called self-driving car. You might see your next one on your phone.

28 Apr. 2022 Update: The original story described “SLiC”—the correct name for the company is SiLC Technologies.

Mark Harris is an investigative science and technology reporter based in Seattle, with a particular interest in robotics, transportation, green technologies, and medical devices. He’s on Twitter at @meharris and email at mark(at)meharris(dot)com. Email or DM for Signal number for sensitive/encrypted messaging.