Lidar is increasingly giving 3D scanning capabilities to autonomous cars, drones, and robots, as well as smartphones, but it typically employs moving parts that limit how much it can shrink. Now scientists have developed a solid-state lidar chip the size of a fingertip that could one day lead to ubiquitous 3D sensors, a new study finds.

A lidar sensor uses light much as radar uses radio waves—it shines a laser onto a location and analyzes how long reflected pulses take to return in order to calculate distances and generate a 3D map of a place. To capture this time-of-flight data, a lidar sensor has to steer a laser beam across a scanned area, typically using mechanical parts that can make the device slow, bulky, unstable, and expensive.
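The time-of-flight arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration of the pulsed ranging idea, not the signal processing of any particular sensor; the function names are hypothetical.

```python
# Speed of light in vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance in meters for a measured round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for a target at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    t = round_trip_from_distance(10.0)
    print(f"10 m target: round trip of {t * 1e9:.1f} ns")
    print(f"recovered distance: {distance_from_round_trip(t):.3f} m")
```

A target 10 meters away corresponds to a round trip of about 67 nanoseconds, which gives a sense of the timing precision a lidar receiver needs.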

Recently, though, scientists have begun exploring solid-state lidar sensors. One strategy involves optical phased arrays, which steer the light emitted by an array of antennas by shifting the phases of the waves, that is, the degree to which the waves are in step with each other. However, these devices often need large antennas for high performance, and their power consumption can prove high. Controlling the phase of a large number of tightly packed elements can also prove challenging, which can limit the resolution and the field of view of these devices.
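To make the phase-steering idea concrete, here is a small Python sketch based on the textbook model of a uniform linear array, in which a constant phase step between neighboring antennas tilts the beam. The 1.55-micrometer wavelength and the antenna pitches are hypothetical values chosen for illustration; they do not describe any specific device.

```python
import math


def steering_angle_deg(phase_step_rad: float, wavelength_m: float, pitch_m: float) -> float:
    """Beam angle from broadside for a uniform linear array.

    Textbook relation: sin(theta) = wavelength * phase_step / (2 * pi * pitch).
    """
    s = wavelength_m * phase_step_rad / (2.0 * math.pi * pitch_m)
    if abs(s) > 1.0:
        raise ValueError("phase step does not correspond to a real steering angle")
    return math.degrees(math.asin(s))


def unambiguous_fov_deg(wavelength_m: float, pitch_m: float) -> float:
    """Full steering range before a neighboring grating lobe takes over.

    Lobes are spaced by wavelength / pitch in sin(theta), so the beam can be
    steered unambiguously only within +/- wavelength / (2 * pitch).
    """
    ratio = wavelength_m / (2.0 * pitch_m)
    if ratio >= 1.0:
        return 180.0  # pitch of half a wavelength or less: full hemisphere
    return 2.0 * math.degrees(math.asin(ratio))


if __name__ == "__main__":
    WAVELENGTH_M = 1.55e-6  # hypothetical operating wavelength
    for pitch_m in (1.0e-6, 2.0e-6, 4.0e-6):  # hypothetical antenna pitches
        fov = unambiguous_fov_deg(WAVELENGTH_M, pitch_m)
        print(f"pitch {pitch_m * 1e6:.1f} um -> unambiguous range {fov:.1f} deg")
```

In this simplified model, widening the spacing between antennas narrows the usable steering range, which is one way to see why tight packing matters for field of view.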

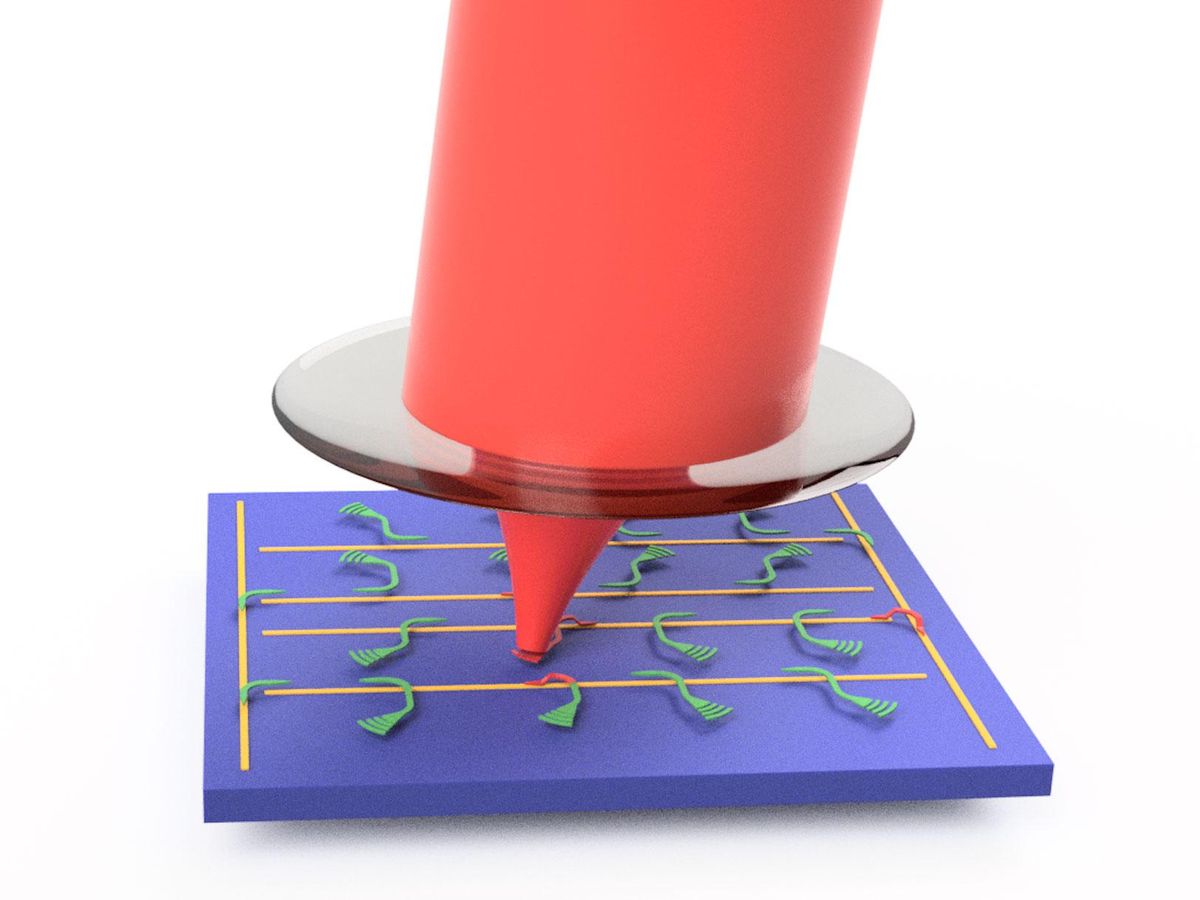

Another strategy for solid-state lidar involves what are called focal-plane switch arrays. These involve a chip divided into pixels, each with one antenna devoted to a patch of space in the sensor’s field of view. Optical switches in the chip route light down a series of channels to each antenna, which emits and receives laser pulses. This array of antennas is placed behind a lens that focuses light going to and from the device.
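The mapping from a pixel to a patch of the field of view can be sketched with an idealized thin-lens model, in which an antenna's offset from the optical axis sets the direction of its beam. The sketch below borrows the 128-by-128 array size and 55-micrometer pixel pitch reported for the new chip; the 5-millimeter focal length and the function name are assumptions made for illustration, and the image inversion of a real lens is ignored.

```python
import math

N_PIXELS = 128          # pixels per side, as reported for the new chip
PITCH_M = 55e-6         # pixel pitch in meters, as reported for the new chip
FOCAL_LENGTH_M = 5e-3   # hypothetical lens focal length (assumption)


def pixel_to_angles_deg(row: int, col: int,
                        n: int = N_PIXELS,
                        pitch_m: float = PITCH_M,
                        focal_length_m: float = FOCAL_LENGTH_M) -> tuple[float, float]:
    """Return (horizontal, vertical) beam angles in degrees for one pixel.

    Idealized model: the array sits in the focal plane of a thin lens, so a
    pixel offset x from the optical axis maps to the angle atan(x / f).
    """
    if not (0 <= row < n and 0 <= col < n):
        raise IndexError("pixel index outside the array")
    center = (n - 1) / 2.0  # optical axis passes through the array center
    x_m = (col - center) * pitch_m
    y_m = (row - center) * pitch_m
    theta_x = math.degrees(math.atan2(x_m, focal_length_m))
    theta_y = math.degrees(math.atan2(y_m, focal_length_m))
    return theta_x, theta_y


if __name__ == "__main__":
    for pixel in ((0, 0), (63, 64), (127, 127)):
        tx, ty = pixel_to_angles_deg(*pixel)
        print(f"pixel {pixel}: {tx:+.2f} deg horizontal, {ty:+.2f} deg vertical")
```

With these assumed values, the corner pixels point roughly 35 degrees off axis. Steering the beam then amounts to choosing which pixel the switches send light to, with no phase control required.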

“Our lidar is very similar to digital cameras,” says study senior author Ming Wu, an electrical engineer at the University of California, Berkeley. “We simply replace the CMOS image sensor with our optical switch array. Instead of just capturing natural light entering the camera, each pixel of our lidar can emit a laser light and receive the reflected light from the target. This enables us to measure the target distance pixel by pixel, extending 2D images to 3D. The similarity means we can potentially make lidars as compact as today’s smartphone cameras.”

However, until now, the size of the switches and the high power they needed limited such lidar sensors to 512 pixels or fewer. Now Wu and his colleagues have developed a focal-plane switch array lidar with 16,384 pixels, the highest resolution for solid-state lidar to date, which is integrated onto a 110-square-millimeter silicon photonic chip.

A key advance enabling the new device is the use of switches based on microelectromechanical systems (MEMS). These offer small footprints, low power consumption, and fast switching times.

The 128-by-128 array of antennas can aim a laser beam in 16,384 distinct directions, covering a wide field of view of 70 degrees. In comparison, the horizontal field of view of human binocular vision spans about 120 to 140 degrees. “Seventy degrees is very close to the view angles of smartphone cameras,” Wu says.

In experiments, the device achieved a resolution of 1.7 centimeters at a range of about 10 meters. The device can operate at speeds of 100 kilohertz, which the researchers say is suitable for lidar scanners.
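For a rough sense of what a 100-kilohertz rate means for scanning, the Python sketch below assumes that the figure is the rate at which pixels can be addressed and that only one pixel is active at a time. Both are simplifying assumptions rather than details from the study, and the function names are hypothetical.

```python
def full_scan_time_s(n_pixels: int, pixel_rate_hz: float) -> float:
    """Time to visit every pixel once, one pixel per addressing cycle."""
    if pixel_rate_hz <= 0:
        raise ValueError("pixel rate must be positive")
    return n_pixels / pixel_rate_hz


def frame_rate_hz(n_pixels: int, pixel_rate_hz: float) -> float:
    """Frames per second when each frame visits n_pixels pixels."""
    return 1.0 / full_scan_time_s(n_pixels, pixel_rate_hz)


if __name__ == "__main__":
    PIXEL_RATE_HZ = 100e3  # assumed per-pixel addressing rate

    full_array = 128 * 128   # every pixel on the chip
    sub_region = 32 * 32     # hypothetical region of interest

    print(f"full array: {frame_rate_hz(full_array, PIXEL_RATE_HZ):.1f} frames/s")
    print(f"32x32 region: {frame_rate_hz(sub_region, PIXEL_RATE_HZ):.1f} frames/s")
```

Under these assumptions a full 16,384-pixel sweep takes about 0.16 seconds, while restricting the scan to a smaller region of interest raises the frame rate proportionally.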

The new sensor does have a relatively low lateral resolution of 0.13 degrees, note Hongyan Fu at Tsinghua University and Qian Li at Peking University, both in Shenzhen, China, in a commentary on the new study. This will restrict its applicability for long-distance detection, a shortcoming of many focal-plane switch array lidar systems, they say. They add that increasing the chip size or shrinking the footprint of each pixel can improve this resolution, likely by further optimizing the MEMS switches.
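A short Python calculation shows why an angular resolution matters more at long range: the same angle spans a wider patch of the scene the farther away the target is. This is plain geometry applied to the 0.13-degree figure; the function name and the sample ranges are illustrative choices.

```python
import math


def lateral_spacing_m(range_m: float, angular_resolution_deg: float) -> float:
    """Width at a given range subtended by one angular resolution element."""
    half_angle_rad = math.radians(angular_resolution_deg) / 2.0
    return 2.0 * range_m * math.tan(half_angle_rad)


if __name__ == "__main__":
    ANGULAR_RESOLUTION_DEG = 0.13  # lateral resolution cited in the commentary
    for range_m in (10.0, 50.0, 100.0):  # illustrative target distances
        width_cm = lateral_spacing_m(range_m, ANGULAR_RESOLUTION_DEG) * 100.0
        print(f"{range_m:5.0f} m -> {width_cm:.1f} cm")
```

At 10 meters, 0.13 degrees corresponds to about 2.3 centimeters across; at 100 meters it grows to about 23 centimeters, coarse enough to blur small or distant objects.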

The scientists envision shrinking the footprint of each pixel from the current 55 by 55 micrometers down to 10 by 10 micrometers and increasing the chip size to 1 by 1 centimeter for a megapixel solid-state lidar. They note that fields of view of 180 degrees or more are possible with fisheye lenses.
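The megapixel figure follows from simple arithmetic, sketched below in Python. The calculation assumes, as a simplification, that the entire chip area is tiled with square pixels, ignoring any space needed for routing or other circuitry; the function name is hypothetical.

```python
def pixels_on_square_chip(chip_side_um: int, pixel_pitch_um: int) -> int:
    """Number of square pixels that tile a square chip, ignoring overhead."""
    if chip_side_um <= 0 or pixel_pitch_um <= 0:
        raise ValueError("dimensions must be positive")
    per_side = chip_side_um // pixel_pitch_um  # whole pixels per side
    return per_side * per_side


if __name__ == "__main__":
    # Current device: 128 pixels per side at a 55-micrometer pitch.
    array_side_um = 128 * 55
    print(f"current array: {array_side_um / 1000:.2f} mm per side, {128 * 128} pixels")

    # Envisioned device: 10-micrometer pixels on a 1-centimeter chip.
    envisioned = pixels_on_square_chip(chip_side_um=10_000, pixel_pitch_um=10)
    print(f"envisioned array: {envisioned} pixels")
```

The current array works out to about 7 millimeters on a side, while 10-micrometer pixels on a 1-centimeter chip give 1,000 pixels per side, or one million in total.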

“The pixel size of our lidar, 50 micrometers, is about the same as the first CMOS image sensor when it was invented 30 years ago,” Wu says. “We expect the resolution of our lidar to increase as technology advances, just like CMOS image sensors. Hopefully, it will take much less than 30 years to scale it to megapixel resolution.”

The researchers note that their device can be mass-produced using standard semiconductor processes in commercial CMOS foundries. “CMOS cameras are ubiquitous because they are small and cheap. Solid-state lidars the size of smartphone cameras will enable many new applications,” Wu says. “They will enable robots to interact more precisely with humans, and with one another.”

In addition to self-driving cars and driving assistance, these lidar sensors “can be used in self-navigating drones; home security drones will be an interesting application,” Wu says. “Some sweeping robots already have some rudimentary lidars; high-resolution lidar will provide 3D maps of your house with much higher precision. Other applications include mobile 3D sensing, including augmented reality.”

The scientists detailed their findings online 9 March in the journal Nature.

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.