Creating a Prosthetic Hand That Can Feel

DARPA’s HAPTIX program aims to develop a prosthetic hand that’s just as capable as the original

Wearing a blindfold and noise-canceling headphones, Igor Spetic gropes for the bowl in front of him, reaches into it, and picks up a cherry by its stem. He uses his left hand, which is his own flesh and blood. His right hand, though, is a plastic and metal prosthetic, the consequence of an industrial accident. Spetic is a volunteer in our research at the Louis Stokes Cleveland Veterans Affairs Medical Center, and he has been using this “myoelectric” device for years, controlling it by flexing the muscles in his right arm. The prosthetic, typical of those used by amputees, provides only crude control. As we watch, Spetic grabs the cherry between his prosthetic thumb and forefinger so that he can pull off the stem. Instead, the fruit bursts between his fingers.

Next, my colleagues and I turn on the haptic system that we and our partners have been developing at the Functional Neural Interface Lab at Case Western Reserve University, also in Cleveland. Previously, surgeons J. Robert Anderson and Michael Keith had implanted electrodes in Spetic’s right forearm, which now make contact with three nerves at 20 locations. Stimulating different nerve fibers produces realistic sensations that Spetic perceives as coming from his missing hand: When we stimulate one spot, he feels a touch on his right palm; another spot produces sensation in his thumb, and so on.

To test whether such sensations would give Spetic better control over his prosthetic hand, we put thin-film force sensors in the device’s index and middle fingers and thumb, and we use the signals from those sensors to trigger the corresponding nerve stimulation. Again we watch as Spetic grasps another cherry. This time, his touch is delicate as he pulls off the stem without damaging the fruit in the slightest.
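A minimal sketch of how force readings might drive stimulation, assuming that intensity scales linearly with force between a perceptual threshold and a maximum comfortable level rather than simply switching on. The sensor names, channel labels, full-scale force, and microamp values are all hypothetical placeholders, not the lab's calibration data.

```python
from dataclasses import dataclass


@dataclass
class ChannelMap:
    """Hypothetical link between one fingertip sensor and one nerve-cuff contact."""
    channel: str          # hypothetical contact label
    threshold_ua: float   # lowest perceivable intensity, microamps (assumed)
    max_ua: float         # highest comfortable intensity, microamps (assumed)


FORCE_MAX_N = 10.0  # assumed full-scale force of the thin-film sensor, newtons

# Hypothetical mapping: each instrumented digit drives a contact that
# evokes a sensation in the same digit.
SENSOR_TO_CHANNEL = {
    "thumb": ChannelMap("median-3", threshold_ua=200.0, max_ua=600.0),
    "index": ChannelMap("median-5", threshold_ua=250.0, max_ua=700.0),
    "middle": ChannelMap("median-7", threshold_ua=180.0, max_ua=550.0),
}


def stim_amplitude(force_n: float, ch: ChannelMap) -> float:
    """Return a stimulation amplitude (microamps) for one reading; 0 means off."""
    if force_n <= 0.0:
        return 0.0  # no contact, no stimulation
    frac = min(force_n, FORCE_MAX_N) / FORCE_MAX_N  # clip at full scale
    return ch.threshold_ua + frac * (ch.max_ua - ch.threshold_ua)


def stimulation_commands(forces: dict[str, float]) -> dict[str, float]:
    """Map each sensor reading to an amplitude on its paired channel."""
    return {
        SENSOR_TO_CHANNEL[name].channel: stim_amplitude(f, SENSOR_TO_CHANNEL[name])
        for name, f in forces.items()
    }
```

Starting at threshold rather than at zero means that even the lightest contact produces something the user can feel, which is the point of the feedback.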

In our trials, he’s able to perform this task 93 percent of the time when the haptic system is turned on, versus just 43 percent with the haptics turned off. What’s more, Spetic reports feeling as though he is grabbing the cherry, not just using a tool to grab it. As soon as we turn the stimulation on, he says, “It is my hand.”

Restoration Hardware: Igor Spetic (standing), who lost his right hand in an industrial accident, has been working with author Dustin J. Tyler (seated) to develop an experimental haptic system that lets Spetic feel sensations in his missing hand.

Mike McGregor

Eventually, we hope to engineer a prosthesis that is just as capable as the hand that was lost. Our more immediate goal is to get so close that Spetic might forget, even momentarily, that he has lost a hand. Right now, our haptic system is rudimentary and can be used only in the lab: Spetic still has wires sticking out of his arm that connect to our computer during the trials, allowing us to control the stimulation patterns. Nevertheless, this is the first time a person without a hand has been able to feel a variety of realistic sensations in the missing limb for more than a few weeks. We’re now working toward a fully implantable system, which we hope to have ready for clinical trials within five years.

What would adding a sense of touch to prosthetics do? Right now, people with prostheses typically can use their fake limbs only for tasks that don’t require precision, such as bracing and holding. The sensory feedback from our haptic system would improve control and confidence, allowing greater use of the prosthesis for the many small tasks of daily life.

Beyond that, we hope to restore one of the most basic forms of human contact. Imagine what it must be like to lose your sense of touch, which gives us such a profound sense of connection to others. When we ask Spetic and other prosthetic wearers how to improve their mechanical limbs, they universally say they want to hold a loved one’s hand and really feel it. Our technology should one day enable them to achieve this very human goal.

I’ve spent my entire career studying the marriage between human and machine. My work at the intersection of biomedical engineering and neural engineering has driven me to seek the answers to some basic questions: How can electronic circuits speak to the nervous system in a way that the nervous system will understand? How can we use that capability to restore a sweeping range of sensations to someone who has lost a hand? And how can that technology be used to enhance and augment other people’s lives?

The past few decades have seen remarkable advances in the field, including better hardware that can be implanted in the brain or body and better software that can understand and mimic the natural neural code. In that code, electrical impulses in the nervous system convey information between brain cells or along the neurons in the peripheral nerves that stretch throughout the body. These signals drive the actuators of the body, such as the muscles, and they provide feedback in the form of sensation, limb position, muscle force, and so on.

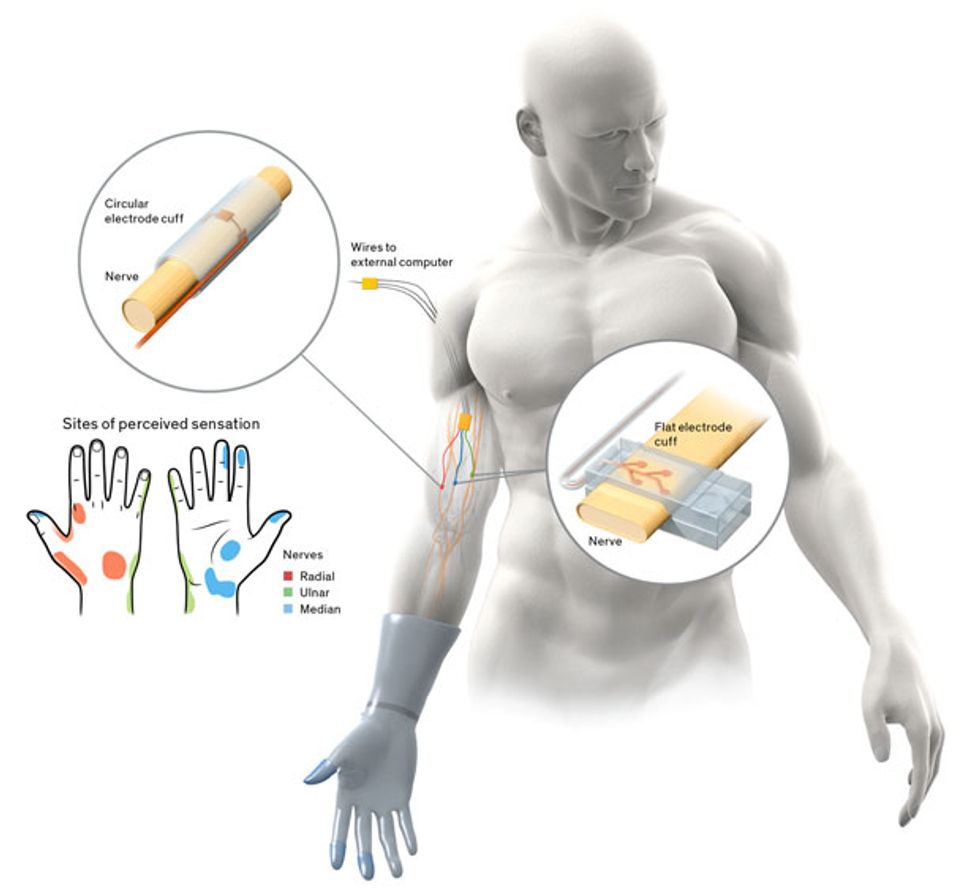

By inserting electrodes directly into muscles or wrapping them around the nerves that control the contraction of the muscles, we can send commands to those electrodes that roughly replicate the signals associated with moving a hand, standing up, or lifting a foot, for example. More recent efforts are aimed at understanding and restoring the sensory system, through funding from the U.S. Department of Veterans Affairs and the Defense Advanced Research Projects Agency’s Hand Proprioception and Touch Interfaces (HAPTIX) program.

Our work on haptic interfaces falls under both of these programs, but rather than driving muscles, it focuses on restoring the sensory signals from the missing limb to the brain. Engineering such an interface is difficult because it has to deliver precise patterns of stimulation to the person’s peripheral nerves without damaging or otherwise altering them. It also must function reliably for years within the harsh environment of the body.

There are several approaches to designing an implanted interface. The least invasive is to embed electrodes in a muscle, near the point where the target nerve enters that muscle. Such systems have been used to restore function following spinal-cord injury, stroke, and other forms of neurological damage. The body tolerates the electrodes well, and surgically replacing them is relatively easy. When the electrodes need to activate a muscle, however, they often require a current of up to 20 milliamperes, about the same amount you get when you shuffle across a carpet and get “shocked”; even then, the muscle isn’t always completely activated.

The most invasive approach involves inserting electrodes deep into the nerve. Placing the stimulating contacts so close to the target axons—the parts of nerve cells that conduct electrical impulses—means that less current is required and that very small groups of axons can be selectively activated. But the body tends to reject foreign materials placed within the protective layers of its nerves. In animal experiments, the normal inflammatory process often pushes these electrodes out of the nerve.

Somewhere between these two approaches are systems that encircle the nerve and place electrical contacts on the surface of the nerve. Simple systems that stimulate just one site on one nerve are commercially available to treat epilepsy and to help stroke patients speak and swallow. More complicated, multiple-channel versions have been used reliably for nearly a decade in clinical trials to restore upper- and lower-extremity function following a spinal-cord injury.

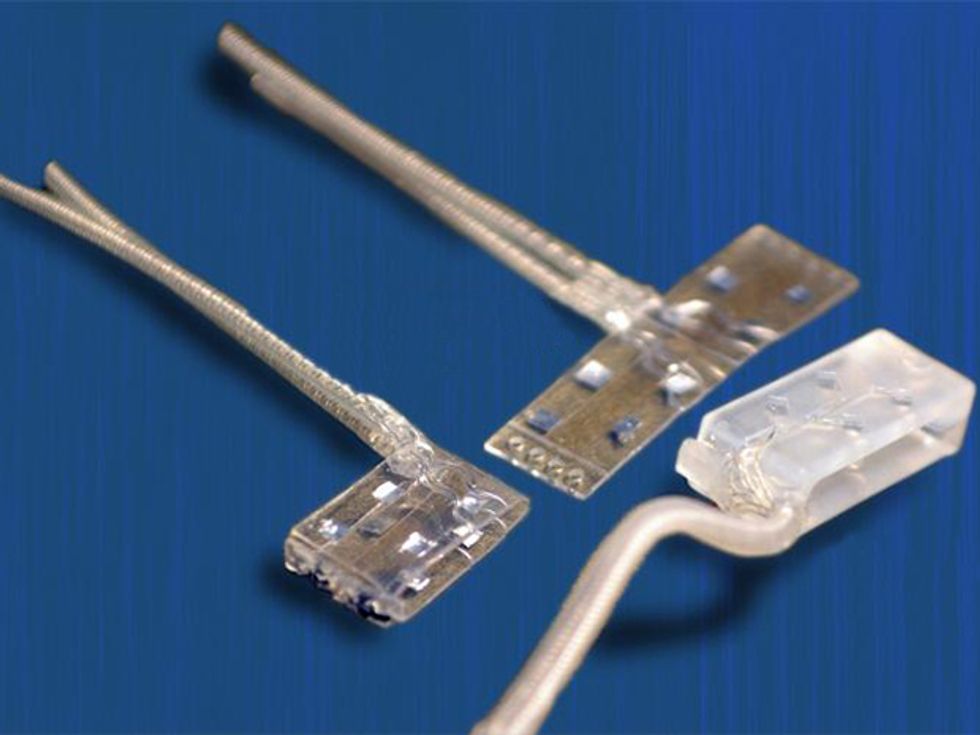

Since the late 1990s, my group has been working on such encircling electrodes, also known as nerve cuffs. One early problem we tackled was how to increase access to the nerve without actually penetrating it. The small surface area and cylindrical shape of a traditional electrode cuff weren’t well suited to the task. We therefore flattened out the nerve cuff so that it fit around an oblong cross section of the nerve.
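Why flattening helps can be sketched with a simple geometric model, assuming the nerve is reshaped from a circle into an ellipse of the same cross-sectional area (an idealization; real nerves are neither). In a round nerve of radius r, the deepest axon lies r below the surface. In an equal-area ellipse with semi-axes a ≥ b, so that ab = r², no interior point lies more than b from the surface, while the perimeter available for contacts grows. The 2-millimeter radius and the aspect ratios are illustrative values, and the perimeter uses Ramanujan's approximation.

```python
import math


def ellipse_perimeter(a: float, b: float) -> float:
    """Ramanujan's first approximation to an ellipse's perimeter."""
    return math.pi * (3 * (a + b) - math.sqrt((3 * a + b) * (a + 3 * b)))


def flattened_geometry(radius_mm: float, aspect: float) -> tuple[float, float]:
    """Reshape a circular nerve into an equal-area ellipse with a/b = aspect.

    Returns (max depth of any axon below the surface, perimeter), both in mm.
    The deepest interior point is the center, which lies b from the boundary.
    """
    # Equal area: pi*a*b = pi*r^2 with a = aspect*b  ->  b = r / sqrt(aspect)
    b = radius_mm / math.sqrt(aspect)
    a = aspect * b
    return b, ellipse_perimeter(a, b)


for aspect in (1.0, 2.0, 4.0):
    depth, perim = flattened_geometry(radius_mm=2.0, aspect=aspect)
    print(f"aspect {aspect:.0f}:1  max depth {depth:.2f} mm  perimeter {perim:.2f} mm")
```

At a 4:1 aspect ratio, the deepest axon sits half as far from the surface as it does in the round nerve, so surface contacts can reach central fibers with less current and without penetrating the nerve.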

In 2014, we unveiled the latest version of the flattened cuff, which has eight contact points, each connected to a different channel for stimulation. To date, we’ve implanted our eight-channel cuff in a handful of subjects. Spetic, the cherry-plucking volunteer, has the flat electrode cuffs placed around the median and ulnar nerves, two of the three main nerves in his arm. He has a traditional circular electrode placed around the radial nerve. This provides a total of 20 stimulation channels in his forearm: eight each on the median and ulnar nerves and four on the radial nerve.
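The channel layout in Spetic’s forearm can be written down as a small inventory that makes the 8 + 8 + 4 = 20 arithmetic explicit. The data structure, field names, and channel labels are illustrative, not the lab’s software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cuff:
    nerve: str
    design: str    # "flat" (flattened cuff) or "round" (traditional cuff)
    contacts: int  # number of independent stimulation channels


# Spetic's implant as described in the text.
IMPLANT = [
    Cuff("median", "flat", 8),
    Cuff("ulnar", "flat", 8),
    Cuff("radial", "round", 4),
]


def channel_ids(cuffs: list[Cuff]) -> list[str]:
    """Enumerate one hypothetical label per contact, e.g. 'median-1' ... 'radial-4'."""
    return [f"{c.nerve}-{i}" for c in cuffs for i in range(1, c.contacts + 1)]


total = sum(c.contacts for c in IMPLANT)
print(total)  # 20
```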

The first time Spetic tested our system, we didn’t know whether any of the channels would actually translate to different sensations or different locations. Anxiously, we turned it on and activated a contact on Spetic’s median nerve. “Wow!” he said. “That’s the tip of my thumb. That’s the first time I’ve felt my hand since the injury.” It was one of those moments a researcher lives for.

Further testing revealed that our 20 stimulation points created sensations at 19 places on Spetic’s missing hand, including spots on the left and right sides of his palm, the back of his hand, his wrist, his thumb, and his fingertips.

The next generation of our cuff will have four times as many contacts. The more channels, the more selectively we’ll be able to access small groups of axons and provide a more useful range of sensations. In addition to touch, we’d like to produce sensations like temperature, joint position (known as proprioception), and even pain. Despite its negative connotation, pain is an important protective mechanism. During our tests, one stimulation channel did cause a painful sensation. Eventually, we would like to include such protective mechanisms.

For now, we are exploring the other channels and continuing to work with Spetic, who has had the implanted system since May 2012. It’s still working well. When the system is turned off, he says, he doesn’t even realize he has anything implanted in his body.

Of course, triggering a basic sensation is one thing; controlling how that sensation feels is another. It’s analogous to talking: You need to generate sound, but to be understood, that sound has to come out in distinct patterns that can be interpreted as language. In our first experiments, we excited the nerves with regular pulses at a constant strength. This regular stimulation resulted in a tingling sensation called paresthesia—the pins-and-needles feeling of a foot that’s fallen asleep. So we were generating sound but not speech.

Such perfectly regular, uniform impulses aren’t part of the nervous system’s repertoire when it’s operating properly: The only time we see them in the brain is during abnormal activity, such as an epileptic seizure. We think this kind of stimulation causes a group of several hundred neurons to fire together, creating an unusual signal that the brain interprets as a generic sensation of tingling.

Complete Control: With the haptics in his prosthetic hand turned on, Spetic can perform delicate tasks like plucking a grape, grasping a flower petal, and unscrewing a cap. “It is my hand,” he says.

In our next experiments we varied the pattern of electric pulses that we sent up the nerves to the brain. We tried changing the timing of pulses and interspersing the sequence with pairs of pulses. Neither of these tests made a significant difference. And because there were so many variables, it proved difficult and time-consuming to understand how changing the pattern of pulses affected what Spetic felt.
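The baseline pattern and the two timing variations can be sketched as lists of pulse times. The 100-hertz base rate, the ±30 percent interval jitter, the 5-millisecond doublet spacing, and the every-fifth-pulse doublet schedule are assumptions chosen for illustration; the article does not give the parameters that were actually used.

```python
import random


def regular_train(rate_hz: float, duration_s: float) -> list[float]:
    """Evenly spaced pulse times (s): the constant baseline that felt like tingling."""
    period = 1.0 / rate_hz
    n = int(round(duration_s * rate_hz))
    return [i * period for i in range(n)]


def jittered_train(rate_hz: float, duration_s: float, jitter_frac: float,
                   seed: int = 0) -> list[float]:
    """Same mean rate, but each interval is randomly stretched or shrunk."""
    rng = random.Random(seed)
    period = 1.0 / rate_hz
    times, t = [], 0.0
    while t < duration_s:
        times.append(t)
        t += period * (1.0 + rng.uniform(-jitter_frac, jitter_frac))
    return times


def with_doublets(times: list[float], every: int, gap_s: float) -> list[float]:
    """Insert a second pulse gap_s after every `every`-th pulse (a doublet)."""
    out = []
    for i, t in enumerate(times):
        out.append(t)
        if i % every == 0:
            out.append(t + gap_s)
    return out


base = regular_train(rate_hz=100.0, duration_s=1.0)
jit = jittered_train(rate_hz=100.0, duration_s=1.0, jitter_frac=0.3)
dbl = with_doublets(base, every=5, gap_s=0.005)
print(len(base), len(dbl))  # 100 120
```

Note that all three patterns keep every pulse at the same strength; only the timing changes.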

To move the experiment forward, I ended up testing many of the patterns on myself. Using a clinically available, noninvasive nerve-stimulation system, a team member placed electrodes on my finger where they could activate a superficial nerve, and then I got my students to “buzz” me with varying patterns. We found that changing the pulse strength in a wavelike pattern, increasing and then decreasing in about a one-second cycle, changed the sensation from tingling to a more natural feeling of pressure—it felt as though something was squeezing my finger.
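The pattern that worked, pulse strength rising and falling over roughly one second, can be sketched as an amplitude envelope applied to a regular pulse train. The raised-cosine envelope shape, the 100-hertz pulse rate, and the amplitude limits (treated here as milliamperes) are illustrative assumptions; the text specifies only a wavelike modulation with a cycle of about one second.

```python
import math


def modulated_amplitudes(rate_hz: float, duration_s: float, cycle_s: float,
                         amp_min: float, amp_max: float) -> list[tuple[float, float]]:
    """Return (time_s, amplitude) for each pulse of a regular train whose
    amplitude follows a raised-cosine wave: amp_min at the start of each
    cycle, amp_max halfway through, and back to amp_min at the end."""
    pulses = []
    n = int(round(duration_s * rate_hz))
    for i in range(n):
        t = i / rate_hz
        phase = (t % cycle_s) / cycle_s                            # 0 -> 1 within a cycle
        envelope = 0.5 * (1.0 - math.cos(2.0 * math.pi * phase))  # 0 -> 1 -> 0
        pulses.append((t, amp_min + envelope * (amp_max - amp_min)))
    return pulses


train = modulated_amplitudes(rate_hz=100.0, duration_s=2.0, cycle_s=1.0,
                             amp_min=0.2, amp_max=1.0)  # amplitudes assumed in mA
peak_t, peak_amp = max(train, key=lambda p: p[1])
```

The pulse timing is identical to the regular baseline; only the strength of each pulse changes from one to the next.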

We were then ready to try the pattern on Spetic. As the stimulation started, he looked confused for a moment, and then he placed the fingers of his remaining hand on his neck. “It doesn’t feel like tingling anymore,” he said. “It’s a pulsing pressure, like I put my fingers on my neck and felt my pulse.” With a little adjustment, we were able to remove the pulsing, and he reported a natural touch, “like someone just laid a finger on my hand.”

We think that the weaker pulses activate fewer of the neurons in the nerve, whereas stronger pulses cause more of them to fire. The variation in the firing rates of the different neurons is part of the neural code that the brain understands. If the pattern we apply resembles a pattern that the brain already knows, it interprets the sensation according to its experience: In effect, the brain says, “Okay, that’s touch.”
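The recruitment idea can be illustrated with a toy model, assuming each axon has a fixed activation threshold and fires on a given pulse only if the pulse amplitude exceeds it. The number of axons, the Gaussian threshold distribution, and the amplitudes are hypothetical. Under constant strength the same population fires together on every pulse, the kind of synchronized volley the authors link to tingling; under a wavelike strength the recruited population grows and shrinks.

```python
import math
import random


def make_thresholds(n_axons: int, mean: float, sd: float, seed: int = 1) -> list[float]:
    """Hypothetical per-axon activation thresholds (same units as amplitude)."""
    rng = random.Random(seed)
    return [max(0.0, rng.gauss(mean, sd)) for _ in range(n_axons)]


def recruited(thresholds: list[float], amplitude: float) -> int:
    """Count the axons whose threshold this pulse amplitude exceeds."""
    return sum(1 for th in thresholds if amplitude > th)


thresholds = make_thresholds(n_axons=500, mean=0.6, sd=0.15)

# Constant strength: every pulse recruits exactly the same population.
constant = [recruited(thresholds, 0.7) for _ in range(10)]

# Wavelike strength (one cycle sampled at 10 pulses): recruitment rises and falls.
amps = [0.2 + 0.8 * 0.5 * (1 - math.cos(2 * math.pi * k / 10)) for k in range(10)]
varying = [recruited(thresholds, a) for a in amps]
```

Because recruitment can only increase with amplitude in this model, the largest population fires at the peak of the wave, mirroring the “weaker pulses activate fewer neurons” explanation above.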

We are now working to understand how more complex patterns can produce more nuanced perceptions of sensation. So far, Spetic has reported feeling textures that he described as Velcro and sandpaper and also feeling objects moving, fluttering, and tapping on his skin. What’s more, Spetic can manipulate fine and delicate objects in a manner that he was unable to do before. He no longer has to rely on vision alone to know how his prosthesis is performing. And he’s far more confident using the prosthesis when he has sensation than when he does not.

So how will all this knowledge help others? Working with our partners at Medtronic and Lawrence Livermore National Laboratory, we are creating a fully implantable stimulation system paired with an advanced anthropomorphic haptic prosthetic. The project aims to have a working device within three years so that it will be ready for clinical trials by the last year of our five-year contract.

Building a sophisticated neural stimulation device that actually works outside the laboratory won’t be easy. The system will need to continuously monitor hundreds of tactile and position sensors on the prosthesis and feed that information back to the implanted stimulator, which then must translate the data into a neural code to be applied to the nerves in the arm. At the same time, our system will determine the user’s intent to move the prosthesis by recording the activity of up to 16 muscles in the residual limb. This information will be decoded, wirelessly transmitted out of the body, and converted to motor-drive commands, which will move the prosthesis. In total, the system will have 96 stimulation channels and 16 recording channels that will need to be coordinated to create motion and feeling. And all of this activity must be carried out with minimal time delays.
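The motor half of that loop, turning muscle activity into a movement command, can be sketched with a simple decoder. It uses the mean absolute value of each recording channel over a short window, a common EMG amplitude feature, and a proportional mapping. Only the 16-channel count comes from the text; the two-muscle open/close scheme, the gain, and the deadband are hypothetical, and a real decoder would likely be far more elaborate.

```python
N_RECORD_CHANNELS = 16  # muscle-recording channels, from the text


def mean_absolute_value(samples: list[float]) -> float:
    """EMG amplitude feature: the average of |x| over a window."""
    return sum(abs(x) for x in samples) / len(samples)


def decode_grip(window: list[list[float]], flexor: int, extensor: int,
                gain: float, deadband: float) -> float:
    """Map one window of 16-channel EMG to a grip-velocity command in [-1, 1].

    Hypothetical scheme: activity on the flexor channel closes the hand,
    activity on the extensor channel opens it, and small differences that
    fall inside the deadband are ignored to avoid drift.
    """
    if len(window) != N_RECORD_CHANNELS:
        raise ValueError(f"expected {N_RECORD_CHANNELS} channels, got {len(window)}")
    drive = mean_absolute_value(window[flexor]) - mean_absolute_value(window[extensor])
    if abs(drive) < deadband:
        return 0.0
    return max(-1.0, min(1.0, gain * drive))  # clip to the motor's command range
```

A positive output would close the hand and a negative one would open it; each window has to be decoded and acted on quickly enough that the motion still feels immediate to the user.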

As we refine our system, we’re trying to find the optimal number of contacts. If we use three flattened electrode cuffs that each have 32 contacts, for example, we could hypothetically provide sensation at 96 points across the hand. So how many channels does a user need to have excellent function and sensation? And how is information across these channels coordinated and interpreted?

To make a self-contained device that doesn’t rely on an external computer, we’ll need miniature processors that can be inserted into the prosthesis to communicate with the implant and send stimulation to the electrode cuffs. The implanted electronics must be robust enough to last years inside the human body and must be powered internally, with no wires sticking out of the skin. We’ll also need to work out the communication protocol between the prosthesis and the implanted processor.
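To illustrate what such a protocol has to carry, here is a hypothetical stimulation-command frame: a sequence number, then one record per active channel (channel index, amplitude, pulse width), protected by a CRC-32 checksum so that the implant can reject corrupted packets. The field layout and sizes are assumptions for illustration, not the design the team is developing; a one-byte channel index is enough for 96 channels.

```python
import struct
import zlib

HEADER = struct.Struct("<HB")   # sequence number (uint16), record count (uint8)
RECORD = struct.Struct("<BHH")  # channel (uint8), amplitude uA (uint16), pulse width us (uint16)
CRC = struct.Struct("<I")       # CRC-32 over header + records


def encode_frame(seq: int, commands: list[tuple[int, int, int]]) -> bytes:
    """Pack (channel, amplitude_ua, pulse_width_us) commands into one frame."""
    body = HEADER.pack(seq & 0xFFFF, len(commands))
    for ch, amp, pw in commands:
        body += RECORD.pack(ch, amp, pw)
    return body + CRC.pack(zlib.crc32(body))


def decode_frame(frame: bytes) -> tuple[int, list[tuple[int, int, int]]]:
    """Unpack a frame, raising ValueError if the checksum does not match."""
    body = frame[:-CRC.size]
    (crc,) = CRC.unpack(frame[-CRC.size:])
    if zlib.crc32(body) != crc:
        raise ValueError("corrupted frame")
    seq, count = HEADER.unpack_from(body, 0)
    records = [RECORD.unpack_from(body, HEADER.size + i * RECORD.size)
               for i in range(count)]
    return seq, records
```

For example, `encode_frame(7, [(3, 400, 100), (45, 550, 200)])` produces a 17-byte frame that `decode_frame` turns back into the same sequence number and commands; the sequence number lets the receiver detect dropped or repeated frames.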

It’s a daunting engineering challenge, but when we succeed, this haptic technology could benefit more than just prosthetic users. Such an interface would allow people to touch things in a way that was never before possible. Imagine an obstetrician feeling a fetus’s heartbeat, rather than just relying on Doppler imaging. Imagine a bomb disposal specialist feeling the wires inside a bomb that is actually being handled by a remotely operated robot. Imagine a geologist feeling the weight and texture of a rock that’s thousands of kilometers away or a salesperson tweeting a handshake to a new customer.

Such scenarios could become reality within the next decade. Sensation tells us what is and isn’t part of us. By extending sensation to our machines, we will expand humanity’s reach—even if that reach is as simple as holding a loved one’s hand.

This article appears in the May 2016 print issue as “Restoring the Human Touch.”

About the Author

Dustin J. Tyler is head of the Functional Neural Interface Lab at Case Western Reserve University in Cleveland, where he creates technology and studies new, direct ways that humans can interact with machines.