Video Friday is your weekly selection of awesome robotics videos, collected by your Automaton bloggers. We’ll also be posting a weekly calendar of upcoming robotics events for the next few months; here’s what we have so far (send us your events!):

ICARSC 2020 – April 15-17, 2020 – [Online Conference]

ICRA 2020 – May 31-June 4, 2020 – [Online Conference]

ICUAS 2020 – June 9-12, 2020 – Athens, Greece

RSS 2020 – July 12-16, 2020 – [Online Conference]

CLAWAR 2020 – August 24-26, 2020 – Moscow, Russia

Let us know if you have suggestions for next week, and enjoy today’s videos.

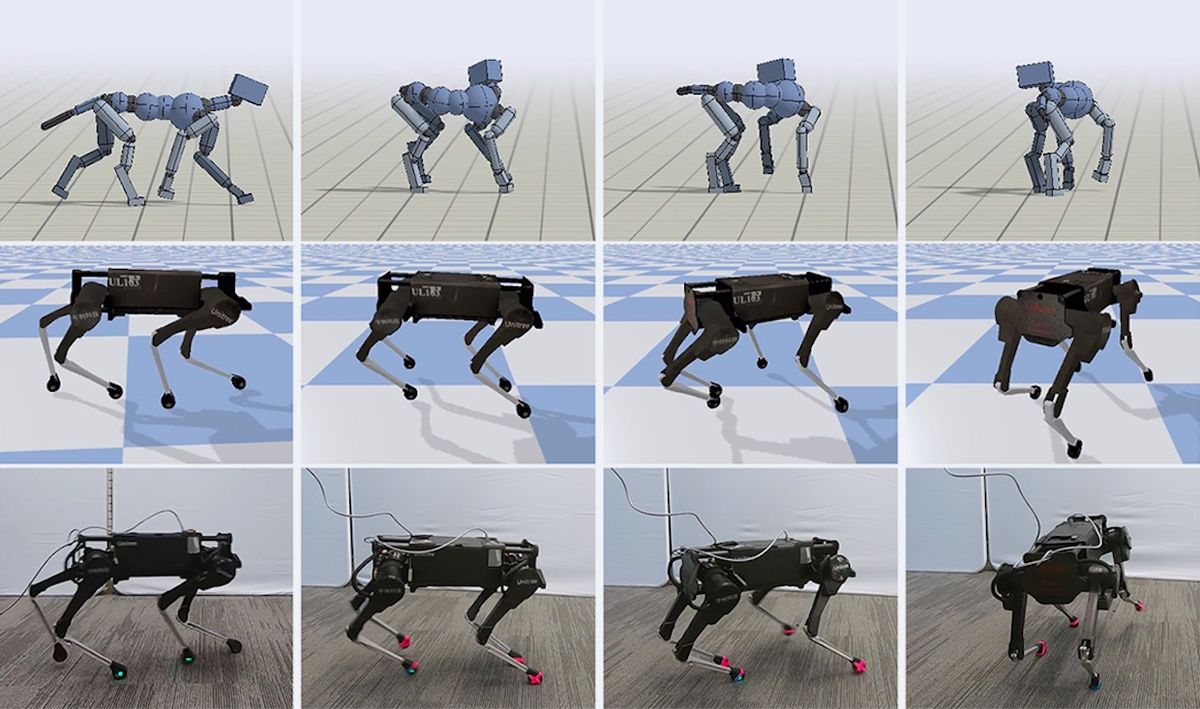

Whether it’s a dog chasing after a ball, or a monkey swinging through the trees, animals can effortlessly perform an incredibly rich repertoire of agile locomotion skills. But designing controllers that enable legged robots to replicate these agile behaviors can be a very challenging task. The superior agility seen in animals, as compared to robots, might lead one to wonder: can we create more agile robotic controllers with less effort by directly imitating animals?

In this work, we present a framework for learning robotic locomotion skills by imitating animals. Given a reference motion clip recorded from an animal (e.g., a dog), our framework uses reinforcement learning to train a control policy that enables a robot to imitate the motion in the real world. Then, by simply providing the system with different reference motions, we are able to train a quadruped robot to perform a diverse set of agile behaviors, ranging from fast walking gaits to dynamic hops and turns. The policies are trained primarily in simulation and then transferred to the real world using a latent space adaptation technique, which can efficiently adapt a policy using only a few minutes of data from the real robot.
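For readers curious how "imitate the motion" becomes something a reinforcement learner can optimize, here is a minimal sketch, not the authors' formulation: a per-frame reward that decays exponentially with the squared error between the robot's joint angles and those of the reference clip, in the style popularized by earlier motion-imitation work such as DeepMimic. The function names, the `scale` value, and the assumption that robot and reference frames are already time-aligned are all hypothetical.

```python
import math
from typing import Sequence

def pose_imitation_reward(robot_joints: Sequence[float],
                          reference_joints: Sequence[float],
                          scale: float = 5.0) -> float:
    """Reward in (0, 1]: exactly 1.0 when the robot's joint angles match the
    reference frame, decaying with squared error. `scale` is a hypothetical
    sharpness parameter, not a value from the paper."""
    if len(robot_joints) != len(reference_joints):
        raise ValueError("joint vectors must have the same length")
    sq_err = sum((q - q_ref) ** 2 for q, q_ref in zip(robot_joints, reference_joints))
    return math.exp(-scale * sq_err)

def episode_return(robot_traj: Sequence[Sequence[float]],
                   reference_clip: Sequence[Sequence[float]],
                   scale: float = 5.0) -> float:
    """Sum of per-frame rewards, assuming the trajectory and the clip are
    already aligned frame by frame (a simplifying assumption)."""
    if len(robot_traj) != len(reference_clip):
        raise ValueError("trajectory and clip must have the same number of frames")
    return sum(pose_imitation_reward(q, q_ref, scale)
               for q, q_ref in zip(robot_traj, reference_clip))

reference = [[0.0, 0.8, -1.6], [0.1, 0.7, -1.5]]  # hypothetical 2-frame clip (radians)
print(episode_return(reference, reference))  # 2.0: perfect tracking earns 1.0 per frame
```

Swapping in a different reference clip changes only the target joint angles, which mirrors the idea that new behaviors come from new reference motions rather than new reward engineering.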

Engineers at the University of California San Diego have developed a new method that doesn’t require any special equipment and works in just minutes to create soft, flexible, 3D-printed robots. The innovation comes from rethinking the way soft robots are built: instead of figuring out how to add soft materials to a rigid robot body, the UC San Diego researchers started with a soft body and added rigid features to key components. The structures were inspired by insect exoskeletons, which have both soft and rigid parts—the researchers called their creations “flexoskeletons.”

[ UCSD ]

Working from home means this is the new normal.

That is a seriously heat-resistant robot arm.

[ YouTube ]

Thanks Aaron!

In this video we present the ongoing development of a simple robotic system that integrates a 60-watt UV germicidal lamp. The system is a four-wheeled robotic rover that navigates autonomously with the help of a LiDAR sensor. This experimental design is intended to examine the potential of such a system for disinfection during this critical period. The key advantage of putting a UV germicidal lamp on a robot is that the robot can position itself at many locations, providing wide disinfection coverage.

This work was carried out with full awareness that it is the health system and medical professionals we all rely on during this crisis. Robots have already been used for disinfection, and we are investigating their potential in this role. In addition, the system is built on a robot our lab already had, and the work was done while #StayingAtHome, as per the official guidelines. This simple test was the only time two lab members had to be together, to coordinate the robot deployment and record the video. They respected 2-meter distancing guidelines and wore PPE.

[ ARL ]

Sorry doggo, some things, robots are just better at.

Using its patented technologies, the Aertos 120-UVC can fly stably inside buildings contaminated with the coronavirus that causes COVID-19, allowing humans to stay safely away from infected areas. Digital Aerolus’ industrial drones do not use GPS or external sensors, enabling them to operate stably in places other drones cannot go, including small and confined spaces. When the drone flies 6 feet above a surface for 5 minutes, it achieves a disinfection rate greater than 99 percent over a surface of more than 2 by 2 meters.

So a 2-by-2-meter surface takes 5 minutes. That's a long time for a drone, so the amount of surface area this thing can disinfect before it needs a recharge must be pretty limited. Maybe a swarm of them, though?
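The back-of-the-envelope math behind that worry: 4 square meters per 5 minutes works out to 0.8 square meters per minute. The sketch below extends that to area covered per battery charge, for one drone or a swarm. Digital Aerolus' flight time isn't stated here, so the 15-minute endurance used in the example is purely hypothetical, and the calculation ignores transit time between patches.

```python
import math

PATCH_SIDE_M = 2.0    # side of the square patch quoted for the Aertos 120-UVC
DWELL_MINUTES = 5.0   # hover time per patch quoted for >99% disinfection

def coverage_rate_m2_per_min(side_m: float = PATCH_SIDE_M,
                             dwell_min: float = DWELL_MINUTES) -> float:
    """Area disinfected per minute of hovering."""
    return side_m ** 2 / dwell_min

def area_per_charge_m2(flight_min: float, num_drones: int = 1,
                       side_m: float = PATCH_SIDE_M,
                       dwell_min: float = DWELL_MINUTES) -> float:
    """Area covered on one charge, counting only fully completed patches.
    flight_min is a hypothetical endurance figure; transit time is ignored."""
    patches_per_drone = math.floor(flight_min / dwell_min)
    return num_drones * patches_per_drone * side_m ** 2

print(coverage_rate_m2_per_min())               # 0.8
print(area_per_charge_m2(15.0))                 # 12.0
print(area_per_charge_m2(15.0, num_drones=4))   # 48.0
```

A swarm scales coverage linearly in this simple model, but so does the number of batteries to swap.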

[ Digital Aerolus ]

Thanks Sarah!

A new autonomous robot developed by engineers at NASA and tested in Antarctica by a team of researchers, including an engineer from The University of Western Australia, is destined for a trip into outer space and could, in the future, search for signs of life in ocean worlds beyond Earth.

[ UWA ]

Robots can be very useful for disinfecting surfaces contaminated with the coronavirus, a task that can be dangerous for humans. At the USC Viterbi Center for Advanced Manufacturing, we have developed ADAMMS, a robot for machine-tending tasks. We are modifying this robot to perform disinfection tasks in public spaces such as offices, labs, schools, hotels, and dorms. We are calling this ADAMMS-UV.

ADAMMS-UV is a semi-autonomous mobile manipulator that uses a UV light wand mounted on a robot arm to reach spaces that a UV source mounted on the mobile base cannot treat. It can use its gripper to open drawers and closets and to manipulate objects, allowing detailed sanitization of hard-to-reach surfaces. ADAMMS-UV is controlled by a remote operator located far away from the risk zone.

[ RROS ]

Highlight videos from both the systems and virtual tracks of the DARPA SubT Urban Circuit.

[ SubT ]

At Kunming No. 3 Middle School and Kunming Dianchi Middle School in Yunnan province, in southwest China, students returning to school after a prolonged absence due to COVID-19 are now greeted by a robot instead of a human. The robot, named AIMBOT, takes students’ temperatures and checks whether they are wearing masks, which helps school staff monitor more efficiently while reducing the risk of cross-infection. Using infrared technology, AIMBOT can measure people’s temperatures with 99 percent accuracy from up to 3.5 meters away, and it can monitor up to 15 people at once.

[ UBTECH ]

EMYS has way more friends than I do and I’m not sure how to feel about that.

[ EMYS ]

Thanks Jan!

In this video we present results on autonomous subterranean exploration inside an unfinished nuclear power plant in Washington, USA, using the ANYmal quadrupedal robot.

[ ETHZ RSL ]

Thanks Marco!

I need one of these mini snack packaging systems for my kitchen. And I won’t need anything else for my kitchen.

[ ABB ]

From February 18 to 27, 2020, we participated in the Urban Circuit of the DARPA Subterranean Challenge, held at the unfinished Satsop Nuclear Power Plant in Washington, USA. Competing together as CTU-CRAS-NORLAB, we took third place overall among 10 participating teams and first place among the teams not funded by DARPA. In this video, we show excerpts of autonomous UAV path planning, exploration, and mapping from the competition.

[ MRS ]

With humankind facing new and increasingly large-scale challenges in the medical and domestic spheres, automation of the service sector carries a tremendous potential for improved efficiency, quality, and safety of operations. This work presents a mobile manipulation system that combines perception, localization, navigation, motion planning and grasping skills into one common workflow for fetch and carry applications in unstructured indoor environments.

[ ASL ]

This video highlights an exploration and mapping system onboard an autonomous aerial vehicle. An overview of the system is presented in simulation using a mesh of a cave in West Virginia. Hardware results are demonstrated at Laurel Caverns, a commercially owned and operated cave in southwestern Pennsylvania, USA.

Few state-of-the-art, high-resolution perceptual modeling techniques quantify the time required to transfer the model over low-bandwidth, high-reliability communication channels such as radio. To bridge this gap, this work compactly represents sensor observations as Gaussian mixture models and maintains a local occupancy grid map for a motion planner that greedily maximizes an information-theoretic objective function. Hardware results are presented in complete darkness, with the autonomous aerial vehicle equipped with a depth camera for mapping, a downward-facing camera for state estimation, and forward- and downward-facing lights.
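Why transfer time favors a compact model comes down to payload arithmetic. The sketch below, which is not the CMU team's encoding, compares the size of a raw 3D point cloud with that of a Gaussian mixture model, assuming 32-bit floats and storing each mixture component as one weight, a 3D mean, and the six unique entries of its symmetric 3-by-3 covariance. The point count, component count, and 100 kbit/s link speed in the example are hypothetical.

```python
FLOAT_BYTES = 4  # assumption: every value is sent as a 32-bit float

def point_cloud_bytes(num_points: int, dim: int = 3) -> int:
    """Raw point cloud: dim coordinates per point."""
    return num_points * dim * FLOAT_BYTES

def gmm_bytes(num_components: int, dim: int = 3) -> int:
    """GMM: per component, 1 mixture weight + dim mean entries
    + dim*(dim+1)/2 unique entries of the symmetric covariance."""
    per_component = 1 + dim + dim * (dim + 1) // 2
    return num_components * per_component * FLOAT_BYTES

def transfer_seconds(num_bytes: int, link_bits_per_s: float) -> float:
    """Idealized transfer time, ignoring protocol overhead and retransmissions."""
    return num_bytes * 8 / link_bits_per_s

LINK_BPS = 100_000                  # hypothetical 100 kbit/s radio link
cloud = point_cloud_bytes(100_000)  # 1,200,000 bytes
gmm = gmm_bytes(200)                # 8,000 bytes
print(transfer_seconds(cloud, LINK_BPS))  # 96.0
print(transfer_seconds(gmm, LINK_BPS))    # 0.64
```

Under these assumptions the mixture model is 150 times smaller than the point cloud; how much geometric fidelity it gives up depends on how many components the scene actually needs.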

[ CMU ]

Our Highly Dexterous Manipulation System is a dual-arm robotic system that includes two highly dexterous mobile manipulator arms, a movable torso that can be mounted onto a variety of mobile platforms, and the capability to perform tasks with human-like strength and dexterity in a variety of indoor and outdoor environments.

[ RE2 ]

If you are a manufacturer ramping up production of mission-critical goods, Robotiq wants to help you deploy a cobot application in two weeks. Two weeks! LET’S GO!

[ Robotiq ]

Thanks Sam!

Another Spot video from Adam Savage.

I’d be making videos like these too, but I don’t have a Spot. YET.

[ Tested ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.

Erico Guizzo is the director of digital innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.