If autonomous vehicles and robots are ever going to be safe and efficient, they need to perceive their surroundings. They also need to be able to predict the behavior of the stuff around them—whether that’s other robots, vehicles, or even people—and plan their paths and make decisions accordingly. In other words, they need machine vision.

Traditionally, machine vision is accomplished with a combination of cameras and sensors such as radar, sonar, and lidar. But machine vision can also make use of heat. “Heat radiation comes from all objects that have nonzero temperatures,” says Zubin Jacob, an electrical and computer engineering professor at Purdue University. “Leaves, trees, plants, buildings—they’re all emitting thermal radiation, but as this is invisible infrared radiation, our eyes and conventional cameras cannot see it.” But because every object in a scene is constantly emitting and scattering thermal radiation, the signals reaching an infrared camera are mixed together. The resulting images lack material specificity: they are hazy and “ghostlike,” with no depth or texture.

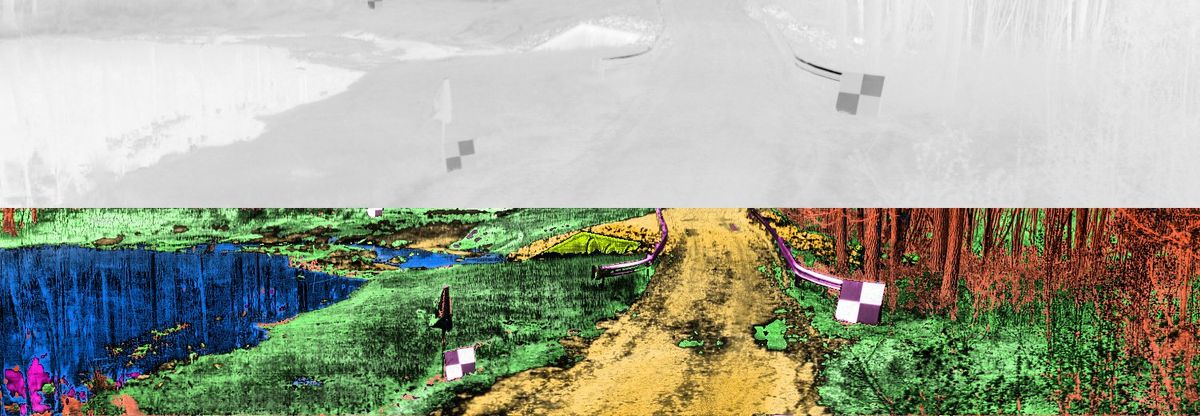

As an alternative to “ghost” images, Jacob and his colleagues at Purdue and Michigan State universities have developed a heat-assisted detection and ranging (HADAR) technique that resolves cluttered heat signals to “see” texture and depth. In a proof-of-concept experiment, they showed that HADAR ranging at night is as accurate as RGB stereovision during the day. Their work was published on 26 July in Nature.

Humans see a rich variety of colors, textures, and depth in daylight or other well-lit settings, but even in dim or dark conditions, plenty of thermal photons are bouncing around. Though humans can’t see them, “this limitation need not apply to machines,” says Jacob. “But we needed to develop new sensors and new algorithms to leverage that information.”

For their experiment, the researchers chose an outdoor space in a marshy area, far from roads and urban illumination. They collected thermal images in the infrared spectrum across almost 100 different frequencies. And just as each pixel in an RGB image is encoded by three visible frequency bands (R for red, G for green, B for blue), each pixel in the experiment was labeled with three thermal-physics attributes, collectively called TeX: temperature (T), material fingerprint or emissivity (e), and texture or surface geometry (X). “T and e are reasonably well understood, but the crucial insight about texture is actually in X,” says Jacob. “X is really the many little suns in your scene that’s illuminating your specific area of interest.”
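To make the roles of T, e, and X more concrete, here is a minimal Python sketch of a single pixel under a deliberately simplified physical model. It is not the HADAR team’s method. It assumes an opaque, gray surface whose reflectivity is 1 − e (Kirchhoff’s law), treats the thermal lighting X as blackbody radiation from surroundings at a known temperature, and samples 100 bands between 8 and 14 micrometers; all of these are assumptions for illustration, as are the hypothetical function names `planck`, `pixel_signal`, and `decompose`. Once T is fixed, the model is linear in e, so e has a closed-form least-squares solution and T can be found with a simple grid search.

```python
import math

# Physical constants (exact SI values)
H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck(wavelength_m, temp_k):
    """Blackbody spectral radiance B(lambda, T) in W / (m^2 * sr * m)."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)


def pixel_signal(wavelengths, temp_k, emissivity, env_temp_k):
    """Toy forward model: emitted heat plus reflected environmental heat.

    Assumption: gray, opaque surface (reflectivity = 1 - emissivity), and the
    thermal lighting X is a blackbody at env_temp_k. Both are simplifications.
    """
    return [
        emissivity * planck(lam, temp_k)
        + (1.0 - emissivity) * planck(lam, env_temp_k)
        for lam in wavelengths
    ]


def decompose(signal, wavelengths, env_temp_k, t_min=250.0, t_max=350.0, step=0.1):
    """Estimate (T, e) for one pixel, assuming the environmental term is known.

    For a candidate T, the model S = X + e * (B(T) - X) is linear in e, so
    e is solved by least squares; the candidate with the smallest residual wins.
    """
    x_env = [planck(lam, env_temp_k) for lam in wavelengths]
    r = [s - x for s, x in zip(signal, x_env)]  # S - X
    best = None
    n_steps = int(round((t_max - t_min) / step))
    for i in range(n_steps + 1):
        t = t_min + i * step
        d = [planck(lam, t) - x for lam, x in zip(wavelengths, x_env)]  # B(T) - X
        denom = sum(v * v for v in d)
        if denom == 0.0:
            continue  # candidate T equals the environment; e is undetermined
        e = sum(a * b for a, b in zip(d, r)) / denom
        resid = sum((b - e * a) ** 2 for a, b in zip(d, r))
        if best is None or resid < best[0]:
            best = (resid, t, e)
    return best[1], best[2]


if __name__ == "__main__":
    bands = [8e-6 + i * (6e-6 / 99) for i in range(100)]  # 100 bands, 8-14 um
    s = pixel_signal(bands, temp_k=300.0, emissivity=0.9, env_temp_k=280.0)
    print(decompose(s, bands, env_temp_k=280.0))
```

On noise-free synthetic data like this, the search lands at or very near T = 300 K and e = 0.9. The real TeX decomposition is much harder, because it must also recover X, which this toy assumes is known.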

The researchers fed all the collected TeX information into a machine-learning algorithm to generate images with depth and texture. They used what they call TeX decomposition to untangle temperature and emissivity and to recover texture from the heat signal. The decluttered T, e, and X attributes were then used to resolve colors in terms of hue, saturation, and brightness, the same way humans see color. “At nighttime, in pitch darkness, our accuracy was the same when we came back in the daytime and did the ranging and detection with RGB cameras,” Jacob says.
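The final step, turning decluttered T, e, and X values into a color image, can be sketched with Python’s standard `colorsys` module. The article does not say which attribute drives which HSV channel, so the mapping below (material class to hue, temperature to saturation, texture to brightness) is a hypothetical choice. The function name `tex_to_rgb`, the temperature normalization range, and the assumption that emissivity has already been classified into a discrete material ID are likewise illustrative.

```python
import colorsys


def tex_to_rgb(temp_k, material_id, texture, n_materials, t_range=(270.0, 320.0)):
    """Map one pixel's (T, e, X) attributes to an 8-bit RGB color via HSV.

    Hypothetical mapping chosen for illustration:
      hue        <- material class (from the emissivity fingerprint)
      saturation <- temperature, normalized to t_range and clipped to [0, 1]
      value      <- texture, assumed already scaled to [0, 1]
    """
    t_lo, t_hi = t_range
    hue = (material_id % n_materials) / n_materials  # spread materials around the color wheel
    sat = min(max((temp_k - t_lo) / (t_hi - t_lo), 0.0), 1.0)
    val = min(max(texture, 0.0), 1.0)
    r, g, b = colorsys.hsv_to_rgb(hue, sat, val)
    return round(r * 255), round(g * 255), round(b * 255)


if __name__ == "__main__":
    # A warm pixel of material 0 with strong texture renders as saturated red.
    print(tex_to_rgb(320.0, material_id=0, texture=1.0, n_materials=4))
```

Spacing material IDs evenly around the hue circle is meant to keep different materials visually distinct, while putting texture in the brightness channel is meant to preserve the surface detail that ghostlike thermal images lose.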

The biggest advantage of HADAR, Jacob adds, is that it is passive, “which means you don’t have to illuminate the scene with a laser, sound waves, or electromagnetic waves. Also, in active approaches like lidar, sonar, or radar, if there are many agents in the scene, there can be a lot of crosstalk between them.”

HADAR is still at a nascent stage, Jacob says. At present, collecting the data takes almost a minute, whereas an autonomous vehicle driving at night would need to image its surroundings in milliseconds. The cameras required for data collection are also bulky, pricey, and power hungry: “Great for a scientific demonstration, but not really for kind of widespread adoption,” according to Jacob. The researchers are working on these problems, and Jacob expects that addressing them will take another few years of research.

However, a few applications are possible right away, Jacob says, such as nocturnal wildlife monitoring. Some medical applications, such as measuring temperature gradients on the human body, likewise don’t require millisecond decision-making.

“The big challenge now is hardware improvements,” Jacob says, “[into which] we can incorporate our advanced algorithms.” The next generation of cameras, he predicts, will be high-speed and compact and will operate at room temperature, which will require completely new materials.

Payal Dhar (she/they) is a freelance journalist on science, technology, and society. They write about AI, cybersecurity, surveillance, space, online communities, games, and any shiny new technology that catches their eye. You can find and DM Payal on Twitter (@payaldhar).