This article is part of our exclusive IEEE Journal Watch series in partnership with IEEE Xplore.

The ocean contains a seemingly endless expanse of territory yet to be explored—and mapping out these uncharted waters globally poses a daunting task. Fleets of autonomous underwater robots could be invaluable tools to help with mapping, but these need to be able to navigate cluttered areas while remaining efficient and accurate.

In a study published 24 June in the IEEE Journal of Oceanic Engineering, a research team describes a novel framework that allows autonomous underwater robots to map cluttered areas with high efficiency and low error rates.

A major challenge in mapping underwater environments is the uncertainty of the robot’s position.

“Because GPS is not available underwater, most underwater robots do not have an absolute position reference, and the accuracy of their navigation solution varies,” explains Brendan Englot, an associate professor of mechanical engineering at the Stevens Institute of Technology, in Hoboken, N.J., who was involved in the study. “Predicting how it will vary as a robot explores uncharted territory will permit an autonomous underwater vehicle to build the most accurate map possible under these challenging circumstances.”

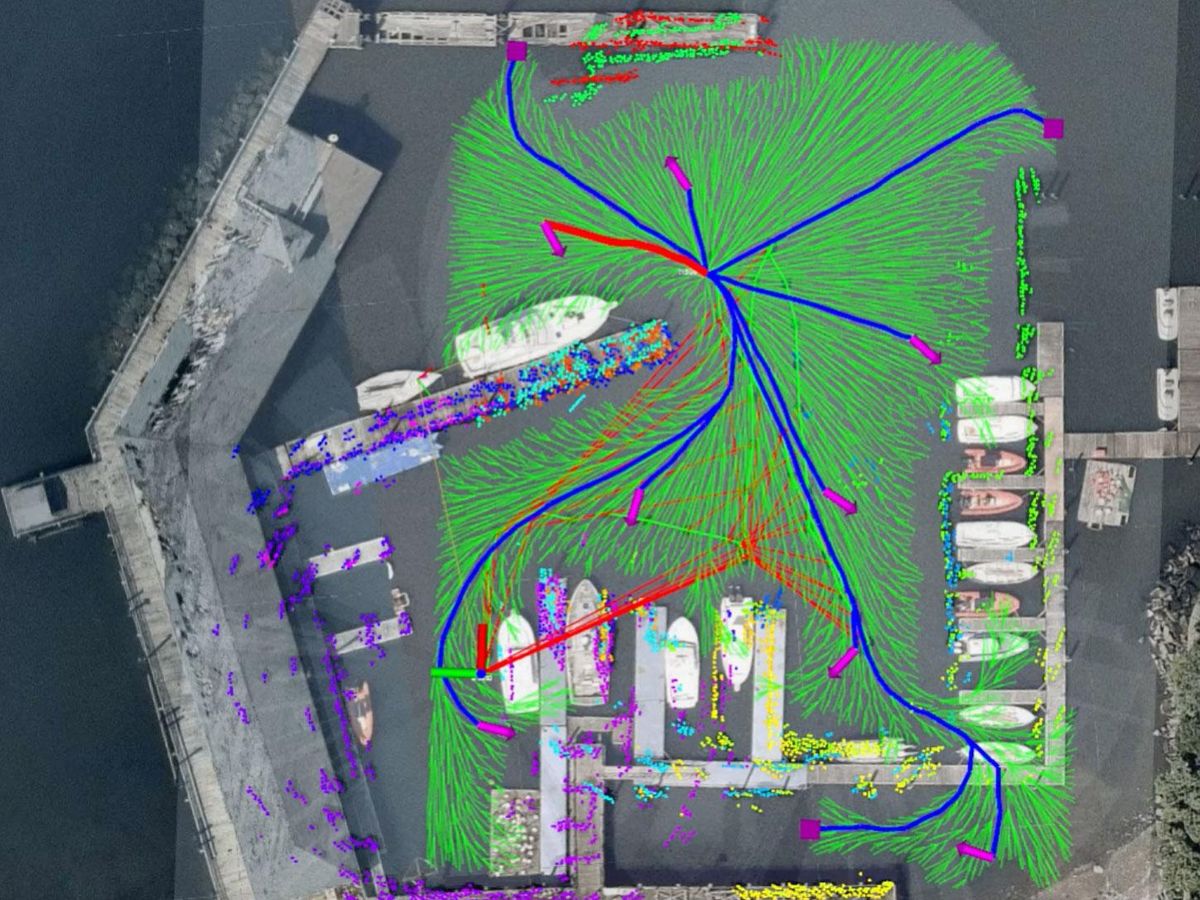

The model created by Englot’s team uses a virtual map, an abstract representation of the surrounding area that the robot has not yet seen. The team developed an algorithm that plans a route over this virtual map while taking into account both the robot’s localization uncertainty and its perceptual observations.
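To make the trade-off concrete, here is a toy sketch of how a planner might weigh new coverage against growing position error. It is not the team's algorithm: the route names, the linear variance-growth model, the cap applied when the robot passes previously mapped structure, and the scoring weight are all hypothetical assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass

# Toy illustration only; this is NOT the published planner.
# All names, parameter values, and the scoring rule are hypothetical.

@dataclass(frozen=True)
class CandidateRoute:
    name: str
    length_m: float        # distance the robot would travel
    unknown_cells: int     # virtual-map cells expected to be observed
    revisits_mapped: bool  # passes previously mapped structure (chance to re-localize)

def predicted_sigma(start_sigma_m, length_m, growth_m2_per_m=0.01,
                    revisits_mapped=False, relocalized_sigma_m=0.3):
    """Predict the 1-sigma position error (m) at the end of a route.

    Assumes position variance grows linearly with distance traveled
    (dead reckoning without GPS) and that re-observing mapped structure
    caps the error at a fixed value."""
    sigma = math.sqrt(start_sigma_m ** 2 + growth_m2_per_m * length_m)
    if revisits_mapped:
        sigma = min(sigma, relocalized_sigma_m)
    return sigma

def utility(route, start_sigma_m, uncertainty_weight=50.0):
    """Reward expected new coverage, penalize predicted localization error."""
    sigma = predicted_sigma(start_sigma_m, route.length_m,
                            revisits_mapped=route.revisits_mapped)
    return route.unknown_cells - uncertainty_weight * sigma

def choose_route(routes, start_sigma_m):
    """Pick the candidate route with the highest utility."""
    return max(routes, key=lambda r: utility(r, start_sigma_m))

routes = [
    CandidateRoute("long sweep", 100.0, 120, False),
    CandidateRoute("loop past pier", 80.0, 90, True),
    CandidateRoute("short hop", 20.0, 30, False),
]
best = choose_route(routes, start_sigma_m=0.5)
print(best.name)  # loop past pier
```

In this toy setup, the hypothetical `uncertainty_weight` sets the balance: raising it favors routes that revisit mapped structure, while lowering it favors raw coverage.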

The perceptual observations are collected using sonar imaging, which detects objects in front of the robot within a 30-meter range and a 120-degree field of view. “We process the imagery to obtain a point cloud from every sonar image. These point clouds indicate where underwater structures are located relative to the robot,” explains Englot.
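A minimal sketch of turning a sonar image into a 2D point cloud is shown below. It assumes the image is a grid of intensities indexed by range bin (rows) and beam (columns), with beams evenly spread across the 120-degree field of view and range bins evenly spread out to 30 meters. The simple intensity threshold and the function name `sonar_image_to_points` are hypothetical; a real pipeline would likely need more careful detection and noise filtering.

```python
import math

MAX_RANGE_M = 30.0  # sonar range stated in the article
FOV_DEG = 120.0     # sonar field of view stated in the article

def sonar_image_to_points(intensity, threshold):
    """Convert a polar sonar image into (x, y) points in the robot frame.

    intensity[r][b]: rows are range bins (near to far), columns are beams
    (port to starboard). x points forward, y is positive to starboard.
    A cell counts as a return if its intensity is at least `threshold`."""
    if not intensity or not intensity[0]:
        return []
    n_range = len(intensity)
    n_beams = len(intensity[0])
    points = []
    for r, row in enumerate(intensity):
        rng = (r + 0.5) * MAX_RANGE_M / n_range  # center of this range bin
        for b, value in enumerate(row):
            if value < threshold:
                continue  # treat weak returns as empty water
            # Beams evenly spaced from -FOV/2 (port) to +FOV/2 (starboard).
            if n_beams == 1:
                bearing_deg = 0.0
            else:
                bearing_deg = -FOV_DEG / 2 + b * FOV_DEG / (n_beams - 1)
            bearing = math.radians(bearing_deg)
            points.append((rng * math.cos(bearing), rng * math.sin(bearing)))
    return points

# A tiny 3x3 image: one strong return dead ahead, one off to starboard.
image = [[0, 0, 0],
         [0, 200, 0],
         [0, 0, 255]]
pts = sonar_image_to_points(image, threshold=128)
```

Each surviving point is a location of underwater structure relative to the robot, which is the kind of observation a planner can then fold into its map.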

The research team then tested their approach using a BlueROV2 underwater robot in a harbor at Kings Point, N.Y., an area that Englot says was large enough to permit significant navigation errors to build up, but small enough to perform numerous experimental trials without too much difficulty. The team compared their model to several other existing ones, testing each model in at least three 30-minute trials in which the robot navigated the harbor. The different models were also evaluated through simulations.

“The results revealed that each of the competing [models] had its own unique advantages, but ours offered a very appealing compromise between exploring unknown environments quickly while building accurate maps of those environments,” says Englot.

He notes that his team has applied for a patent covering use of the model in subsea oil and gas production. However, the team envisions that the model will also be useful for a broader set of applications, such as inspecting offshore wind turbines, offshore aquaculture infrastructure (including fish farms), and civil infrastructure such as piers and bridges.

“Next, we would like to extend the technique to 3D mapping scenarios, as well as situations where a partial map may already exist, and we want a robot to make effective use of that map, rather than explore an environment completely from scratch,” says Englot. “If we can successfully extend our framework to work in 3D mapping scenarios, we may also be able to use it to explore networks of underwater caves or shipwrecks.”

Michelle Hampson is a freelance writer based in Halifax. She frequently contributes to Spectrum's Journal Watch coverage, which highlights newsworthy studies published in IEEE journals.