Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We’ll also be posting a weekly calendar of upcoming robotics events for the next few months; here's what we have so far (send us your events!):

ICRA 2022 – May 23-27, 2022 – Philadelphia, PA, USA

As always, let us know if you have suggestions for upcoming editions of Video Friday, which will be back in 2022. Enjoy today's videos and Happy Holidays!

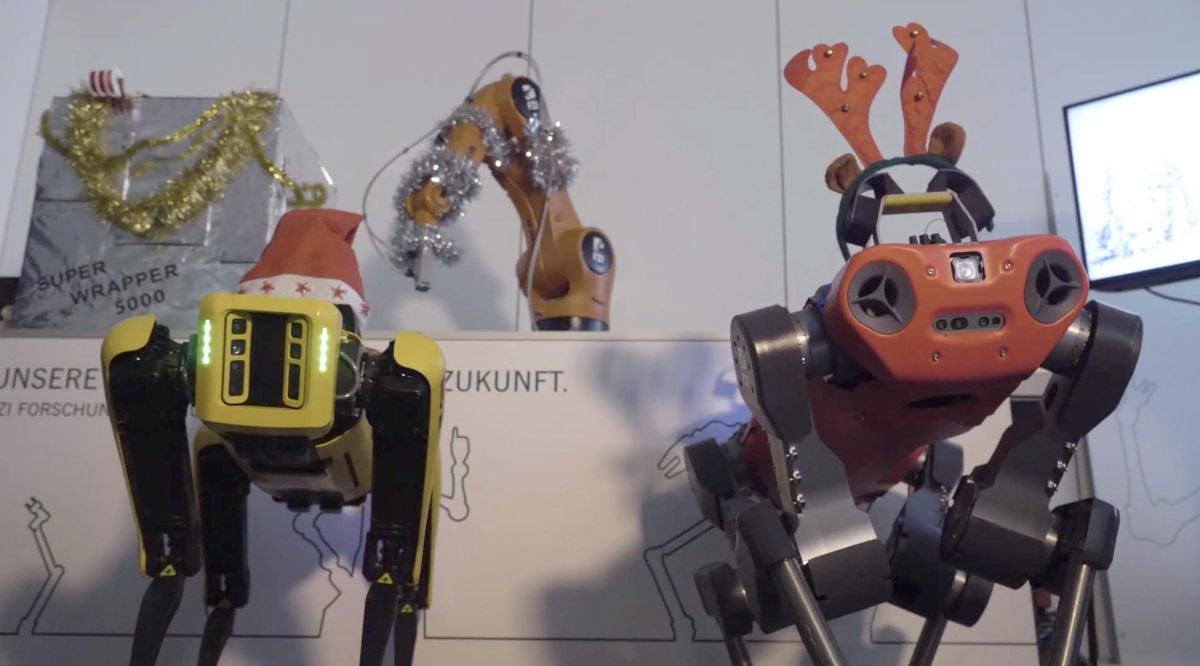

We wish you a Merry Christmas and a Happy New Year!

Frohe Weihnachten und ein gutes neues Jahr!

[ FZI ]

Thanks Arne!

The PAL Robotics team wishes you Happy Holidays!

[ PAL Robotics ]

For this Christmas special, the team is observing the most rare of creatures in its natural habitat - the 'Common Justin'.

[ DLR RMC ]

Watch Santa solve the challenge of increased throughput in Santa's Workshop!

We wish the Happiest of Holidays to our Customers, Integrators, Suppliers, Associates and the FANUC Family of Employees! 🎄

[ FANUC America ]

See how our new Christmas Executive Officers are busy working for the holiday!

[ Flexiv ]

Cultivating peaches is a complex and manually-intensive process that has put a strain on many farms stretched for time and workers. To solve this problem, the Georgia Tech Research Institute (GTRI) has developed an intelligent robot designed to handle the human-based tasks of thinning peach trees, which could result in significant cost savings for peach farms in Georgia.

[ Georgia Tech Research Institute ]

ROBO FIGHT is a humanoid robot fighting sport.

27 humanoid robots will compete to advance to the ROBO-ONE final.

In this game, a player wins by knocking down the opponent with a valid attack three times.

[ Vstone ]

Teams across multiple NASA centers and the European Space Agency are working together to prepare a set of missions that would return the samples being collected by the Mars Perseverance rover safely back to Earth. From landing on the Red Planet and collecting the samples to launching them off the surface of Mars for their potential return to Earth, groundbreaking technologies and methods are being developed and tested. This video features some of that prototype testing underway for the proposed Sample Retrieval Lander, Mars Ascent Vehicle launch systems, and the Earth Entry System. A variety of testing is taking place at NASA's Jet Propulsion Laboratory in Pasadena, California, Marshall Space Flight Center in Huntsville, Alabama, and Langley Research Center in Hampton, Virginia.

[ NASA JPL ]

Supplementary video of our paper submitted to ICRA 2022: Omni-Roach: A legged robot capable of traversing multiple types of large obstacles and self-righting.

This is the final project video for the Bottle Butler. It was produced by Team Red in the Fall of 2021 for a project-based class at Georgia Tech named Robotic Caregivers.

[ Georgia Tech Healthcare Robotics Lab ]

Four Stanford faculty explain what they expect to see in AI in 2022 in their respective industries.

Featuring:

Erik Brynjolfsson, HAI Senior Fellow and Faculty Director, Stanford Digital Economy Lab

Chelsea Finn, Stanford Assistant Professor in Computer Science and Electrical Engineering

Russ Altman, HAI Associate Director, Kenneth Fong Professor, and Professor of Bioengineering, of Genetics, of Medicine, and of Biomedical Data Science

Laura Blattner, Stanford GSB Assistant Professor of Finance

[ Stanford HAI ]

Nimble provides AI-powered logistics and fulfillment robotics. Our robots are helping fulfill online orders for some of the most iconic brands like Best Buy, Puma, Victoria's Secret, iHerb, Adore Me, Weee! and more!

Our robots have handled more than 15 million objects, across 500,000 unique products ranging from eyeliners, belts, body wash and loofahs to keyboards, mice, USB sticks and game consoles to lingerie, hoodies, hats, and footwear – everything from daily essentials to holiday gift favorites.

[ Nimble Robotics ]

Closing Keynote: Human-Centered AI for sustainability: Case Social Robots

Kaisa Väänänen

ISS ’21: ACM Interactive Surfaces and Spaces Conference

Abstract:

AI applications are entering all areas of society. While research and development in AI technologies have taken major leaps, AI’s sustainability perspectives are not extensively integrated in the development of AI applications. The first part of this talk addresses the founding questions of Human-Centered AI (HCAI) and proposes approaches that can be used to ensure that the sustainability needs are in the centre of AI development. Then, in the second part of the talk, examples from the context of studies of social robots are presented to highlight the HCAI concepts in practice. Finally, an outline for interdisciplinary sustainable AI design methodology is proposed.

[ ACM ]

The prevalent approach to object manipulation is based on the availability of explicit 3D object models. By estimating the pose of such object models in a scene, a robot can readily reason about how to pick up an object, place it in a stable position, or avoid collisions. Unfortunately, assuming the availability of object models constrains the settings in which a robot can operate, and noise in estimating a model’s pose can result in brittle manipulation performance. In this talk, I will discuss our work on learning to manipulate unknown objects directly from visual (depth) data. Without any explicit 3D object models, these approaches are able to segment unknown object instances, pick up objects in cluttered scenes, and rearrange them into desired configurations. I will also present recent work on combining pre-trained language and vision models to efficiently teach a robot to perform a variety of manipulation tasks. I’ll conclude with our initial work toward learning implicit representations for objects.

[ GRASP Lab ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.

Erico Guizzo is the Director of Digital Innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.