This article is part of our exclusive IEEE Journal Watch series in partnership with IEEE Xplore.

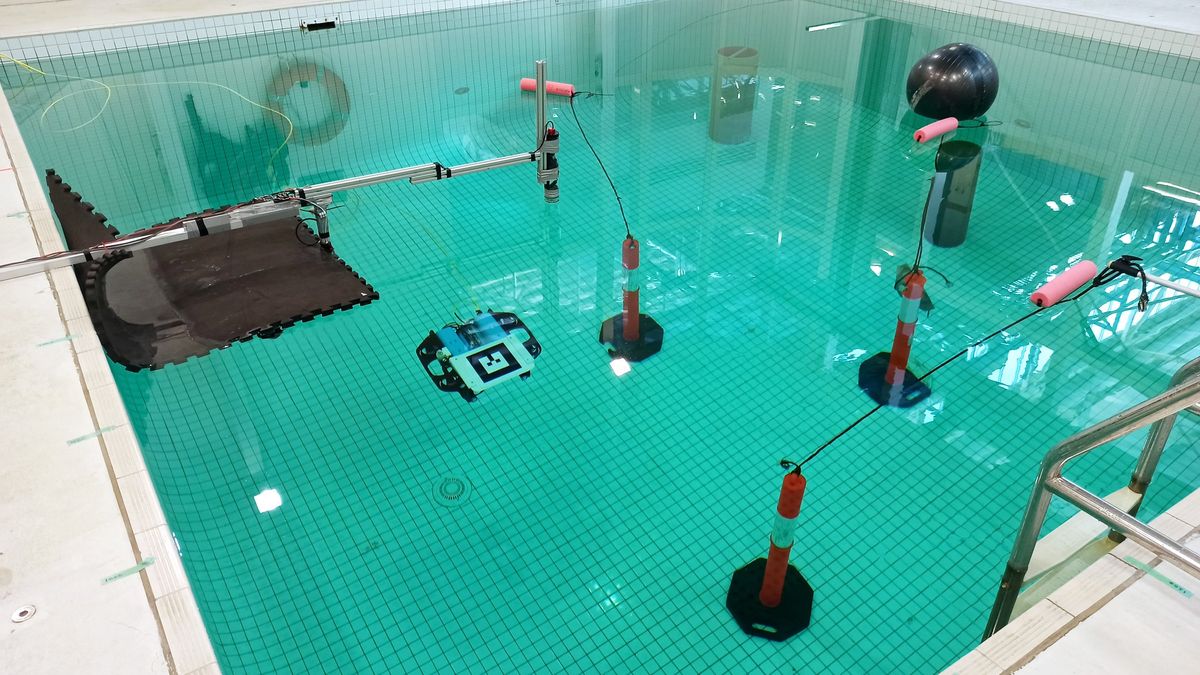

Uncrewed underwater vehicles (UUVs) are underwater robots that operate without humans inside. Early use cases for the vehicles have included jobs like deep-sea exploration and the disabling of underwater mines. However, UUVs suffer from poor communication and navigation control because of water’s distorting effect. So researchers have begun to develop machine learning techniques that can help UUVs navigate better autonomously.

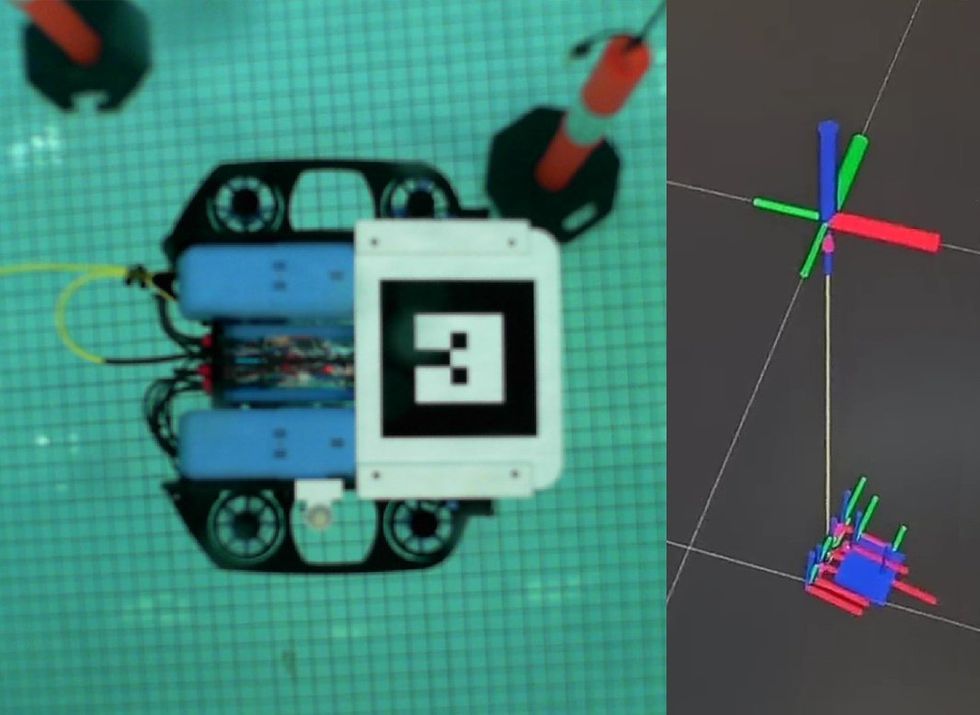

Perhaps the biggest challenge the researchers are grappling with is the absence of GPS signals, which can’t penetrate beneath the water’s surface. Other types of navigational techniques that rely on cameras are also ineffective, because underwater cameras suffer from low visibility.


One of their motivations, the researchers say, is ultimately to tackle the dangerous work of scrubbing off organisms that accumulate on ship hulls. Those accumulations, also known as biofilms, pose a threat to the environment by introducing invasive species and add to shipping costs by increasing drag on ships.

In the study, which was published last month in the journal IEEE Access, researchers from Australia and France used a type of machine learning called deep reinforcement learning to teach UUVs to navigate more accurately under difficult conditions.

In reinforcement learning, UUV models start by performing random actions, then observe the results of those actions and compare them to the goal—in this case, navigating as closely as possible to the target destination. Actions that lead to positive results are reinforced, while actions that lead to poor results are avoided.
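
The trial-and-error loop described above can be illustrated with a toy example. The sketch below is not the study's method: it uses simple tabular Q-learning rather than deep reinforcement learning, and the one-dimensional track, the reward of 1.0 at the target, and every parameter value are assumptions chosen only for illustration.

```python
import random

def train_q_table(n_positions=6, target=5, episodes=200,
                  alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Toy Q-learning on a 1-D track; all values here are illustrative."""
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 steps left, action 1 steps right
    q = [[0.0, 0.0] for _ in range(n_positions)]
    for _ in range(episodes):
        pos = 0
        for _ in range(50):  # cap the length of each episode
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore: random action
            else:
                # exploit: pick the action with the higher learned value
                action = 0 if q[pos][0] > q[pos][1] else 1
            nxt = min(max(pos + moves[action], 0), n_positions - 1)
            done = nxt == target
            reward = 1.0 if done else 0.0  # compare the result to the goal
            best_next = 0.0 if done else max(q[nxt])
            # reinforce: move the estimate toward the observed outcome
            q[pos][action] += alpha * (reward + gamma * best_next - q[pos][action])
            pos = nxt
            if done:
                break
    return q

q_table = train_q_table()
for position, (left, right) in enumerate(q_table[:-1]):
    print(position, "prefers", "right" if right > left else "left")
```

After training, the learned values favor the action that moves toward the target from every starting position, which is the "reinforced" behavior in miniature.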

The ocean adds another layer of complication to UUVs’ navigational challenges that reinforcement models must learn to overcome. Ocean currents are strong and can carry vehicles far from their intended path in unpredictable directions. UUVs therefore need to navigate while also compensating for interference from the currents.

To achieve the best performance, the researchers tweaked a longstanding convention of reinforcement learning. Lead author Thomas Chaffre, a research associate in the College of Science and Engineering at Flinders University in Adelaide, Australia, said his group's departure is part of a larger migration in the field. Machine learning researchers today, including some at Google DeepMind, are increasingly questioning long-held assumptions about reinforcement learning's training process, Chaffre said, searching for small changes that can significantly improve training performance.

In this case, the researchers focused on making changes to reinforcement learning's memory buffer system, which is used to store the outcomes of past actions. Actions and results stored in the memory buffer are sampled at random throughout the training process to update the model's parameters. Usually this sampling is done in an "independent and identically distributed" way, Chaffre said, meaning that every stored experience has the same chance of being picked for an update.
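
For readers who want to see the conventional approach concretely, here is a minimal sketch of a memory buffer with uniform sampling. The class name, method names, and the `(state, action, reward, next_state)` tuple layout are hypothetical conventions for this example, not details taken from the study.

```python
import random
from collections import deque

class UniformReplayBuffer:
    """Fixed-size memory of past transitions, sampled uniformly at random."""

    def __init__(self, capacity):
        # a deque with maxlen silently drops the oldest entry once full
        self.memory = deque(maxlen=capacity)

    def store(self, state, action, reward, next_state):
        self.memory.append((state, action, reward, next_state))

    def sample(self, batch_size, rng=random):
        # independent draws with replacement: every stored transition
        # is equally likely on every draw
        return rng.choices(list(self.memory), k=batch_size)

    def __len__(self):
        return len(self.memory)

buffer = UniformReplayBuffer(capacity=1000)
for step in range(50):
    buffer.store(state=step, action=0, reward=0.0, next_state=step + 1)
batch = buffer.sample(8)
print(len(batch))
```

Drawing with replacement keeps each draw independent of the others, which matches the "independent and identically distributed" description.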

Researchers made a change to the training process so that it sampled from its memory buffer in a way more akin to how human brains learn. Instead of having an equal chance of learning from all past experiences, more weight is given to actions that resulted in large positive gains and also to those that happened more recently.

“When you learn to play tennis, you tend to focus more on recent experience,” Chaffre said. “As you progress, you don’t care about how you played when you started training, because it doesn’t have any information anymore for your current level.”

Similarly, when a reinforcement algorithm is learning from past experiences, Chaffre said, it should be concentrating mostly on recent actions that led to big positive gains.
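
One way to picture sampling that favors recent, high-reward experiences is sketched below. This is not the researchers' algorithm: the weighting formula, the function name `biased_sample`, and the `decay` and `eps` parameters are all assumptions made for illustration.

```python
import random

def biased_sample(transitions, batch_size, decay=0.99, eps=1e-3, rng=random):
    """Draw transitions with more weight on recent, high-reward ones.

    transitions: list of (state, action, reward, next_state), oldest first.
    decay, eps: hypothetical tuning knobs, not values from the study.
    """
    newest = len(transitions) - 1
    weights = []
    for index, (_, _, reward, _) in enumerate(transitions):
        age = newest - index            # 0 for the most recent transition
        recency = decay ** age          # older experiences count for less
        gain = max(reward, 0.0) + eps   # larger positive rewards count for more
        weights.append(recency * gain)
    # weighted draws with replacement
    return rng.choices(transitions, weights=weights, k=batch_size)

history = [(i, 0, 0.0, i + 1) for i in range(99)] + [(99, 0, 10.0, 100)]
batch = biased_sample(history, batch_size=8)
print(sum(1 for t in batch if t[2] > 0), "of 8 draws are the rewarding step")
```

The small `eps` term keeps zero-reward experiences from being excluded entirely, so the model can still occasionally revisit them.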

Researchers found that when using this adapted-memory-buffer technique, UUV models could train more quickly, while also consuming less power. Both improvements, Chaffre said, offer a significant advantage when a UUV is deployed, because although a trained model comes ready to use, it still needs to be fine-tuned.

“Because we are working on underwater vehicles, it’s very costly to use them, and it’s very dangerous to train reinforcement learning algorithms with them,” Chaffre said. So, he added, reducing the amount of time the model spends fine-tuning can prevent damage to the vehicles and save money on repairs.

He said the team’s future plans include testing the new training algorithm on physical UUVs in the ocean.
