Memory Chips That Compute Will Accelerate AI

Samsung could double performance of neural nets with processing-in-memory

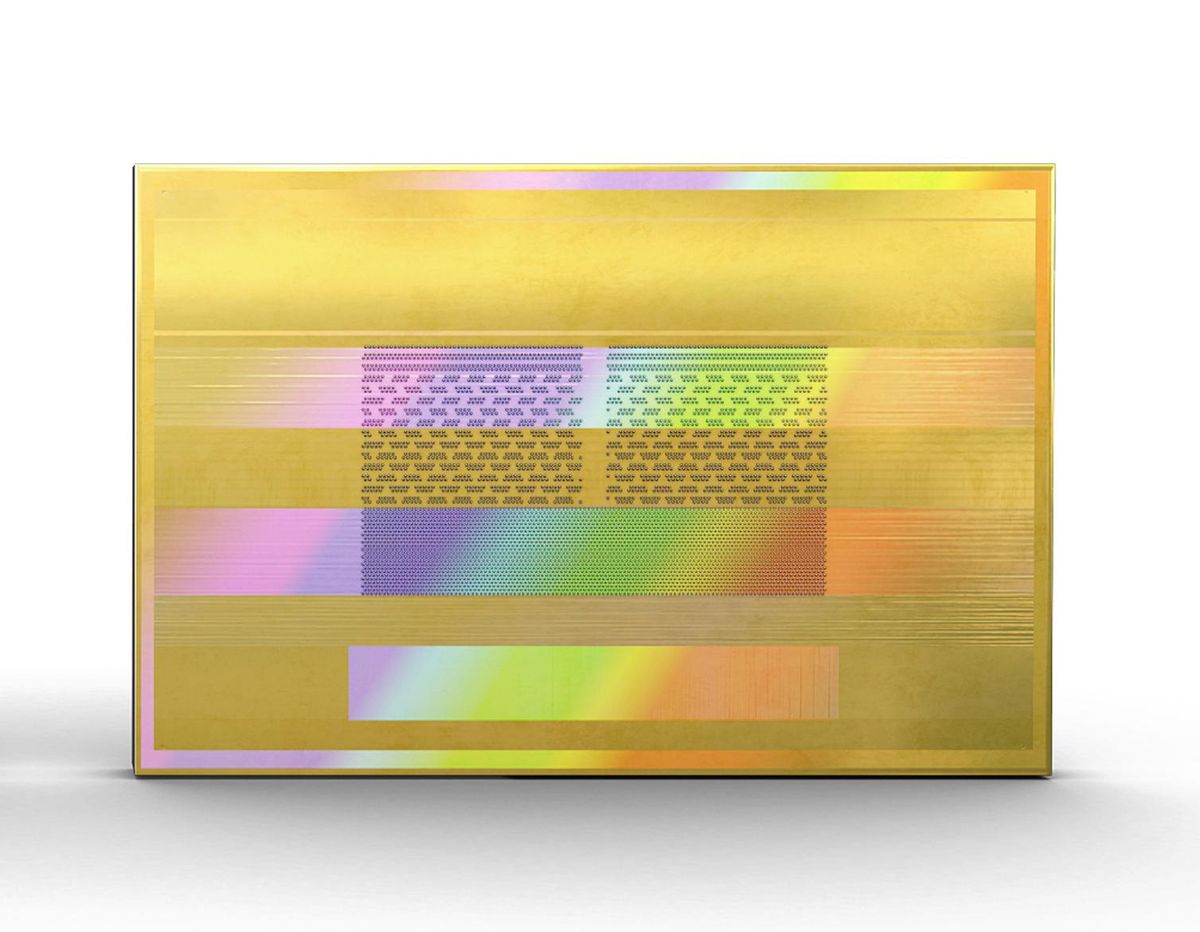

Samsung added AI compute cores to DRAM memory dies to speed up machine learning.

John von Neumann’s original computer architecture, where logic and memory are separate domains, has had a good run. But some companies are betting that it’s time for a change.

In recent years, the shift toward more parallel processing and a massive increase in the size of neural networks mean processors need to access more data from memory more quickly. And yet “the performance gap between DRAM and processor is wider than ever,” says Joungho Kim, an expert in 3D memory chips at Korea Advanced Institute of Science and Technology, in Daejeon, and an IEEE Fellow. The von Neumann architecture has become the von Neumann bottleneck.

What if, instead, at least some of the processing happened in the memory? Less data would have to move between chips, and you’d save energy, too. It’s not a new idea. But its moment may finally have arrived. Last year, Samsung, the world’s largest maker of dynamic random-access memory (DRAM), started rolling out processing-in-memory (PIM) tech. Its first PIM offering, unveiled in February 2021, integrated AI-focused compute cores inside its Aquabolt-XL high-bandwidth memory. HBM is the kind of specialized DRAM that surrounds some top AI accelerator chips. The new memory is designed to act as a “drop-in replacement” for ordinary HBM chips, said Nam Sung Kim, an IEEE Fellow, who was then senior vice president of Samsung’s memory business unit.

Last August, Samsung revealed results from tests in a partner’s system. When used with the Xilinx Virtex UltraScale+ (Alveo) AI accelerator, the PIM tech delivered a nearly 2.5-fold performance gain and a 62 percent cut in energy consumption for a speech-recognition neural net. Samsung has been providing samples of the technology integrated into the current generation of high-bandwidth DRAM, HBM2. It’s also developing PIM for the next generation, HBM3, and for the low-power DRAM used in mobile devices. It expects to complete the standard for the latter with JEDEC in the first half of 2022.

There are plenty of ways to add computational smarts to memory chips. Samsung chose a design that’s fast and simple. HBM consists of a stack of DRAM chips linked vertically by interconnects called through-silicon vias (TSVs). The stack of memory chips sits atop a logic chip that acts as the interface to the processor.

Some Processing-in-Memory Projects

Micron Technology

The third-largest DRAM maker says it does not have a processing-in-memory product. However, in 2019 it acquired the AI-tech startup Fwdnxt, with the goal of developing “innovation that brings memory and computing closer together.”

NeuroBlade

The Israeli startup has developed memory with integrated processing cores designed to accelerate queries in data analytics.

Rambus

Engineers at the DRAM-interface-tech company did an exploratory design for processing-in-memory DRAM focused on reducing the power consumption of high-bandwidth memory (HBM).

Samsung

Furthest along, the world’s largest DRAM maker is offering the Aquabolt-XL with integrated AI computing cores. It has also developed an AI accelerator for memory modules, and it’s working to standardize AI-accelerated DRAM.

SK hynix

Engineers at the second-largest DRAM maker and Purdue University unveiled results for Newton, an AI-accelerating HBM DRAM, in 2019, but the company decided not to commercialize it and to pursue PIM for standard DRAM instead.

The highest data bandwidth in the stack lies within each chip, followed by the TSVs, and finally the connections to the processor. So Samsung chose to put the processing on the DRAM chips to take advantage of the high bandwidth there. The compute units are designed to do the most common neural-network calculation, called multiply and accumulate, and little else. Other designs have put the AI logic on the interface chip or used more complex processing cores.
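For readers unfamiliar with the operation, here is a minimal sketch of multiply and accumulate in plain Python. It illustrates the arithmetic only, not Samsung’s hardware or any real PIM programming interface; the function names `multiply_accumulate` and `matvec` are hypothetical and chosen for this example.

```python
def multiply_accumulate(weights, activations):
    """Sum of element-wise products, built up one multiply-accumulate step at a time."""
    if len(weights) != len(activations):
        raise ValueError("weights and activations must have the same length")
    acc = 0.0
    for w, x in zip(weights, activations):
        acc += w * x  # one MAC step: multiply, then add to the running total
    return acc


def matvec(matrix, vector):
    """Matrix-vector product: one chain of MAC steps per output row."""
    return [multiply_accumulate(row, vector) for row in matrix]


if __name__ == "__main__":
    # Illustrative values only: a 2x3 weight matrix applied to a 3-element input.
    layer_weights = [[1.0, 2.0, 3.0], [0.5, 0.0, -1.0]]
    layer_input = [4.0, 5.0, 6.0]
    print(matvec(layer_weights, layer_input))
```

Every weight is read once for each multiply, which is why a neural-net layer computed this way tends to be limited by memory bandwidth rather than by arithmetic.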

Samsung’s two largest competitors, SK hynix and Micron Technology, aren’t quite ready to take the plunge on PIM for HBM, though they’ve each made moves toward other types of processing-in-memory.

Icheon, South Korea–based SK hynix, the No. 2 DRAM supplier, is exploring PIM from several angles, says Il Park, vice president and head of memory-solution product development. For now it is pursuing PIM in standard DRAM chips rather than HBM, which might be simpler for customers to adopt, says Park.

HBM PIM is more of a mid- to long-term possibility for SK hynix. At the moment, customers are already dealing with enough issues as they try to move HBM DRAM physically closer to processors. “Many experts in this domain do not want to add more, and quite significant, complexity on top of the already busy situation involving HBM,” says Park.

That said, SK hynix researchers worked with Purdue University computer scientists on a comprehensive design of an HBM-PIM product called Newton in 2019. Like Samsung’s Aquabolt-XL, it places multiply-and-accumulate units in the memory banks to take advantage of the high bandwidth within the dies themselves.

Meanwhile, Rambus, based in San Jose, Calif., was motivated to explore PIM because of power-consumption issues, says Rambus fellow and distinguished inventor Steven Woo. The company designs the interfaces between processors and memory, and two-thirds of the power consumed by a system-on-chip and its HBM goes to transporting data horizontally between the two chips. Transporting data vertically within the HBM uses much less energy because the distances are so much shorter. “You might be going 10 to 15 millimeters horizontally to get data back to an SoC,” says Woo. “But vertically you’re talking on the order of a couple hundred microns.”
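To put Woo’s distances side by side, the short calculation below converts them to a ratio. It is a rough sketch that assumes “a couple hundred microns” means 200 microns; that figure and the helper name `distance_ratio` are assumptions made for illustration. It compares path lengths only and says nothing about how energy scales with distance.

```python
MICRONS_PER_MM = 1000


def distance_ratio(horizontal_mm, vertical_um):
    """How many times longer the horizontal path is than the vertical one."""
    return horizontal_mm * MICRONS_PER_MM / vertical_um


# Assumption: "a couple hundred microns" is taken as 200 microns.
ASSUMED_VERTICAL_UM = 200

low = distance_ratio(10, ASSUMED_VERTICAL_UM)   # shortest horizontal path Woo quotes
high = distance_ratio(15, ASSUMED_VERTICAL_UM)  # longest horizontal path Woo quotes

if __name__ == "__main__":
    print(f"Horizontal path is roughly {low:.0f} to {high:.0f} times longer")
```

Under that assumption, data headed to the SoC travels roughly 50 to 75 times as far as data moving vertically within the stack.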

Rambus’s experimental PIM design adds an extra layer of silicon at the top of the HBM stack to do AI computation. To avoid the potential bandwidth bottleneck of the HBM’s central through-silicon vias, the design adds TSVs to connect the memory banks with the AI layer. Having a dedicated AI layer in each memory chip could allow memory makers to customize memories for different applications, argues Woo.

How quickly PIM is adopted will depend on how desperate the makers of AI accelerators are for the memory-bandwidth relief it provides. “Samsung has put a stake in the ground,” says Bob O’Donnell, chief analyst at Technalysis Research. “It remains to be seen whether [PIM] becomes a commercial success.”

This article appears in the January 2022 print issue as "AI Computing Comes to Memory Chips." It was corrected on 30 December to give the correct date for SK hynix's HBM PIM design.