Video Friday is your weekly selection of awesome robotics videos, collected by your Automaton bloggers. We’ll also be posting a weekly calendar of upcoming robotics events for the next few months; here’s what we have so far (send us your events!):

RSS 2018 – June 26-30, 2018 – Pittsburgh, Pa., USA

Ubiquitous Robots 2018 – June 27-30, 2018 – Honolulu, Hawaii

MARSS 2018 – July 4-8, 2018 – Nagoya, Japan

AIM 2018 – July 9-12, 2018 – Auckland, New Zealand

ICARM 2018 – July 18-20, 2018 – Singapore

ICMA 2018 – August 5-8, 2018 – Changchun, China

SSRR 2018 – August 6-8, 2018 – Philadelphia, Pa., USA

ISR 2018 – August 24-27, 2018 – Shenyang, China

BioRob 2018 – August 26-29, 2018 – University of Twente, Netherlands

RO-MAN 2018 – August 27-30, 2018 – Nanjing, China

Let us know if you have suggestions for next week, and enjoy today’s videos.

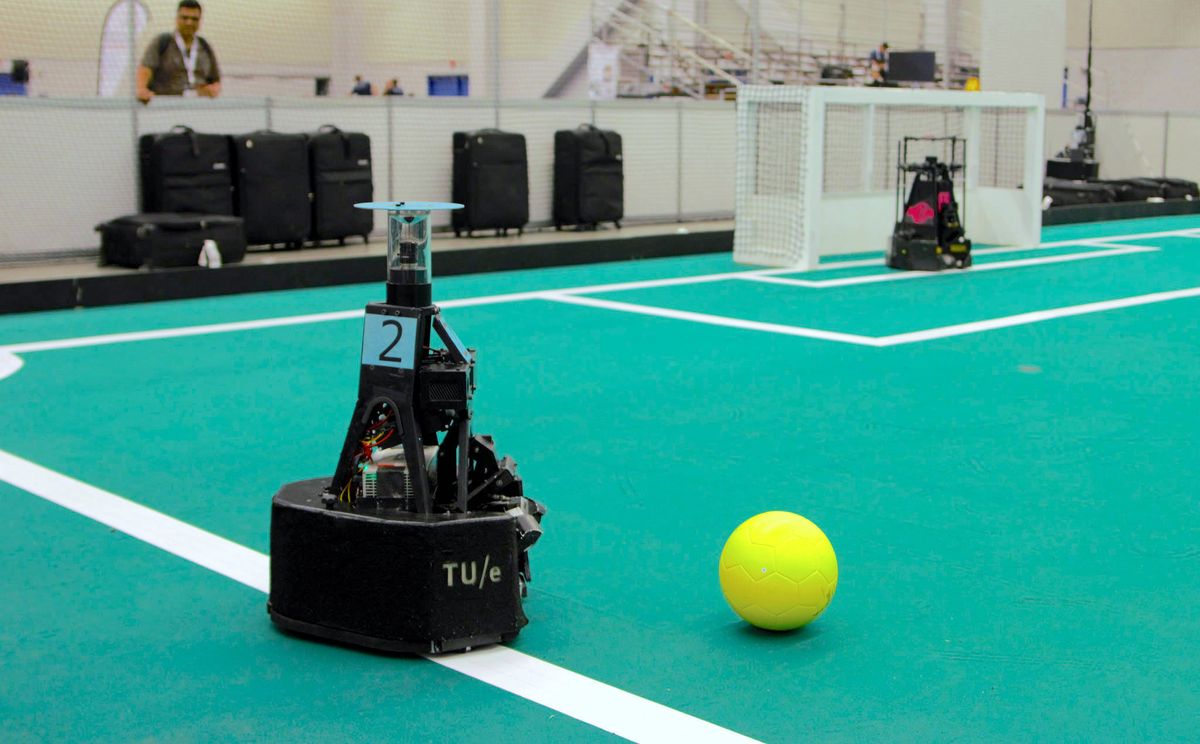

Robot soccer is getting really, really good. RoboCup (which just concluded in Montreal) is basically exactly the same as the human World Cup, just with less writhing around on the ground and clutching of ankles. Tech United Eindhoven was there competing, and put together these highlight videos:

And here are the semi-final and final matches:

[ Tech United ]

These robot best friends make dumplings! And they have LITTLE CHEF HATS!

[ AIT ]

The new single-arm YuMi is ABB’s most agile and compact collaborative robot yet and can easily integrate into existing production.

[ ABB ]

At Hannover Fair 2018 we introduced the LBR iisy, KUKA’s next collaborative robot that rounds out our collaborative robotics platform. It’s small and lightweight: powerful enough for experts, simple enough for beginners.

[ Kuka ]

Who gets tired of listening to Marc Raibert talk about robots? Nobody, that’s who. This talk is from CEBIT, just this month.

Raibert said Boston Dynamics is testing SpotMini with a variety of potential customers, using the robot as a platform for security, delivery, construction, and home applications. The idea is to turn SpotMini into the “Android of Robots.” It will be available starting next year, Raibert said, and so far they’ve built 10 units by hand and are now building 100 with manufacturing partners. By mid-2019, production will ramp up to 1,000 units per year.

[ Boston Dynamics ]

Can you name all the robots in this video from Toyota Research Institute?

If you missed the Toyota HSR, that’s okay, so did I.

[ TRI ]

Although quadrotors, and aerial robots in general, are inherently active agents, their perceptual capabilities in the literature so far have been mostly passive in nature. Researchers and practitioners today use traditional computer vision algorithms with the aim of building a representation of general applicability: a 3D reconstruction of the scene. Using this representation, planning tasks are constructed and accomplished to allow the quadrotor to demonstrate autonomous behavior. These methods are inefficient because they are not task-driven, and such methodologies are not used by flying insects and birds. These animals have been solving the problem of navigation and complex control for ages without the need to build a 3D map, and they are highly task-driven.

In this paper, we propose a framework of bio-inspired perceptual design for quadrotors. We use this philosophy to design a minimalist sensorimotor framework for a quadrotor to fly through unknown gaps without a 3D reconstruction of the scene, using only a monocular camera and onboard sensing. We successfully evaluate and demonstrate the proposed approach in many real-world experiments with different settings and window shapes, achieving a success rate of 85% at 2.5 m/s even with a minimum tolerance of just 5 cm. To our knowledge, this is the first paper that addresses the problem of detecting a gap of unknown shape and location with a monocular camera and onboard sensing.

[ UMD ]

What if we could control robots more intuitively, using just hand gestures and brainwaves? A new system spearheaded by researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) aims to do exactly that, allowing users to instantly correct robot mistakes with nothing more than brain signals and the flick of a finger.

By monitoring brain activity, the system can detect in real time if a person notices an error as a robot does a task. Using an interface that measures muscle activity, the person can then make hand gestures to scroll through and select the correct option for the robot to execute.

The team demonstrated the system on a task in which a robot moves a power drill to one of three possible targets on the body of a mock plane. Importantly, they showed that the system works on people it’s never seen before, meaning that organizations could deploy it in real-world settings without needing to train it on users.

[ MIT ]

All aboard for today’s mandatory Cassie video!

Cassie is an efficient, compliant, dynamic bipedal robot. Here in the Dynamic Robotics Lab directed by Prof. Jonathan Hurst we are researching control strategies that exploit the robot’s natural dynamics. The controller here is demonstrating robust blind walking over unperceived disturbances.

[ DRL ]

Robotic Materials combines traditional camera-based perception with tactile perception to help robots be more effective at interacting with the world around them.

Thanks Nikolaus!

Mapping and navigation is one of the trickiest parts of doing robot stuff with a robot, but Misty’s got you covered.

[ Misty Robotics ]

I’m almost certain that this is the first robot I’ve ever seen with a built-in watermelon slapper.

A student-led team designed the agBOT watermelon harvester to help aging farmers. The machine can autonomously identify and harvest ripe watermelons. A passive slapper mechanism thumps the watermelons much the way a person tests one at the supermarket, and an onboard microphone helps agBOT determine whether or not to harvest the watermelon.

[ Virginia Tech ]

In collaboration with DENSO Robotics, and in consultation with Innotech Corporation, Osaro is proud to publicly unveil FoodPick, which performs automated food assembly tasks using deep learning and other AI and robotics techniques.

[ Osaro ]

Musica Automata is the name of an upcoming album by Leonardo Barbadoro, an Italian electronic music producer and electroacoustic music composer from Florence, also known under the alias Koolmorf Widesen. The album will include music for robots controlled from a laptop computer.

The robots are more than 50 acoustic instruments (pianos, organs, wind instruments, percussion, etc.) that are part of the Logos Foundation in Gent, Belgium. They receive digital MIDI messages that contain precise information for their performance.
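For the curious, here's roughly what one of those MIDI messages looks like at the byte level. We don't know exactly which messages Barbadoro's software sends to the Logos instruments, so this is just a minimal sketch of the standard MIDI Note On and Note Off messages; the helper names `note_on` and `note_off` are our own hypothetical functions, not part of any library.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a standard 3-byte MIDI Note On message.

    Hypothetical helper for illustration. The status byte is 0x90 combined
    with the channel (0-15); the two data bytes are the note number and the
    velocity (how hard the note is struck), each 0-127.
    """
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Build a standard 3-byte MIDI Note Off message (status byte 0x80 | channel)."""
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x80 | channel, note, velocity])


# Strike middle C (note 60) fairly hard on channel 0, then release it.
msg = note_on(0, 60, 100)
print(msg.hex())              # prints 903c64
print(note_off(0, 60).hex())  # prints 803c00
```

A robotic piano or percussion instrument listening on that channel would, in principle, map the note number to a specific key or striker and the velocity to how forcefully it's actuated.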

If you like the sound of this, you can help make it happen on Kickstarter.

[ Kickstarter ]

Thanks Leonardo!

The project Entern is concerned with technologies for the autonomous operation of robots in lunar and planetary exploration missions. It covers the subjects of operations & control, environment modelling and navigation. The goal of the project is to improve the autonomous capabilities of individually acting robots in difficult situations such as craters and caves. On-board simulation is used within the project for this purpose. It allows the robot to improve the assessment of critical situations without external help.

[ Entern ]

Every day, robots become more ‘intelligent.’ What if robots became intelligent enough to say NO to war? That would be a happier future.

This short robot film is the result of a student project between Sheffield Robotics at the University of Sheffield and the Institute of Arts at Sheffield Hallam University.

[ DiODe ]

On this week’s episode of Robots in Depth, Per interviews Nicola Tomatis from BlueBotics.

Nicola Tomatis talks about his long road into robotics and how BlueBotics handles indoor navigation and integrates it into automated guided vehicles (AGVs). Like many, Nicola started out tinkering when he was young, and then got interested in computer science because he wanted to understand it better. Nicola gives us an overview of indoor navigation and its challenges. He shares a number of interesting projects, including professional cleaning and intralogistics in hospitals. We also find out what someone who wants to use indoor navigation and AGVs should think about.

[ Robots in Depth ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.

Erico Guizzo is the Director of Digital Innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.