As the steady hum of artificial intelligence activity carries on from 2023 into the new year, the research group AI Impacts has released the results of its most recent outlook survey of AI researchers and engineers. An accompanying preprint (not peer reviewed as of press time) details the findings. The group’s analysis of its 2023 Expert Survey on Progress in AI summarizes the responses of 2,788 AI researchers to a series of questions regarding the present and far future of AI research.

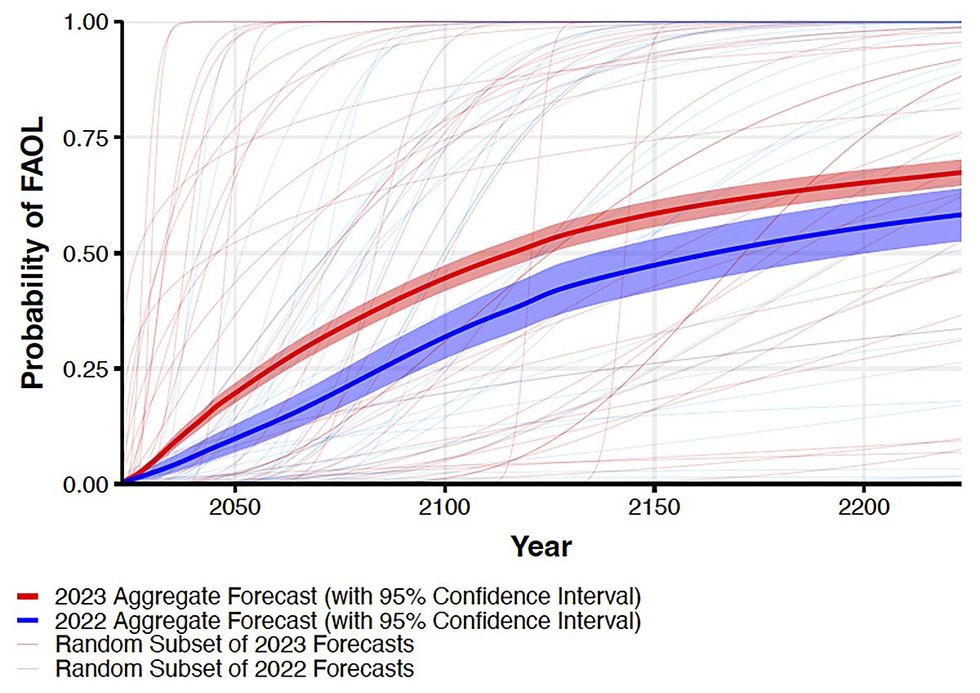

The 2023 survey follows AI Impacts’ 2022 survey, whose median participant put the likelihood of AI-caused “human extinction or similarly permanent and severe disempowerment of the human species” at 5 percent. The likelihood of that same extinction or subjugation resulting from “human inability to control future advanced AI systems” was at a median 10 percent. The median responses to those same questions in the 2023 survey were unchanged, while the median 2023 prediction for AI-driven extinction by the year 2100 was 5 percent.

AI Impacts has been conducting this survey since 2016. Katja Grace, one of the organization’s cofounders and current lead researcher, started the group to map out potential consequences of advancing AI technology. “We’re trying to answer decision-relevant questions about the future of AI,” says Grace. “When will particular things happen in the future? Is there a risk of bad things happening? In practice, we’re interested in human extinction.”

The group runs their now-annual surveys to sample the opinion of the AI research community regarding those consequences. “We are most interested in timelines. We ask about narrow things that will likely happen sooner, which I trust more as straightforward information about what’s plausible,” says Grace. “For longer goals, the numbers are so all over the place that I don’t expect to learn what date they’ll happen, but more expect to learn what the feeling is in the AI community.” Grace goes on to state that mood has some dark corners. “From the survey it’s somewhat hard to judge what the overall mood is, but it seems like there is a fairly widespread belief that there is non-negligible risk of AI destroying humanity in the long run. I have less of a sense of with what mood that belief is held.”

Since its founding, AI Impacts has attracted substantial attention for the more alarming results of its surveys. The group, which currently lists seven contributors on its website, had also received at least US $2 million in funding as of December 2022. This funding came from a number of individuals and philanthropic associations connected to the effective altruism movement and concerned with the potential existential risk of artificial intelligence. These funders include Open Philanthropy, technologist Jaan Tallinn, and the now-defunct FTX Future Fund. AI Impacts operates within the Machine Intelligence Research Institute, an AI existential risk research group codirected by Eliezer Yudkowsky. Yudkowsky has previously called for bringing a decisive and potentially violent end to advanced AI research.

The 2023 survey also asked participants to estimate the likelihoods and development timelines of a number of AI research milestones. Participants predicted that some AI tasks, such as generating functional software in Python, creating a “random video game,” or writing a top-40 pop song, were likely achievable by the end of the decade. Arguably, LLM code generation has already achieved the first of those. Other goals, like winning a 5K road race as a bipedal robot or fully automating the work of long-haul truck drivers, were given longer median timeframes by the researchers.

The 2022 survey’s participant-selection methods were criticized for being skewed and narrow. AI Impacts sent the survey to 4,271 people whose research was published at two 2021 machine-learning conferences, the Conference on Neural Information Processing Systems (NeurIPS) and the International Conference on Machine Learning (ICML); 738 responded. Communication researcher Nirit Weiss-Blatt, author of The Techlash and a public critic of the survey, points out that this approach created a skewed survey population. “People that send papers to those conferences, they can be undergrads and grad students,” says Weiss-Blatt. “They marketed it, framed it, as ‘the leading AI researchers believe…something,’ when in fact the demographic includes a variety of students.” AI Impacts changed its selection criteria in the 2023 survey, sending the survey to participants of a larger number of machine-learning conferences, and only to those with a Ph.D.

Beyond methodological issues, Weiss-Blatt points out how the survey plays into the current media coverage and public opinion of AI. “The coverage of AI becomes crazy because of headlines like ‘There’s a 5 percent chance of AI causing humans to go extinct, say scientists,’ ” says Weiss-Blatt. “When a regular person sees that, then they think they should panic as well. What they are doing is running a well-funded panic campaign. So, that’s not good journalism. A better representation of this survey would indicate that it was funded, phrased, and analyzed by ‘x-risk’ effective altruists. Behind ‘AI Impacts’ and other ‘AI Safety’ organizations, there’s a well-oiled ‘x-risk’ machine. When the media is covering them, it has to mention it.”

AI Impacts plans to continue adapting their surveys in response to future criticisms. For the coming 2024 survey, the group has reached out to external sources for advice. “A different thing we’ve done this time was try to run the methodology by more people,” says Grace. “We sent it to Nate Silver because he’s more of an expert in this field than us, probably. He said it was great.” Silver confirmed his involvement when contacted by IEEE Spectrum.

Michael Nolan is a writer and reporter covering developments in neuroscience, neurotechnology, biometric systems, and data privacy. He previously spent nearly a decade wrangling biomedical data for a number of labs in academia and industry, after receiving a master’s degree in electrical engineering from the University of Rochester.