Replicating the human brain in software and silicon is a longstanding goal of artificial intelligence (AI) research. Neuromorphic chips have made significant inroads toward it: they can run multiple computations simultaneously and can both compute and store data. Yet they are nowhere close to emulating the energy efficiency of the brain.

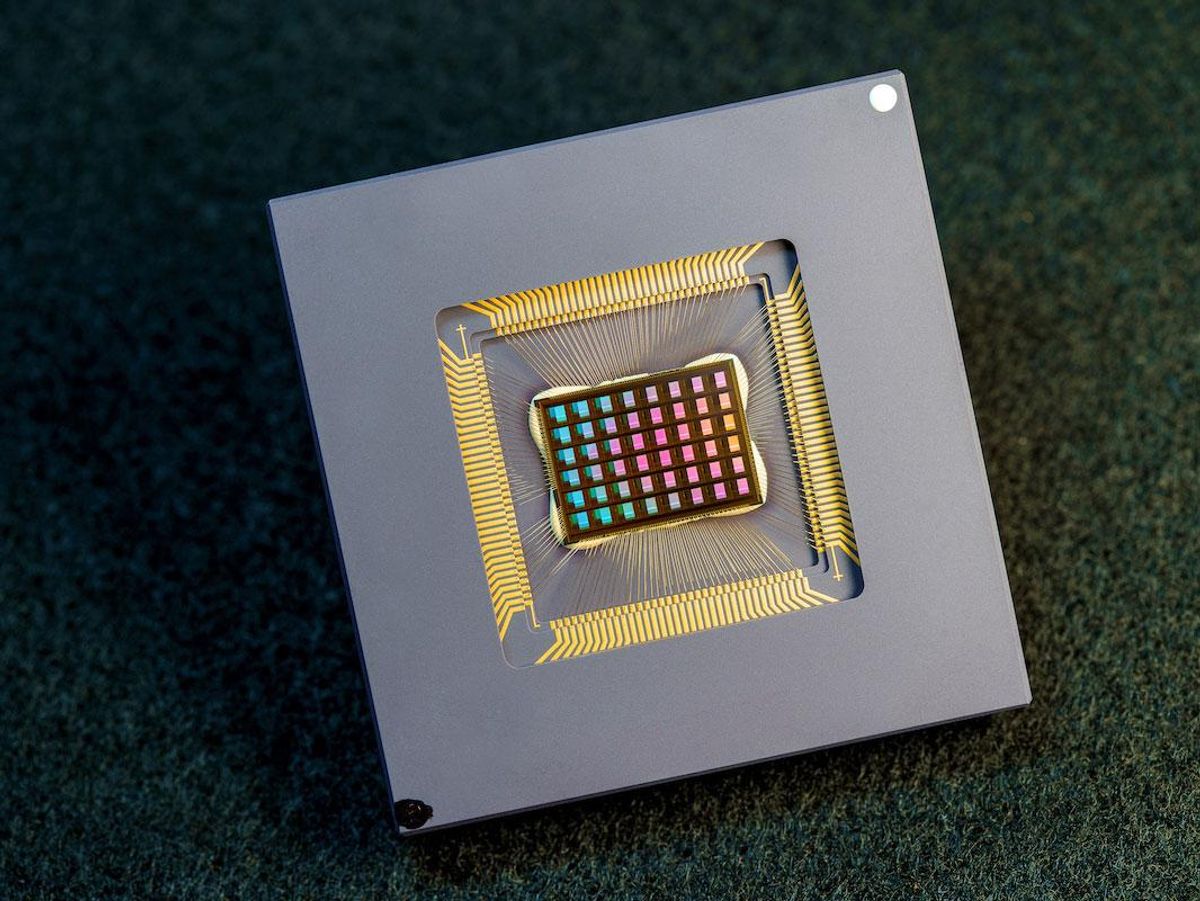

AI computation is very energy intensive, but most of that energy is consumed not by the computation itself but by moving data between the memory and compute units within AI chips. To tackle this issue, a team of researchers has developed a prototype of a new compute-in-memory (CIM) chip that eliminates the need for this separation. Their prototype, they claim in a paper published in Nature on 17 August, demonstrates twice the efficiency of existing AI platforms. Called NeuRRAM because it uses a type of RAM called resistive random-access memory (RRAM), this 48-core RRAM-CIM hardware supports a wide array of neural-network models and architectures.

RRAM has many benefits in comparison to conventional memory, says Stanford University researcher Weier Wan, first author of the paper. One of these is higher capacity within the same silicon area, making it possible to implement bigger AI models. It is also nonvolatile, meaning there is no power leakage. This makes RRAM-based chips ideal for edge workloads, he adds. The researchers envisage the NeuRRAM chip efficiently handling a range of sophisticated AI applications on lower-power edge devices, without relying on a network connection to the cloud.

To design the NeuRRAM, the team had to balance efficiency, versatility, and accuracy without sacrificing any one of them. “The main innovation is that we use a novel analog-to-digital conversion scheme,” Wan says. Analog-to-digital conversion is considered the main bottleneck for energy efficiency in CIM chips. “[We] invented a new scheme, which is based on sensing the voltage, whereas previous schemes are based on sensing current.” The voltage-mode sensing also allows for higher parallelism in the RRAM array in a single computing cycle.
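To make the contrast between current sensing and voltage sensing concrete, here is a minimal idealized sketch of one resistive array read out both ways. It is not the NeuRRAM circuit: the function names, array sizes, and voltage and conductance ranges are all hypothetical, and the voltage-mode model simply assumes that each output line is left floating and settles to the conductance-weighted average of the input voltages.

```python
import numpy as np

def current_mode_readout(voltages, conductances):
    """Current sensing: each column current is sum_i V_i * G_ij
    (Ohm's law per cell, Kirchhoff's current law per column)."""
    return voltages @ conductances

def voltage_mode_readout(voltages, conductances):
    """Voltage sensing (idealized assumption): each floating column settles
    to the conductance-weighted average of the input voltages."""
    return (voltages @ conductances) / conductances.sum(axis=0)

rng = np.random.default_rng(0)
voltages = rng.uniform(-0.5, 0.5, size=4)              # hypothetical inputs, volts
conductances = rng.uniform(1e-6, 40e-6, size=(4, 3))   # hypothetical cells, siemens

currents = current_mode_readout(voltages, conductances)
settled = voltage_mode_readout(voltages, conductances)

print("column currents (A):", currents)
print("settled voltages (V):", settled)
```

In this toy model the two readouts carry the same weighted sum, differing only by a per-column normalization. The settled voltage always stays within the range of the input voltages, however many rows are driven at once.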

They also explored some new architectures, such as the transposable neurosynaptic array (TNSA), to flexibly control data-flow directions. “For accuracy,” explains Wan, “the key is algorithm and hardware codesign. This basically allowed us to model the hardware characteristics directly within these AI models.” This, in turn, allows the algorithm to adapt to hardware non-idealities and retain accuracy. In other words, Wan summarizes, they optimized across the entire stack, from device to circuit to architecture to algorithm, to engineer a chip that is at once efficient, versatile, and accurate.
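One common way to model hardware characteristics inside an AI model is to perturb the weights during the forward pass, so that training sees something like the variation the device will introduce. The sketch below illustrates that general idea only. The article does not say the NeuRRAM team used this exact method, and the function name, Gaussian noise model, and `noise_std` parameter are assumptions made for illustration.

```python
import numpy as np

def noisy_forward(x, weights, noise_std, rng):
    """Linear layer whose weights are perturbed by Gaussian noise.

    The noise is a hypothetical stand-in for device non-idealities and is
    scaled relative to the largest weight magnitude.
    """
    scale = noise_std * np.abs(weights).max()
    noisy_weights = weights + rng.normal(0.0, scale, size=weights.shape)
    return x @ noisy_weights

rng = np.random.default_rng(0)
weights = rng.normal(size=(8, 4))   # hypothetical layer weights
x = rng.normal(size=(2, 8))         # hypothetical batch of two inputs

clean = noisy_forward(x, weights, noise_std=0.0, rng=rng)
noisy = noisy_forward(x, weights, noise_std=0.1, rng=rng)

print("mean absolute deviation from noise:", np.abs(noisy - clean).mean())
```

A model trained with a forward pass like `noisy_forward` is pushed toward weights whose outputs change little under such perturbations, which is the sense in which an algorithm can adapt to non-ideal hardware.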

Gert Cauwenberghs, a researcher at the University of California, San Diego, one of the paper’s coauthors, says, “Most of the advances in compute-in-memory have been limited to software-level demonstrations, basically using an array of synapses. But here we have put it all together in a horizontal level on the stack.”

NeuRRAM achieved 99 percent accuracy on a handwritten digit-recognition task, 85.7 percent on an image-classification task, 84.7 percent on a Google speech command-recognition task, and a 70 percent reduction in image-reconstruction error on an image-recovery task. “These results are comparable to existing digital chips that perform computation under the same bit-precision, but with drastic savings in energy,” the researchers conclude.

Comparing NeuRRAM to Intel’s Loihi 2 neuromorphic chip—the building block of the 8 million–neuron Pohoiki Beach system—the researchers say that their chip gives better efficiency and density. “Basically Loihi is a standard digital processor [with] SRAM banks [and] a specific, programmable ISA [instruction set architecture],” adds Siddharth Joshi, another coauthor and researcher at the University of Notre Dame. “They use a much more von Neumann–ish architecture—whereas our computation is on the bit-line itself.”

Recent studies also contend that neuromorphic chips, including Loihi, may have a broader range of applications than just AI, including medical and economic analysis and quantum computing. The makers of NeuRRAM agree, stating that compute-in-memory architectures are the future. Cauwenberghs adds that NeuRRAM scales well on the architecture side “because we have this parallel array of cores, each doing their computation independently, and this is how we can implement large networks with arbitrary connectivity.”
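The idea of independent cores jointly implementing a large network can be sketched as tiling one big weight matrix across cores and summing the partial results. This is an illustrative sketch only: the tile size, the matrix dimensions, and the `tiled_matvec` helper are hypothetical, and the sequential loop stands in for cores that would run in parallel on real hardware.

```python
import numpy as np

def tiled_matvec(weights, x, tile_rows, tile_cols):
    """Compute x @ weights by splitting weights into tiles.

    Each tile plays the role of one hypothetical core that holds its own
    slice of the weights and computes a partial product independently.
    """
    n_rows, n_cols = weights.shape
    out = np.zeros(n_cols)
    for r in range(0, n_rows, tile_rows):
        for c in range(0, n_cols, tile_cols):
            tile = weights[r:r + tile_rows, c:c + tile_cols]
            # Partial products from tiles sharing output columns are summed.
            out[c:c + tile_cols] += x[r:r + tile_rows] @ tile
    return out

rng = np.random.default_rng(0)
weights = rng.normal(size=(512, 384))   # hypothetical layer larger than one tile
x = rng.normal(size=512)

result = tiled_matvec(weights, x, tile_rows=256, tile_cols=256)
print("matches the untiled product:", np.allclose(result, x @ weights))
```

Because each tile needs only its own slice of the input and weights, no tile depends on another until the final accumulation.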

It is still too early to think about commercialization, the researchers say. While they believe the chip’s efficient hardware implementation with compute-in-memory is a winning combination, widespread adoption will still depend on further improving its energy-efficiency benchmarks.

“We’re continuing working on integrating learning rules,” Cauwenberghs reports, “so future versions will be able to learn with a chip in the loop with advances in RRAM technology to allow for incremental or product learning on a large scale.” Wan adds that for commercialization to become a reality, RRAM technology has to become more readily available to chip designers.

Payal Dhar (she/they) is a freelance journalist on science, technology, and society. They write about AI, cybersecurity, surveillance, space, online communities, games, and any shiny new technology that catches their eye. You can find and DM Payal on Twitter (@payaldhar).