This article is part of our exclusive IEEE Journal Watch series in partnership with IEEE Xplore.

Our brains may not be big, but they pack a remarkable amount of computing power for their size. For this reason, many researchers have been interested in creating artificial networks that mimic the neural signal processing of the brain. These artificial networks, called spiking neural networks (SNNs), could be used to create intelligent robots, or to better understand the underpinnings of the brain itself.

However, the brain has 100 billion tiny neurons, each connected to 10,000 other neurons via synapses, and these neurons represent information through coordinated patterns of electrical spiking. Mimicking them in hardware on a compact device, while also ensuring that the computation is energy efficient, has proven challenging.

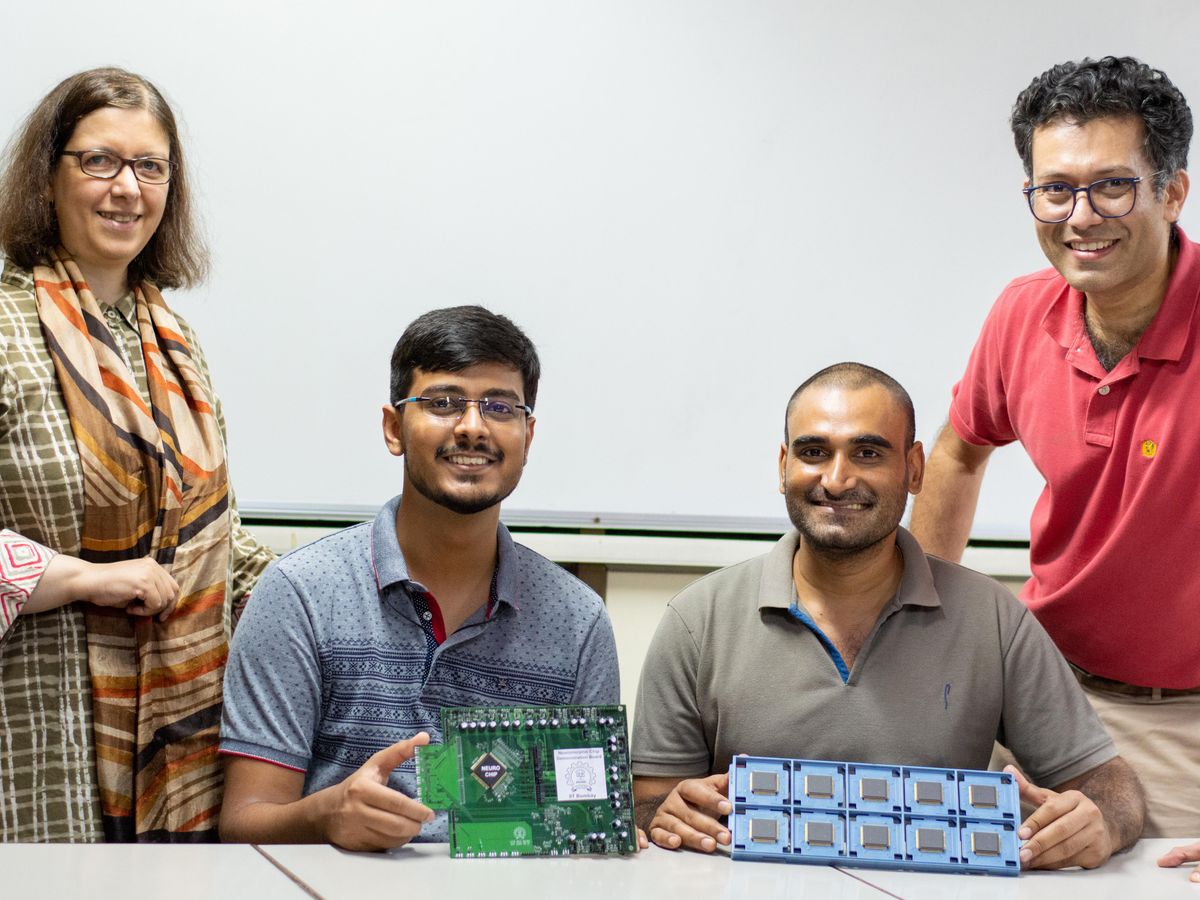

In a recent study, researchers in India developed ultralow-energy artificial neurons that allow SNNs to be arranged more compactly. The results were published on 25 May in IEEE Transactions on Circuits and Systems I: Regular Papers.

Just as neurons in the brain spike when their membrane voltage crosses a threshold, SNNs rely on a network of artificial neurons in which a current source charges a leaky capacitor until its voltage reaches a threshold; the artificial neuron then fires, and the stored charge is reset to zero. However, many existing SNNs require large transistor currents to charge their capacitors, which leads either to high power consumption or to artificial neurons that fire too quickly, unless large, space-hungry capacitors are added to slow the charging.
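The charge, fire, and reset cycle described above is the classic leaky integrate-and-fire (LIF) neuron model. The short Python sketch below simulates one such neuron with forward-Euler integration to show how the size of the charging current sets the firing rate. The function name `simulate_lif` and every component value (a 1 pF capacitor, a 1 TΩ leak resistance, a 0.5 V threshold, and 1 nA versus 1 pA input currents) are hypothetical choices for illustration, not parameters of the IIT Bombay circuit.

```python
"""Minimal leaky integrate-and-fire (LIF) neuron sketch.

All component values below are illustrative assumptions, not figures
from the IIT Bombay paper.
"""


def simulate_lif(i_in, c, r_leak, v_th, dt, t_end):
    """Return the spike times (in seconds) of a leaky integrate-and-fire neuron.

    The capacitor voltage obeys C * dV/dt = I_in - V / R_leak and is
    integrated with a forward-Euler step of size dt. When V reaches v_th,
    the neuron "fires" and V is reset to zero.
    """
    v = 0.0
    spikes = []
    for k in range(int(round(t_end / dt))):
        # Charge delivered by the input current minus charge lost through the leak.
        v += dt * (i_in - v / r_leak) / c
        if v >= v_th:
            spikes.append((k + 1) * dt)  # record the firing time
            v = 0.0  # reset the stored charge to zero
    return spikes


# Hypothetical values: 1 pF capacitor, 1 Tohm leak (tau = R * C = 1 s), 0.5 V threshold.
C, R_LEAK, V_TH = 1e-12, 1e12, 0.5

fast = simulate_lif(1e-9, C, R_LEAK, V_TH, dt=1e-5, t_end=1.0)   # 1 nA input current
slow = simulate_lif(1e-12, C, R_LEAK, V_TH, dt=1e-5, t_end=1.0)  # 1 pA input current
print(len(fast), len(slow), slow[0] if slow else None)
```

With these assumed values, the 1 nA input makes the neuron fire roughly every half millisecond, whereas the 1 pA input produces its first spike only after about 0.69 s (ln 2 times the 1 s leak time constant). This illustrates why a very small charging current can yield slow, brain-like firing from a modest capacitor. If I_in × R_leak never exceeds the threshold, the leak wins and the neuron never fires.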

In their study, Udayan Ganguly, a professor at the Indian Institute of Technology Bombay, and his colleagues created an SNN that charges its capacitors with a new, compact current source: band-to-band-tunneling (BTBT) current.

With BTBT, a quantum tunneling current charges the capacitor with an ultralow current, so less energy is required. Quantum tunneling in this case means that charge carriers can cross the forbidden energy gap (the band gap) in the silicon of the artificial neuron because of their wavelike quantum behavior. Because the current is so small, the BTBT approach also removes the need for large capacitors to store large amounts of charge, paving the way for smaller capacitors on a chip and thus saving space. When the researchers tested their BTBT neuron using 45-nanometer commercial silicon-on-insulator transistor technology, they saw substantial energy and space savings.
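A back-of-the-envelope estimate shows why a smaller charging current permits a smaller capacitor. Ignoring leakage, a constant current I raises the capacitor voltage by I·t/C, so the time to reach the threshold V_th, and the capacitance needed for a chosen firing interval, follow directly. The numbers plugged in below (V_th = 0.5 V, a 10 ms firing interval, 1 pA versus 1 nA) are illustrative assumptions, not figures from the paper.

$$
\begin{aligned}
t_{\text{fire}} &\approx \frac{C\,V_{\text{th}}}{I}
\quad\Longrightarrow\quad
C \approx \frac{I\,t_{\text{fire}}}{V_{\text{th}}} \\[4pt]
I = 1\,\text{pA}:\quad C &\approx \frac{(10^{-12}\,\text{A})(10^{-2}\,\text{s})}{0.5\,\text{V}} = 2\times10^{-14}\,\text{F} = 20\,\text{fF} \\[4pt]
I = 1\,\text{nA}:\quad C &\approx \frac{(10^{-9}\,\text{A})(10^{-2}\,\text{s})}{0.5\,\text{V}} = 2\times10^{-11}\,\text{F} = 20\,\text{pF}
\end{aligned}
$$

Under these assumptions, a thousandfold reduction in charging current allows a thousandfold smaller capacitor for the same firing interval, which is consistent with the area savings reported for the BTBT neuron.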

“In comparison to state-of-art [artificial] neurons implemented in hardware spiking neural networks, we achieved 5,000 times lower energy per spike at a similar area and 10 times lower standby power at a similar area and energy per spike,” explains Ganguly.

The researchers then applied their SNN to a model of speech recognition inspired by the brain’s auditory cortex. Using 20 artificial neurons for initial input coding and 36 additional artificial neurons, the model could effectively recognize spoken words, demonstrating real-world feasibility of the approach.

Notably, this type of technology could be well suited to a range of applications, including voice-activity detection, speech classification, motion-pattern recognition, navigation, biomedical signals, classification, and more. Ganguly notes that while these applications can be run on today's servers and supercomputers, SNNs could bring them to edge devices such as mobile phones and IoT sensors, especially where energy constraints are tight.

He says that while his team has shown their BTBT approach to be useful for specific applications such as keyword detection, they are interested in demonstrating a general-purpose, reusable neurosynaptic core for a wide variety of applications and clients, and they have created a startup company, called Numelo Tech, to drive commercialization. Their goal, he says, “is an extremely low-power neurosynaptic core and developing a real-time on-chip learning mechanism, which are key for autonomous biologically inspired neural networks. This is the holy grail.”

Michelle Hampson is a freelance writer based in Halifax. She frequently contributes to Spectrum's Journal Watch coverage, which highlights newsworthy studies published in IEEE journals.