Robots Will Navigate the Moon With Maps They Make Themselves

Astrobotic’s autonomous navigation will help lunar landers, rovers, and drones find their way on the moon

Neil Armstrong made it sound easy. “Houston, Tranquility Base here. The Eagle has landed,” he said calmly, as if he had just pulled into a parking lot. In fact, the descent of the Apollo 11 lander was nerve-racking. As the Eagle headed to the moon’s surface, Armstrong and his colleague Buzz Aldrin realized it would touch down well past the planned landing site and was heading straight for a field of boulders. Armstrong started looking for a better place to park. Finally, at 150 meters, he leveled off and steered to a smooth spot with about 45 seconds of fuel to spare.

“If he hadn’t been there, who knows what would have happened?” says Andrew Horchler, throwing his hands up. He’s sitting in a glass-walled conference room in a repurposed brick warehouse, part of Pittsburgh’s Robotics Row, a hub for tech startups. This is the headquarters of space robotics company Astrobotic Technology. In the coming decades, human forays to the moon will rely heavily on robotic landers, rovers, and drones. Horchler leads a team whose aim is ensuring those robotic vessels—including Astrobotic’s own Peregrine lander—can perform at least as well as Armstrong did.

Astrobotic’s precision-navigation technology will let both uncrewed and crewed landers touch down exactly where they should, so a future Armstrong won’t have to strong-arm her landing vessel’s controls. Once they’re safely on the surface, robots like Astrobotic’s will explore the moon’s geology, scout out sites for future lunar bases, and carry equipment and material destined for those bases, Horchler says. Eventually, rovers will help mine for minerals and water frozen deep in craters and at the poles.

Astrobotic was founded in 2007 by roboticists at Carnegie Mellon University to compete for the Google Lunar X Prize, which challenged teams to put a robotic spacecraft on the moon. The company pulled out of the competition in 2016, but its mission has continued to evolve. It now has a 20-person staff and contracts with a dozen organizations to deliver payloads to the moon, at US $1.2 million per kilogram, which the company says is the lowest in the industry. Late last year, Astrobotic was one of nine companies that NASA chose to carry payloads to the moon for its 10-year, $2.6 billion Commercial Lunar Payload Services (CLPS) program. The space agency announced the first round of CLPS contracts in late May, with Astrobotic receiving $79.5 million to deliver its payloads by July 2021.

Meanwhile, China, India, and Israel have all launched uncrewed lunar landers or plan to do so soon. The moon will probably be a much busier place by the 60th anniversary of Apollo 11, in 2029.

The moon’s allure is universal, says John Horack, an aerospace engineer at Ohio State University. “The moon is just hanging in the sky, beckoning to us. That beckoning doesn’t know language or culture barriers. It’s not surprising to see so many thinking about how to get to the moon.”

On the moon, there is no GPS, no compass-enabling magnetic field, and no high-resolution map that a lunar craft could use to work out where it is and where it’s going. Any craft will also be limited in the computing, power, and sensors it can carry. Navigating on the moon is more like the wayfinding of the ancient Polynesians, who studied the stars and ocean currents to track their boats’ trajectory, location, and direction.

A spacecraft’s wayfinders are inertial measurement units that use gyroscopes and accelerometers to calculate attitude, velocity, and direction from a fixed starting point. These systems extrapolate from previous estimates, so errors accumulate over time. “Your knowledge of where you are gets fuzzier and fuzzier as you fly forward,” Horchler says. “Our system collapses that fuzziness down to a known point.”
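The drift Horchler describes can be illustrated with a toy one-dimensional model. This is a minimal sketch, not Astrobotic’s code: it assumes motion along a single axis, a constant accelerometer bias, and a scalar Kalman-style update standing in for a terrain-relative position fix. The helper names `dead_reckon` and `fuse_fix` are hypothetical.

```python
"""Toy 1D model: inertial dead reckoning drifts; an absolute fix shrinks the uncertainty.

Assumptions (illustrative only): one axis of motion, a constant accelerometer
bias, and a scalar Kalman-style update standing in for a terrain-relative fix.
"""


def dead_reckon(true_accel, bias, dt, steps):
    """Double-integrate a biased accelerometer; return (true_positions, estimated_positions)."""
    v_true = v_est = 0.0
    x_true = x_est = 0.0
    true_path, est_path = [], []
    for _ in range(steps):
        v_true += true_accel * dt
        v_est += (true_accel + bias) * dt  # the bias is integrated along with the real signal
        x_true += v_true * dt
        x_est += v_est * dt  # ...and integrated again, so position error grows roughly with t**2
        true_path.append(x_true)
        est_path.append(x_est)
    return true_path, est_path


def fuse_fix(x_est, var_est, z_fix, var_fix):
    """Blend a drifting estimate (variance var_est) with an absolute fix (variance var_fix)."""
    gain = var_est / (var_est + var_fix)  # trust the fix more when the estimate is fuzzier
    x_new = x_est + gain * (z_fix - x_est)
    var_new = (1.0 - gain) * var_est  # smaller than both input variances
    return x_new, var_new


if __name__ == "__main__":
    truth, estimate = dead_reckon(true_accel=0.0, bias=0.01, dt=1.0, steps=100)
    print(f"error after 10 s: {estimate[9] - truth[9]:.2f} m")
    print(f"error after 100 s: {estimate[-1] - truth[-1]:.2f} m")
    x, var = fuse_fix(estimate[-1], var_est=50.0**2, z_fix=0.0, var_fix=10.0**2)
    print(f"after fix: {x:.2f} m, sigma {var**0.5:.1f} m")
```

In this toy run, a constant 0.01 m/s² bias leaves the estimate about 50 meters off after 100 seconds, and a single fix with an assumed 10-meter uncertainty pulls the estimate back to within about 2 meters of the truth, with a spread of just under 10 meters.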

A conventional guidance system can put a vessel down within an ellipse that’s several kilometers long, but Astrobotic’s system will land a craft within 100 meters of its target. This could allow touchdowns near minable craters, at the heavily shadowed icy poles, or on a landing pad next to a moon base. “It’s one thing to land once at a site, a whole other thing to land repeatedly with precision,” says Horchler.

Astrobotic’s terrain-relative navigation (TRN) sensor contains all the hardware and software needed for smart navigation. It uses 32-bit processors that have worked well on other missions and FPGA hardware acceleration for low-level computer-vision processing. The processors and FPGAs are all radiation hardened. The brick-size unit can be bolted to any spacecraft. The sensor will take a several-megapixel image of the lunar surface every second or so as the lander approaches. Algorithms akin to those for facial recognition will spot unique features in the images, comparing them with stored maps to calculate lunar coordinates and orientation.
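Once features in a descent image have been matched to features in the stored map, position and heading follow from a least-squares fit. The sketch below is a simplified illustration under stated assumptions: the feature matching is already done, the camera looks straight down over flat terrain so image points can be scaled to meters, and only a 2D rotation and translation are unknown. A real TRN system solves for full 3D position and attitude, and `estimate_pose_2d` is a hypothetical name.

```python
import math


def estimate_pose_2d(image_pts, map_pts):
    """Least-squares rotation and translation that carry image_pts onto map_pts.

    image_pts[i] and map_pts[i] are the same terrain feature, both in meters.
    Returns (theta_rad, tx, ty) so that map ~= R(theta) @ image + (tx, ty).
    """
    if len(image_pts) != len(map_pts) or len(image_pts) < 2:
        raise ValueError("need at least two matched point pairs")
    n = len(image_pts)
    # Centroids: subtracting them separates the rotation from the translation.
    pcx = sum(p[0] for p in image_pts) / n
    pcy = sum(p[1] for p in image_pts) / n
    qcx = sum(q[0] for q in map_pts) / n
    qcy = sum(q[1] for q in map_pts) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(image_pts, map_pts):
        px, py, qx, qy = px - pcx, py - pcy, qx - qcx, qy - qcy
        dot += px * qx + py * qy    # cosine-weighted agreement between the point sets
        cross += px * qy - py * qx  # sine-weighted agreement (counterclockwise turn)
    theta = math.atan2(cross, dot)  # the rotation angle that best aligns the sets
    c, s = math.cos(theta), math.sin(theta)
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, tx, ty


if __name__ == "__main__":
    true_theta = math.radians(30.0)
    features = [(0.0, 0.0), (10.0, 0.0), (0.0, 5.0), (7.0, 3.0)]
    in_map = [
        (math.cos(true_theta) * x - math.sin(true_theta) * y + 100.0,
         math.sin(true_theta) * x + math.cos(true_theta) * y - 50.0)
        for x, y in features
    ]
    theta, tx, ty = estimate_pose_2d(features, in_map)
    print(f"heading {math.degrees(theta):.1f} deg, offset ({tx:.1f}, {ty:.1f}) m")
```

The demo recovers the 30-degree rotation and the (100, -50) meter offset it was built with. With noisy or mismatched features, a fit like this is commonly wrapped in an outlier-rejection scheme such as RANSAC.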

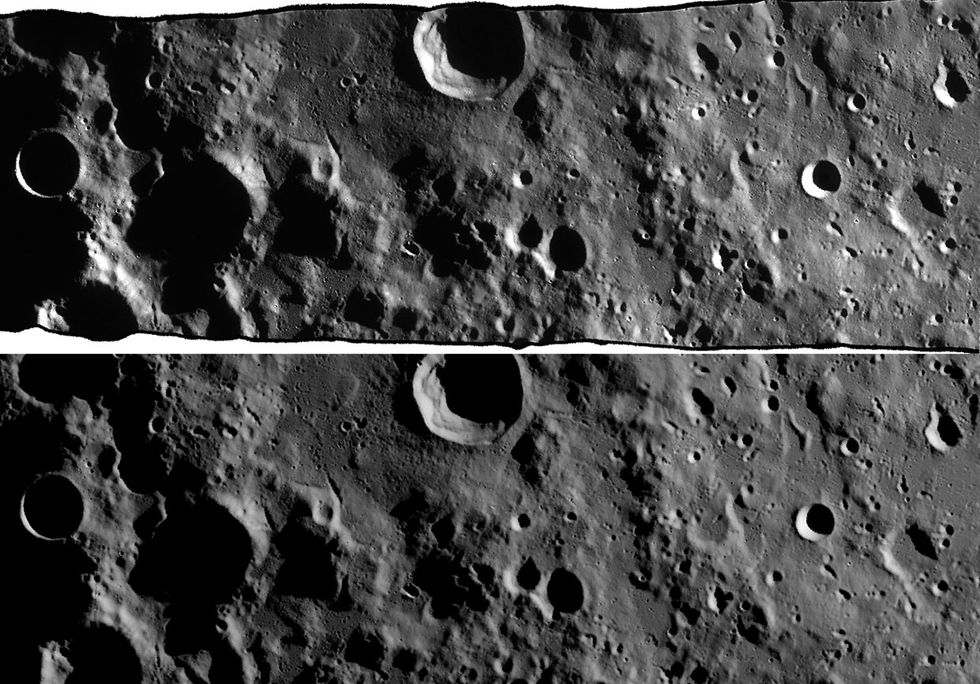

Those stored maps are a computing marvel. Images taken by NASA’s Lunar Reconnaissance Orbiter (LRO), which has been mapping the moon since 2009, have very different perspectives and shadows from what the lander will see as it descends. This is especially true at the poles, where the angle of the sun changes the lighting dramatically.

So software wizards at Astrobotic are creating synthetic maps. Their software starts with elevation models based on LRO data. It fuses those terrain models with data on the relative positions of the sun, moon, and Earth; the approximate location of the lander; and the texture and reflectiveness of the lunar soil. Finally, a physics-based ray-tracing system, similar to what’s used in animated films to create synthetic imagery, puts everything together.
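A heavily simplified version of such a rendering step fits in a few dozen lines. This sketch is not Astrobotic’s renderer; it assumes a Lambertian surface (real lunar soil scatters light differently and is usually described with photometric models such as Hapke’s), a single distant sun given by azimuth and elevation, and hard shadows found by marching a ray from each terrain cell toward the sun. The name `render_dem` is hypothetical.

```python
import math


def render_dem(dem, cell_size, sun_azimuth_deg, sun_elevation_deg, albedo=0.12):
    """Render an elevation grid (meters, row 0 = north edge) as brightness values.

    Assumptions: Lambertian reflectance, one distant sun, hard shadows.
    Azimuth is measured clockwise from north; columns run west to east.
    """
    rows, cols = len(dem), len(dem[0])
    if rows < 2 or cols < 2:
        raise ValueError("need at least a 2 x 2 grid")
    az = math.radians(sun_azimuth_deg)
    el = math.radians(sun_elevation_deg)
    image = [[0.0] * cols for _ in range(rows)]
    if el <= 0:
        return image  # sun at or below the horizon: everything is dark
    # Unit vector toward the sun in (east, north, up) coordinates.
    sun = (math.cos(el) * math.sin(az), math.cos(el) * math.cos(az), math.sin(el))
    rise_per_step = cell_size * math.tan(el)  # ray height gained per cell marched
    for r in range(rows):
        for c in range(cols):
            # Surface slopes from neighboring cells (one-sided at the borders).
            c0, c1 = max(c - 1, 0), min(c + 1, cols - 1)
            r0, r1 = max(r - 1, 0), min(r + 1, rows - 1)
            dz_east = (dem[r][c1] - dem[r][c0]) / ((c1 - c0) * cell_size)
            dz_north = (dem[r0][c] - dem[r1][c]) / ((r1 - r0) * cell_size)
            nx, ny, nz = -dz_east, -dz_north, 1.0  # upward surface normal
            norm = math.sqrt(nx * nx + ny * ny + nz * nz)
            lambert = max(0.0, (nx * sun[0] + ny * sun[1] + nz * sun[2]) / norm)
            if lambert == 0.0:
                continue  # facing away from the sun
            # March toward the sun; any terrain above the ray casts a shadow here.
            k, shadowed = 1, False
            while True:
                rr = int(round(r - k * math.cos(az)))  # north is toward row 0
                cc = int(round(c + k * math.sin(az)))
                if not (0 <= rr < rows and 0 <= cc < cols):
                    break
                if dem[rr][cc] > dem[r][c] + k * rise_per_step:
                    shadowed = True
                    break
                k += 1
            if not shadowed:
                image[r][c] = albedo * lambert
    return image
```

Rendering the same elevation model twice with different sun elevations shows why synthetic maps matter at the poles: a low sun throws long shadows that hide whole regions visible under a higher sun.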

Horchler pulls up two images of a 50-by-200-kilometer patch near the moon’s south pole. One is a photo taken by the LRO. The other is a digitally rendered version created by the Astrobotic software. I can’t tell them apart. Future TRN systems may be able to build high-fidelity maps on the fly as the lander descends, but that’s impossible with current onboard computing power, Horchler says.

To validate the TRN’s algorithms, Astrobotic has run tests in the Mojave Desert. A 2014 video shows the TRN sensor mounted on a vertical-takeoff-and-landing vehicle made by Masten Space Systems, another company chosen for NASA’s CLPS program. Astrobotic engineers had mapped the scrubby area beforehand, including a potential landing site littered with sandbags to mimic large rocks. In the video, the vehicle takes off without a programmed destination. The navigation sensor scans the ground, matching what it sees to the stored maps. A separate hazard-detection sensor uses lidar and stereo cameras to map shapes and elevation on the rocky terrain and track the lander’s distance to the ground. The craft lands safely, avoiding the sandbags.
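Hazard detection of this kind is often framed as checking each candidate landing footprint in a lidar elevation map for excessive slope and roughness. The sketch below is a generic version of that idea, not Astrobotic’s algorithm: it fits a plane to each square window of an elevation grid, treats the plane’s tilt as slope and the largest deviation from it (a rock, or a sandbag) as roughness, and returns the smoothest window that passes both limits. The function names and thresholds are hypothetical.

```python
import math


def window_metrics(dem, r0, c0, size, cell_size):
    """Fit z = a*x + b*y + c to one size x size window; return (slope_deg, roughness_m)."""
    half = (size - 1) / 2.0
    sxx = syy = sxz = syz = sz = 0.0
    for i in range(size):
        for j in range(size):
            x, y = (j - half) * cell_size, (i - half) * cell_size
            z = dem[r0 + i][c0 + j]
            sxx += x * x
            syy += y * y
            sxz += x * z
            syz += y * z
            sz += z
    # On a regular grid centered at (0, 0) the least-squares equations decouple.
    a, b, c = sxz / sxx, syz / syy, sz / (size * size)
    slope_deg = math.degrees(math.atan(math.hypot(a, b)))
    roughness = max(
        abs(dem[r0 + i][c0 + j] - (a * (j - half) * cell_size + b * (i - half) * cell_size + c))
        for i in range(size)
        for j in range(size)
    )
    return slope_deg, roughness


def find_safe_site(dem, cell_size, size, max_slope_deg, max_roughness_m):
    """Return (row, col) of the top-left cell of the best safe window, or None."""
    if size < 2:
        raise ValueError("footprint must span at least 2 cells")
    best, best_score = None, None
    for r0 in range(len(dem) - size + 1):
        for c0 in range(len(dem[0]) - size + 1):
            slope, rough = window_metrics(dem, r0, c0, size, cell_size)
            if slope > max_slope_deg or rough > max_roughness_m:
                continue  # too steep, or something sticks out of the fitted plane
            score = (rough, slope)  # prefer the smoothest, then the flattest
            if best_score is None or score < best_score:
                best, best_score = (r0, c0), score
    return best


if __name__ == "__main__":
    field = [[0.0] * 6 for _ in range(6)]
    field[1][1] = 0.5  # a half-meter "sandbag"
    print(find_safe_site(field, cell_size=1.0, size=3, max_slope_deg=10.0, max_roughness_m=0.2))
```

In the demo, the half-meter bump disqualifies every 3-by-3 window that contains it, so the search settles on the first flat window clear of it.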

Astrobotic expects its first CLPS mission to launch in July 2021, aboard a United Launch Alliance Atlas V rocket. The 28 payloads aboard the stout Peregrine lander will include NASA scientific instruments, another scientific instrument from the Mexican Space Agency, rovers from startups in Chile and Japan, and personal mementos from paying customers.

In a space that Astrobotic employees call the Tiger’s Den, a large plush tiger keeps an eye on aerospace engineer Jeremy Hardy, who looks like he’s having too much fun. He’s flying a virtual drone onscreen through a landscape of trees and rocks. When he switches to a drone’s-eye view, the landscape fills with green dots, each a unique feature that the drone is tracking, like a corner or an edge.
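Corners make good landmarks because they can be pinned down in both directions, unlike a point on a straight edge. The sketch below uses the classic Harris corner detector as one common way to find such points; the article does not say which detector AstroNav uses, and `harris_corners` and its parameters are hypothetical. It scores each pixel by how sharply brightness changes in two directions at once, so flat patches and straight edges score low and corners score high.

```python
def harris_corners(img, k=0.04, threshold=0.5):
    """Return (row, col) pixels whose Harris response is a local maximum above threshold.

    img is a list of rows of grayscale values. Gradients use central differences,
    and the structure tensor is summed over a 3 x 3 window.
    """
    rows, cols = len(img), len(img[0])
    ix = [[0.0] * cols for _ in range(rows)]
    iy = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            ix[r][c] = (img[r][c + 1] - img[r][c - 1]) / 2.0
            iy[r][c] = (img[r + 1][c] - img[r - 1][c]) / 2.0
    resp = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            a = b = cxy = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    gx, gy = ix[r + dr][c + dc], iy[r + dr][c + dc]
                    a += gx * gx
                    b += gy * gy
                    cxy += gx * gy
            # Large only when brightness changes strongly in two directions.
            resp[r][c] = (a * b - cxy * cxy) - k * (a + b) ** 2
    corners = []
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            v = resp[r][c]
            # Keep only the strongest response in each 3 x 3 neighborhood.
            if v > threshold and all(
                v >= resp[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1)
            ):
                corners.append((r, c))
    return corners


if __name__ == "__main__":
    # A bright quadrant in the lower right has exactly one interior corner.
    quadrant = [[1.0 if (r >= 5 and c >= 5) else 0.0 for c in range(10)] for r in range(10)]
    print(harris_corners(quadrant))
```

Along the quadrant’s straight edges the response is negative, so only the corner where the two edges meet is reported.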

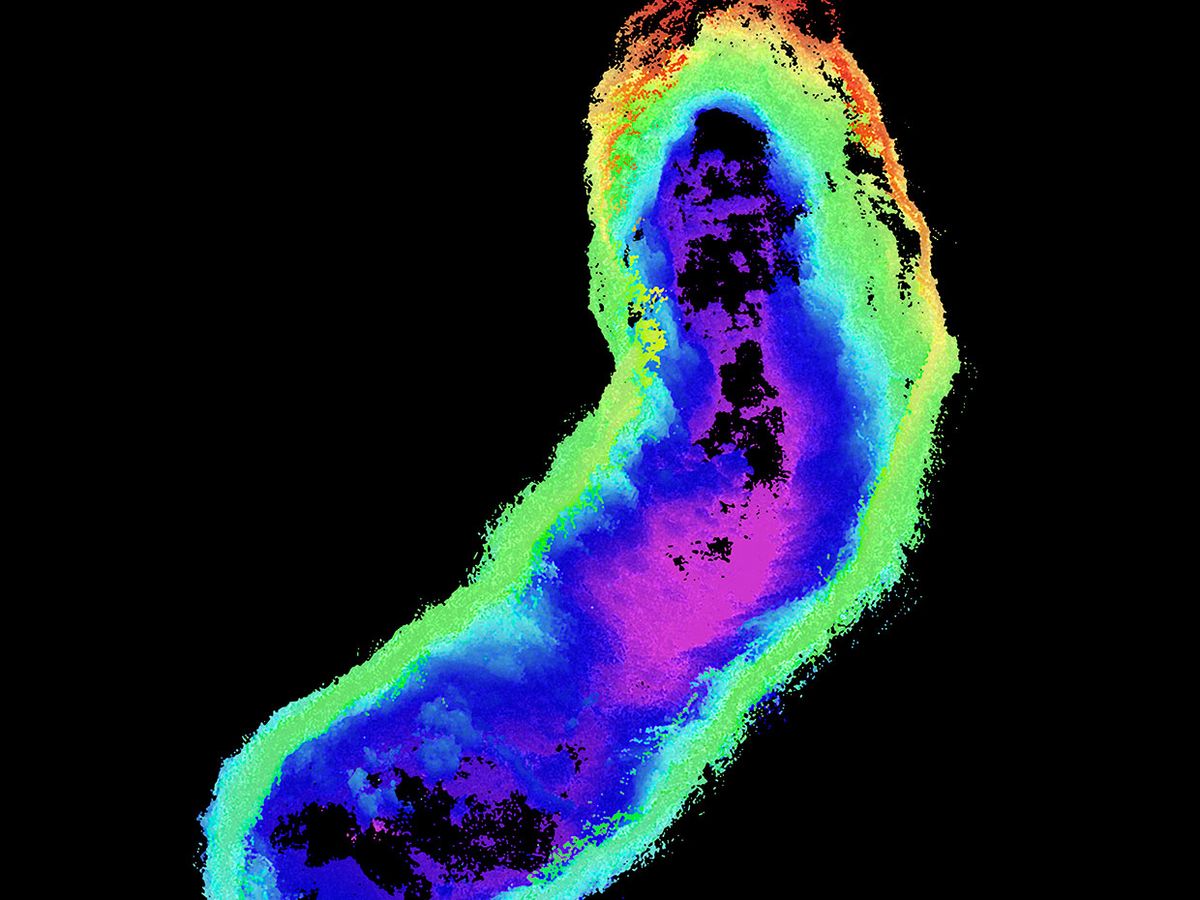

The program Hardy is using is called AstroNav, which will guide propulsion-powered drones as they fly through the moon’s immense lava tubes. These temperature-stable tunnels are believed to be tens of kilometers long and “could fit whole cities within them,” Horchler says. The drones will map the tunnels as they fly, coming back out to recharge and send images to a lunar station or to Earth.

Hardy’s drone is flying in uncharted territory. AstroNav uses a simultaneous localization and mapping (SLAM) algorithm, a heavyweight technology also used by self-driving cars and office delivery robots to build a map of their surroundings and compute their own location within that map. AstroNav blends data from the drone’s inertial measurement units, stereo-vision cameras, and lidar. The software tracks the green-dotted features across many frames to calculate where the drone is.
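Stereo cameras give a SLAM system the depth of each tracked feature. For a rectified stereo pair under the standard pinhole camera model, depth equals focal length times baseline divided by disparity, the horizontal shift of the feature between the left and right images. The sketch below applies that textbook relation; the camera parameters are made-up example values, not AstroNav’s, and `StereoRig` and `triangulate` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StereoRig:
    """Rectified stereo pair; all values are illustrative assumptions."""
    focal_px: float    # focal length in pixels
    baseline_m: float  # distance between the two camera centers, meters
    cx: float          # principal point (optical center), pixels
    cy: float


def triangulate(rig, u_left, v_left, u_right):
    """Return (X, Y, Z) in meters in the left-camera frame for one matched feature."""
    disparity = u_left - u_right  # same feature, shifted horizontally between images
    if disparity <= 0:
        raise ValueError("a point in front of the rig must appear farther left in the right image")
    z = rig.focal_px * rig.baseline_m / disparity  # nearer features shift more
    x = (u_left - rig.cx) * z / rig.focal_px
    y = (v_left - rig.cy) * z / rig.focal_px
    return x, y, z


if __name__ == "__main__":
    rig = StereoRig(focal_px=500.0, baseline_m=0.2, cx=320.0, cy=240.0)
    print(triangulate(rig, u_left=370.0, v_left=240.0, u_right=360.0))
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant features than for nearby ones.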

The company has tested AstroNav-guided hexacopters in caves in West Virginia, in craters in New Mexico, and in Iceland’s Lofthellir lava tube. Similar SLAM techniques could guide autonomous lunar rovers as they explore permanently shadowed regions at the poles.

Astrobotic has plenty of competition. Another CLPS contractor is Draper Laboratory, which helped guide Apollo missions. The lab’s navigation system, also built around image processing and recognition, will take Japanese startup Ispace’s lander to the moon.

Draper’s “special sauce” is software developed for the U.S. Army’s Joint Precision Airdrop System, which delivers supplies via parachute in war zones, says space systems program manager Alan Campbell. Within a box called an aerial guidance unit is a downward-facing camera, motors, and a small computer running Draper’s software. The software determines the parachute’s location by comparing terrain features in the camera’s images with commercial satellite images to land the parachute within 50 meters of its target.

The unit also uses Doppler lidar, which detects hazards and measures relative velocity. “When you’re higher up, you can compare images to maps,” says Campbell. At lower altitudes, a different method tracks features and how they’re moving. “Lidar will give you a finer-grain map of hazards.”
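A Doppler lidar infers velocity from the frequency shift of light reflected off the ground: the closing speed along a beam is the laser wavelength times the shift divided by two, the factor of two coming from the round trip. With three non-coplanar beams, the full 3D velocity can be solved for. The sketch below is a generic illustration, not Draper’s implementation; it assumes a stationary surface, exactly three beams, and illustrative numbers, and the function names are hypothetical.

```python
import math


def los_speed(wavelength_m, doppler_shift_hz):
    """Closing speed along one beam in m/s (positive when the range is shrinking)."""
    return wavelength_m * doppler_shift_hz / 2.0  # /2 because the light travels out and back


def _det3(m):
    """Determinant of a 3 x 3 matrix given as rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def velocity_from_beams(beam_dirs, closing_speeds):
    """Solve beam_dirs[i] . V = closing_speeds[i] for the 3D velocity V (Cramer's rule)."""
    d = _det3(beam_dirs)
    if abs(d) < 1e-12:
        raise ValueError("beam directions must not be coplanar")
    v = []
    for k in range(3):
        m = [list(row) for row in beam_dirs]
        for i in range(3):
            m[i][k] = closing_speeds[i]  # replace column k with the measurements
        v.append(_det3(m) / d)
    return tuple(v)


if __name__ == "__main__":
    tilt = math.radians(20.0)  # each beam tilted 20 degrees off straight down
    beams = [(math.sin(tilt) * math.cos(a), math.sin(tilt) * math.sin(a), -math.cos(tilt))
             for a in (0.0, 2 * math.pi / 3, 4 * math.pi / 3)]
    true_v = (1.0, -2.0, -30.0)  # east, north, up: descending at 30 m/s
    speeds = [sum(u * w for u, w in zip(b, true_v)) for b in beams]
    print(velocity_from_beams(beams, speeds))
```

A lander descending straight down sees the same closing speed on all three beams; horizontal drift shows up as differences between them.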

Draper’s long experience dating back to Apollo gives the lab an edge, Campbell adds. “We’ve landed on the moon before, and I don’t think our competitors can say that.”

Other nations with lunar aspirations are also relying on autonomous navigation. China’s Chang’e 4, for example, became the first craft to make a soft landing on the far side of the moon, in early January. In its landing video, the craft hovers for a few seconds above the surface. “That indicates it has lidar or [a] camera and is taking an image of the field to make sure it’s landing on a safe spot,” says Campbell. “It’s definitely an autonomous system.”

Israel’s lunar spacecraft Beresheet was also expected to make a fully automated touchdown, in April. According to reports, it relied on image-processing software running on a computer about as powerful as a smartphone. However, moments before its scheduled touchdown, an apparent engine failure sent it crashing into the lunar surface.

In the race to the moon, there will be no one winner, Ohio State’s Horack says. “We need a fair number of successful organizations from around the world working on this.”

Astrobotic is also looking further out. Its AstroNav could be used on other cosmic bodies for which there are no high-resolution maps, like the moons of Jupiter and Saturn. The challenge will be scaling back the software’s appetite for computing power. Computing in space lags far behind computing on Earth, Horchler notes. Everything needs to be radiation tolerant and designed for a thermally challenging environment. “It tends to be very custom,” he says. “You don’t have a new family of processors every two years. An Apple Watch has more computing power than a lot of spacecraft out there.”

The moon will be a crucial test-bed for precision landing and navigation. “A lot of the technology that it takes to land on the moon is similar to what it takes to land on Mars or icy moons like Europa,” Horchler says. “It’s much easier to prove things out at our nearest neighbor than at bodies halfway across the solar system.”

This article appears in the July 2019 print issue as “Turn Left at Tranquility Base.”