Why Rat-Brained Robots Are So Good at Navigating Unfamiliar Terrain

Running algorithms that mimic a rat’s navigation neurons, heavy machines will soon plumb Australia’s underground mines

If you take a common brown rat and drop it into a lab maze or a subway tunnel, it will immediately begin to explore its surroundings, sniffing around the edges, brushing its whiskers against surfaces, peering around corners and obstacles. After a while, it will return to where it started, and from then on, it will treat the explored terrain as familiar.

Roboticists have long dreamed of giving their creations similar navigation skills. To be useful in our environments, robots must be able to find their way around on their own. Some are already learning to do that in homes, offices, warehouses, hospitals, hotels, and, in the case of self-driving cars, entire cities. Despite the progress, though, these robotic platforms still struggle to operate reliably under even mildly challenging conditions. Self-driving vehicles, for example, may come equipped with sophisticated sensors and detailed maps of the road ahead, and yet human drivers still have to take control in heavy rain or snow, or at night.

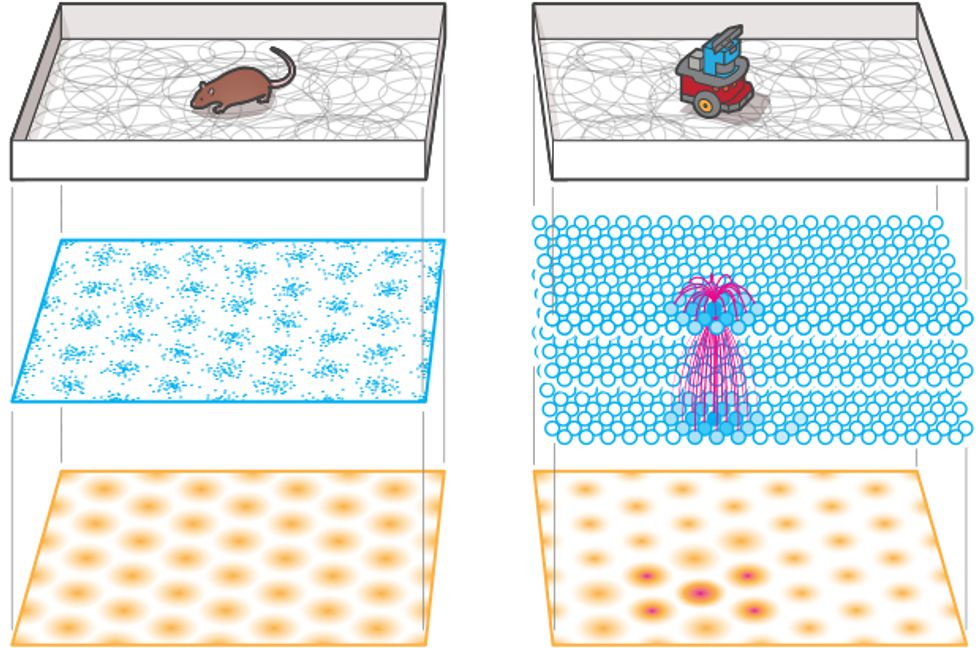

The lowly brown rat, by contrast, is a nimble navigator that has no problem finding its way around, under, over, and through the toughest spaces. When a rat explores an unfamiliar territory, specialized neurons in its 2-gram brain fire, or spike, in response to landmarks or boundaries. Other neurons spike at regular distances (once every 20 centimeters, every meter, and so on), creating a kind of mental representation of space. Yet other neurons act like an internal compass, recording the direction in which the animal's head is turned. Taken together, this neural activity allows the rat to remember where it's been and how it got there. Whenever it follows the same path, the spikes strengthen, making the rat's navigation more robust.

So why can't a robot be more like a rat?

The answer is, it can. At the Queensland University of Technology (QUT), in Brisbane, Australia, Michael Milford and his collaborators have spent the last 14 years honing a robot navigation system modeled on the brains of rats. This biologically inspired approach, they hope, could help robots navigate dynamic environments without requiring advanced, costly sensors and computationally intensive algorithms.

An earlier version of their system allowed an indoor package-delivery bot to operate autonomously for two weeks in a lab. During that period, it made more than 1,100 mock deliveries, traveled a total of 40 kilometers, and recharged itself 23 times. Another version successfully mapped an entire suburb of Brisbane, using only the imagery captured by the camera on a MacBook. Now Milford's group is translating its rat-brain algorithms into a rugged navigation system for the heavy-equipment maker Caterpillar, which plans to deploy it on a fleet of underground mining vehicles.

Milford, who's 35 and looks about 10 years younger, began investigating brain-based navigation in 2003, when he was a Ph.D. student at the University of Queensland working with roboticist Gordon Wyeth, who's now dean of science and engineering at QUT.

At the time, one of the big pushes in robotics was the “kidnapped robot” problem: If you take a robot and move it somewhere else, can it figure out where it is? One way to solve the problem is SLAM, which stands for simultaneous localization and mapping. While running a SLAM algorithm, a robot can explore strange terrain, building a map of its surroundings while at the same time positioning, or localizing, itself within that map.

Wyeth had long been interested in brain-inspired computing, starting with work on neural networks in the late 1980s. And so he and Milford decided to work on a version of SLAM that took its cues from the rat's neural circuitry. They called it RatSLAM.

There already were numerous flavors of SLAM, and today they number in the dozens, each with its own advantages and drawbacks. What they all have in common is that they rely on two separate streams of data. One relates to what the environment looks like, and robots gather this kind of data using sensors as varied as sonars, cameras, and laser scanners. The second stream concerns the robot itself, or more specifically, its speed and orientation; robots derive that data from sensors like rotary encoders on their wheels or an inertial measurement unit (IMU) on their bodies. A SLAM algorithm looks at the environmental data and tries to identify notable landmarks, adding these to its map. As the robot moves, it monitors its speed and direction and looks for those landmarks; if the robot recognizes a landmark, it uses the landmark's position to refine its own location on the map.
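To make the two data streams concrete, here is a minimal Python sketch of one SLAM-style update. It is not any particular published algorithm: the function names, the dictionary standing in for a map, and the fixed correction gain are all illustrative assumptions. Real systems weigh each correction by its estimated uncertainty and refine the landmark positions as well.

```python
import math

def dead_reckon(pose, speed, turn_rate, dt):
    """Advance an (x, y, heading) pose using only self-motion data."""
    x, y, heading = pose
    heading = (heading + turn_rate * dt) % (2 * math.pi)
    x += speed * dt * math.cos(heading)
    y += speed * dt * math.sin(heading)
    return (x, y, heading)

def correct_with_landmark(pose, landmark_xy, observed_offset, gain=0.5):
    """Nudge the position estimate toward the one implied by a mapped landmark.

    observed_offset is the landmark's position relative to the robot, expressed
    in map axes. That is a simplification (an assumption of this sketch); real
    sensors report range and bearing.
    """
    x, y, heading = pose
    implied_x = landmark_xy[0] - observed_offset[0]
    implied_y = landmark_xy[1] - observed_offset[1]
    return (x + gain * (implied_x - x), y + gain * (implied_y - y), heading)

def slam_step(pose, landmarks, speed, turn_rate, dt, sightings):
    """One update: predict from self-motion, then map or correct per sighting."""
    pose = dead_reckon(pose, speed, turn_rate, dt)
    for name, offset in sightings.items():
        if name in landmarks:
            # Known landmark: use it to refine the robot's own position.
            pose = correct_with_landmark(pose, landmarks[name], offset)
        else:
            # New landmark: add it to the map at its estimated position.
            landmarks[name] = (pose[0] + offset[0], pose[1] + offset[1])
    return pose

landmarks = {}
pose = slam_step((0.0, 0.0, 0.0), landmarks, 1.0, 0.0, 1.0, {"door": (2.0, 0.0)})
# The wheel encoders now overreport speed (1.2 instead of 1.0 m/s).
pose = slam_step(pose, landmarks, 1.2, 0.0, 1.0, {"door": (1.0, 0.0)})
```

In the second step, dead reckoning alone would put the robot at 2.2 meters, while the hypothetical "door" landmark implies 2.0 meters. With a gain of 0.5 the estimate settles about halfway between, near 2.1 meters.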

But whereas most implementations of SLAM aim for highly detailed, static maps, Milford and Wyeth were more interested in how to navigate through an environment that's in constant flux. Their aim wasn't to create maps built with costly lidars and high-powered computers—they wanted their system to make sense of space the way animals do.

“Rats don't build maps,” Wyeth says. “They have other ways of remembering where they are.” Those ways include neurons called place cells and head-direction cells, which respectively let the rat identify landmarks and gauge its direction. Like other neurons, these cells are densely interconnected and work by adjusting their spiking patterns in response to different stimuli. To mimic this structure and behavior in software, Milford adopted a type of artificial neural network called an attractor network. These neural nets consist of hundreds to thousands of interconnected nodes that, like groups of neurons, respond to an input by producing a specific spiking pattern, known as an attractor state. Computational neuroscientists use attractor networks to study neurons associated with memory and motor behavior. Milford and Wyeth wanted to use them to power RatSLAM.
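A toy example may help show what settling into an attractor state means. The Python sketch below is an assumption-laden illustration, not RatSLAM's actual network: it arranges 36 hypothetical head-direction cells in a ring, lets each cell excite its neighbors, and uses squaring plus normalization as a crude stand-in for inhibition. The cell count, the weight profile, and the update rule are all choices made for this sketch.

```python
import math

def ring_weights(n, sigma=2.0):
    """Excitatory weight as a function of ring distance between two cells."""
    return [math.exp(-(min(d, n - d) ** 2) / (2 * sigma ** 2)) for d in range(n)]

def settle(activity, weights, steps=20):
    """Iterate the network until activity collapses into a single bump."""
    n = len(activity)
    for _ in range(steps):
        # Each cell sums excitation from every cell, weighted by ring distance.
        excited = [
            sum(activity[j] * weights[(i - j) % n] for j in range(n))
            for i in range(n)
        ]
        # Squaring favors strongly active cells; normalizing keeps the total
        # activity fixed, so weaker bumps are starved out (toy inhibition).
        sharpened = [e * e for e in excited]
        total = sum(sharpened)
        activity = [s / total for s in sharpened]
    return activity

# Two conflicting cues: a strong one at cell 10 and a weaker one at cell 25.
initial = [0.0] * 36
initial[10] = 1.0
initial[25] = 0.6
final = settle(initial, ring_weights(36))
heading_cell = final.index(max(final))  # the cell at the center of the bump
```

Starting from two conflicting cues, the ring ends up with one smooth bump of activity centered on the stronger cue, and the weaker cue fades away. That stable bump is the toy network's attractor state, and with 36 cells each one stands for a 10-degree slice of heading.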

They spent months working on the software, and then they loaded it into a Pioneer robot, a mobile platform popular among roboticists. Their rat-brained bot was alive.

But it was a failure. When they let it run in a 2-by-2-meter arena, Milford says, “it got lost even in that simple environment.”

Milford and Wyeth realized that RatSLAM didn't have enough information with which to reduce errors as it made its decisions. Like other SLAM algorithms, it doesn't try to make exact, definite calculations about where things are on the map it's generating; instead, it relies on approximations and probabilities as a way of incorporating uncertainties—conflicting sensor readings, for example—that inevitably crop up. If you don't take that into account, your robot ends up lost.

That seemed to be the problem with RatSLAM. In some cases, the robot would recognize a landmark and be able to refine its position, but other times the data was too ambiguous. Before long, the accrued error exceeded 2 meters: The robot thought it was outside the arena!

In other words, their rat-brain model was too crude. It needed better neural circuitry to be able to abstract more information about the world.

“So we engineered a new type of neuron, which we called a ‘pose’ cell,” Milford says. The pose cell didn't just tell the robot its location or its orientation; it did both at the same time. Now, when the robot identified a landmark it had seen before, it could more precisely encode its place on the map and keep errors in check.

Again, Milford placed the robot inside the 2-by-2-meter arena. “Suddenly, our robot could navigate quite well,” he recalls.

Interestingly, not long after the researchers devised these artificial cells, neuroscientists in Norway announced the discovery of grid cells, which are neurons whose spiking activity forms regular geometric patterns and tells the animal its relative position within a certain area. [For more on the neuroscience of rats, see "AI Designers Find Inspiration in Rat Brains."]

“Our pose cells weren't exactly grid cells, but they had similar features,” Milford says. “That was rather gratifying.”

The robot tests moved to bigger arenas with greater complexity. “We did a whole floor, then multiple floors in the building,” Wyeth recalls. “Then I told Michael, ‘Let's do a whole suburb.’ I thought he would kill me.”

Milford loaded the RatSLAM software into a MacBook and taped it on the roof of his red 1994 Mazda Astina. To get a stream of data about the environment, he used the laptop's camera, setting it to snap a photo of the street ahead of the car several times per second. To get a stream of data about the robot itself (in this case, his car), he found a creative solution. Instead of attaching encoders to the wheels or using an IMU or GPS, he used simple image-processing techniques. By tracking and comparing pixels across sequences of photos from the MacBook, his SLAM algorithm could calculate the vehicle's speed as well as direction changes.
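One simple way to pull motion out of consecutive photos is to collapse each frame into a one-dimensional brightness profile and find the sideways shift that best lines up two profiles. The Python sketch below illustrates that general idea under stated assumptions; it is not presented as Milford's exact method. The function names are hypothetical, and turning a column shift into degrees, or the leftover mismatch into a speed, would need calibration constants that the article does not give.

```python
def column_profile(image):
    """Collapse a grayscale image (a list of rows) into mean column brightness."""
    rows = len(image)
    return [sum(row[c] for row in image) / rows for c in range(len(image[0]))]

def best_shift(prev, curr, max_shift=5):
    """Find the column shift s that best aligns curr[i + s] with prev[i].

    Returns (shift, residual), where residual is the mean absolute difference
    left over at the best alignment. Assumes max_shift is smaller than the
    profile length, so the two profiles always overlap.
    """
    n = len(prev)
    best = (0, float("inf"))
    for s in range(-max_shift, max_shift + 1):
        pairs = [(prev[i], curr[i + s]) for i in range(n) if 0 <= i + s < n]
        diff = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if diff < best[1]:
            best = (s, diff)
    return best

# A bright feature moves two columns to the right between frames.
prev = [0, 0, 10, 50, 10, 0, 0, 0, 0, 0]
curr = [0, 0, 0, 0, 10, 50, 10, 0, 0, 0]
shift, residual = best_shift(prev, curr)  # shift == 2, residual == 0.0
```

The shift tracks how far the scene slid sideways, which relates to how much the vehicle turned. The residual measures how much the scene changed apart from that slide, and a system could treat it as a rough proxy for forward speed.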

Milford drove for about 2 hours through the streets of the Brisbane suburb of St. Lucia, covering 66 kilometers. The result wasn't a precise, to-scale map, but it accurately represented the topology of the roads and could pinpoint exactly where the car was at any given moment. RatSLAM worked.

“It immediately drew attention and was widely discussed because it was very different from what other roboticists were doing," says David Wettergreen, a roboticist at Carnegie Mellon University, in Pittsburgh, who specializes in autonomous robots for planetary exploration. Indeed, it's still considered one of the most notable examples of brain-inspired robotics.

But though RatSLAM created a stir, it didn't set off a wave of research based on those same principles. And when Milford and Wyeth approached companies about commercializing their system, they found many keen to hear their pitch but ultimately no takers. “A colleague told me we should have called it ‘NeuroSLAM,’ ” Wyeth says. “People have bad associations with rats.”

That's why Milford is excited about the two-year project with Caterpillar, which began in March. “I've always wanted to create systems that had real-world uses,” he says. “It took a lot longer than I expected for that to happen.”

“We looked at their results and decided this is something we could get up and running quickly,” Dave Smith, an engineer at Caterpillar's Australia Research Center, in Brisbane, tells me. “The fact that it's rat inspired is just a cool thing.”

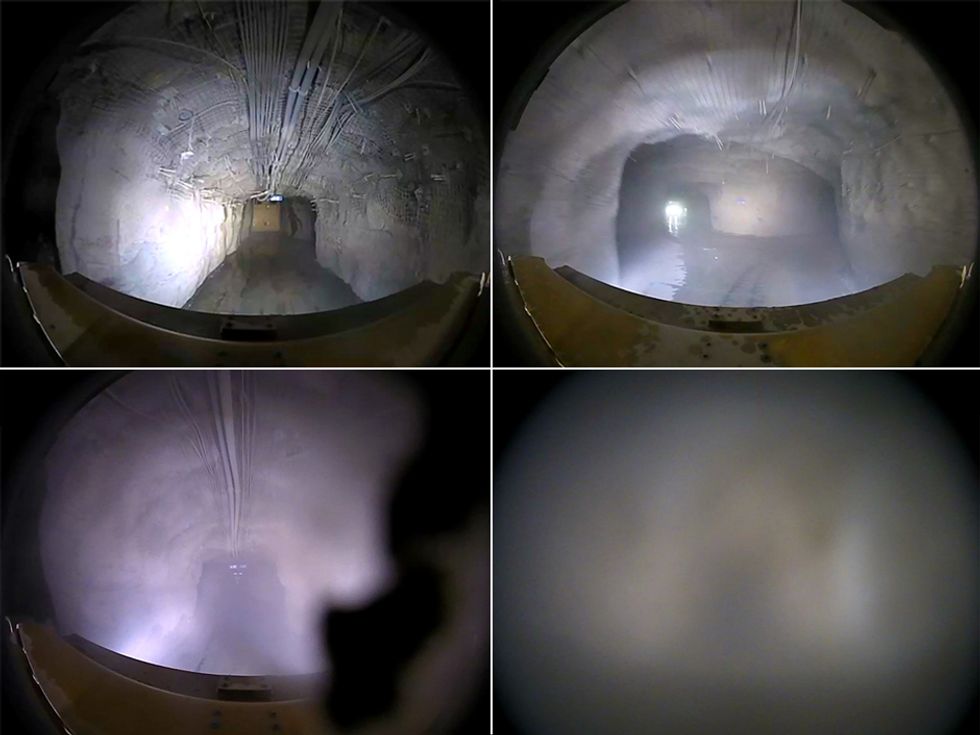

Underground mines are among the harshest man-made places on earth. They're cold, dark, and dusty, and due to the possibility of a sudden collapse or explosion, they're also extremely dangerous. For companies operating in such an extreme environment, improving their ability to track machines and people underground is critical.

In a surface mine, you'd simply use high-precision differential GPS, but that obviously doesn't work below ground. Existing indoor navigation systems, such as laser mapping and RF networks, are expensive and often require infrastructure that's difficult to deploy and maintain in the severe conditions of a mine. For instance, when Caterpillar engineers considered 3D lidar, like the ones used on self-driving cars, they concluded that “none of them can survive underground," Smith says.

One big reason that mine operators need to track their vehicles is to plan how they excavate. Each day starts with a “dig plan" that specifies the amount of ore that will be mined in various tunnels. At the end of the day, the operator compares the dig plan to what was actually mined, to come up with the next day's dig plan. “If you're feeding in inaccurate information, your plan is not going to be very good. You may start mining dirt instead of ore, or the whole tunnel could cave in," Smith explains. “It's really important to know what you've done."

The traditional method is for the miner to jot down his movements throughout the day, but that means he has to stop what he's doing to fill out paperwork, and he's often guessing what actually occurred. The QUT navigation system will more accurately measure where and how far each vehicle travels, as well as provide a reading of where the vehicle is at any given time. The first vehicle will drive into the mine and map the environment using the rat-brain-inspired navigation algorithm, while also gathering images of each tunnel with a low-cost 720p camera. The only unusual feature of the camera is its extreme ruggedization, which Smith says goes well beyond military specifications.

Subsequent vehicles will use those results to localize themselves within the mine, comparing footage from their own cameras with previously gathered images. The vehicles won't be autonomous, Milford notes, but that capability could eventually be achieved by combining the camera data with data from IMUs and other sensors. This would add more precision to the trucks' positioning, allowing them to drive themselves.
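Localizing against previously gathered images can be pictured as a nearest-match lookup. The Python sketch below assumes each stored view has already been reduced to a short brightness profile and tagged with a location. The labels, the numbers, and the distance threshold are invented for illustration and do not describe the QUT or Caterpillar system.

```python
def profile_distance(a, b):
    """Mean absolute difference between two equal-length brightness profiles."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def localize(current, stored, max_distance=5.0):
    """Return the label of the closest stored view, or None if none is close."""
    best_label, best_dist = None, max_distance
    for label, template in stored.items():
        d = profile_distance(current, template)
        if d <= best_dist:
            best_label, best_dist = label, d
    return best_label

# Hypothetical views recorded by the first vehicle on its mapping run.
stored_views = {
    "tunnel A, 40 m": [10, 80, 10, 10],
    "tunnel B, 15 m": [60, 60, 10, 90],
}

where = localize([12, 78, 11, 9], stored_views)      # close match to tunnel A
lost = localize([200, 200, 200, 200], stored_views)  # nothing similar: None
```

The threshold matters as much as the match itself. Returning None when nothing looks familiar lets the system fall back on its motion estimate instead of jumping to a wrong location.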

The QUT team has started collecting data within actual mines, which will be merged with another large data set from Caterpillar containing about a thousand hours of underground camera imagery. They will then devise a preliminary algorithm, to be tested in an abandoned mine somewhere in Queensland, with the help of Mining3, an Australian mining R&D company; the Queensland government is also a partner on the project. The system could be useful for deep open-pit mines, where GPS tends not to work reliably. If all goes well, Caterpillar plans to commercialize the system quickly. “We need these solutions," Smith says.

For now, Milford's team relies on standard computing hardware to run its algorithms, although they keep tabs on the latest research in neuromorphic computing. “It's still a bit early for us to dive in,” Milford says. Eventually, though, he expects his brain-inspired systems will map well to neuromorphic chip architectures like IBM's TrueNorth and the University of Manchester's SpiNNaker. [For more on these chips, see "Neuromorphic Chips Are Destined for Deep Learning—or Obscurity," in this issue.]

Will brain-inspired navigation ever go mainstream? Many developers of self-driving cars, for instance, invest heavily in creating detailed maps of the roads where their vehicles will drive. The vehicles then use their cameras, lidars, GPS, and other sensors to locate themselves on the maps, rather than having to build their own.

Still, autonomous vehicles need to prove they can drive in conditions like heavy rain, snow, fog, and darkness. They also need to better handle uncertainty in the data; images with glare, for instance, might have contributed to a fatal accident involving a self-driving Tesla last year. Some companies are already testing machine-learning-based navigation systems, which rely on artificial neural networks, but it's possible that more brain-inspired approaches like RatSLAM could complement those systems, improving performance in difficult or unexpected scenarios.

Carnegie Mellon's Wettergreen offers a more tantalizing possibility: giving cars the ability to navigate to specific locations without having to explicitly plan a trajectory on a city map. Future robots, he notes, will have everything modeled down to the millimeter. “But I don't," he says, “and yet I can still find my way around. The human brain uses different types of models and maps—some are metric, some are more topological, and some are semantic."

A human, he continues, can start with an idea like “Somewhere on the south side of the city, there's a good Mexican restaurant.” Arriving in that general area, the person can then look for clues as to where the restaurant may be. “Even the most capable self-driving car wouldn't know what to do with that kind of task, but a more brain-inspired system just might.”

Some roboticists, however, are skeptical that such unconventional approaches to SLAM are going to pay off. As sensors like lidar, IMUs, and GPS get better and cheaper, traditional SLAM algorithms will be able to produce increasingly accurate results by combining data from multiple sources. People tend to ignore the fact that “SLAM is really a sensor fusion problem and that we are getting better and better at doing SLAM with lower-cost sensors," says Melonee Wise, CEO of Fetch Robotics, a company based in San Jose, Calif., that sells mobile robots for transporting goods in highly dynamic environments. “I think this disregard causes people to fixate on trying to solve SLAM with one sensor, like a camera, but in today's low-cost sensor world that's not really necessary."

Even if RatSLAM doesn't become practical for most applications, developing such brainlike algorithms offers us a window into our own intelligence, says Peter Stratton, a computer scientist at the Queensland Brain Institute who collaborates with Milford. He notes that standard computing's von Neumann architecture, where the processor is separated from memory and data is shuttled between them, is very inefficient.

“The brain doesn't work anything like that. Memory and processing are both happening in the neuron. It's 'computing with memories,' " Stratton says. A better understanding of brain activity, not only as it relates to responses to stimuli but also in terms of its deeper internal processes—memory retrieval, problem solving, daydreaming—is “what's been missing from past AI attempts," he says.

Milford notes that a lot of types of intelligence aren't easy to study using only animals. But when you observe how rats and robots perform the same tasks, like navigating a new environment, you can test your theories about how the brain works. You can replay scenarios repeatedly. You can tinker and manipulate your models and algorithms. “And unlike with an animal or an insect brain," he says, “we can see everything in a robot's 'brain.' "

This article appears in the June 2017 print issue as “Navigate Like a Rat.”