AI Designers Find Inspiration in Rat Brains

Reverse engineering 1 cubic millimeter of brain tissue could lead to better artificial neural networks

Today’s artificial intelligence systems can destroy human champions at sophisticated games like chess, Go, and Texas Hold ’em. In flight simulators, they can shoot down top fighter pilots. They’re surpassing human doctors with more precise surgical stitching and more accurate cancer diagnoses. But there are some situations when a 3-year-old can easily defeat the fanciest AI in the world: when the contest involves a type of learning so routine that humans don’t even realize they’re doing it.

That last thought occurred to David Cox—Harvard neuroscientist, AI expert, and proud father of a 3-year-old—when his daughter spotted a long-legged skeleton at a natural history museum, pointed at it, and said, “Camel!” Her only other encounter with a camel had been a few months earlier, when he showed her a cartoon camel in a picture book.

AI researchers call this ability to identify an object based on a single example “one-shot learning,” and they’re deeply envious of toddlers’ facility with it. Today’s AI systems acquire their smarts through a very different process. Typically, in an autonomous training method called deep learning, a program is given masses of data from which to draw conclusions. To train an AI camel detector, the system would ingest thousands of images of camels—cartoons, anatomical diagrams, photos of the one-humped and two-humped varieties—all of which would be labeled “camel.” The AI would also take in thousands of other images labeled “not camel.” Once it had chewed through all that data to determine the animal’s distinguishing features, it would be a whiz-bang camel detector. But Cox’s daughter would have long since moved on to giraffes and platypuses.

Cox mentions his daughter by way of explaining a U.S. government program called Machine Intelligence from Cortical Networks (Microns). Its ambitious goal: to reverse engineer human intelligence so that computer scientists can build better artificial intelligence. First, neuroscientists are tasked with discovering the computational strategies at work in the brain’s squishy gray matter; then the data team will translate those strategies into algorithms. One of the big challenges for the resulting AIs will be one-shot learning. “Humans have an amazing ability to make inferences and generalize,” Cox says, “and that’s what we’re trying to capture.”

The five-year program, funded to the tune of US $100 million by the Intelligence Advanced Research Projects Agency (IARPA), keeps a tight focus on the visual cortex, the part of the brain where much visual-information processing occurs. Working with mice and rats, three Microns teams aim to map the layout of neurons inside 1 cubic millimeter of brain tissue. That may not sound like much, but that tiny cube contains about 50,000 neurons connected to one another at about 500 million junctures called synapses. The researchers hope that a clear view of all those connections will allow them to discover the neural “circuits” that are activated when the visual cortex is hard at work. The project requires specialized brain imaging that shows individual neurons with nanometer-level resolution, which has never before been attempted for a brain chunk of this size.

Although each Microns team involves multiple institutions, most of the participants on the team led by Cox, an assistant professor of molecular and cellular biology and of computer science at Harvard University, work in a single building on the Harvard campus. A tour through the halls reveals rodents busy with assignments in a rat “video arcade”; a machine that carves up brains as if it were the world’s most precise deli slicer; and some of the fastest and most powerful microscopes on the planet. With their equipment running full out and with huge amounts of human effort, Cox thinks they just might crack the code of that daunting cubic millimeter.

Try to wrap your mind around the sheer power of the human mind. To process information about the world and to keep your body running, electric pulses flash through 86 billion neurons packed into spongy folds of tissue inside your cranium. Each neuron has a long axon that winds through the tissue and allows it to link to thousands of other neurons, making for trillions of connections. Patterns of electric pulses correlate with everything a human being experiences: wiggling a finger, digesting lunch, falling in love, recognizing a camel.

Computer scientists have tried to emulate the brain since the 1940s, when they first devised software structures called artificial neural networks. Most of today’s fanciest AIs use some modern form of this architecture: There are deep neural networks, convolutional neural networks, recurrent neural networks, and so on. Loosely inspired by the brain’s structure, these networks consist of many computing nodes called artificial neurons, which perform small discrete tasks and connect to each other in ways that allow the overall systems to accomplish impressive feats.

Neural networks haven’t been able to copy the anatomical brain more closely because science still lacks basic information about neural circuitry. R. Jacob Vogelstein, the Microns project manager at IARPA, says researchers have typically worked on either the micro or macro scale. “The tools we used either involved poking individual neurons or aggregating signals across large swaths of the brain,” he says. “The big gap is understanding operations on a circuit level—how thousands of neurons work together to process information.”

The situation changed with recent technological advances that enable neuroscientists to make “connectome” maps revealing the multitude of connections among neurons. But Microns seeks more than a static circuitry diagram. The teams must show how those circuits activate when a rodent sees, learns, and remembers. “It’s very similar to how you’d try to reverse engineer an integrated circuit,” Vogelstein says. “You could stare at the chip in extreme detail, but you won’t really know what it’s meant to do unless you see the circuit in operation.”

For IARPA, the real payoff will come if researchers can trace the pattern of neurons involved in a cognitive task and translate that pattern into a more brainlike architecture for artificial neural networks. “Hopefully, the brain’s computational strategies are representable in mathematical and algorithmic terms,” Vogelstein says. The government’s big bet is that brainlike AI systems will be more adept than their predecessors at solving real-world problems. After all, it’s a noble quest to understand the brain, but intelligence agencies want AIs that can quickly learn to recognize not just a camel but also a half-obscured face in a grainy security-camera video.

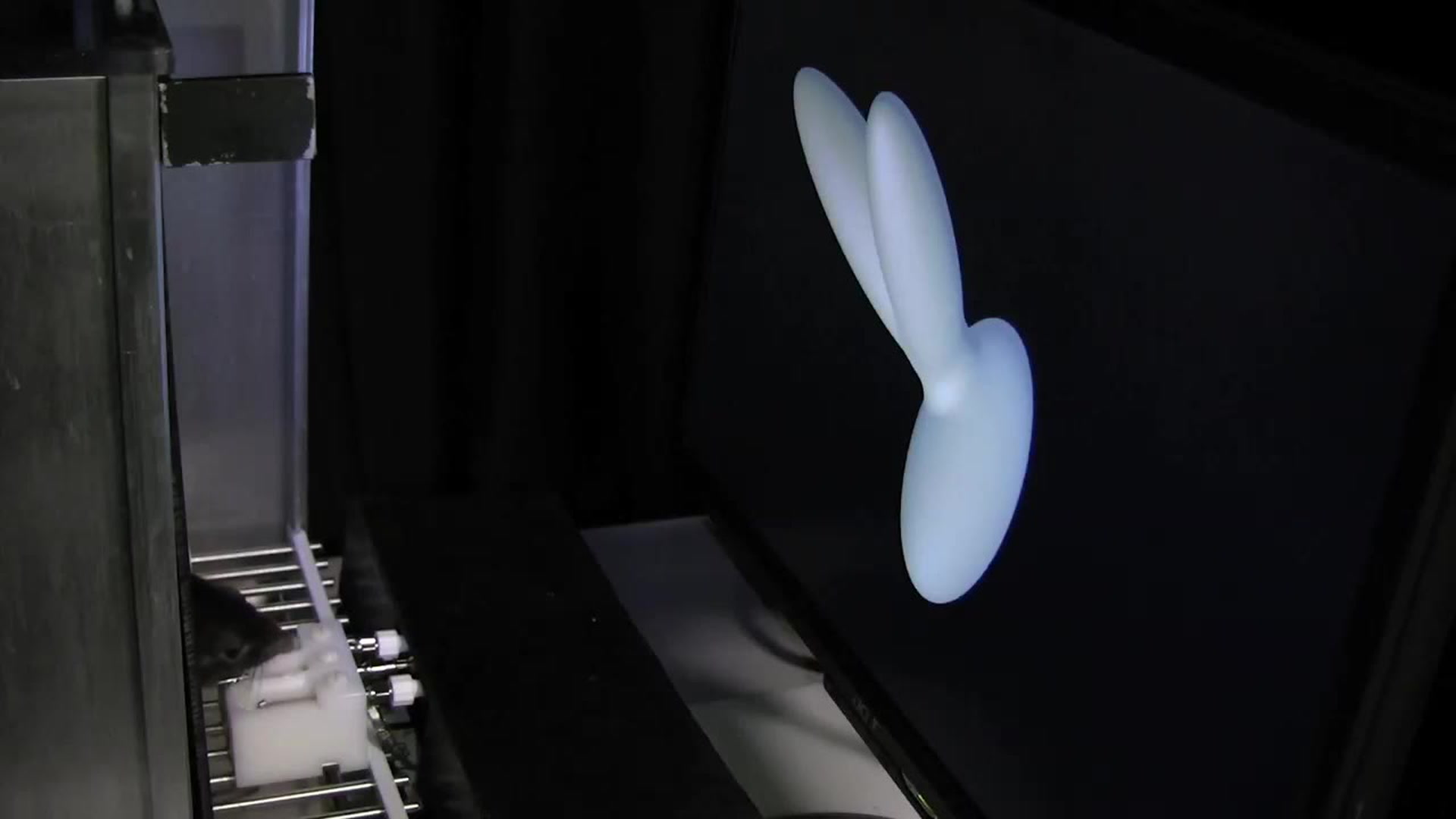

The video arcade for Cox’s rats is a small room where black boxes the size of microwaves are stacked four high. Inside each box stands a rat facing a computer screen, with two nozzles directly in front of its nose.

In the current experiment, the rat tries to master a sophisticated visual task. The screen displays three-dimensional computer-generated objects—nothing recognizable from the outside world, just lumpy abstract shapes. When the rat sees object A, it must lick the nozzle on the left to get a drop of sweet juice; when it sees object B, the juice will be in the right nozzle. But the objects are presented in various orientations, so the rat has to mentally rotate each shape on display and decide if it matches A or B.

Interspersed with training sessions are imaging sessions, for which the rats are taken down the hall to another lab where a bulky microscope is draped in black cloth, looking like an old-fashioned photographer’s setup. Here, the team uses a two-photon excitation microscope to examine the animal’s visual cortex while it’s looking at a screen displaying the now-familiar objects A and B, again in various orientations. The microscope records flashes of fluorescence when its laser hits active neurons, and the 3D video shows patterns that resemble green fireflies winking on and off on a summer night. Cox is keen to see how those patterns change as the animal becomes expert at its task.

The microscope’s resolution isn’t fine enough to show the axons that connect the neurons to one another. Without that information, the researchers can’t determine how one neuron triggers the next to create an information-processing circuit. For that next step, the animal must be killed, and its brain subjected to much closer study.

The researchers excise a tiny cube of the visual cortex, which goes via FedEx to Argonne National Laboratory, in Illinois. There, a particle accelerator uses powerful X-ray radiation to make a 3D map showing the individual neurons, other types of brain cells, and blood vessels. This map also doesn’t reveal the connecting axons inside the cube, but it helps later, when researchers compare the two-photon microscopy images with images produced with electron microscopes. “The X-ray is like a Rosetta stone,” Cox says.

Then the brain nugget comes back to the Harvard lab of Jeff Lichtman, a professor of molecular and cellular biology and a leading expert on the brain’s connectome. Lichtman’s team takes that 1 mm³ of brain and uses the machine that resembles a deli slicer to carve 33,000 slices, each only 30 nanometers thick. These gossamer sheets are automatically collected on strips of tape and arranged on silicon wafers. Next the researchers deploy one of the world’s fastest scanning electron microscopes, which slings 61 beams of electrons at each brain sample and measures how the electrons scatter. The refrigerator-size machine runs around the clock, producing images of each slice with 4-nm resolution.
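To get a feel for those numbers, here is a rough back-of-envelope sketch. The slice count, slice thickness, and resolution come from the figures above. Everything else is an assumption made for illustration: that each slice face is a full 1 mm by 1 mm, that "4-nm resolution" means 4-nm pixels, and that each pixel is stored as one byte.

```python
# Back-of-envelope check of the slicing and imaging figures.
NM_PER_MM = 1_000_000

slices = 33_000            # from the article
slice_thickness_nm = 30    # from the article
pixel_size_nm = 4          # ASSUMPTION: "4-nm resolution" read as 4-nm pixels
slice_side_mm = 1          # ASSUMPTION: each slice face is 1 mm x 1 mm
bytes_per_pixel = 1        # ASSUMPTION: 8-bit grayscale, not stated in the article

# Total height of the stacked slices, in millimeters.
stack_height_mm = slices * slice_thickness_nm / NM_PER_MM

# Pixel counts for one slice and raw bytes for the whole stack.
pixels_per_side = slice_side_mm * NM_PER_MM // pixel_size_nm
pixels_per_slice = pixels_per_side ** 2
total_bytes = pixels_per_slice * slices * bytes_per_pixel

print(f"stack height: {stack_height_mm} mm")
print(f"pixels per slice: {pixels_per_slice:.3e}")
print(f"raw image data: {total_bytes / 1e15:.2f} petabytes")
```

Under these assumptions the slices stack up to just under a millimeter, and the raw images come to about 2 petabytes, which hints at why the microscope has to run around the clock.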

Each image resembles a cross section of a cube of densely packed spaghetti. Image-processing software arranges the slices in order and traces each strand of spaghetti from one slice to the next, delineating the full length of each neuron’s axon along with its thousands of connections to other neurons. But the software sometimes loses track of strands or gets one confused with another. Humans are better at this task than computers, Cox says. “Unfortunately, there aren’t enough humans on earth to trace this much data.” Software engineers at Harvard and MIT are working on the tracing problem, which they must solve to make an accurate wiring diagram of the brain.
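The slice-to-slice tracing can be pictured with a toy sketch. This is not the Harvard or MIT software. It assumes each slice has already been segmented into labeled blobs, and it uses a deliberately simple rule: two blobs in neighboring slices belong to the same strand if they overlap at any pixel. The function name `trace_strands` and the data layout are hypothetical.

```python
def trace_strands(slices):
    """Link labeled 2D segments across consecutive slices by pixel overlap.

    slices: list of 2D lists of ints. 0 is background; any other value is a
    segment label that is meaningful only within its own slice.
    Returns a dict mapping (slice_index, label) -> strand id.
    """
    parent = {}

    def find(node):
        # Follow parent links to the root, shortening the path as we go.
        while parent[node] != node:
            parent[node] = parent[parent[node]]
            node = parent[node]
        return node

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[max(root_a, root_b)] = min(root_a, root_b)

    # Register every segment in every slice as its own strand to start.
    for z, image in enumerate(slices):
        for row in image:
            for label in row:
                if label:
                    parent.setdefault((z, label), (z, label))

    # Merge segments that overlap at the same pixel in adjacent slices.
    for z in range(len(slices) - 1):
        for row_a, row_b in zip(slices[z], slices[z + 1]):
            for a, b in zip(row_a, row_b):
                if a and b:
                    union((z, a), (z + 1, b))

    # Give each connected group a small integer strand id.
    strand_ids = {}
    return {
        node: strand_ids.setdefault(find(node), len(strand_ids))
        for node in list(parent)
    }


# Three tiny one-row "slices" containing two strands.
toy_slices = [
    [[1, 1, 0, 2]],
    [[0, 5, 0, 7]],
    [[0, 5, 0, 0]],
]
strands = trace_strands(toy_slices)
print(len(set(strands.values())), "strands found")
```

Even this toy version shows the failure modes mentioned above. If two strands happen to touch in one slice, the overlap rule merges them; if a strand shifts too far between slices, the rule loses it.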

Overlaying that diagram with activity maps from the two-photon microscope should reveal the brain’s computational structures. For example, it should show which neurons form a circuit that lights up when a rat sees an odd lumpy object, mentally flips it upside down, and decides that it’s a match for object A.

Another big challenge for Cox’s team is speed. In the program’s first phase, which ended in May, each team had to show off results from a cube of brain tissue that measured 100 micrometers on a side. For that smaller chunk, Cox’s team had the electron-microscopy and image-reconstruction step down to two weeks. Now, in phase two, the teams need to be able to process the same size chunk in a few hours. Scaling up from a cube 100 μm on a side to 1 mm³ is a thousandfold increase in volume. That’s why Cox is obsessively focused on automating every step of the process, from the rats’ video training to the tracing of the connectome. “These IARPA projects force scientific research to look a lot more like engineering,” he says. “We need to turn the crank fast.”
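The arithmetic behind that urgency is easy to check. The sketch below assumes processing time grows in direct proportion to tissue volume, which the article does not state, and it takes "a few hours" to mean three hours purely for illustration.

```python
# Phase-one cube versus the full target volume.
phase1_side_um = 100      # from the article
target_side_um = 1_000    # 1 mm expressed in micrometers

# Volume scales with the cube of the side length.
volume_ratio = (target_side_um // phase1_side_um) ** 3   # 1000

# ASSUMPTION: processing time scales linearly with volume.
phase1_weeks = 2                      # from the article
naive_weeks = phase1_weeks * volume_ratio
naive_years = naive_weeks / 52

# ASSUMPTION: "a few hours" taken as 3 hours per phase-one-size chunk.
target_hours_per_chunk = 3
target_days = target_hours_per_chunk * volume_ratio / 24

print(f"volume increase: {volume_ratio}x")
print(f"at the phase-one pace: about {naive_years:.0f} years")
print(f"at the phase-two pace: about {target_days:.0f} days")
```

At the phase-one pace, the full cubic millimeter would take roughly 38 years. At three hours per chunk, the same job shrinks to about four months.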

Speeding up the experiments allows Cox’s team to test more theories regarding the brain’s circuits, which will help the AI researchers too. In machine learning, computer scientists set the overall architecture of the neural network while the program itself decides how to connect its many computations into sequences. So the researchers plan to train the rats and a neural network on the same visual-recognition task and compare both the patterns of links and the outcomes. “If we see certain connectivity motifs in the brain and we don’t see them in the models, maybe that’s a hint that we’re doing something wrong,” Cox says.

One area of investigation involves the brain’s learning rules. Object recognition is thought to occur through a hierarchy of processing, with a first set of neurons taking in the basics of color and form, the next set finding edges to separate the object from its background, and so on. As the animal gets better at a recognition task, researchers can ask: Which set of neurons in the hierarchy is changing its activity most drastically? And as the AI gets better at the same task, does the pattern of activity in its neural network change in the same way as the rat’s?

IARPA hopes the findings will apply not only to computer vision but also to machine learning in general. “There’s a bit of a leap of faith that we all take here, but I think it’s an evidence-based leap of faith,” Cox says. He notes that the cerebral cortex, the outer layer of neural tissue where high-level cognition occurs, has a “suspiciously similar” structure throughout. To neuroscientists and AI experts, that uniformity suggests that a fundamental type of circuit may be used throughout the brain for information processing, which they hope to discover. Defining that ur-circuit may be a step toward a generally intelligent AI.

While Cox’s team is turning the crank, attempting to make tried-and-true neuroscience procedures run faster, another Microns researcher is pursuing a radical idea. If it works, says George Church, a professor at the Wyss Institute for Biologically Inspired Engineering, at Harvard University, it could revolutionize brain science.

Church is coleading a Microns team with Tai Sing Lee of Carnegie Mellon University, in Pittsburgh. Church is responsible for the connectome-mapping part of the process, and he’s taking a drastically different approach from the other teams. He doesn’t use electron microscopy to trace axon connections; he believes the technique is too slow and produces too many errors. As the other teams try to trace axons across a cubic millimeter of tissue, Church says, errors will accumulate and muddy the connectome data.

Church’s method isn’t affected by the length of axons or the size of the brain chunk under investigation. He uses genetically engineered mice and a technique called DNA bar coding, which tags each neuron with a unique genetic identifier that can be read out from the fringy tips of its dendrites to the terminus of its long axon. “It doesn’t matter if you have some gargantuan long axon,” he says. “With bar coding you find the two ends, and it doesn’t matter how much confusion there is along the way.” His team uses slices of brain tissue that are thicker than those used by Cox’s team—20 μm instead of 30 nm—because they don’t have to worry about losing the path of an axon from one slice to the next. DNA sequencing machines record all the bar codes present in a given slice of brain tissue, and then a program sorts through the genetic information to make a map showing which neurons connect to one another.
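The sorting step can be sketched in a few lines. This is a heavily simplified illustration, not Church’s pipeline. It assumes the sequencing output has already been reduced to pairs of bar codes, one from the sending neuron and one from the receiving neuron at each junction. That pairing, the function name `build_connectome`, and the four-letter bar codes are all hypothetical.

```python
from collections import defaultdict


def build_connectome(paired_reads):
    """Aggregate (pre_barcode, post_barcode) reads into a connection map.

    ASSUMPTION: each read pairs the bar code of a sending neuron with the
    bar code of a receiving neuron. Returns {pre: {post: read_count}}.
    """
    graph = defaultdict(lambda: defaultdict(int))
    for pre, post in paired_reads:
        graph[pre][post] += 1
    # Convert to plain dicts so the result prints and compares cleanly.
    return {pre: dict(targets) for pre, targets in graph.items()}


# Made-up reads for three neurons.
reads = [
    ("ACGT", "TTAG"),
    ("ACGT", "TTAG"),
    ("ACGT", "GGCA"),
    ("TTAG", "GGCA"),
]
connectome = build_connectome(reads)
print(connectome)
```

Nothing here depends on following an axon through the tissue, which is the point Church makes: only the bar codes at the two ends matter.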

Church and his collaborator Anthony Zador, a neuroscience professor at Cold Spring Harbor Laboratory, in New York, have proven in prior experiments that the bar coding and sequencing technique works, but they haven’t yet put the data together into the connectome maps that the Microns project requires. Assuming his team gets it done, Church says Microns will mark just the beginning of his brain-mapping efforts: He next wants to chart all the connections of an entire mouse brain, with its 70 million neurons and 70 billion connections. “Doing 1 cubic millimeter is extraordinarily myopic,” Church says. “My ambition doesn’t end there.”

Such large-scale maps could provide insights for developing AIs that more rigorously mimic biological brains. But Church, who relishes the role of provocateur, envisions another way forward for computing: Stop trying to build silicon copies of brains, he says, and instead build biological brains that are even better at handling the computational tasks that human brains are so good at. “I think we’ll soon have the ability to do synthetic neurobiology, to actually build brains that are variations on natural brains,” he says. While silicon-based computers beat biological systems when it comes to processing speeds, Church imagines engineered brains augmented with circuit elements to accelerate their operations.

In Church’s estimation, the Microns goal of reverse engineering the brain may not be achievable. The brain is so complex, he says, that even if researchers succeed in building these machines, they may not fully understand the brain’s mysteries—and that’s okay. “I think understanding is a bit of a fetish among scientists,” Church says. “It may be much easier to engineer the brain than to understand it.”

This article appears in the June 2017 print issue as “From Animal Intelligence to Artificial Intelligence.”