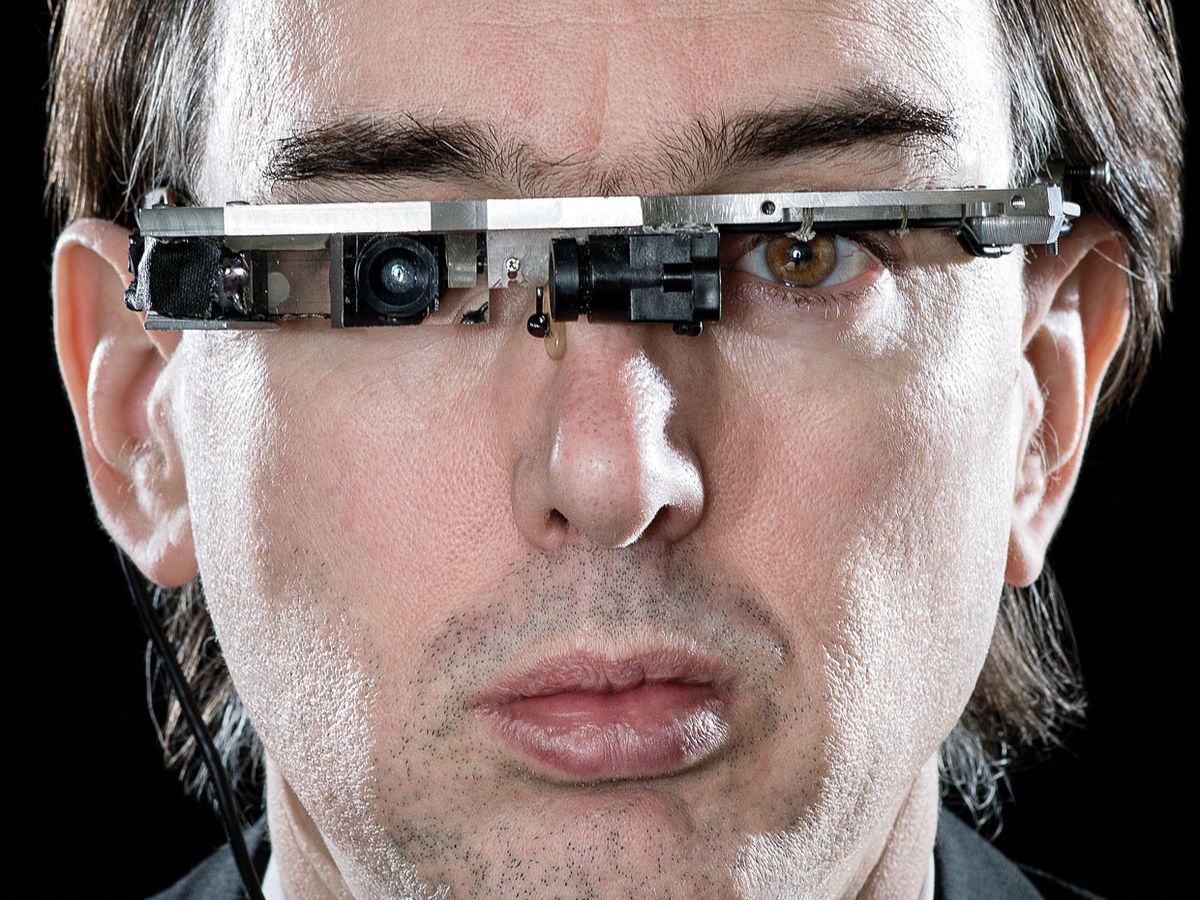

Steve Mann: My “Augmediated” Life

What I’ve learned from 35 years of wearing computerized eyewear

Back in 2004, I was awakened early one morning by a loud clatter. I ran outside, only to discover that a car had smashed into the corner of my house. As I went to speak with the driver, he threw the car into reverse and sped off, striking me and running over my right foot as I fell to the ground. When his car hit me, I was wearing a computerized-vision system I had invented to give me a better view of the world. The impact and fall injured my leg and also broke my wearable computing system, which normally overwrites its memory buffers and doesn't permanently record images. But as a result of the damage, it retained pictures of the car's license plate and driver, who was later identified and arrested thanks to this record of the incident.

Was it blind luck (pardon the expression) that I was wearing this vision-enhancing system at the time of the accident? Not at all: I have been designing, building, and wearing some form of this gear for more than 35 years. I have found these systems to be enormously empowering. For example, when a car's headlights shine directly into my eyes at night, I can still make out the driver's face clearly. That's because the computerized system combines multiple images taken with different exposures before displaying the results to me.

I've built dozens of these systems, which improve my vision in multiple ways. Some versions can even take in other spectral bands. If the equipment includes a camera that is sensitive to long-wavelength infrared, for example, I can detect subtle heat signatures, allowing me to see which seats in a lecture hall had just been vacated, or which cars in a parking lot most recently had their engines switched off. Other versions enhance text, making it easy to read signs that would otherwise be too far away to discern or that are printed in languages I don't know.

Believe me, after you've used such eyewear for a while, you don't want to give up all it offers. Wearing it, however, comes with a price. For one, it marks me as a nerd. For another, the early prototypes were hard to take on and off. These versions had an aluminum frame that wrapped tightly around the wearer's head, requiring special tools to remove.

Because my computerized eyewear can augment the dark portions of what I'm viewing while diminishing the amount of light in the bright areas, I say that it provides a “mediated” version of reality. I began using this phrasing long before the rise in popularity of the more widespread term “augmented reality,” which usually refers to something less interesting: the overlay of text or graphics on top of your normal vision. That doesn't improve your eyesight. Indeed, it often makes it worse by obscuring your view with a lot of visual clutter.

For a long time, computer-aided vision and augmented reality were rather obscure topics, of interest only to a few corporate researchers, academics, and a small number of passionate hobbyists. Recently, however, augmented reality has captured the public consciousness. In particular, Google has lately attracted enormous attention to its Project Glass, an eyeglass-like smartphone with a wearable display. I suppose that's fine as far as it goes. But Google Glass is much less ambitious than the computer-mediated vision systems I constructed decades ago. What Google's involvement promises, though, is to popularize this kind of technology.

It's easy to see that coming: Wearable computing equipment, which also includes such items as health monitors and helmet-cams, is already close to a billion-dollar industry worldwide. And if Google's vigorous media campaign for its Project Glass is any indication of the company's commitment, wearable computers with head-mounted cameras and displays are poised finally to become more than a geek-chic novelty.

So here, then, let me offer some wisdom accumulated over the past 35 years. The way I figure it, thousands of people are about to experience some of the same weird sensations I first encountered decades ago. If I can prevent a few stumbles, so much the better.

The idea of building something to improve vision first struck me during my childhood, not long after my grandfather (an inveterate tinkerer) taught me to weld. Welders wear special goggles or masks to view their work and to protect their eyesight from the blindingly bright light, often from an electric arc. Old-fashioned welding helmets use darkened glass for this. More modern ones use electronic shutters. Either way, the person welding merely gets a uniformly filtered view. The arc still looks uncomfortably bright, and the surrounding areas remain frustratingly dim.

This long-standing problem for welders got me thinking: Why not use video cameras, displays, and computers to modify your view in real time? And why not link these wearable computer systems to centralized base stations or to one another? That would make them that much more versatile.

I started exploring various ways to do this during my youth in the 1970s, when most computers were the size of large rooms and wireless data networks were unheard of. The first versions I built sported separate transmitting and receiving antennas, including rabbit ears, which I'm sure looked positively ridiculous atop my head. But building a wearable general-purpose computer with wireless digital-communications capabilities was itself a feat. I was proud to have pulled that off and didn't really care what I looked like.

My late-1970s wearable computer systems evolved from something I first designed to assist photographers into units that featured text, graphics, video, audio, even radar capability by the early 1980s. As you can imagine, these required me to carry quite a bit of gear. Nearly everybody around me thought I was totally loony to wear all that hardware strapped to my head and body. When I was out with it, lots of people crossed the street to avoid me—including some rather unsavory-looking types who probably didn't want to be seen by someone wearing a camera and a bunch of radio antennas!

Why did I go to such extremes? Because I realized that the future of computing was as much about communications between people wearing computers as it was about performing colossal calculations. At the time, most engineers working with computers considered that a crazy notion. Only after I went to MIT for graduate school in the early 1990s did some of the people around me begin to see the merits of wearable computing.

The technical challenges at the time were enormous. For example, wireless data networks, so ubiquitous now, had yet to blossom. So I had to set up my own radio stations for data communications. The radio links I cobbled together in the late 1980s could transfer data at a then-blazing 56 kilobits per second.

A few years before this, I had returned to my original inspiration—better welding helmets—and built some that incorporated vision-enhancing technology. While welding with such a helmet, I can discern the tip of the brilliant electrode, even the shape of the electric arc, while simultaneously seeing details of the surrounding areas. Even objects in the background, which would normally be swallowed up in darkness, are visible. These helmets exploit an image-processing technique I invented that is now commonly used to produce HDR (high-dynamic-range) photos. But these helmets apply the technique at a fast video rate and let the wearer view the stereoscopic output in real time.
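The idea of combining differently exposed images can be sketched in a few lines. What follows is only a minimal illustration, not the helmets' actual algorithm: it assumes a linear sensor response, pixel values normalized to the range 0 to 1, and a simple hat-shaped weighting, and the names `hat_weight` and `fuse_exposures` are hypothetical.

```python
def hat_weight(v):
    """Trust mid-range pixel values most; values near 0 or 1 are probably
    under- or overexposed. (Assumed weighting, chosen for illustration.)"""
    return max(1e-6, 1.0 - abs(2.0 * v - 1.0))


def fuse_exposures(images, exposure_times):
    """Estimate relative scene radiance per pixel from several exposures.

    images: list of equal-length lists of pixel values in [0, 1]
    exposure_times: one exposure time (in seconds) per image
    Assumes a linear sensor: pixel value = radiance * exposure time, clipped at 1.
    """
    n_pixels = len(images[0])
    radiance = []
    for i in range(n_pixels):
        weighted_sum = 0.0
        weight_total = 0.0
        for img, t in zip(images, exposure_times):
            w = hat_weight(img[i])
            weighted_sum += w * img[i] / t  # divide out the exposure time
            weight_total += w
        radiance.append(weighted_sum / weight_total)
    return radiance


# Toy scene: a dim background (radiance 2) next to a bright arc (radiance 400).
scene = [2.0, 400.0]
times = [0.2, 0.001]  # one long exposure and one short exposure
shots = [[min(1.0, r * t) for r in scene] for t in times]
estimate = fuse_exposures(shots, times)
```

In this toy case the long exposure saturates on the arc and the short exposure barely registers the background, yet the fused estimate recovers both values closely, because each pixel leans on whichever exposure captured it well.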

Welding was only one of many early applications for my computer-aided vision systems, but it's been influential in shaping how I've thought about this technology all along. Just as welders refer to their darkened glass in the singular, I call my equipment “Digital Eye Glass” rather than “digital eyeglasses,” even for the units I've built into what look like ordinary eyeglasses or sunglasses. That Google also uses the singular “Glass” to describe its new gizmo is probably no coincidence.

I have mixed feelings about the latest developments. On one hand, it's immensely satisfying to see that the wider world now values wearable computer technology. On the other hand, I worry that Google and certain other companies are neglecting some important lessons. Their design decisions could make it hard for many folks to use these systems. Worse, poorly configured products might even damage some people's eyesight and set the movement back years.

My concern comes from direct experience. The very first wearable computer system I put together showed me real-time video on a helmet-mounted display. The camera was situated close to one eye, but it didn't have quite the same viewpoint. The slight misalignment seemed unimportant at the time, but it produced some strange and unpleasant results. And those troubling effects persisted long after I took the gear off. That's because my brain had adjusted to an unnatural view, so it took a while to readjust to normal vision.

Research dating back more than a century helps explain this. In the 1890s, the renowned psychologist George Stratton constructed special glasses that caused him to see the world upside down. The remarkable thing was that after a few days, Stratton's brain adapted to his topsy-turvy worldview, and he no longer saw the world upside down. You might guess that when he took the inverting glasses off, he would start seeing things upside down again. He didn't. But his vision had what he called, with Victorian charm, “a bewildering air.”

Through experimentation, I've found that the required readjustment period is, strangely, shorter when my brain has adapted to a dramatic distortion, say, reversing things from left to right or turning them upside down. When the distortion is subtle—a slightly offset viewpoint, for example—it takes less time to adapt but longer to recover.

The current prototypes of Google Glass position the camera well to the right side of the wearer's right eye. Were that system to overlay live video imagery from the camera on top of the user's view, the very same problems would surely crop up. Perhaps Google is aware of this issue and purposely doesn't feed live video back to the user on the display. But who knows how Google Glass will evolve or what apps others will create for it?

They might try to display live video to the wearer so that the device can serve as a viewfinder for taking pictures or video, for example. But viewing unaligned live video through one eye for an extended time could very well mess up the wearer's neural circuitry. Virtual-reality researchers have long struggled to eliminate effects that distort the brain's normal processing of visual information, and when these effects arise in equipment that augments or mediates the real world, they can be that much more disturbing. Prolonged exposure might even do permanent damage, particularly to youngsters, whose brains and eye muscles are still developing.

Google Glass and several similarly configured systems now in development suffer from another problem I learned about 30 years ago that arises from the basic asymmetry of their designs, in which the wearer views the display through only one eye. These systems all contain lenses that make the display appear to hover in space, farther away than it really is. That's because the human eye can't focus on something that's only a couple of centimeters away, so an optical correction is needed. But what Google and other companies are doing—using fixed-focus lenses to make the display appear farther away—is not good.

Using lenses in this way forces one eye to remain focused at some set distance while the focus of the other eye shifts according to whatever the wearer is looking at, near or far. Doing this leads to severe eyestrain, which again can be harmful, especially to children.
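The size of this focusing conflict can be put in numbers using the standard optical measure of focusing demand, the diopter, which is simply the reciprocal of the viewing distance in meters. The sketch below is illustrative only: the 2.5-meter apparent display distance is an assumed value, not a specification of any product, and the function names are hypothetical.

```python
def accommodation_diopters(distance_m):
    """Focusing demand, in diopters, for an object at the given distance in meters."""
    return 1.0 / distance_m


def focus_mismatch(display_distance_m, object_distance_m):
    """Difference in focusing demand between the eye locked on a fixed-focus
    display and the other eye, which follows the real object."""
    return abs(accommodation_diopters(display_distance_m)
               - accommodation_diopters(object_distance_m))


# Assumed: the optics make the display appear 2.5 m away.
DISPLAY_DISTANCE_M = 2.5
for d in (0.4, 2.5, 10.0):
    mismatch = focus_mismatch(DISPLAY_DISTANCE_M, d)
    print(f"object at {d} m: mismatch {mismatch:.2f} diopters")
```

Under that assumption the two eyes agree only when the wearer looks at something exactly 2.5 meters away; reading a page at 40 centimeters asks them to differ by about 2 diopters.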

I have constructed four generations of Digital Eye Glass in the course of figuring out how to solve this and other problems. While my latest hardware is quite complicated, the basic principles behind it are pretty straightforward.

Many of my systems, like Google Glass, modify the view of just one eye. I find this works well. But I arrange the optics so that the camera takes in exactly the same perspective as that eye does. I also position the display so that the wearer sees it directly ahead and doesn't have to look up (as is necessary with Google Glass), down, or sideways to view it.

Getting a good alignment of views isn't complicated—a double-sided mirror is all it takes. One surface reflects incoming light to a side-mounted camera; the other reflects light from a side-mounted display to the eye. By adding polarizing filters, you can use a partially transparent mirror so that whatever is presented on the display exactly overlays the direct view through that eye. Problem No. 1 solved.

The second issue, the eyestrain from trying to focus both eyes at different distances, is also one I overcame, more than 20 years ago! The trick is to arrange things so that the eye behind the mirror can focus at any distance and still see the display clearly. This arrangement of optical components is what I call an “aremac.” (Aremac is just camera spelled backward.) Ideally, you'd use a pinhole aremac.

To appreciate why a pinhole aremac neatly solves the focusing problem, you first need to understand how a pinhole camera works. If you don't, consider this thought experiment. Imagine you want to record a brightly lit scene outside your window on a piece of photographic film. You can't just hold the film up and expect to get a clear image on it—but why not? Because light rays from every point in the scene would fall on every point on your film, producing a complete blur. What you want is for the light coming from each small point in the scene to land on a corresponding point on the film.

You can achieve that using a lightproof barrier with a tiny hole in it. Just put it between the scene and the film. Now, of all the light rays emanating from the upper-left corner of the scene, for instance, only one slips through the hole in the barrier, landing on the lower-right corner of the film in this case. Similar things happen for every other point in the scene, leading to a nice crisp (albeit inverted) image on the film.
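The geometry of that thought experiment fits in a few lines. This is a minimal sketch under idealized assumptions (an infinitely small hole at the origin, flat film parallel to the window); the function name `pinhole_project` and the example distances are made up for illustration.

```python
def pinhole_project(point, film_distance):
    """Project a scene point through an ideal pinhole located at the origin.

    point: (x, y, z), where z > 0 is the distance in front of the pinhole,
           x grows to the right and y grows upward
    film_distance: distance from the pinhole back to the film
    Returns (u, v) on the film; the minus signs are the image inversion.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the pinhole")
    scale = film_distance / z
    return (-x * scale, -y * scale)


# A point in the upper left of the scene (x negative, y positive), 10 m away,
# with the film 0.1 m behind the pinhole.
u, v = pinhole_project((-2.0, 1.5, 10.0), 0.1)
```

Here `u` comes out positive and `v` negative: the upper-left scene point lands toward the lower right of the film, as described above.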

Few cameras use pinholes, of course. Instead, they use lenses, which do the same job of forming an image while taking in more light. But lenses have a drawback: Objects at different distances require different focus settings. Pinholes don't have that shortcoming. They keep everything in focus, near or far. In the jargon of photographers, they are said to have an infinite depth of field.
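To see why a smaller opening keeps more of the scene sharp, the blur spot cast by an out-of-focus point can be estimated with the thin-lens equation. This is a sketch under textbook assumptions (an ideal thin lens, geometric optics only); it ignores diffraction, which limits how sharp a real pinhole can be. The function names and the example numbers are hypothetical.

```python
def image_distance(focal_length, object_distance):
    """Thin-lens equation 1/f = 1/d + 1/v, solved for the image distance v.
    Valid for object_distance > focal_length."""
    return object_distance * focal_length / (object_distance - focal_length)


def blur_diameter(focal_length, aperture, focus_distance, object_distance):
    """Diameter of the blur spot on the film for a point at object_distance
    when the lens is focused at focus_distance (all lengths in meters)."""
    v_focus = image_distance(focal_length, focus_distance)
    v_object = image_distance(focal_length, object_distance)
    return aperture * abs(v_focus - v_object) / v_object


# A 50 mm lens focused at 2 m, looking at a point 1 m away.
wide = blur_diameter(0.05, 0.025, 2.0, 1.0)    # 25 mm opening
tiny = blur_diameter(0.05, 0.0005, 2.0, 1.0)   # 0.5 mm opening
```

The blur shrinks in direct proportion to the opening, so in this example `tiny` is one-fiftieth of `wide`. As the opening approaches a pinhole, the geometric blur approaches zero at every distance at once.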

My pinhole aremac is the reverse of a pinhole camera: It ensures that you see a sharp image through the display, no matter how you focus your eyes. This aremac is more complicated than a barrier with a pinhole, though. It requires a laser light source and a spatial light modulator, similar to what's found inside many digital projectors. With it, you can focus both eyes normally while using one eye to look through the mediated-vision system, thus avoiding eyestrain.

It's astounding to me that Google and other companies now seeking to market head-wearable computers with cameras and displays haven't leapfrogged over my best design (something I call “EyeTap Generation-4 Glass”) to produce models that are even better. Perhaps it's because no one else working on this sort of thing has spent years walking around with one eye that's a camera. Or maybe this is just another example of not-invented-here syndrome.

Until recently, most people tended to regard me and my work with mild curiosity and bemusement. Nobody really thought much about what this technology might mean for society at large. But increasingly, smartphone owners are using various sorts of augmented-reality apps. And just about all mobile-phone users have helped to make video and audio recording capabilities pervasive. Our laws and culture haven't even caught up with that. Imagine if hundreds of thousands, maybe millions, of people had video cameras constantly poised on their heads. If that happens, my experiences should take on new relevance.

The system I routinely use looks a bit odd, but it's no more cumbersome than Google Glass. Wearing it in public has, however, often brought me grief—usually from people objecting to what they think is a head-mounted video recorder. So I look forward to the day when wearing such things won't seem any stranger than toting around an iPhone.

Putting cameras on vast numbers of people raises important privacy and copyright issues, to be sure. But there will also be benefits. When police mistakenly shot to death Jean Charles de Menezes, a Brazilian electrician, at a London tube station in 2005, four of the city's ubiquitous closed-circuit television cameras were trained on the platform. Yet London authorities maintain that no video of the incident was recovered. A technical glitch? A cover-up? Either way, imagine if many of the bystanders were wearing eyeglass-like recording cameras as part of their daily routines. Everyone would know what happened.

But there's a darker side: Instead of acting as a counterweight to Big Brother, could this technology just turn us into so many Little Brothers, as some commentators have suggested? (I and other participants will be discussing such questions in June at the 2013 IEEE International Symposium on Technology and Society, in Toronto.)

I believe that like it or not, video cameras will soon be everywhere: You already find them in many television sets, automatic faucets, smoke alarms, and energy-saving lightbulbs. No doubt, authorities will have access to the recordings they make, expanding an already large surveillance capability. To my mind, surveillance videos stand to be abused less if ordinary people routinely wear their own video-gathering equipment, so they can watch the watchers with a form of inverse surveillance.

Of course, I could be wrong. I can see a lot of subtle things with my computerized eyewear, but the future remains too murky for me to make out.

This article originally appeared in print as "Vision 2.0."