This article is part of our exclusive IEEE Journal Watch series in partnership with IEEE Xplore.

Imagine making a simple hand gesture and watching the object in front of you change the way you want. That act of creation is now possible in the world of virtual reality (VR).

Researchers in the United Kingdom have designed a new system called HotGestures, which allows users to execute quick hand gestures indicating which tool they want to use as they create objects and designs in virtual environments. In two experiments, 16 study participants piloted the approach, reporting that it was fast and easy to use. The results are described in a study published in October in IEEE Transactions on Visualization and Computer Graphics.

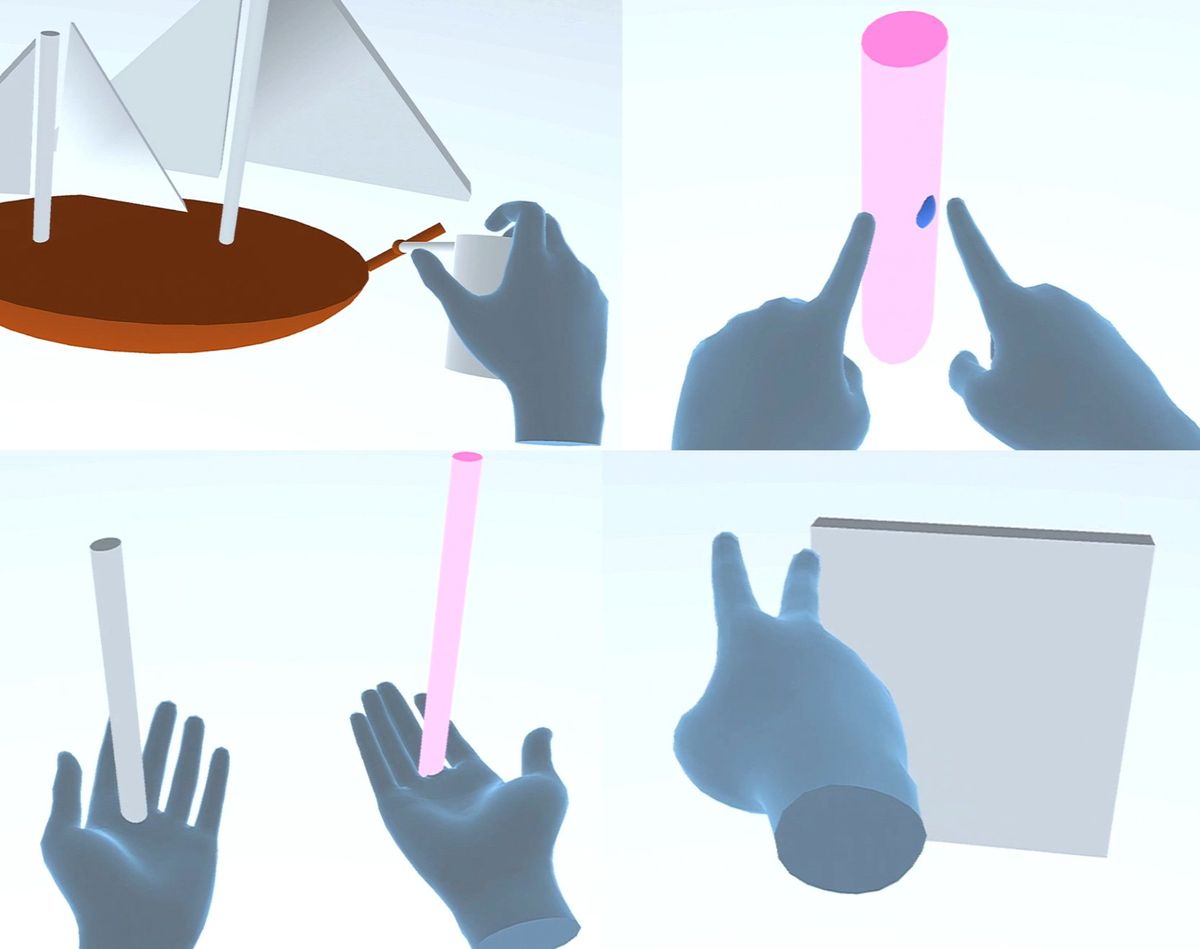

HotGestures give users “superhuman” ability to open and control tools in virtual reality. University of Cambridge

Current VR systems allow users to select tools—for example, a pen—from a menu to create new objects and images in front of them. But this involves first selecting the menu, and then successfully selecting the correct tool.

Per Ola Kristensson is a professor of interactive systems engineering in the department of engineering at the University of Cambridge, in the U.K., and codirector of the Centre for Human-Inspired Artificial Intelligence. His team was interested in creating a system in which the user makes a simple hand gesture to indicate how they want to manipulate objects in VR, bypassing the need to select the tool from the menu.

“The system continuously receives observations of the user’s finger and hand movements for both hands through the headset’s integrated hand tracker,” explains Kristensson, adding that the AI evaluates the hand movements and predicts which tool the user wants.
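
The continuous recognition loop Kristensson describes can be pictured with a minimal sketch. This is not the team's implementation: the class name, the sliding window, the confidence threshold, and the `classify` callback are all assumptions made for illustration, and the actual model behind HotGestures is not described here.

```python
from collections import deque
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical frame format: flattened joint positions for both hands.
Frame = Sequence[float]
# Hypothetical classifier: takes a window of frames, returns (tool, confidence).
Classifier = Callable[[List[Frame]], Tuple[Optional[str], float]]


class StreamingGestureRecognizer:
    """Illustrative only: feeds a sliding window of frames to a classifier."""

    def __init__(self, classify: Classifier, window: int = 30,
                 threshold: float = 0.9, hold_frames: int = 3) -> None:
        self.classify = classify
        self.frames: deque = deque(maxlen=window)
        self.threshold = threshold      # assumed minimum confidence
        self.hold_frames = hold_frames  # assumed consecutive agreeing predictions
        self._last: Optional[str] = None
        self._streak = 0

    def update(self, frame: Frame) -> Optional[str]:
        """Add one hand-tracking frame; return a tool name once it is stable."""
        self.frames.append(frame)
        if len(self.frames) < self.frames.maxlen:
            return None  # not enough history yet
        label, confidence = self.classify(list(self.frames))
        if label is None or confidence < self.threshold:
            self._last, self._streak = None, 0
        elif label == self._last:
            self._streak += 1
        else:
            self._last, self._streak = label, 1
        return self._last if self._streak >= self.hold_frames else None
```

Requiring several consecutive confident predictions before activating a tool is one common way to avoid triggering on stray hand movements; whether HotGestures does this is not stated in the article.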

HotGestures was designed so that users can also complete multistate gestures—for example, a user can make the hand signal for a pen and then indicate the desired width of the pen stroke by the distance between their fingers. “In this instance, it is also possible for the user to vary the stroke width by dynamically moving their index finger and thumb apart,” says Kristensson.
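
As a rough illustration of the pen example, the distance between thumb and index fingertips could be mapped linearly onto a stroke width. The function names, the distance range, and the width range below are assumptions chosen for the sketch, not values from the study.

```python
import math
from typing import Sequence


def pinch_distance(thumb_tip: Sequence[float], index_tip: Sequence[float]) -> float:
    """Euclidean distance between two fingertip positions (assumed to be in meters)."""
    return math.dist(thumb_tip, index_tip)


def stroke_width(distance_m: float,
                 min_dist: float = 0.01, max_dist: float = 0.10,
                 min_width: float = 1.0, max_width: float = 20.0) -> float:
    """Map a pinch distance to a stroke width, clamped to the allowed range.

    All four range parameters are hypothetical defaults for illustration.
    """
    t = (distance_m - min_dist) / (max_dist - min_dist)
    t = max(0.0, min(1.0, t))  # clamp so extreme poses stay within bounds
    return min_width + t * (max_width - min_width)
```

Calling `stroke_width(pinch_distance(thumb, index))` on every tracked frame would let the width follow the fingers as they move apart, which is the dynamic behavior Kristensson describes.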

In their study, Kristensson’s team had 16 study participants pilot the new system. The volunteers were presented with a video demonstration on how to use HotGestures and had as much time as they wanted to practice 10 different hand gestures, which were a mix of static and dynamic movements. Participants were timed on how long it took them to complete an action using a gesture versus selecting that same action via the menu. After piloting HotGestures, participants were surveyed about their experience.

The results show that using the gesture mode was faster than the menu selection mode for the majority of actions, but especially for the pen, cube, sphere, and duplicate actions. The researchers also found that there was no significant difference in error rate between the two modes.

The survey results show that participants perceived the approach using hand gestures to be faster but also a bit more mentally taxing to use and slightly less accurate than selecting from the menu. In their paper, the research team speculates that, since the idea of using gestures for 3D modeling is likely unfamiliar to most participants, it may lead to more hesitation and uncertainty compared with the use of a more conventional menu.

In a second experiment, the same participants were allowed to choose whether to use HotGestures or the selection menu. In this hybrid scenario, users tended to rely on HotGestures most of the time but preferred choosing from the menu for some commands. Overall, 14 out of 16 participants preferred the hybrid solution for selecting commands, and the remaining two participants preferred HotGestures only for selecting commands. None preferred using only the menu.

Kristensson says he is looking forward to this approach to VR being adopted more broadly. “We have made the source code and the data freely available, as we hope this can be an established, standard way of using virtual tools in virtual and augmented reality,” he says.

Michelle Hampson is a freelance writer based in Halifax. She frequently contributes to Spectrum's Journal Watch coverage, which highlights newsworthy studies published in IEEE journals.