Dear reader. This is your editor. This morning I found a note from Evan saying that, after working on this Video Friday post until 5 a.m., writing up an insane number of videos, he was too exhausted and unable to finish the post. Evan collapsed. Conked out. Shut down. I know, I was very worried too. This was serious. What a terrible thing to happen. A Friday without Video Friday?! So I am going to finish this post for you. You will not be let down. You will have Video Friday today!

How’s Evan? Oh, dude is fine. He just needed some sleep and will be back next week.

A long time ago (in 2008), there was a competition in Japan for really, really stupid robots. I don’t know why it stopped, but now we have something better: a competition where stupid robots try, and generally fail, to wrestle each other.

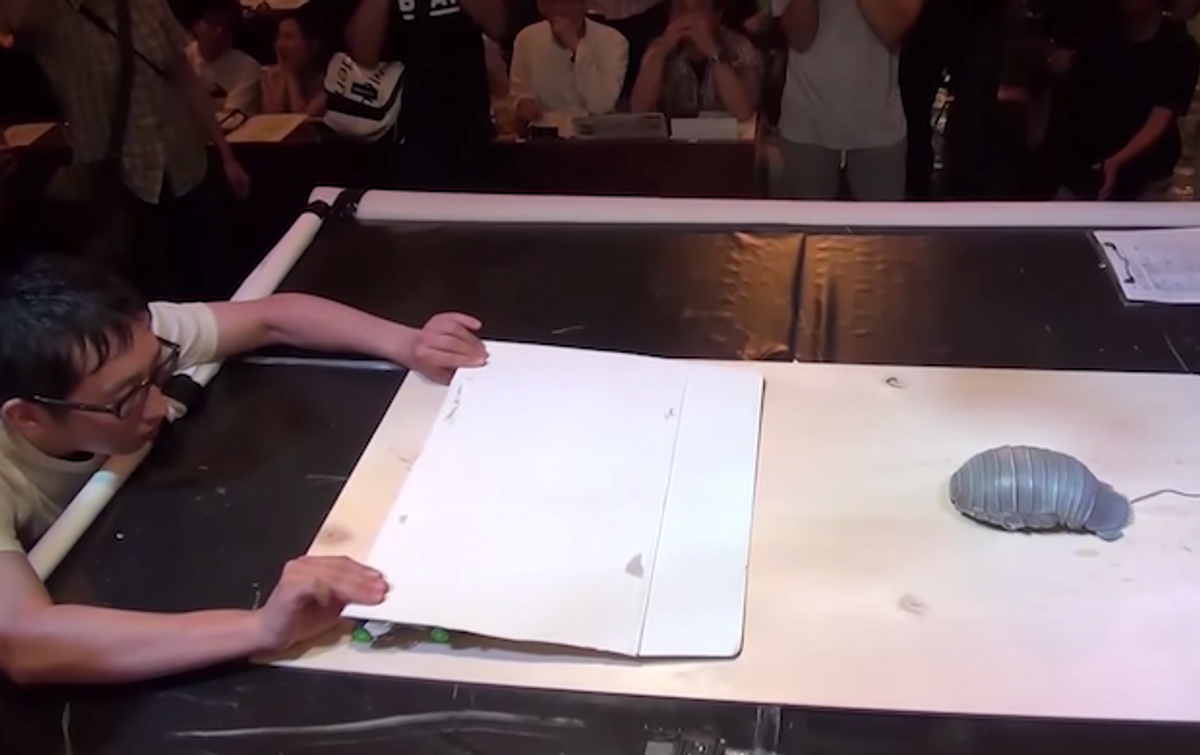

Hebocon is a robot sumo-wrestling tournament for those who don’t have the technical skills to actually make robots.

31 pseudo-robots that don’t even move properly came together to go head to head.

The robots in this tournament are nothing like what their builders initially imagined them to be. Robots are forgotten on the train, strategies made with careful planning only result in failure, and the entrants who enter the ring brag about their robot’s secret moves that don’t really exist.

This is the heated battle between crappy but lovable robots.

The DelFly Explorer flapping-wing micro-UAV has learned to use onboard vision to localize and escape from a room through a window, which is pretty remarkable:

[ arXiv ]

Want to know how much fun Dash is to play with? Just ask this dog:

[ Dash Robotics ]

The description for this is “Closed Kinematic Chain Random Sampling,” but I just imagine this robot TOTALLY ROCKING OUT to some kind of crazy techno soundtrack that only it can hear:

Also, we need to see this on the real robot. Badly.

[ YouTube ]

This, in case you were wondering, is what happens when an airplane and a quadcopter have a love child. It’s the latest version of the Arcturus Jump VTOL UAV:

[ Arcturus UAV ]

Christmas? Who cares about Christmas! I can’t wait for December 15:

[ ASL ]

We’re very familiar with the magic that Kiva Systems brought to Amazon’s fulfillment process, but that doesn’t make it any less fun to watch. Plus, robot arms and some of the fastest conveyor belts I’ve ever seen.

[ Amazon ]

This is a really, really cool thing to do with drones:

Whenever you’re not using a telepresence robot, shackle it to a drain pipe in your basement to keep this from happening:

Via [ Hackaday ]

Impressive: dressing Jibo up as a turkey for Thanksgiving. More impressive: that kid’s shirt with the six retro robots on it.

[ Jibo ]

Plen. Plen is adorable.

[ Plen ]

Roboticists Julie Shah of MIT, Michael Peshkin of Northwestern, Allison Okamura of Stanford, and Lynn Parker of NSF talk about the promises and challenges of cooperative robots.

From disaster recovery to caring for the elderly in the home, NSF-funded scientists and engineers are developing robots that can handle critical tasks in close proximity to humans, safely and with greater resilience than previous generations of intelligent machines.

[ NSF ]

Here’s an example of how much faster (and more autonomous) DRC robots have gotten since the 2013 trials:

[ MIT DRC ]

This is what’s been going on lately at the Nimbus Lab at the University of Nebraska-Lincoln. There’s a whole bunch of stuff (it’s a 14-minute video), but I think the most interesting clips are at 9:08 (integrating ROS and Google Glass) and 10:15 (Multiple UAV Flight and Landing).

[ Nimbus Lab ]

As it turns out, rubble piles are really hard to navigate, both for humans and for robots like RHex:

They’re working on it, though.

[ Kod*lab ]

Now you can just tap some commands on your iPad and Rollin’ Justin will bring you breakfast in bed. Sort of.

We choose an object-centered high level of abstraction to command a robot. The symbolic and geometric reasoning is thereby shifted from the operator to the robot, allowing the operator to focus on the task rather than on robot-specific capabilities. Evaluating the possibility of actions in advance allows us to simplify the operator frontend by providing only possible actions for commanding the robot. By directly displaying video from the robot, we obtain an egocentric perspective which we augment with information about the internal state of the robot. We provide a mechanism for the operator to directly interact with the displayed information by applying a point-and-click paradigm. This way, commanding manipulation tasks is reduced to intuitive selection of the objects to be manipulated, replicating the real world experience of the operator.

[ DLR RMC ]

Not exactly what I was hoping for in Aldebaran’s next robot, but it’s pretty cute.

You can buy one in the brand new Aldebaran online store for $40.

[ Aldebaran ]

How eerie is this hexapod’s color-detecting behavior?

Via [ Hackaday ]

You probably don’t need to listen to all of Clearpath’s PR2 Community Call, but at 8:30, they talk about PR2 upgraaades! Yes, PR2 is still one of the most capable research robots out there, and until something better comes along, Clearpath is going to keep them up and running.

[ Clearpath PR2 ]

Lastly this week, we have IEEE Spectrum’s very own Eliza Strickland moderating a panel discussion on “How the Age of Machine Consciousness is Transforming our Lives,” featuring Max Tegmark (MIT professor, author of “Our Mathematical Universe”) and David Ferrucci (ex-IBM and one of the creators of Watson, now with hedge fund Bridgewater Associates).

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.