Meta’s chief AI scientist, Yann LeCun, doesn’t lose sight of his far-off goal, even when talking about concrete steps in the here and now. “We want to build intelligent machines that learn like animals and humans,” LeCun tells IEEE Spectrum in an interview.

Today’s concrete step is a series of papers from Meta, the company formerly known as Facebook, on a type of self-supervised learning (SSL) for AI systems. SSL stands in contrast to supervised learning, in which an AI system learns from a labeled data set (the labels serve as the teacher who provides the correct answers when the AI system checks its work). LeCun has often spoken about his strong belief that SSL is a prerequisite for AI systems that can build “world models” and can therefore begin to gain humanlike faculties such as reason, common sense, and the ability to transfer skills and knowledge from one context to another. The new papers show how a self-supervised system called a masked auto-encoder (MAE) learned to reconstruct images, video, and even audio from very patchy and incomplete data. While MAEs are not a new idea, Meta has extended the work to new domains.

By figuring out how to predict missing data, either in a static image or a video or audio sequence, the MAE system must be constructing a world model, LeCun says. “If it can predict what’s going to happen in a video, it has to understand that the world is three-dimensional, that some objects are inanimate and don’t move by themselves, that other objects are animate and harder to predict, all the way up to predicting complex behavior from animate persons,” he says. And once an AI system has an accurate world model, it can use that model to plan actions.

“The essence of intelligence is learning to predict,” LeCun says. And while he’s not claiming that Meta’s MAE system is anything close to an artificial general intelligence, he sees it as an important step.

Not everyone agrees that the Meta researchers are on the right path to human-level intelligence. Yoshua Bengio, who is credited along with his fellow Turing Award winners LeCun and Geoffrey Hinton with the development of deep neural networks, sometimes engages in friendly sparring with LeCun over big ideas in AI. In an email to IEEE Spectrum, Bengio spells out both some differences and similarities in their aims.

“I really don’t think that our current approaches (self-supervised or not) are sufficient to bridge the gap to human-level intelligence,” Bengio writes. He adds that “qualitative advances” in the field will be needed to really move the state of the art anywhere closer to human-scale AI.

While he agrees with LeCun that the ability to reason about the world is a key element of intelligence, Bengio’s team isn’t focused on models that can predict, but rather on those that can render knowledge in the form of natural language. Such a model “would allow us to combine these pieces of knowledge to solve new problems, perform counterfactual simulations, or examine possible futures,” he notes. Bengio’s team has developed a new neural-net framework that has a more modular nature than those favored by LeCun, whose team is working on end-to-end learning (models that learn all the steps between the initial input stage and the final output result).

The transformer craze

Meta’s MAE work builds on a trend toward a type of neural network architecture called transformers. Transformers were first adopted in natural-language processing, where they caused big jumps in performance for models like Google’s BERT and OpenAI’s GPT-3. Meta AI researcher Ross Girshick says that transformers’ success with language caused people in the computer-vision community to “work feverishly to try to replicate those results” in their own field.

Meta’s researchers weren’t the first to successfully apply transformers to visual tasks; Girshick says that Google research on a Vision Transformer (ViT) inspired the Meta team. “By adopting the ViT architecture, it eliminated obstacles that had been standing in the way of experimenting with some ideas,” he tells Spectrum.

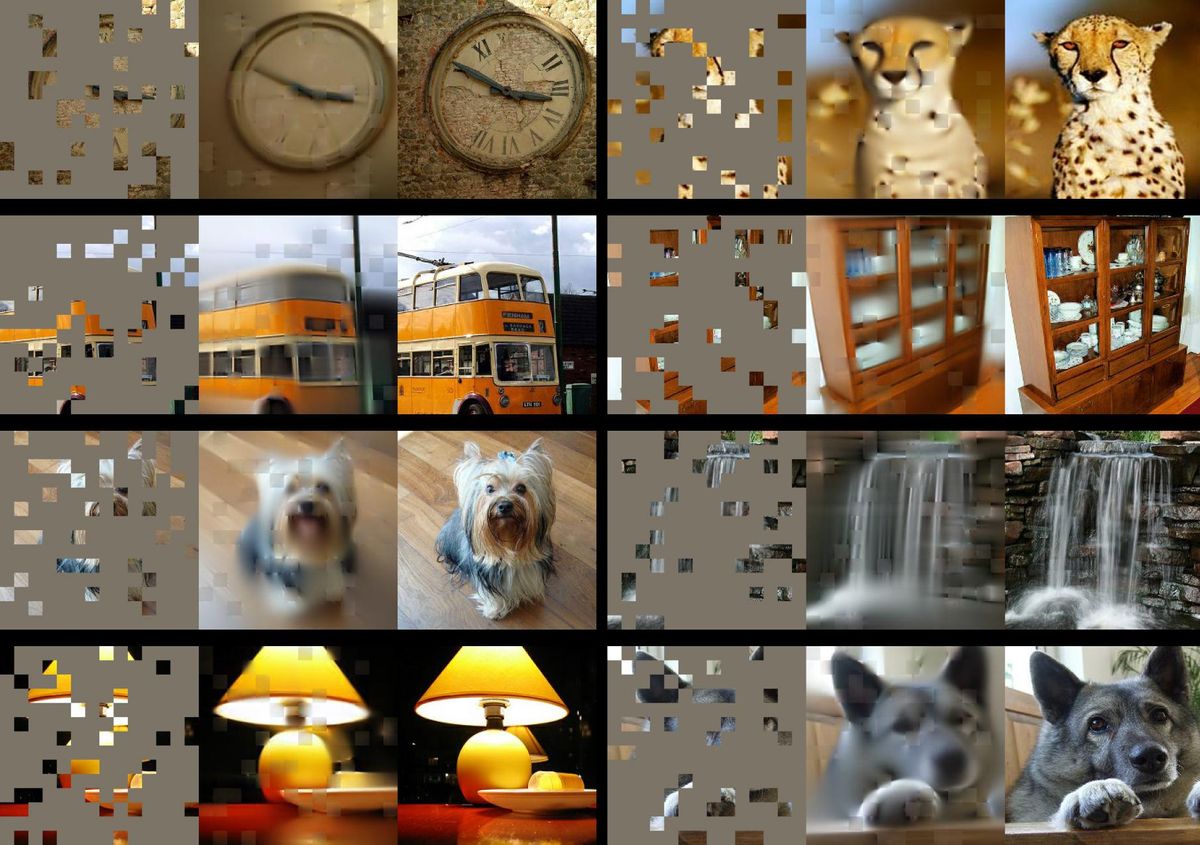

Girshick coauthored Meta’s first paper on MAE systems, which dealt with static images. The system’s training was analogous to how BERT and other language transformers are trained. Such language models are shown huge databases of text with some fraction of the words missing, or “masked.” The models try to predict the missing words, and then the missing text is unmasked so the models can check their work, adjust their parameters, and try again with a new chunk of text. To do something similar with vision, Girshick explains, the team broke up images into patches, masked some of the patches, and asked the MAE system to predict the missing parts of the images.
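
To make the patch-and-mask idea concrete, here is a minimal Python sketch. It is an illustration only, not Meta’s code: the function names, the toy 8-by-8 “image,” the 2-by-2 patch size, and the 75 percent masking ratio are all assumptions chosen for the example.

```python
import random

def split_into_patches(image, patch_size):
    """Split a 2D grid (list of rows) into non-overlapping square patches.

    Patches are returned in row-major order; each patch is a list of rows.
    Assumes the height and width are multiples of patch_size.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [row[left:left + patch_size]
                     for row in image[top:top + patch_size]]
            patches.append(patch)
    return patches

def choose_masked_patches(num_patches, mask_ratio, rng):
    """Randomly pick patches to hide; returns (visible, masked) index lists."""
    num_masked = int(num_patches * mask_ratio)
    order = list(range(num_patches))
    rng.shuffle(order)
    masked = sorted(order[:num_masked])
    visible = sorted(order[num_masked:])
    return visible, masked

# Toy 8x8 "image" whose pixel values are simply 0..63 (hypothetical data).
image = [[r * 8 + c for c in range(8)] for r in range(8)]
patches = split_into_patches(image, patch_size=2)   # 16 patches of 2x2 pixels
visible, masked = choose_masked_patches(
    len(patches), mask_ratio=0.75, rng=random.Random(0))
print(len(patches), len(visible), len(masked))
```

A model trained this way would be shown only the `visible` patches and asked to fill in the `masked` ones.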

One of the team’s breakthroughs was the realization that masking a large proportion of the image gave the best results—a key difference from language transformers, where perhaps 15 percent of the words might be masked. “Language is an extremely dense and efficient communication system,” says Girshick. “Every symbol has a lot of meaning packed in. But images, which are signals from the natural world, are not constructed to remove redundancy. That’s why we can compress things so well when we create JPG images,” he notes.

By masking more than 75 percent of the patches in an image, Girshick explains, they remove the redundancy from the image that would otherwise make the task too trivial for training. Their two-part MAE system first uses an encoder that learns relationships between pixels across the training data set, then a decoder does its best to reconstruct original images from the masked versions. After this training regimen is complete, the encoder can also be fine-tuned for vision tasks such as classification and object detection.
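
The “check their work” step can be sketched in a few lines of Python. This is a toy under stated assumptions, not the Meta system: a trivial averaging function stands in for the trained encoder and decoder, the patch data is made up, and scoring only the hidden patches is a choice made for this example.

```python
import random

def mean_squared_error(predicted, target):
    """Average squared difference between two equal-length lists of numbers."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)

def masked_reconstruction_error(patches, mask_ratio, predict, rng):
    """Hide a fraction of patches, predict them from the rest, score the hidden ones."""
    indices = list(range(len(patches)))
    rng.shuffle(indices)
    num_masked = int(len(patches) * mask_ratio)
    if num_masked == 0 or num_masked == len(patches):
        raise ValueError("mask_ratio must leave some patches hidden and some visible")
    masked, visible = indices[:num_masked], indices[num_masked:]
    visible_patches = {i: patches[i] for i in visible}
    errors = [mean_squared_error(predict(visible_patches, i), patches[i])
              for i in masked]
    return sum(errors) / len(errors)

def average_patch_predictor(visible_patches, masked_index):
    """Toy stand-in for a trained model: the element-wise mean of visible patches."""
    stack = list(visible_patches.values())
    return [sum(values) / len(stack) for values in zip(*stack)]

# Sixteen flattened four-pixel patches with made-up brightness values.
patches = [[float(i)] * 4 for i in range(16)]
error = masked_reconstruction_error(
    patches, 0.75, average_patch_predictor, random.Random(0))
print(f"mean error on hidden patches: {error:.2f}")
```

In a real system the error would drive parameter updates; here it only shows what is being measured.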

“The reason why ultimately we’re excited is the results we see in transfer learning to downstream tasks,” says Girshick. When using the encoder for tasks such as object recognition, he says, “we’re seeing gains that are really substantial; they move the needle.” He notes that scaling up the model led to better performance, which is a promising sign for future models because SSL “has the potential to use a lot of data without requiring manual annotation.”

Going all-in for learning on massive uncurated data sets may be Meta’s tactic for improving results in SSL, but it’s also an increasingly controversial approach. AI ethics researchers such as Timnit Gebru have called attention to the biases inherent in the uncurated data sets that large language models learn from, sometimes with disastrous results.

Self-supervised learning in video and audio

In the MAE system for video, the masking obscured up to 95 percent of each video frame, because the similarities between frames mean that video signals have even more redundancy than static images. Video is typically very computationally demanding to process, says Meta researcher Christoph Feichtenhofer, and one big advantage of the MAE approach is that masking up to 95 percent of each frame reduces the computational cost by up to 95 percent.
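
The arithmetic behind that saving can be sketched as follows, assuming that the model only has to process the patches left visible and that cost grows roughly in step with the number of patches processed; the article’s figure suggests as much but does not spell it out. The clip dimensions (16 frames of 224 by 224 pixels, cut into 16-by-16 patches) and the function name are hypothetical.

```python
def video_patch_budget(num_frames, frame_height, frame_width, patch_size, mask_ratio):
    """Count the patches in a clip and how many remain visible after masking."""
    patches_per_frame = (frame_height // patch_size) * (frame_width // patch_size)
    total = num_frames * patches_per_frame
    masked = int(total * mask_ratio)
    visible = total - masked
    return total, visible, masked / total

# Hypothetical clip: 16 frames, 224x224 pixels, 16x16 patches, 95 percent masked.
total, visible, fraction_skipped = video_patch_budget(16, 224, 224, 16, 0.95)
print(total, visible, round(fraction_skipped, 3))
```

Under those assumptions, only 157 of the clip’s 3,136 patches would need to be processed.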

The clips used in these experiments were only a few seconds long, but Feichtenhofer says that training an AI system on longer videos is “a very active research topic.” Imagine, he says, a virtual assistant who has a video feed of your house and can tell you where you left your keys an hour ago. (Whether you consider that possibility amazing or creepy, rest assured that it’s quite far off.)

More immediately, one can imagine both the image and video systems being useful for the kind of classification tasks that are needed for content moderation on Facebook and Instagram, and Feichtenhofer says that “integrity” is one possible application. “We are definitely talking to product teams,” he says, “but it’s very new, and we don’t have any concrete projects yet.”

For the audio MAE work, which the team says will soon be posted on the arXiv preprint server, the Meta AI team found a clever way to apply the masking technique. They turned the sound files into spectrograms, visual representations of the spectrum of frequencies within signals, and then masked parts of those images for training. The reconstructed audio is pretty impressive, though the model can currently handle clips of only a few seconds.
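
A rough sketch of the spectrogram trick, in plain Python, might look like the following. It is a simplification rather than the team’s method: the transform is a naive, unwindowed short-time Fourier transform, individual time-frequency cells stand in for image patches, and the test tone, frame size, and 80 percent masking ratio are all assumptions.

```python
import cmath
import math
import random

def magnitude_spectrogram(samples, frame_size, hop):
    """Naive short-time Fourier transform: one row of magnitudes per time frame."""
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        row = []
        for k in range(frame_size // 2 + 1):   # non-negative frequencies only
            coefficient = sum(
                x * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n, x in enumerate(frame)
            )
            row.append(abs(coefficient))
        frames.append(row)
    return frames   # indexed as [time][frequency]

def mask_spectrogram(spectrogram, mask_ratio, rng):
    """Return a copy with a random fraction of cells replaced by None."""
    cells = [(t, f) for t, row in enumerate(spectrogram) for f in range(len(row))]
    hidden = set(rng.sample(cells, int(len(cells) * mask_ratio)))
    masked = [
        [None if (t, f) in hidden else value for f, value in enumerate(row)]
        for t, row in enumerate(spectrogram)
    ]
    return masked, hidden

# Hypothetical test tone: a sine wave that completes 4 cycles per 32-sample frame.
samples = [math.sin(2 * math.pi * 4 * n / 32) for n in range(128)]
spectrogram = magnitude_spectrogram(samples, frame_size=32, hop=32)
masked, hidden = mask_spectrogram(spectrogram, mask_ratio=0.8, rng=random.Random(0))
print(len(spectrogram), len(spectrogram[0]), len(hidden))
```

The masked grid is what a model would see during training, with the original spectrogram serving as the answer key.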

Bernie Huang, who worked on the audio system, says that potential applications include classification tasks, helping with voice over IP calls by filling in audio that gets lost when a packet gets dropped, or finding more efficient ways to compress audio files.

Meta has been on something of an AI charm offensive, open-sourcing research such as these MAE models and offering up a pretrained large language model to the AI community for research purposes. But critics have noted that for all this openness on the research side, Meta has not made its core commercial algorithms available for study—those that control newsfeeds, recommendations, and ad placements.

Eliza Strickland is a senior editor at IEEE Spectrum, where she covers AI, biomedical engineering, and other topics. She holds a master’s degree in journalism from Columbia University.