The machine learning industry’s effort to measure itself using a standard yardstick has reached a milestone. Forgive the mixed metaphor, but that’s actually what’s happened with the release of MLPerf Inference v1.0 today. Using a suite of benchmark neural networks measured under a standardized set of conditions, 1,994 AI systems battled it out to show how quickly their neural networks can process new data. Separately, MLPerf ran an energy-efficiency benchmark, which drew some 850 entries.

This contest was the first following a set of trial runs where the AI consortium MLPerf and its parent organization MLCommons worked out the best measurement criteria. But the big winner in this first official version was the same as it had been in those warm-up rounds—Nvidia.

Entries were combinations of software and systems that ranged in scale from Raspberry Pis to supercomputers. They were powered by processors and accelerator chips from AMD, Arm, Centaur Technology, Edgecortix, Intel, Nvidia, Qualcomm, and Xilinx. And entries came from 17 organizations, including Alibaba, Centaur, Dell, Fujitsu, Gigabyte, HPE, Inspur, Krai, Lenovo, Mobilint, Neuchips, and Supermicro.

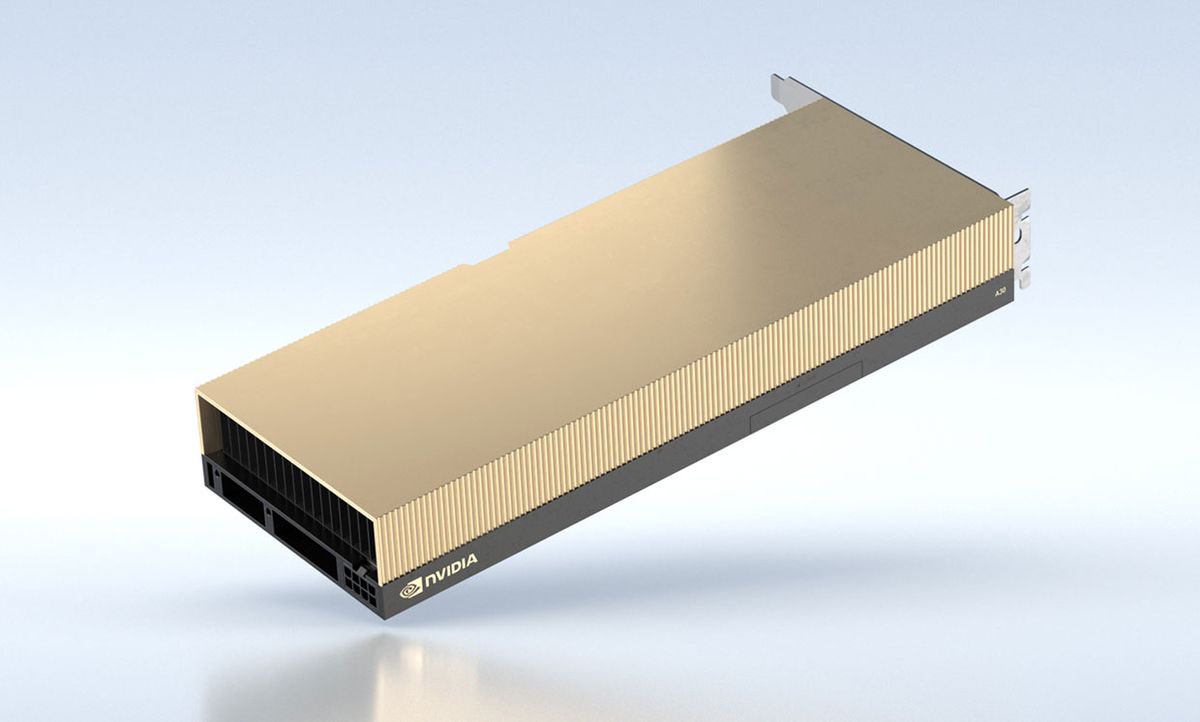

Despite that diversity, most of the systems used Nvidia GPUs to accelerate their AI functions. There were some other AI accelerators on offer, notably Qualcomm’s AI 100 and Edgecortix’s DNA. But Edgecortix was the only one of the many, many AI accelerator startups to jump in. And Intel chose to show off how well its CPUs did instead of offering up something from its US $2-billion acquisition of AI hardware startup Habana.

Before we get into the details of whose what was how fast, you’re going to need some background on how these benchmarks work. MLPerf is nothing like the famously straightforward Top500 list of the supercomputing great and good, where a single value can tell you most of what you need to know. The consortium decided that the demands of machine learning are just too diverse to be boiled down to something like tera-operations per second per watt, a metric often cited in AI accelerator research.

First, systems were judged on six neural networks. Entrants did not have to compete on all six, however.

- BERT, for Bidirectional Encoder Representations from Transformers, is a natural language processing AI contributed by Google. Given a question, BERT predicts a suitable answer.

- DLRM, for Deep Learning Recommendation Model, is a recommender system trained to optimize click-through rates. It’s used to recommend items for online shopping and to rank search results and social media content. Facebook was the major contributor of the DLRM code.

- 3D U-Net is used in medical imaging systems to tell which voxels in a 3D MRI scan are part of a tumor and which are healthy tissue. It’s trained on a dataset of brain tumors.

- RNN-T, for Recurrent Neural Network Transducer, is a speech recognition model. Given a sequence of speech input, it predicts the corresponding text.

- ResNet is the granddaddy of image classification algorithms. This round used ResNet-50 version 1.5.

- SSD, for Single Shot Detector, spots multiple objects within an image. It’s the kind of thing a self-driving car would use to find important things like other cars. Depending on the scale of the system, it ran on either a MobileNet version 1 or a ResNet-34 backbone.

Competitors were divided into systems meant to run in a datacenter and those designed for operation at the “edge”—in a store, embedded in a security camera, etc.

Datacenter entrants were tested under two conditions. The first, called “offline,” was a situation where all the data was available in a single database, so the system could just hoover it up as fast as it could handle. The second, called “server,” more closely simulated the real life of a datacenter server, where data arrives in bursts and the system has to complete its work quickly and accurately enough to be ready for the next burst.
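
The difference between the two datacenter conditions can be sketched with a toy queueing model. This is not MLPerf’s LoadGen harness, and every name in it (`offline_throughput`, `simulate_server`, `max_server_qps`, `percentile`) is a hypothetical helper. It assumes a single worker with a fixed, made-up per-query service time, randomly (Poisson) timed arrivals for the server case, and a 99th-percentile latency bound picked only for illustration. Offline throughput is simply how fast the worker clears a backlog; the server figure is the highest arrival rate that still keeps tail latency under the bound.

```python
import math
import random


def percentile(values, pct):
    """Nearest-rank percentile of a list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def offline_throughput(service_time_s):
    """Offline: every sample is available up front, so the (hypothetical)
    single worker runs flat out, one sample per service time."""
    return 1.0 / service_time_s


def simulate_server(qps, service_time_s, num_queries=10_000, seed=0):
    """Server: queries arrive at random (Poisson) times at an average rate of
    `qps`, and one first-in, first-out worker handles them one at a time.
    Returns each query's latency (queueing wait plus service) in seconds."""
    rng = random.Random(seed)  # fixed seed keeps runs repeatable
    arrival = 0.0
    worker_free_at = 0.0
    latencies = []
    for _ in range(num_queries):
        arrival += rng.expovariate(qps)  # exponential gaps -> Poisson arrivals
        start = max(arrival, worker_free_at)  # wait if the worker is still busy
        worker_free_at = start + service_time_s
        latencies.append(worker_free_at - arrival)
    return latencies


def max_server_qps(service_time_s, latency_bound_s, pct=99.0, iterations=25):
    """Binary-search the highest arrival rate whose pct-th percentile
    latency stays within latency_bound_s."""
    lo, hi = 0.0, offline_throughput(service_time_s)
    for _ in range(iterations):
        mid = (lo + hi) / 2
        tail = percentile(simulate_server(mid, service_time_s), pct)
        if tail <= latency_bound_s:
            lo = mid  # bound met: try a higher rate
        else:
            hi = mid  # bound missed: back off
    return lo


if __name__ == "__main__":
    service_time = 0.001  # hypothetical 1 ms per query
    print("offline samples/s:", offline_throughput(service_time))
    print("server queries/s:", round(max_server_qps(service_time, 0.010)))
```

In this toy model the server figure always lands below the offline one, because random arrivals leave the worker alternately idle and backlogged. Real systems, which batch queries across many accelerators, do not behave this simply.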

Edge entrants tackled the offline scenario as well. But they also had to handle a single-stream test, in which they were fed one stream of data (say, a single conversation for language processing), and a multistream test like the one a self-driving car might face with its multiple cameras.
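
The two edge-specific tests ask different questions: how long one query takes when nothing else is in flight, and how many streams can share the hardware before a deadline is missed. The sketch below is illustrative only. The 90th-percentile summary, the 50 ms frame interval, and the linear batch-cost model (a fixed overhead plus a per-sample cost) are assumptions for the example, and `single_stream_latency` and `max_streams` are hypothetical helpers, not MLPerf code.

```python
import math


def single_stream_latency(latencies_ms, pct=90.0):
    """Single stream: each query is issued only after the previous one
    finishes; summarize the measured latencies as a nearest-rank tail
    percentile (the percentile here is an assumption)."""
    ordered = sorted(latencies_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def max_streams(frame_interval_ms, fixed_overhead_ms, per_sample_ms):
    """Multistream: every frame interval, each stream (say, one camera)
    contributes one sample, and the whole batch must finish before the next
    frame arrives. Under a hypothetical linear cost model, a batch of n
    samples takes fixed_overhead_ms + n * per_sample_ms; return the largest n."""
    budget = frame_interval_ms - fixed_overhead_ms
    if budget < per_sample_ms:
        return 0  # not even one stream meets the deadline
    return math.floor(budget / per_sample_ms)


if __name__ == "__main__":
    measured = [1.9, 2.1, 2.0, 2.4, 2.2, 2.0, 3.1, 2.1, 2.0, 2.3]  # made-up ms
    print("single-stream latency (ms):", single_stream_latency(measured))
    print("streams at 50 ms/frame:", max_streams(50.0, 2.0, 0.25))
```

Reporting a percentile rather than the mean keeps one unusually slow query (the 3.1 ms outlier above) from dominating the result, while still penalizing a system that is often slow.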

Got all that? No? Well, Nvidia summed it up in this handy slide:

And finally, the efficiency benchmarks measured power draw at the wall plug, averaged over 10 minutes to smooth out the highs and lows caused by processors scaling their voltages and frequencies.
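
Averaging wall-plug power over a long window can be expressed in a few lines. This sketch assumes, hypothetically, that the power meter delivers timestamped readings in watts; it integrates them with the trapezoidal rule to get energy in joules, then divides by the elapsed time to get average power. MLPerf’s actual meter setup and sampling rate are not described here, and `average_power` is an illustrative helper.

```python
def average_power(samples):
    """Return (average watts, total joules) for a list of (time_s, watts)
    readings sorted by time, integrating with the trapezoidal rule."""
    if len(samples) < 2:
        raise ValueError("need at least two readings to span an interval")
    energy_j = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        energy_j += 0.5 * (p0 + p1) * (t1 - t0)  # watts x seconds = joules
    duration_s = samples[-1][0] - samples[0][0]
    return energy_j / duration_s, energy_j


if __name__ == "__main__":
    # Made-up 10-minute trace sampled once a second: power flips between
    # 300 W and 500 W as a processor scales its voltage and frequency.
    trace = [(t, 300.0 if t % 2 == 0 else 500.0) for t in range(601)]
    watts, joules = average_power(trace)
    print(f"average {watts:.1f} W over 600 s ({joules:.0f} J)")
    # prints: average 400.0 W over 600 s (240000 J)
```

The jittery trace collapses to a single steady 400 W figure, which is the point of averaging over a long window.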

Here, then, are the top performers in each category:

The Fastest

Datacenter (commercially available systems, ranked by server condition)

| | Image Classification | Object Detection | Medical Imaging | Speech-to-Text | Natural Language Processing | Recommendation |
|---|---|---|---|---|---|---|
| Submitter | Inspur | DellEMC | NVIDIA | DellEMC | DellEMC | Inspur |
| System name | NF5488A5 | Dell EMC DSS 8440 (10x A100-PCIe-40GB) | NVIDIA DGX-A100 (8x A100-SXM-80GB, TensorRT) | Dell EMC DSS 8440 (10x A100-PCIe-40GB) | Dell EMC DSS 8440 (10x A100-PCIe-40GB) | NF5488A5 |
| Processor | AMD EPYC 7742 | Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz | AMD EPYC 7742 | Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz | Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz | AMD EPYC 7742 |
| No. Processors | 2 | 2 | 2 | 2 | 2 | 2 |
| Accelerator | NVIDIA A100-SXM-80GB | NVIDIA A100-PCIe-40GB | NVIDIA A100-SXM-80GB | NVIDIA A100-PCIe-40GB | NVIDIA A100-PCIe-40GB | NVIDIA A100-SXM-80GB |
| No. Accelerators | 8 | 10 | 8 | 10 | 10 | 8 |
| Server queries/s | 271,246 | 8,265 | 479.65 | 107,987 | 26,749 | 2,432,860 |
| Offline samples/s | 307,252 | 7,612 | 479.65 | 107,269 | 29,265 | 2,455,010 |

Edge (commercially available, ranked by single-stream latency)

| | Image Classification | Object Detection (small) | Object Detection (large) | Medical Imaging | Speech-to-Text | Natural Language Processing |
|---|---|---|---|---|---|---|
| Submitter | NVIDIA | NVIDIA | NVIDIA | NVIDIA | NVIDIA | NVIDIA |
| System name | NVIDIA DGX-A100 (1x A100-SXM-80GB, TensorRT, Triton) | NVIDIA DGX-A100 (1x A100-SXM-80GB, TensorRT, Triton) | NVIDIA DGX-A100 (1x A100-SXM-80GB, TensorRT, Triton) | NVIDIA DGX-A100 (1x A100-SXM-80GB, TensorRT) | NVIDIA DGX-A100 (1x A100-SXM-80GB, TensorRT) | NVIDIA DGX-A100 (1x A100-SXM-80GB, TensorRT) |
| Processor | AMD EPYC 7742 | AMD EPYC 7742 | AMD EPYC 7742 | AMD EPYC 7742 | AMD EPYC 7742 | AMD EPYC 7742 |
| No. Processors | 2 | 2 | 2 | 2 | 2 | 2 |
| Accelerator | NVIDIA A100-SXM-80GB | NVIDIA A100-SXM-80GB | NVIDIA A100-SXM-80GB | NVIDIA A100-SXM-80GB | NVIDIA A100-SXM-80GB | NVIDIA A100-SXM-80GB |
| No. Accelerators | 1 | 1 | 1 | 1 | 1 | 1 |
| Single stream latency (milliseconds) | 0.431369 | 0.25581 | 1.686353 | 19.919082 | 22.585203 | 1.708807 |
| Multiple stream (streams) | 1344 | 1920 | 56 | | | |
| Offline samples/s | 38011.6 | 50926.6 | 985.518 | 60.6073 | 14007.6 | 3601.96 |

The Most Efficient

Datacenter

| | Image Classification | Object Detection | Medical Imaging | Speech-to-Text | Natural Language Processing | Recommendation |
|---|---|---|---|---|---|---|
| Submitter | Qualcomm | Qualcomm | NVIDIA | NVIDIA | NVIDIA | NVIDIA |
| System name | Gigabyte R282-Z93 5x QAIC100 | Gigabyte R282-Z93 5x QAIC100 | Gigabyte G482-Z54 (8x A100-PCIe, MaxQ, TensorRT) | NVIDIA DGX Station A100 (4x A100-SXM-80GB, MaxQ, TensorRT) | NVIDIA DGX Station A100 (4x A100-SXM-80GB, MaxQ, TensorRT) | NVIDIA DGX Station A100 (4x A100-SXM-80GB, MaxQ, TensorRT) |
| Processor | AMD EPYC 7282 16-Core Processor | AMD EPYC 7282 16-Core Processor | AMD EPYC 7742 | AMD EPYC 7742 | AMD EPYC 7742 | AMD EPYC 7742 |
| No. Processors | 2 | 2 | 2 | 1 | 1 | 1 |
| Accelerator | QUALCOMM Cloud AI 100 PCIe HHHL | QUALCOMM Cloud AI 100 PCIe HHHL | NVIDIA A100-PCIe-40GB | NVIDIA A100-SXM-80GB | NVIDIA A100-SXM-80GB | NVIDIA A100-SXM-80GB |
| No. Accelerators | 5 | 5 | 8 | 4 | 4 | 4 |
| Server queries/s | 78,502 | 1557 | 372 | 43,389 | 10,203 | 890,334 |
| System Power (Watts) | 534 | 548 | 2261 | 1314 | 1302 | 1342 |
| Queries/joule | 147.06 | 2.83 | 0.16 | 33.03 | 7.83 | 663.61 |
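
The Queries/joule row follows from the two rows above it: a watt is one joule per second, so dividing server queries per second by system watts leaves queries per joule. A short check using the rounded figures printed in the table lands close to, but not always exactly on, the published values (about 147.0 versus 147.06 for the Qualcomm image-classification entry), so treat this as a units check rather than a reproduction of MLPerf’s own calculation. `queries_per_joule` is an illustrative helper.

```python
def queries_per_joule(queries_per_s, watts):
    """Efficiency as (queries/s) / (J/s): the seconds cancel, leaving queries/J."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return queries_per_s / watts


# Rounded figures copied from the datacenter efficiency table above.
entries = {
    "Image Classification (Qualcomm)": (78_502, 534),
    "Speech-to-Text (NVIDIA)": (43_389, 1314),
    "Recommendation (NVIDIA)": (890_334, 1342),
}

for name, (qps, watts) in entries.items():
    print(f"{name}: {queries_per_joule(qps, watts):.2f} queries/J")
```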

Edge (commercially available, ranked by energy per stream)

| | Image Classification | Object Detection (small) | Object Detection (large) | Medical Imaging | Speech-to-Text | Natural Language Processing |
|---|---|---|---|---|---|---|
| Submitter | Qualcomm | NVIDIA | Qualcomm | NVIDIA | NVIDIA | NVIDIA |
| System name | AI Development Kit | NVIDIA Jetson Xavier NX (MaxQ, TensorRT) | AI Development Kit | NVIDIA Jetson Xavier NX (MaxQ, TensorRT) | NVIDIA Jetson Xavier NX (MaxQ, TensorRT) | NVIDIA Jetson Xavier NX (MaxQ, TensorRT) |
| Processor | Qualcomm Snapdragon 865 | NVIDIA Carmel (ARMv8.2) | Qualcomm Snapdragon 865 | NVIDIA Carmel (ARMv8.2) | NVIDIA Carmel (ARMv8.2) | NVIDIA Carmel (ARMv8.2) |
| No. Processors | 1 | 1 | 1 | 1 | 1 | 1 |
| Accelerator | QUALCOMM Cloud AI 100 DM.2e | NVIDIA Xavier NX | QUALCOMM Cloud AI 100 DM.2 | NVIDIA Xavier NX | NVIDIA Xavier NX | NVIDIA Xavier NX |
| No. Accelerators | 1 | 1 | 1 | 1 | 1 | 1 |
| Single stream latency (milliseconds) | 0.85 | 1.67 | 30.44 | 819.08 | 372.37 | 57.54 |
| System energy/stream (joules) | 0.02 | 0.02 | 0.60 | 12.14 | 3.45 | 0.59 |

The continuing lack of entrants from AI hardware startups is glaring at this point, especially considering that many of them are members of MLCommons. When I’ve asked certain startups about it, they usually answer that the best measure of their hardware is how it runs their potential customers’ specific neural networks rather than how well they do on benchmarks.

That seems fair, of course, assuming these startups can get the attention of potential customers in the first place. It also assumes that customers actually know what they need.

“If you’ve never done AI, you don’t know what to expect; you don’t know what performance you want to hit; you don’t know what combinations you want with CPUs, GPUs, and accelerators,” says Armando Acosta, product manager for AI, high-performance computing, and data analytics at Dell Technologies. MLPerf, he says, “really gives customers a good baseline.”

Due to author error a mixed metaphor was labelled as a pun in an earlier version of this post. And on 28 April the post was corrected, because the column labels “Object Detection (large)” and “Object Detection (small)” had been accidentally swapped.

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.