Claude Shannon: Tinkerer, Prankster, and Father of Information Theory

Shannon, who died in 2001, is regarded as one of the greatest electrical engineering heroes of all time. This profile, originally published in 1992, reveals the many facets of his life and work.

Who is the real Claude Shannon? A visitor to Entropy House, the stuccoed mansion outside Boston where Shannon and his wife Betty have lived for more than 30 years, might reach different conclusions in different rooms. One room, prim and tidy, is lined with plaques that solemnly testify to Shannon's numerous honors, including the National Medal of Science, which he received in 1966; the Kyoto Prize, Japan's equivalent of the Nobel; and the IEEE Medal of Honor.

That room enshrines the Shannon whose work Robert W. Lucky, the executive director of research for AT&T Bell Laboratories, has called the greatest “in the annals of technological thought," and whose “pioneering insight" IBM Fellow Rolf W. Landauer has equated with Einstein's. That Shannon is the one who, as a young engineer at Bell Laboratories in 1948, defined the field of information theory. With a brilliant paper in the Bell System Technical Journal, he established the intellectual framework for the efficient packaging and transmission of electronic data. The paper, entitled “A Mathematical Theory of Communication," still stands as the Magna Carta of the communications age. [Editor's note: Read the paper on IEEE Xplore: Parts I and II; Part III.]

Editor's Note:

This month marks the centennial of the birth of Claude Shannon, the American mathematician and electrical engineer whose groundbreaking work laid out the theoretical foundation for modern digital communications. To celebrate the occasion, we're republishing online a memorable profile of Shannon that IEEE Spectrum ran in its April 1992 issue.

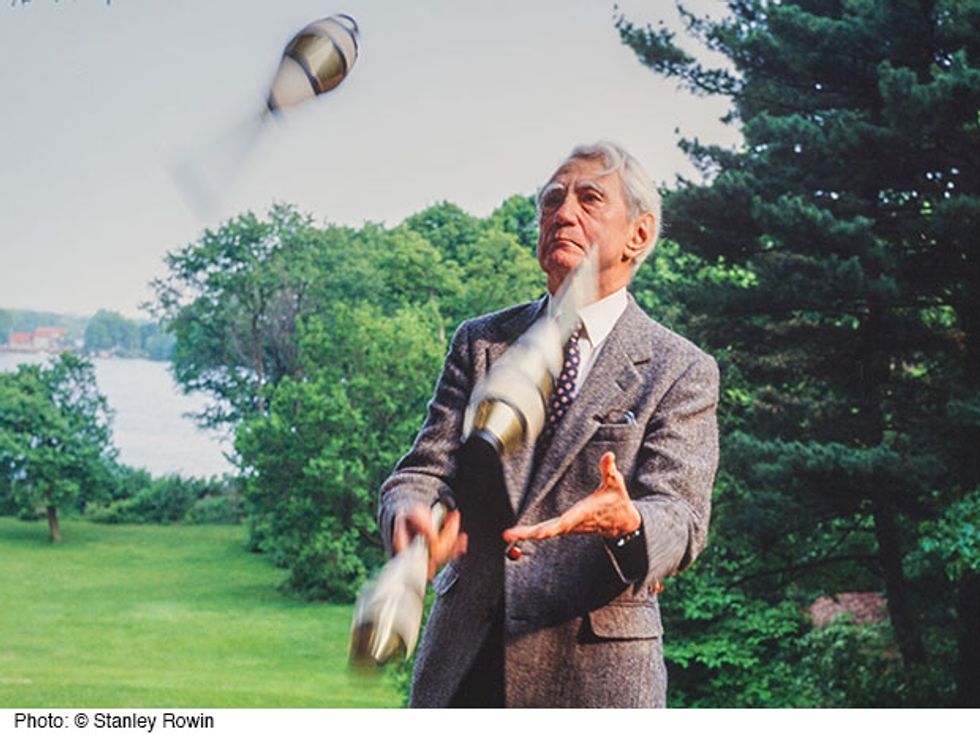

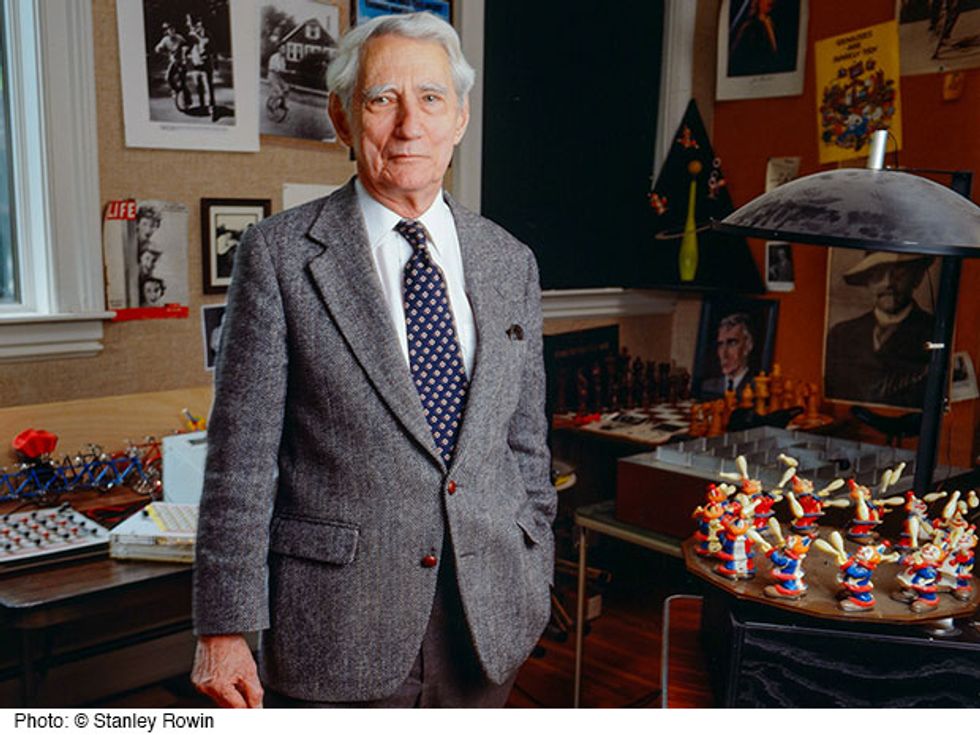

Written by former Spectrum editor John Horgan, who interviewed Shannon at his home in Winchester, Mass., the profile reveals the many facets of Shannon's character: While best known as the father of information theory, Shannon was also an inventor, tinkerer, puzzle solver, and prankster. The 1992 profile included a portrait of Shannon taken by Boston-area photographer Stanley Rowin. On this page we're reproducing that portrait along with other Shannon photos by Rowin that Spectrum has never published.

Shannon died in 2001 at age 84 after a long battle with Alzheimer's disease. He is regarded as one of the greatest electrical engineering heroes of all time.

But showing a recent visitor his awards, Shannon, who at 75 has a shock of snowy hair and an elfish grin, seemed almost embarrassed. After a fidgety minute, he bolted into the room next door. This room has framed certificates, too, including one certifying Shannon as a “doctor of juggling." But it is also lined with tables heaped with all kinds of gadgets.

Shannon has collected some of these treasures through the years, such as the talking chess-playing machine, the hundred-blade jackknife, the motorized pogo stick, and the countless musical instruments. Others he has built himself: a miniature stage with three juggling clowns, a mechanical mouse that finds its way out of a maze, a juggling manikin of the comedian W.C. Fields, and a computer called Throbac (Thrifty Roman Numeral Backward Computer) that calculates in Roman numerals. Shannon tried to get the W.C. Fields manikin to demonstrate its prowess, but in vain. “I love building machines, but it's hard keeping them in repair," he said a bit wistfully.

This roomful of gadgets reveals the other Shannon, the one who rode through the halls of Bell Laboratories on a unicycle while simultaneously juggling four balls, invented a rocket-powered Frisbee, and designed a “mind-reading" machine.

This room typifies the Shannon who—seeking insights that could lead to a chess-playing machine—began playing so much chess at work that “at least one supervisor became somewhat worried," according to a former colleague.

Shannon makes no apologies. “I've always pursued my interests without much regard for final value or value to the world," he said cheerfully. “I've spent lots of time on totally useless things."

The Gold Bug Influence

Shannon's delight in both mathematical abstractions and gadgetry emerged early on. Growing up in Gaylord, Mich., near where he was born in 1916, Shannon played with radio kits and erector sets supplied by his father, a probate judge. He also enjoyed solving mathematical puzzles given to him by his sister, Catherine, who eventually became a professor of mathematics.

“I was always interested, even as a boy, in cryptography and things of that sort," Shannon said. One of his favorite stories was “The Gold Bug," an Edgar Allan Poe mystery with a rare happy ending: By decoding a mysterious map, the hero finds a buried treasure.

As an undergraduate at the University of Michigan, in Ann Arbor, Shannon majored in both mathematics and electrical engineering. His familiarity with the two fields helped him notch his first big success as a graduate student at the Massachusetts Institute of Technology (MIT), in Cambridge. After discussing complex telephone switching circuits with Amos Joel, a famed Bell Laboratories expert on the subject, Shannon showed in his master's thesis how an algebra invented by the British mathematician George Boole in the mid-1800s (which deals with such concepts as “if X or Y happens but not Z, then Q results") could represent the workings of switches and relays in electronic circuits.
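To make Shannon's mapping concrete: a closed switch or relay contact can be written as 1 (true) and an open one as 0 (false). Switches wired in series conduct only if all of them are closed (Boolean AND), and switches wired in parallel conduct if any one is closed (Boolean OR). The minimal Python sketch below models the “if X or Y happens but not Z, then Q results" example as a small relay network. The helper names `series`, `parallel`, and `circuit_q` are hypothetical, and treating “not Z" as a normally closed contact is an illustrative assumption, not a detail taken from the thesis.

```python
from itertools import product

def series(*switches: bool) -> bool:
    """Switches wired in series conduct only if every one is closed (Boolean AND)."""
    return all(switches)

def parallel(*switches: bool) -> bool:
    """Switches wired in parallel conduct if any one is closed (Boolean OR)."""
    return any(switches)

def circuit_q(x: bool, y: bool, z: bool) -> bool:
    """Contacts X and Y in parallel, followed in series by a normally closed contact on Z.

    A normally closed contact conducts when its relay is NOT energized,
    so it plays the role of "not Z" (an illustrative assumption).
    """
    return series(parallel(x, y), not z)

# Print the full truth table: Q conducts exactly when (X or Y) and not Z.
for x, y, z in product([False, True], repeat=3):
    print(int(x), int(y), int(z), "->", int(circuit_q(x, y, z)))
```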

Claude Elwood Shannon

Date of birth: 30 April 1916

Birthplace: Petoskey, Mich.

Height: 178 centimeters

Weight: 68 kilograms

Childhood hero: Thomas Alva Edison

First job: Western Union messenger boy

Family: Married to Mary Elizabeth (Betty) Moore; three children: Robert J., computer engineer; Andrew M., musician; and Margarita C., geologist

Education: B.S., 1936, University of Michigan; M.S., 1940, Ph.D., 1940, Massachusetts Institute of Technology

Hobbies: Building gadgets, juggling, unicycling

Favorite invention: A juggling W.C. Fields robot

Favorite author: T. S. Eliot

Favorite music: Dixieland jazz

Favorite food: Vanilla ice cream with chocolate sauce

Memberships and awards: Fellow, IEEE; member, National Academy of Sciences, American Academy of Arts and Sciences; 1966 IEEE Medal of Honor; 1966 National Medal of Science; 1972 Harvey Prize; 1985 Kyoto Prize

Favorite award: 1940 American Institute of Electrical Engineers award for master's thesis

The implications of the paper by the 22-year-old student were profound: Circuit designs could be tested mathematically, before they were built, rather than through tedious trial and error. Engineers now routinely design computer hardware and software, telephone networks, and other complex systems with the aid of Boolean algebra.
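The payoff can be sketched in a few lines: because each candidate design is a Boolean expression, two designs can be compared on every combination of inputs before any wiring is done. In this hypothetical example, the distributive law XY + XZ = X(Y + Z) shows that a four-contact circuit can be replaced by a three-contact one. The function names and the exhaustive truth-table check are illustrative choices, not a procedure taken from Shannon's thesis.

```python
from itertools import product
from typing import Callable

Circuit = Callable[[bool, bool, bool], bool]

def equivalent(a: Circuit, b: Circuit) -> bool:
    """True if two 3-input switching circuits agree on every input combination."""
    return all(a(*inputs) == b(*inputs) for inputs in product([False, True], repeat=3))

# Design A: series branches X-Y and X-Z wired in parallel (four contacts).
def design_a(x: bool, y: bool, z: bool) -> bool:
    return (x and y) or (x and z)

# Design B: one X contact in series with Y and Z in parallel (three contacts).
def design_b(x: bool, y: bool, z: bool) -> bool:
    return x and (y or z)

# Design C: a wrong "simplification" for contrast (X in parallel with a Y-Z series branch).
def design_c(x: bool, y: bool, z: bool) -> bool:
    return x or (y and z)

print(equivalent(design_a, design_b))  # True: the cheaper circuit does the same job
print(equivalent(design_a, design_c))  # False: differs when X is closed and Y, Z are open
```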

Shannon's paper has been called “possibly the most important master's thesis in the century," but Shannon, typically, downplays it. “It just happened that no one else was familiar with both those fields at the same time," he said. After a moment's reflection, he added, “I've always loved that word, 'Boolean.'"

After receiving his doctorate from MIT in 1940 (his Ph.D. thesis addressed the mathematics of genetic transmission), Shannon spent a year at the Institute for Advanced Study, in Princeton, N.J. Lowering his voice dramatically, Shannon recalled how he was giving a talk at the institute when suddenly the legendary Einstein came in through a door at the rear of the room. Einstein looked at Shannon, whispered something to another scientist, and departed. After his talk, Shannon rushed over to the scientist and asked him what Einstein had said. The scientist gravely told him that the great physicist had “wanted to know where the tea was," Shannon said, and burst into laughter.

How Do You Spell “Eureka"?

Shannon went to Bell Laboratories in 1941 and remained there for 15 years. During World War II, he was part of a group that developed digital encryption systems, including one that Churchill and Roosevelt used for transoceanic conferences.

It was this work, Shannon said, that led him to develop his theory of communication. He realized that, just as digital codes could protect information from prying eyes, so could they shield it from the ravages of static or other forms of interference. The codes could also be used to package information more efficiently, so that more of it could be carried over a given channel.

“My first thinking about [information theory]," Shannon said, “was how you best improve information transmission over a noisy channel. This was a specific problem, where you're thinking about a telegraph system or a telephone system. But when you get to thinking about that, you begin to generalize in your head about all these broader applications." Asked whether at any point he had a “Eureka!"-style flash of insight, Shannon deflected the simplistic question with, “I would have, but I didn't know how to spell the word."

The definition of information set forth in Shannon's 1948 paper is crucial to his theory of communication. Sidestepping questions about meaning (which he has stressed that his theory “can't and wasn't intended to address"), Shannon demonstrated that information is a measurable commodity. The amount of information in a given message, he showed, is determined by the probability that—out of all the messages that could be sent—that particular message would be selected.
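In modern notation, this relationship is usually written I = log2(1/p): a message that had probability p of being selected carries I bits of information, so rarer messages carry more. A minimal Python sketch, with an illustrative function name:

```python
import math

def self_information(p: float) -> float:
    """Information, in bits, conveyed by a message that had probability p of being sent."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return math.log2(1 / p)

print(self_information(1 / 2))  # 1.0: one of two equally likely messages
print(self_information(1 / 8))  # 3.0: one of eight equally likely messages
print(self_information(1.0))    # 0.0: a message that was certain tells you nothing new
```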

He defined the overall potential for information in a system as its “entropy," which in thermodynamics denotes the randomness—or “shuffledness," as one physicist has put it—of a system. (The great mathematician and computer theoretician John von Neumann persuaded Shannon to use the word entropy. The fact that no one knows what entropy really is, von Neumann argued, would give Shannon an edge in debates over information theory.)
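Entropy is the average of that quantity over all the messages a source might send: H = Σ p·log2(1/p), summed over the possible messages. The short sketch below, with an illustrative function name, computes it for a few simple sources; a predictable source has lower entropy than a fair coin.

```python
import math

def entropy(probs: list[float]) -> float:
    """Shannon entropy in bits: the average information per message from a source
    whose possible messages occur with the given probabilities."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Messages with p == 0 contribute nothing (p * log(1/p) tends to 0 as p -> 0).
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

print(entropy([0.5, 0.5]))   # 1.0: a fair coin
print(entropy([0.9, 0.1]))   # about 0.469: a biased coin is more predictable
print(entropy([0.25] * 4))   # 2.0: four equally likely messages
```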

Shannon defined the basic unit of information, which John Tukey of Bell Laboratories dubbed a binary unit and then a bit, as a message representing one of two states. One could encode a great deal of information in relatively few bits, just as in the old game “Twenty Questions" one could quickly zero in on the correct answer through deft questioning.
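The Twenty Questions analogy rests on simple arithmetic: each yes-or-no answer supplies at most one bit, so n questions can distinguish at most 2^n possibilities, and 20 well-chosen questions suffice for 2^20 = 1,048,576. The sketch below, using a hypothetical numeric secret, plays the game by halving the remaining candidates with every question.

```python
import math

def twenty_questions(secret: int, low: int, high: int) -> tuple[int, int]:
    """Find secret in [low, high] using only yes/no questions of the form
    "Is it greater than m?", halving the candidates each time.
    Returns (answer, number_of_questions_asked)."""
    questions = 0
    while low < high:
        mid = (low + high) // 2
        questions += 1
        if secret > mid:   # answer "yes": discard the lower half
            low = mid + 1
        else:              # answer "no": discard the upper half
            high = mid
    return low, questions

n = 2 ** 20  # 1,048,576 possibilities
answer, asked = twenty_questions(secret=777_777, low=1, high=n)
print(answer, asked)             # 777777 20
print(math.ceil(math.log2(n)))   # 20
```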

Building on this mathematical foundation, Shannon then showed that any given communications channel has a maximum capacity for reliably transmitting information. Actually, he showed that although one can approach this maximum through clever coding, one can never quite reach it. The maximum has come to be known as the Shannon limit.
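The article does not specify a channel model, so the sketch below assumes two standard textbook examples. For a binary channel that flips each bit independently with probability p, the capacity is 1 − H(p) bits per transmitted bit, where H is the binary entropy function. For a band-limited channel with Gaussian noise, the Shannon–Hartley formula gives C = B·log2(1 + S/N) bits per second. The 3-kHz, 30-dB telephone-line figures are illustrative assumptions, not numbers from Shannon's paper.

```python
import math

def binary_entropy(p: float) -> float:
    """Entropy, in bits, of a yes/no event with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return p * math.log2(1 / p) + (1 - p) * math.log2(1 / (1 - p))

def bsc_capacity(p: float) -> float:
    """Capacity, in bits per transmitted bit, of a binary symmetric channel
    that flips each bit independently with probability p."""
    return 1.0 - binary_entropy(p)

def shannon_hartley(bandwidth_hz: float, snr: float) -> float:
    """Capacity, in bits per second, of a band-limited channel with additive
    white Gaussian noise and linear signal-to-noise power ratio snr."""
    return bandwidth_hz * math.log2(1 + snr)

print(bsc_capacity(0.0))    # 1.0: a noiseless channel carries one full bit per use
print(bsc_capacity(0.11))   # about 0.5: flipping roughly one bit in nine halves the usable rate
print(bsc_capacity(0.5))    # 0.0: output that is pure coin flips carries no information
# Illustrative voice-grade line: 3 kHz of bandwidth, 30 dB SNR (a power ratio of 1000)
print(round(shannon_hartley(3000, 1000)))  # roughly 29,900 bits per second
```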

Shannon's 1948 paper showed how to calculate the Shannon limit—but not how to approach it. Shannon and others took up that challenge later. The first step was to eliminate redundancy from the message. Just as a laconic Romeo can get his message across with a mere “i lv u," a good code first compresses information to its most efficient form.
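A concrete instance of squeezing out redundancy is Huffman coding, a technique devised by David Huffman in 1952 and used here as an illustration rather than as Shannon's own method. Frequent symbols get short codewords and rare ones get long codewords, so the average length per symbol drops toward the entropy of the symbol frequencies. The sketch below, using a hypothetical sample message, compares plain 8-bit characters with the Huffman encoding and with the entropy lower bound for this symbol-by-symbol model.

```python
import heapq
import math
from collections import Counter

def huffman_code(text: str) -> dict[str, str]:
    """Build a prefix-free binary code in which frequent symbols get shorter codewords."""
    freq = Counter(text)
    if len(freq) == 1:  # only one distinct symbol: give it a one-bit codeword
        return {next(iter(freq)): "0"}
    # Heap entries: (total count, unique tie-breaker, {symbol: partial codeword})
    heap = [(n, i, {sym: ""}) for i, (sym, n) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two least frequent subtrees; one side's codewords gain a leading 0, the other's a 1.
        n1, _, left = heapq.heappop(heap)
        n2, _, right = heapq.heappop(heap)
        merged = {sym: "0" + cw for sym, cw in left.items()}
        merged.update({sym: "1" + cw for sym, cw in right.items()})
        heapq.heappush(heap, (n1 + n2, next_id, merged))
        next_id += 1
    return heap[0][2]

message = "i love you i love you i love you"  # hypothetical sample message
code = huffman_code(message)
encoded = "".join(code[ch] for ch in message)
counts = Counter(message)
# Entropy of the symbol frequencies times message length: no symbol-by-symbol code can beat this.
lower_bound = sum(n * math.log2(len(message) / n) for n in counts.values())

print(8 * len(message), "bits as 8-bit characters")
print(len(encoded), "bits with the Huffman code")
print(round(lower_bound, 1), "bits: entropy bound for symbol-by-symbol coding")
```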

A so-called error-correction code then adds just enough redundancy to ensure that the stripped-down message is not obscured by noise. For example, an error-correction code processing a stream of numbers might add a polynomial equation on whose graph the numbers all fall. The decoder on the receiving end knows that any numbers diverging from the graph have been altered in transmission.
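The polynomial description is loose, so here is one way to read it, offered as a sketch under stated assumptions: treat k data values as points on a polynomial of degree less than k, send the polynomial's values at two extra points as redundancy, and at the receiver look for the single value whose removal puts everything back on one curve. With k + 2 values sent, one altered value can always be located and repaired this way. Practical polynomial codes such as Reed–Solomon codes do this arithmetic in finite fields; this illustration uses exact fractions for readability, and all function names are hypothetical.

```python
from fractions import Fraction

Point = tuple[int, Fraction]

def interpolate_at(points: list[Point], x: int) -> Fraction:
    """Evaluate, at x, the lowest-degree polynomial through the given points (Lagrange form)."""
    total = Fraction(0)
    for i, (xi, yi) in enumerate(points):
        term = Fraction(yi)
        for j, (xj, _) in enumerate(points):
            if j != i:
                term *= Fraction(x - xj, xi - xj)
        total += term
    return total

def encode(data: list[int], extra: int = 2) -> list[Fraction]:
    """Treat data values as points (0, d0) ... (k-1, d[k-1]) on a polynomial of degree < k,
    then append that polynomial's values at x = k ... k + extra - 1 as redundancy."""
    points = [(x, Fraction(d)) for x, d in enumerate(data)]
    k = len(data)
    return [y for _, y in points] + [interpolate_at(points, x) for x in range(k, k + extra)]

def fits(points: list[Point], k: int) -> bool:
    """True if every point lies on the polynomial determined by the first k of them."""
    basis = points[:k]
    return all(interpolate_at(basis, x) == y for x, y in points[k:])

def correct_one_error(received: list[Fraction], k: int) -> list[Fraction]:
    """Repair at most one altered value in a block of at least k + 2 values."""
    if len(received) < k + 2:
        raise ValueError("need at least k + 2 values to correct one error")
    points = list(enumerate(received))
    if fits(points, k):
        return list(received)  # every value is on the graph: nothing to fix
    for bad in range(len(points)):
        others = [p for p in points if p[0] != bad]
        if fits(others, k):  # dropping this one value restores a consistent graph
            repaired = list(received)
            repaired[bad] = interpolate_at(others[:k], bad)
            return repaired
    raise ValueError("more errors than this code can correct")

sent = encode([3, 1, 4, 1])
garbled = list(sent)
garbled[2] += 5  # noise alters one value in transit
print(correct_one_error(garbled, k=4) == sent)  # True
```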

Aaron D. Wyner, the head of the Communications Analysis Research Department at the AT&T Bell Laboratories, in Murray Hill, N.J., noted that some scientific discoveries seem in retrospect to be inevitably products of their times—but not Shannon's.

In fact Shannon's ideas were almost too prescient to have an immediate impact. “A lot of practical people around the labs thought it was an interesting theory but not very useful," said Edgar Gilbert, who went to Bell Labs in 1948, in part to work alongside Shannon. Vacuum-tube circuits simply could not handle the complex codes needed to approach the Shannon limit, Gilbert explained. Shannon's paper even received a negative review from J. L. Doob, a prominent mathematician at the University of Illinois, in Urbana-Champaign. Historian William Aspray also noted that, in any event, the conceptual framework needed to apply information theory was not yet in place at the time.

Not until the early 1970s—with the advent of high-speed integrated circuits—did engineers begin fully to exploit information theory. Today Shannon's insights help shape virtually all systems that store, process, or transmit information in digital form—from compact discs to supercomputers, from facsimile machines to deep-space probes such as Voyager.

Solomon W. Golomb, an electrical engineer at the University of Southern California, Los Angeles, and a former president of the IEEE Information Theory Society, thinks Shannon's influence cannot be overstated. “It's like saying how much influence the inventor of the alphabet has had on literature," Golomb remarked.

Information Theory and Religion

Especially early on, however, information theory captivated an audience much larger than the one for which it was intended. People in linguistics, psychology, economics, biology, even music and the arts sought to fuse information theory with their disciplines.

John R. Pierce, a former coworker of Shannon who is now a professor emeritus at California's Stanford University, has compared the “widespread abuse" of information theory to that inflicted on two other profound and much misunderstood scientific ideas: Heisenberg's uncertainty principle and Einstein's theory of relativity.

Some physicists went to extraordinary lengths to prove that the entropy of information theory was mathematically equivalent to the entropy in thermodynamics. That turned out to be true but of little consequence, according to David Slepian, a Bell Labs veteran and former colleague of Shannon who was also a seminal figure in information coding. Many engineers, too, “jumped on the bandwagon without really understanding" the theory, Slepian said. Shannon's work inspired the formation of the IEEE Information Theory Society in 1956, and subgroups dedicated to economics, biological systems, and other applications soon formed. In the early 1970s, the IEEE Transactions on Information Theory published an editorial, titled “Information Theory, Photosynthesis, and Religion," deploring the overextension of Shannon's theory. [Editor's note: In fact, the editorial, by Peter Elias, was titled “Two Famous Papers" and was published in September 1958.]

Shannon, while also skeptical of some of the uses of his theory, was rather free-ranging in his own investigations. In the 1950s, he did living-room experiments on the redundancy of language with his wife, Betty, who was a Bell computer scientist; Bernard Oliver, another Bell scientist (and a former IEEE president); and Oliver's wife. One person would offer the first few letters of a word, or words in a sentence, and the others would try to guess what came next. Shannon also directed an experiment at Bell Labs in which workers counted the number of times various letters appeared in a written text, and their order of appearance.
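The letter-counting experiment can be imitated with a frequency table. The sketch below (the function name is illustrative, and the sample is simply a sentence from this article) estimates bits per character from single-letter frequencies and compares the result with log2(27) ≈ 4.75 bits, the figure if the 26 letters and the space were equally likely. Taking the preceding letters into account, as the living-room guessing game did, can in principle only lower the estimate further.

```python
import math
from collections import Counter

def letter_entropy(text: str) -> float:
    """Bits per character estimated from single-character frequencies,
    keeping only alphabetic characters and spaces."""
    chars = [c for c in text.lower() if c.isalpha() or c == " "]
    counts = Counter(chars)
    total = len(chars)
    return sum(n / total * math.log2(total / n) for n in counts.values())

# Sample text: a sentence from this article
sample = ("Shannon also directed an experiment at Bell Labs in which workers counted "
          "the number of times various letters appeared in a written text")

print(round(letter_entropy(sample), 2), "bits per character from letter frequencies")
print(round(math.log2(27), 2), "bits per character if 27 symbols were equally likely")  # 4.75
```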

Moreover, Shannon has suggested that applying information theory to biological systems, at least, may not be so far-fetched. “The nervous system is a complex communication system, and it processes information in complicated ways," he said. When asked whether he thought machines could “think," he replied: “You bet. I'm a machine and you're a machine, and we both think, don't we?"

Indeed, Shannon's work on information theory and his love of gadgets led to a precocious fascination with intelligent machines. Shannon was one of the first scientists to propose that a computer could compete with humans in chess; in 1950 he wrote an article for Scientific American explaining how that task might be accomplished.

Shannon did not restrict himself to chess. He built a “mind-reading" machine that played the game of penny-matching, in which one person tries to guess whether the other has chosen heads or tails. A colleague at Bell Laboratories, David W. Hagelbarger, built the prototype; the machine recorded and analyzed its opponents' past choices, looking for patterns that would foretell the next choice. Because humans almost invariably fall into such patterns, the machine won more than 50 percent of the time. Shannon then built his own version of the machine and challenged Hagelbarger to a now legendary duel.
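The pattern-hunting idea can be sketched in a few lines. The predictor below is a simplified, hypothetical scheme, not the actual design of Hagelbarger's or Shannon's machine: it remembers what the opponent played after each pair of previous choices and guesses the most frequent follow-up, falling back to a random guess when it has no evidence. Against a player who repeats a pattern it wins almost every round; against genuinely random coin flips it cannot beat 50 percent on average.

```python
import random
from collections import defaultdict

class PatternPredictor:
    """Guess an opponent's next 'H' or 'T' from what they played after the same
    two-move history in the past (a simplified, hypothetical scheme)."""

    def __init__(self, seed: int = 0) -> None:
        self.history: list[str] = []
        # counts[context]["H" or "T"]: how often the opponent followed that context with that choice
        self.counts: defaultdict[tuple[str, ...], dict[str, int]] = defaultdict(lambda: {"H": 0, "T": 0})
        self.rng = random.Random(seed)

    def predict(self) -> str:
        seen = self.counts[tuple(self.history[-2:])]
        if seen["H"] == seen["T"]:
            return self.rng.choice("HT")  # no evidence either way: guess at random
        return "H" if seen["H"] > seen["T"] else "T"

    def observe(self, choice: str) -> None:
        self.counts[tuple(self.history[-2:])][choice] += 1
        self.history.append(choice)

def play(opponent_moves: str, seed: int = 0) -> float:
    """Fraction of rounds in which the machine correctly predicts (matches) the opponent."""
    machine = PatternPredictor(seed)
    wins = 0
    for move in opponent_moves:
        if machine.predict() == move:
            wins += 1
        machine.observe(move)
    return wins / len(opponent_moves)

coin = random.Random(42)
coin_flips = "".join(coin.choice("HT") for _ in range(3000))
print(play("HHT" * 100))  # close to 1.0: a rigid pattern is learned within a few rounds
print(play(coin_flips))   # close to 0.5: independent fair flips cannot be predicted
```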

He also constructed a machine that could beat any human player at a board game called Hex, which was popular among the mathematically inclined a few decades back. Actually, Shannon had customized the board so that the human player's side had more hexes than the other; all the machine had to do to win was take the center hex and then match its opponent's moves thereafter.

The machine could have moved instantly, but to convey the impression that it was pondering its next move, Shannon added a delay switch to its circuit. Andrew Gleason, a brilliant mathematician from Harvard, challenged the machine to a game, vowing that no machine could beat him. Only when Gleason, after being soundly thrashed, demanded a rematch did Shannon finally reveal the machine's secret.

In 1950 Shannon created a mechanical mouse that could learn how to find its way through a maze to a chunk of brass cheese, seemingly unassisted. Shannon named the mouse Theseus, after the mythical Greek hero who slew the Minotaur and found his way out of the dreaded labyrinth. Actually, the “brains" of the mouse were contained in a bulky set of vacuum-tube circuitry under the floor of the maze; the circuits controlled the movement of a magnet which in turn controlled the mouse.

When in 1977 the editor of IEEE Spectrum challenged readers to create a “micromouse" that had self-contained “brains," could solve a maze through trial and error, and could then learn from its mistakes and get through the maze without error on a repeat attempt, a former colleague of Shannon called Spectrum and insisted that Shannon had built such a micromouse two decades earlier.

Although he knew the technology of the '50s would not have permitted it, the editor called Shannon anyway. Shannon laughed, saying he had fooled a lot of people as he took his “smart" mouse around the country. The drapes around the table that hid the vacuum tubes and lead-screw machinery were vital, he chuckled. When Spectrum ceremoniously presented its Amazing Micromouse Maze Contest awards in 1979, Shannon got Theseus down from his attic, loaded him into his station wagon, and put him on display alongside the lineup of contending micromice.
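Theseus's trick of solving a maze once and then running it without error can be modeled with a depth-first search that records, for each square, the exit that eventually led to the cheese. The Python sketch below is a hypothetical software model on a made-up maze, not a description of the vacuum-tube circuitry that actually guided Theseus; moves spent backing out of dead ends are counted so the two runs can be compared.

```python
# Hypothetical maze: '#' wall, '.' open square, 'S' start, 'G' goal (the cheese).
MAZE = [
    "#########",
    "#S..#...#",
    "#.#.#.#.#",
    "#.#...#.#",
    "#.#####.#",
    "#...#..G#",
    "#########",
]

Square = tuple[int, int]
# Order in which the mouse tries exits at each square (an arbitrary choice).
MOVES = {"south": (1, 0), "east": (0, 1), "north": (-1, 0), "west": (0, -1)}

def find(symbol: str) -> Square:
    for r, row in enumerate(MAZE):
        c = row.find(symbol)
        if c != -1:
            return (r, c)
    raise ValueError(f"{symbol!r} not in maze")

def explore(start: Square, goal: Square) -> tuple[dict[Square, str], int]:
    """First run: trial-and-error depth-first search. Returns the memory
    (square -> exit that led toward the goal) and the number of moves made,
    including moves spent backing out of dead ends."""
    memory: dict[Square, str] = {}
    visited = {start}
    moves = 0

    def walk(square: Square) -> bool:
        nonlocal moves
        if square == goal:
            return True
        for direction, (dr, dc) in MOVES.items():
            nxt = (square[0] + dr, square[1] + dc)
            if MAZE[nxt[0]][nxt[1]] != "#" and nxt not in visited:
                visited.add(nxt)
                moves += 1                      # step forward
                if walk(nxt):
                    memory[square] = direction  # remember the exit that worked
                    return True
                moves += 1                      # dead end: step back
        return False

    if not walk(start):
        raise ValueError("the goal is unreachable")
    return memory, moves

def replay(start: Square, goal: Square, memory: dict[Square, str]) -> int:
    """Second run: follow the remembered exits with no wrong turns."""
    square, moves = start, 0
    while square != goal:
        dr, dc = MOVES[memory[square]]
        square = (square[0] + dr, square[1] + dc)
        moves += 1
    return moves

start, goal = find("S"), find("G")
memory, first_run = explore(start, goal)
second_run = replay(start, goal, memory)
print("first run:", first_run, "moves; second run:", second_run, "moves")
```

With this maze and move order, the exploratory run wanders into a dead end before finding the cheese, while the second run follows memory along the 14-move direct route.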

Asked about prospects for artificial intelligence, Shannon noted that current computers, in spite of their extraordinary power, are still “not up to the human level yet" in terms of raw information processing. Simply replicating human vision in a machine, he pointed out, remains a formidable task. But he added that “it is certainly plausible to me that in a few decades machines will be beyond humans."

Unified Field Theory of Juggling

In 1956 Shannon left his permanent position at Bell Labs (he remained affiliated for more than a decade) to become a professor of communications science at MIT. In recent years, his great obsession has been juggling. He has built several juggling machines and devised what may be the unified field theory of juggling: If B equals the number of balls, H the number of hands, D the time each ball spends in a hand, F the time of flight of each ball, and E the time each hand is empty, then B/H = (D + F)/(D + E).
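Shannon's relation follows from counting throws: each hand completes one catch-hold-throw cycle every D + E seconds, while each ball completes its own cycle every D + F seconds, so the throw rate can be written both as H/(D + E) and as B/(D + F). Equating the two gives B/H = (D + F)/(D + E). A small sketch that solves the relation both ways, using made-up timings in seconds and hypothetical function names:

```python
from fractions import Fraction

def ball_count(hands: int, dwell: Fraction, flight: Fraction, empty: Fraction) -> Fraction:
    """Shannon's juggling relation B/H = (D + F)/(D + E), solved for B."""
    return hands * (dwell + flight) / (dwell + empty)

def required_flight_time(num_balls: int, hands: int, dwell: Fraction, empty: Fraction) -> Fraction:
    """The same relation solved for F: how long each ball must stay aloft."""
    return num_balls * (dwell + empty) / hands - dwell

D = Fraction("0.3")  # hypothetical time each ball spends in a hand
E = Fraction("0.2")  # hypothetical time each hand spends empty
print(ball_count(2, D, Fraction("0.45"), E))        # 3
print(float(required_flight_time(4, 2, D, E)))      # 0.7
```

With a 0.3-second dwell and 0.2 seconds of empty time per hand, a three-ball cascade needs 0.45 seconds of flight, and adding a fourth ball stretches the required flight time to 0.7 seconds.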

(Unfortunately, the theory could not help Shannon juggle more than four balls at once. He has claimed his hands are too small.)

Shannon has also developed various mathematical models to predict stock performance and has tested them—successfully, he said—on his own portfolio.

He has even dabbled in poetry. Among his works is a homage to the Rubik's Cube, a popular puzzle during the late 1970s. The poem, “A Rubric on Rubik's Cubics," is set to the tune “Ta-Ra-Ra-Boom-De-Aye." One verse goes: “Respect your cube and keep it clean./Lube your cube with Vaseline./Beware the dreaded cubist's thumb,/The callused hand and fingers numb./No borrower nor lender be./Rude folk might switch two tabs on thee,/The most unkindest switch of all,/Into insolubility. [Chorus] In-sol-u-bility./The strangest place to be./However you persist/Solutions don't exist."

Shannon himself had a genius for avoiding that “strangest place," according to Elwyn Berlekamp, who studied under him at MIT and cowrote several papers with him. “There are doable problems that are trivial, and profound problems that are not doable," Berlekamp explained. Shannon had a “fantastic intuition and ability to formulate profound problems that were doable."

However, after the late 1950s, Shannon published little on information theory. Some former Bell colleagues suggested that by the time he went to MIT Shannon had “burned out" and tired of the field he had created.

But Shannon denied that claim. He said he continued to work on various problems in information theory through the 1960s, and even published a few papers, though he did not consider most of his investigations then important enough to publish. “Most great mathematicians have done their finest work when they were young," he observed.

In the 1960s Shannon also stopped attending meetings dedicated to the field he had created. Berlekamp offered one possible explanation. In 1973, he recalled, he persuaded Shannon to give the first annual Shannon lecture at the International Information Theory Symposium, but Shannon almost backed out at the last minute. “I never saw a guy with so much stage fright," Berlekamp said. “In this crowd, he was viewed as a godlike figure, and I guess he was worried he wouldn't live up to his reputation."

Berlekamp said Shannon eventually gave an inspiring speech, which anticipated ideas on the universality of feedback and self-referentiality in nature.

Shannon nevertheless fell out of sight once again. But in recent years, encouraged by his wife, he has begun to drop in on small meetings and to visit various laboratories where his work is carried on.

In 1985 he made an unexpected appearance at the International Information Theory Symposium in Brighton, England. The meeting was proceeding smoothly, if uneventfully, when news raced through the halls and lecture rooms that the snowy-haired man with the shy grin who was wandering in and out of the sessions was none other than Claude Shannon. Some of those at the conference had not even known he was still alive.

At the banquet, the meeting's organizers somehow persuaded Shannon to address the audience. He spoke for a few minutes and then—fearing that he was boring his audience, he recalled later—pulled three balls out of his pockets and began juggling. The audience cheered and lined up for autographs. Said Robert J. McEliece, a professor of electrical engineering at the California Institute of Technology and chairman of the symposium: “It was as if Newton had showed up at a physics conference."

A correction to this article was made on 29 April 2016.