Scientists at the U.S. National Institute of Standards and Technology, in Boulder, Colo., have developed a superconducting device that acts like a hyperefficient version of a human synapse.

Neural synapses are the connections between neurons, and changes in the strength of those connections are how neural networks learn. The NIST team has come up with a superconducting synapse made with nanometer-scale magnetic components that is so energy efficient, it appears to beat human synapses by a factor of 100 or more.

“The NIST synapse has lower energy needs than the human synapse, and we don’t know of any other artificial synapse that uses less energy,” NIST physicist Mike Schneider said in a press release.

The heart of this new synapse is a device called a magnetic Josephson junction. An ordinary Josephson junction is basically a “weak link between superconductors,” explains Schneider. Up to a certain amperage, current will flow with no voltage needed through such a junction by tunneling across the weak spot, say a thin sliver of nonsuperconducting material. However, if you push more electrons through until you pass a “critical current,” the voltage will spike at an extremely high rate—100 gigahertz or more.

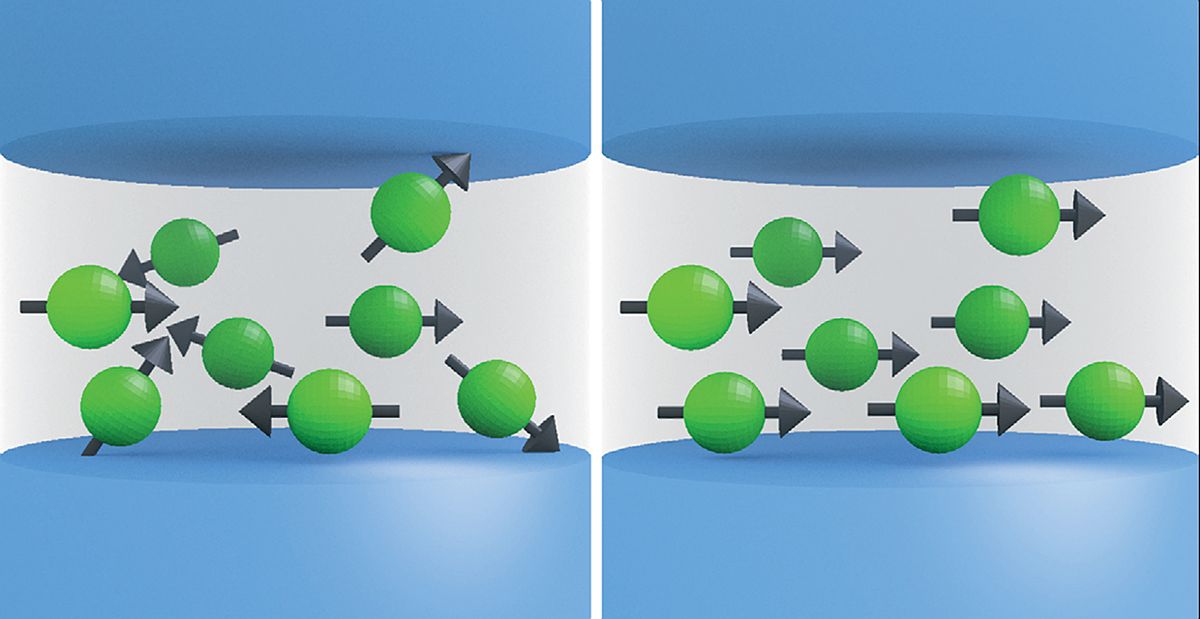

In a magnetic Josephson junction, that “weak link” is magnetic. The higher the magnetic field, the lower the critical current needed to produce voltage spikes. In the device Schneider and his colleagues designed, the magnetic field is caused by 20,000 or so nanometer-scale clusters of manganese embedded in silicon. Each nanocluster has its own field, but those fields start out all pointing in random directions, summing to zero. The NIST team found that they could use a small external magnetic field combined with tiny picosecond pulses of current to cause more and more manganese clusters to line up their magnetic fields. The result is a gradually increasing magnetic field in the junction, lowering the device’s critical current, and making it easier to induce voltage spikes.

The process is analogous to learning in a brain, where neurons send spikes of voltage to synapses. Whether that spike is enough to cause the next neuron to fire a spike of voltage itself depends on how strong the connection at the synapse is. Learning happens when more voltage spikes strengthen the synaptic connection. In the NIST device, the critical current is like the synapse strength. Whether or not a magnetic Josephson junction reaches the critical current depends on how aligned the nanoclusters have become, which is controlled by the spikes they receive.
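To make the analogy concrete, here is a toy sketch in Python of the mechanism described above: training pulses align more nanoclusters, alignment lowers the critical current, and a lower critical current makes the junction easier to fire. Only the cluster count (about 20,000) comes from the NIST work as reported here. The linear relation between alignment and critical current, the arbitrary current units, and the fraction of clusters aligned per pulse are all illustrative assumptions, not the team's model.

```python
from dataclasses import dataclass

N_CLUSTERS = 20_000  # approximate cluster count reported for the NIST device


@dataclass
class ToySynapse:
    """Toy magnetic Josephson junction; all parameters below are hypothetical."""
    aligned: int = 0              # clusters whose fields currently line up
    ic_max: float = 1.0           # assumed critical current with no net field (arbitrary units)
    ic_min: float = 0.2           # assumed critical current with every cluster aligned
    align_fraction: float = 0.05  # assumed share of unaligned clusters aligned per pulse

    def critical_current(self) -> float:
        # Assumption: critical current falls linearly as alignment grows.
        order = self.aligned / N_CLUSTERS
        return self.ic_max - (self.ic_max - self.ic_min) * order

    def train(self, pulses: int = 1) -> None:
        # Each training pulse aligns a fixed fraction of the remaining clusters.
        for _ in range(pulses):
            remaining = N_CLUSTERS - self.aligned
            self.aligned += int(remaining * self.align_fraction)

    def fires(self, drive_current: float) -> bool:
        # The junction produces voltage spikes once the drive exceeds the critical current.
        return drive_current > self.critical_current()


synapse = ToySynapse()
print(synapse.fires(0.8))   # untrained: the drive is below the critical current
synapse.train(pulses=10)
print(synapse.fires(0.8))   # trained: the critical current has dropped below the drive
```

In this sketch the "synaptic weight" is stored entirely in the `aligned` count, which mirrors the article's point that the critical current plays the role of synapse strength.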

The analogy alone isn’t enough to make such a device so interesting: It’s the fact that it can operate at 100 GHz and expend less than one attojoule (10⁻¹⁸ joules) per spike. Human neurons have less than one-millionth the speed and expend more than 1,000 times as much energy. Even better, the magnetic Josephson junction–based synapses can be easily stacked into dense, 3D circuits.

The NIST scientists have already simulated a simple neural network using their superconducting synapses. The next step is to build that circuit and more complex ones. But that’s not all they’ve got in store, according to Schneider.

They also want to eliminate the small external magnetic field needed for learning, because that could make circuits more complicated. Schneider thinks they can get the same effect by using technology borrowed from magnetic RAM. There, a particular kind of current torques the spin state of a magnetic layer. Schneider expects something similar might work on the manganese nanoclusters.

A more ambitious goal is to get the energy of the learning pulses to match that of the artificial neuron’s output pulses. Right now, each output voltage spike carries less than one attojoule of energy. But it takes at least 3 attojoules to budge the nanoclusters and cause learning. So, for now, learning has to happen “off-line” because one neuron can’t teach another without assistance. (Even so, once trained, the system would operate faster and with lower energy than any other.)
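A quick back-of-the-envelope calculation shows the size of that mismatch. The figures are the bounds quoted in this article (under 1 attojoule per output spike, at least 3 attojoules per learning pulse, and a 100-GHz spike rate); the variable names are our own, and treating the bounds as exact values is a simplifying assumption.

```python
ATTOJOULE = 1e-18  # joules

spike_energy_j = 1 * ATTOJOULE   # upper bound on energy per output spike
learn_energy_j = 3 * ATTOJOULE   # lower bound on energy needed to shift the nanoclusters
max_spike_rate_hz = 100e9        # 100 GHz

# A learning pulse needs at least this many times the energy of one output spike.
energy_gap = learn_energy_j / spike_energy_j

# Upper bound on power for one synapse spiking continuously at the full rate.
power_ceiling_w = spike_energy_j * max_spike_rate_hz

print(f"Learning needs at least {energy_gap:.0f}x the energy of an output spike")
print(f"Power ceiling per synapse: {power_ceiling_w * 1e9:.0f} nW")
```

Because the output spike figure is an upper bound and the learning figure is a lower bound, the real gap is at least a factor of three, which is why one artificial neuron cannot yet train another directly.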

According to NIST simulations and experiments, they might get the input and output energies to more closely match by shrinking the size of the junctions and slightly raising the operating temperature from its frigid 5 kelvins. With matched inputs and outputs, they could create a low-power system that learns on its own. “That’s exciting because now we can get to something that’s really like the brain,” says Schneider.

The NIST scientists described the device in this week’s Science Advances, and detailed other aspects of their system at the IEEE International Conference on Rebooting Computing in November. (See “Four Strange New Ways to Compute,” IEEE Spectrum, December 2017.)

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.