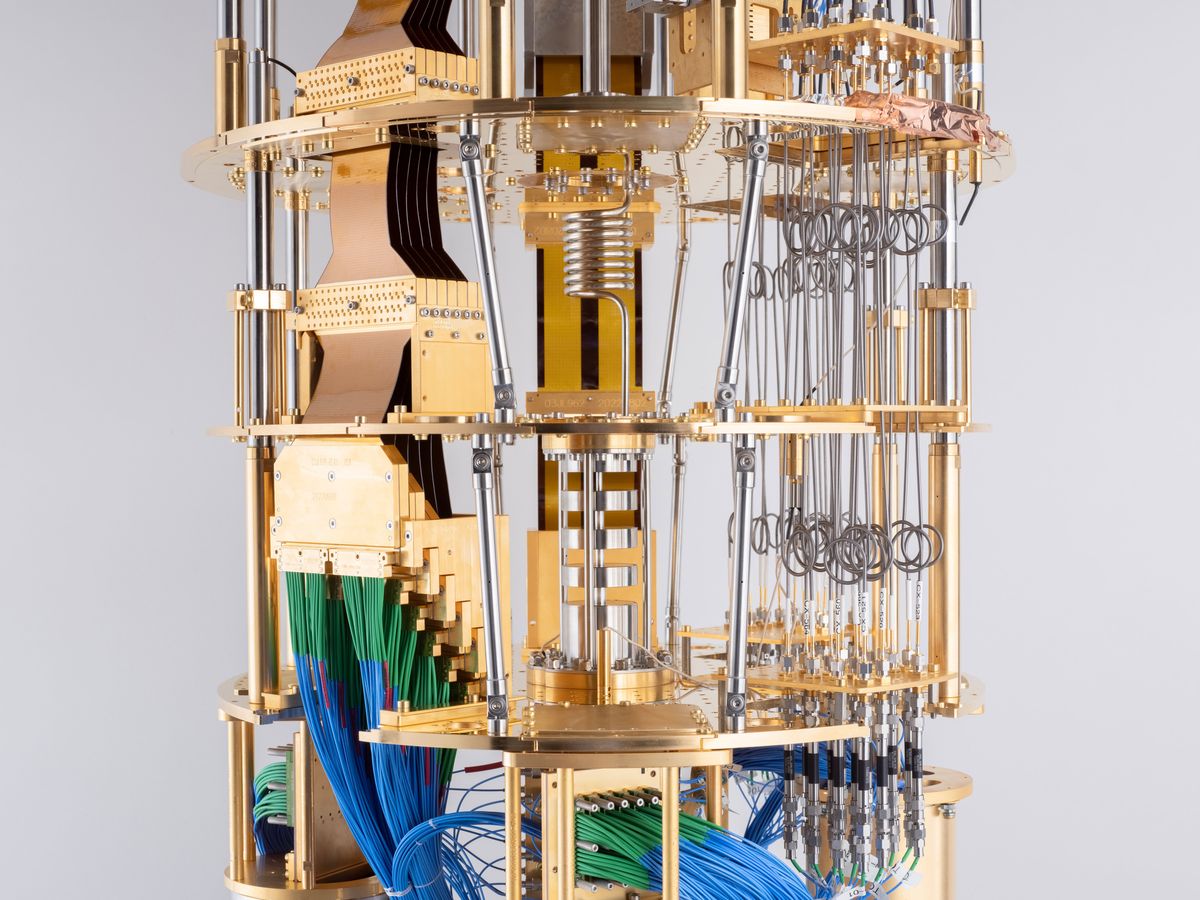

As powerful as quantum computers may theoretically one day be, they are currently so prone to error that their ultimate utility is often questioned. Now, however, IBM argues that quantum computing may be entering a new era of utility sooner than expected, with its 127-qubit Eagle quantum computer potentially delivering accurate results on useful problems beyond what even today’s supercomputers can tackle.

Quantum computers can in theory find answers to problems that classical computers would take eons to solve. Their computing power can in principle grow exponentially with the number of components, known as quantum bits or qubits, that they link together. Qubits are manipulated with basic operations known as quantum gates.
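To give a rough sense of that exponential growth, the sketch below counts the memory a naive classical simulation would need to store every amplitude of an n-qubit state, assuming 16 bytes per complex amplitude. This is an illustrative assumption, not the classical method used in IBM's study; practical simulators exploit circuit structure to do far better on many problems.

```python
# Illustrative assumption: a naive state-vector simulation stores one complex128
# amplitude (16 bytes) for each of the 2**n basis states of n qubits.
BYTES_PER_AMPLITUDE = 16  # complex128 = two 64-bit floats


def state_vector_bytes(num_qubits: int) -> int:
    """Bytes needed to hold all 2**num_qubits amplitudes of an n-qubit state."""
    return BYTES_PER_AMPLITUDE * 2 ** num_qubits


for n in (30, 50, 68, 127):
    # Each extra qubit doubles the memory requirement.
    print(f"{n:>3} qubits: {state_vector_bytes(n):.3e} bytes")
```

Under these assumptions, 30 qubits already need about 17 GB, while 68 qubits would need roughly 4.7e21 bytes.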

The key problem that quantum computers face is how notoriously vulnerable they are to disruption from the slightest disturbance. Present-day state-of-the-art quantum computers typically suffer roughly one error every 1,000 operations, and many practical applications demand error rates lower by a billionfold or more.
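A quick back-of-the-envelope calculation shows why a one-in-1,000 error rate is so limiting. Assuming, for simplicity, that every gate fails independently with the same probability (real hardware noise is more complicated), the chance that a whole circuit runs without a single error shrinks exponentially with its length. The gate counts below are illustrative.

```python
# Simplifying assumption: every gate fails independently with probability p_error.
def error_free_probability(p_error: float, num_gates: int) -> float:
    """Chance that a circuit of num_gates gates runs with no error at all."""
    return (1.0 - p_error) ** num_gates


p = 1e-3  # roughly one error per 1,000 operations, as cited in the text
for gates in (100, 1_000, 2_880):
    print(gates, round(error_free_probability(p, gates), 3))
```

At that rate, a 100-gate circuit runs cleanly about 90 percent of the time, a 1,000-gate circuit about 37 percent, and a 2,880-gate circuit only about 6 percent.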

Scientists hope to one day build so-called fault-tolerant quantum computers, which would spread information across many redundant qubits. That way, even if a few qubits fail, quantum error-correction techniques can detect and correct these mistakes.
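The payoff from redundancy can be illustrated with a classical analogy: store one bit as several copies and decode it by majority vote. This is only an analogy; quantum states cannot simply be copied, so real quantum codes such as the surface code detect errors through parity measurements instead. Assuming each copy flips independently with probability p, the decoded bit is wrong only when most of the copies flip.

```python
from math import comb


def majority_vote_error_rate(p: float, copies: int = 3) -> float:
    """Probability that majority-vote decoding of `copies` noisy copies is wrong,
    assuming each copy flips independently with probability p."""
    if copies % 2 == 0:
        raise ValueError("use an odd number of copies so the vote cannot tie")
    # Decoding fails when more than half of the copies are flipped.
    return sum(
        comb(copies, k) * p**k * (1 - p) ** (copies - k)
        for k in range(copies // 2 + 1, copies + 1)
    )


for n in (1, 3, 5):
    print(n, majority_vote_error_rate(1e-3, n))
```

With p = 0.001, three copies cut the error rate to about 3 in a million and five copies to about 1 in 100 million, at the cost of more hardware per protected bit.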

However, existing quantum computers are so-called noisy intermediate-scale quantum (NISQ) platforms. This means they are too error-ridden and have too few qubits to run quantum error-correction techniques successfully.

Despite the early state of quantum computing, previous experiments by Google and others claimed that quantum computers may have entered the era of “quantum advantage,” “quantum primacy,” or “quantum supremacy” over classical computers. Critics in turn have argued that such tests showed only that quantum computers could outperform classical machines on contrived problems. As such, it remains hotly debated whether quantum computers are good enough to be useful right now.

Now IBM reports that its Eagle quantum processor can accurately simulate physics that classical computers find difficult to model beyond a certain level of complexity. Not only are these simulations of actual use to researchers, the company says, but the methods its researchers developed could also be applied to other kinds of algorithms running on today’s quantum machines.

In experiments, the researchers had IBM’s quantum computer model the dynamics of electron spins in a material to predict its properties, such as magnetization. Scientists understand this model well, which made it easier for the researchers to validate the quantum computer’s results.

“Importantly, our methods are not limited to this particular model,” says study coauthor Kristan Temme, a quantum physicist at IBM’s Thomas J. Watson Research Center, in Yorktown Heights, New York. “These methods can be applied to other, more general circuits.”

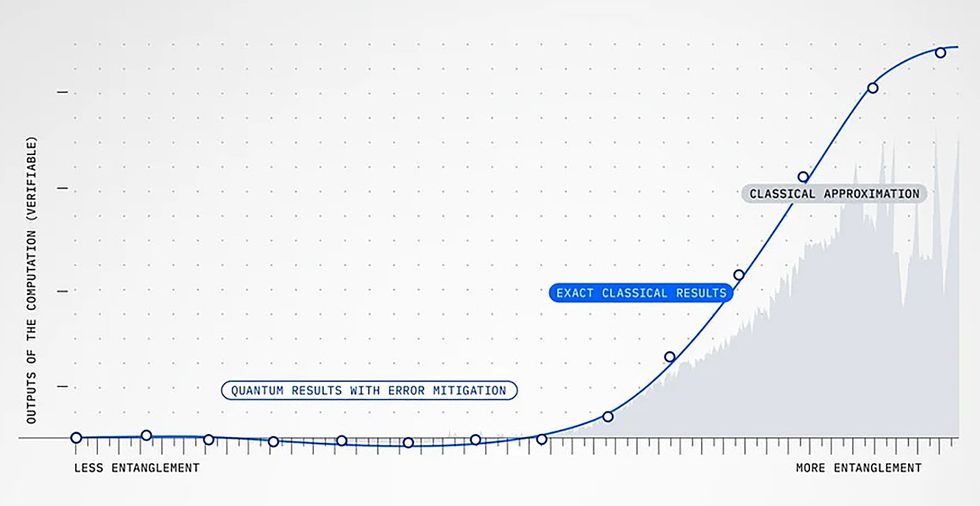

At the same time, scientists at the University of California, Berkeley, performed versions of these simulations on classical supercomputers to compare how well the quantum computer performed. They used two sets of techniques. Brute-force simulations provided the most accurate results, but also demanded too much processing power to simulate large, complex systems. On the other hand, approximation methods could estimate answers for big systems, but they generally prove less and less accurate the larger a system gets.

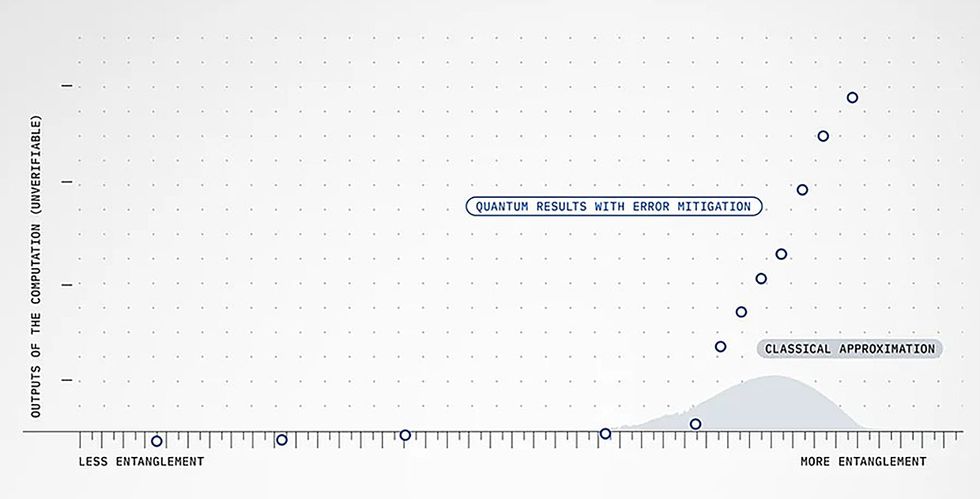

At the largest scale examined, the quantum computer was roughly three times as fast as the classical approximation methods, finding answers in nine hours compared to 30. More importantly, the researchers found that as the scale of the models increased, the quantum computer matched the classical brute-force simulations, while the classical approximation methods became less accurate.

“What we are doing in this work is to demonstrate that we can run quantum circuits at a very large scale and get correct results, something that has always been called into question and for which many people argued would be not feasible on the current devices,” Temme says.

When comparisons showed the quantum results did not agree with the classical approximation methods, “we initially assumed that the experiment had made a mistake,” Temme says. It was “quite the surprise to then learn” that the quantum computer matched the classical brute-force simulations rather than the classical approximation methods, he adds.

The scientists ran tests on 127 qubits, executing circuits 60 steps deep that contained a total of 2,880 quantum gates. They note that at 68 qubits, the quantum computer’s calculations already lie beyond what classical brute-force simulations can compute. Although the researchers cannot prove that the answers the quantum computer gave with more than 68 qubits are correct, they argue that its success on earlier runs makes them confident those answers were.

The IBM scientists caution that they are not claiming their quantum computer is better than classical computers. Future research may soon show that classical computers can find correct answers to the calculations used in these experiments, they say.

“We hope that this will lead to a back-and-forth between methods, which the quantum computer will ultimately win,” Temme says.

In any case, even if quantum computers do not completely outcompete classical computers—yet—these new findings suggest they may still prove useful for problems that regular computers find extraordinarily difficult. This suggests we may now be entering a new era of utility for quantum computing, Darío Gil, senior vice president and director of IBM Research, said in a statement.

IBM notes that its quantum hardware showed more stable qubits and lower error rates than before. However, the new findings depended on what IBM calls “quantum error mitigation” techniques, which analyze a quantum computer’s output to account for, and reduce the effects of, the noise its circuits experienced.

The quantum error mitigation strategy IBM used in the new study, zero-noise extrapolation, repeats the same quantum computation at several deliberately amplified levels of noise. This helped the researchers extrapolate what the quantum computer would have calculated in the absence of noise.
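Zero-noise extrapolation can be sketched in a few lines. The version below uses Richardson extrapolation, one common variant: fit the unique polynomial through results measured at several noise scale factors and evaluate it at zero noise. The exponential-decay noise model, the scale factors, and the decay rate are hypothetical stand-ins, and IBM's study may use a different fit.

```python
import math


def richardson_zero_noise(scales, values):
    """Estimate the zero-noise value by evaluating, at scale 0, the unique polynomial
    passing through the (noise scale, measured value) points (Lagrange form)."""
    if len(scales) != len(values) or len(set(scales)) != len(scales):
        raise ValueError("need exactly one value per distinct noise scale")
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        # Lagrange basis polynomial for point i, evaluated at zero noise.
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= (0.0 - s_j) / (s_i - s_j)
        estimate += weight * v_i
    return estimate


# Hypothetical toy model: the ideal value 1.0 decays as exp(-0.2 * scale).
scales = [1.0, 2.0, 3.0]  # 1.0 = the processor's native noise level
measured = [math.exp(-0.2 * s) for s in scales]
print(measured[0], richardson_zero_noise(scales, measured))
```

In this toy model, the raw result at the native noise level is about 0.82, while the extrapolated estimate is about 0.994, close to the ideal value of 1.0. Polynomial extrapolation can also amplify statistical scatter in the measured values, so the number and spacing of noise levels is a trade-off.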

“Both our hardware and our error-mitigation methods are now at the level they can be used to start implementing the overwhelming majority of all the near-term algorithms that have been proposed in the last five to 10 years, to see which algorithm actually provides a quantum advantage in practice,” Temme says.

One drawback of zero-noise extrapolation is that it does require a quantum computer to run its circuits multiple times. “For the zero-noise extrapolation method we have used here, we need to run the same experiment at three different noise levels,” Temme says. “This is a cost that has to be paid for every data point in the calculation—that is, each time we use the processor.”
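The overhead Temme describes compounds multiplicatively: every data point must be measured at every noise level, each with many repeated runs (shots). A minimal sketch, where the three noise levels come from the quote and the data-point and shot counts are hypothetical placeholders:

```python
def circuit_executions(data_points: int, noise_levels: int = 3, shots: int = 1_000) -> int:
    """Total circuit runs if every data point is measured at every noise level.

    noise_levels=3 matches the three levels Temme mentions; the default shot
    count is a hypothetical placeholder, not a figure from the study.
    """
    return data_points * noise_levels * shots


with_zne = circuit_executions(data_points=20)
without_zne = circuit_executions(data_points=20, noise_levels=1)
print(with_zne, without_zne, with_zne // without_zne)
```

Whatever the shot count, using three noise levels triples the processor time compared with a single unmitigated run.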

IBM notes that these new findings represent early results on quantum error mitigation at this scale. “We think there still is quite a bit of room for improvement in these methods,” Temme says. Future research can also test if quantum error mitigation can apply generally, as the company hopes, to other kinds of quantum applications beyond this one model, he adds.

IBM says its quantum computers running both on the cloud and on-site at partner locations in Japan, Germany, and the United States will be powered by a minimum of 127 qubits over the course of the next year.

“Ultimately, we will want to have a fault-tolerant quantum computer,” Temme says. “The long-term direction has to be to bridge these results all the way up to a point where we can use quantum error correction. We expect this to drive the hardware development, where every component-wise improvement now translates to more complex calculations that can be run, leading to a smoother transition to a fault-tolerant device.”

The scientists detailed their findings 14 June in the journal Nature.

This article appears in the October 2023 print issue as “IBM’s Quantum Computer Can Beat a Supercomputer—Sometimes.”

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.