Using lasers, scientists can deep-freeze atoms down to a hair’s breadth above absolute zero. Because such cold atoms move very slowly, their properties can easily be measured. That makes them especially good for making ever-more-precise atomic clocks, navigation systems, and measurements of the Earth. But chilling atoms and keeping them confined usually requires an apparatus that’s mounted on a table, and so those clocks and navigation devices tend to be stuck in the lab.

Now, scientists might have found a way to change that. Researchers at the U.S. National Institute of Standards and Technology (NIST) have built a hand-size device that can trap atoms in a magnetic field and chill them to less than a millikelvin. This miniaturization could help cold atoms leave the laboratory and start down the road to chip-scale devices.

“There’s been this dream since the early 2000s to take [laser cooling] off of large optical tables...and make real devices out of them,” says William McGehee, a NIST researcher who helped build the device.

Scientists have used laser cooling for decades. It might seem counterintuitive, but if a laser is fine-tuned to just the right frequency (slightly below the one the atom naturally absorbs) and its photons strike an atom head-on, like a headwind, the atom can absorb one of those photons and slow down. Each absorption strips away some of the atom’s kinetic energy and cools it. By repeatedly bombarding a cloud of atoms this way, scientists can plunge them to millionths of a degree above absolute zero.
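To put "repeatedly bombarding" into rough numbers, here is a back-of-envelope sketch, not NIST's analysis. It assumes rubidium-87 (the article names rubidium but not the isotope) cooled on its 780-nanometer D2 line, and treats each absorbed photon as removing one photon's momentum, h/λ, from an atom moving at the most probable speed of a room-temperature gas. It ignores the random kicks from re-emitted photons, which average out over many cycles. Under those assumptions, each photon slows the atom by roughly 6 millimeters per second, so stopping a roughly 240-meter-per-second atom takes on the order of 40,000 absorptions.

```python
import math

# Physical constants (SI units)
H = 6.62607015e-34        # Planck constant, J*s
K_B = 1.380649e-23        # Boltzmann constant, J/K
AMU = 1.66053906660e-27   # atomic mass unit, kg

# Assumptions for illustration: rubidium-87 cooled on the 780 nm D2 line
MASS_RB87 = 86.909 * AMU  # kg
WAVELENGTH = 780.24e-9    # m


def recoil_velocity(mass: float, wavelength: float) -> float:
    """Velocity change from absorbing one photon: v = h / (m * lambda)."""
    return H / (mass * wavelength)


def most_probable_speed(mass: float, temperature: float) -> float:
    """Most probable speed of a Maxwell-Boltzmann gas: sqrt(2 k_B T / m)."""
    return math.sqrt(2 * K_B * temperature / mass)


def photons_to_stop(mass: float, wavelength: float, temperature: float) -> float:
    """Approximate number of head-on absorptions needed to bring an atom to rest."""
    return most_probable_speed(mass, temperature) / recoil_velocity(mass, wavelength)


if __name__ == "__main__":
    v_rec = recoil_velocity(MASS_RB87, WAVELENGTH)
    v_room = most_probable_speed(MASS_RB87, 300.0)
    n = photons_to_stop(MASS_RB87, WAVELENGTH, 300.0)
    print(f"velocity change per photon: {v_rec * 1e3:.1f} mm/s")
    print(f"room-temperature speed:     {v_room:.0f} m/s")
    print(f"photons needed to stop:     {n:,.0f}")
```

Real cooling cycles are messier (the atom must be near resonance to absorb, and it scatters photons in all directions), so treat the photon count as an order-of-magnitude estimate.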

Laser cooling helped physicists create the exotic state of matter known as a Bose-Einstein condensate, work that won the 2001 Nobel Prize in Physics. But cold atoms have more applications than fundamental physics. In addition to clocks and navigation, they’re ideal for building quantum computers and for linking them into quantum networks.

That’s part of what inspired the NIST researchers to find laser-cooling methods more amenable to industry. Other compact laser-cooling techniques do exist, but they are difficult to mass-produce at the scales future devices might need. “If you want to make, say, a hundred thousand nodes of a quantum network,” McGehee says, “you can’t take something that’s just a small version of what sits on an optics bench and make a hundred thousand of them.”

The NIST team’s device, about 15 centimeters long, contains three parts. First is a photonic integrated circuit that launches a laser beam. That beam is too narrow, so it next passes through a metasurface, an array of silicon pillars that acts like a lens, widening the beam. The metasurface also evens out the beam’s intensity so it isn’t brightest at its center, and it sets the light’s polarization, which is essential for cooling the atoms.

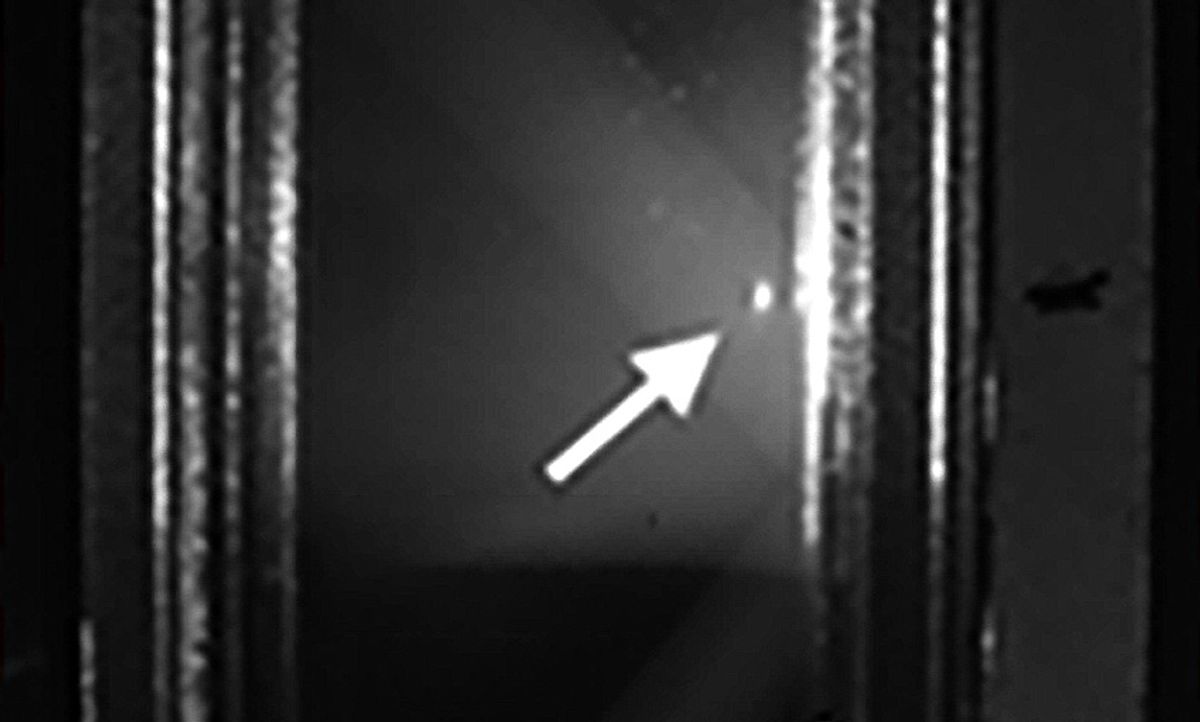

The third part is a diffraction grating chip that splits the widened beam into multiple beams that strike the atoms from all sides. Those beams, with the help of a magnetic field, cool the atoms and corral them into a trap that’s about a centimeter wide. Using that trap, the NIST group has been able to cool rubidium atoms down to around 200 microkelvins.
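To connect the 200-microkelvin figure to the "move very slowly" point made at the top, here is a small sketch using the equipartition relation for the root-mean-square velocity along one axis, sqrt(k_B·T/m). As in any such estimate, the isotope (rubidium-87) is an assumption, and room temperature is taken as 300 kelvins for comparison. Because speed scales with the square root of temperature, cooling from 300 K to 200 µK cuts the typical speed by a factor of sqrt(1.5 million), or about 1,200: from roughly 170 meters per second to roughly 14 centimeters per second.

```python
import math

K_B = 1.380649e-23         # Boltzmann constant, J/K
AMU = 1.66053906660e-27    # atomic mass unit, kg
MASS_RB87 = 86.909 * AMU   # assumption: rubidium-87 atoms


def rms_velocity_1d(mass: float, temperature: float) -> float:
    """RMS velocity along one axis from equipartition: sqrt(k_B * T / m)."""
    return math.sqrt(K_B * temperature / mass)


if __name__ == "__main__":
    # Compare a room-temperature vapor with the trap temperature reported by NIST
    for label, temp in [("room temperature", 300.0), ("NIST trap", 200e-6)]:
        v = rms_velocity_1d(MASS_RB87, temp)
        print(f"{label:>16}: {temp:.3g} K -> {v:.3g} m/s")
```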

The device and its components are flat, so they can sit in parallel atop a larger chip, and they could potentially be manufactured and placed alongside traditional CMOS devices. “You could imagine taking them and stacking them in some packaged instrument,” says McGehee.

“This research clearly demonstrates how atomic systems can be assembled using integrated optics on a chip and other compact and reliable components,” says Chris Monroe, co-founder and chief scientist of IonQ, a firm specializing in using similar technology to build quantum computers.

The NIST device isn’t perfect, of course. Integrated cooling lasers like these won’t be as effective as their larger counterparts used in the lab, says McGehee. And manufacturers won’t be able to make cooled-atom devices just yet. Before that can happen, the NIST device will need to get even smaller: one-tenth or even one-twentieth its current size. That’s a goal McGehee believes is achievable.