This sponsored article is brought to you by the NYU Tandon School of Engineering.

If you’ve ever learned to cook, you know how daunting even simple tasks can be at first. It’s a delicate dance of ingredients, movement, heat, and techniques that newcomers need endless practice to master.

But imagine if you had someone – or something – to assist you. Say, an AI assistant that could walk you through everything you need to know and do in real time, making sure nothing is missed and guiding you to a stress-free, delicious dinner.

Claudio Silva, director of the Visualization Imaging and Data Analytics (VIDA) Center and professor of computer science and engineering and data science at the NYU Tandon School of Engineering and NYU Center for Data Science, is doing just that. He is leading an initiative to develop an artificial intelligence (AI) “virtual assistant” providing just-in-time visual and audio feedback to help with task execution.

And while cooking may be a part of the project to provide proof-of-concept in a low-stakes environment, the work lays the foundation for assistants that could one day be used for everything from guiding mechanics through complex repair jobs to helping combat medics perform life-saving surgeries on the battlefield.

“A checklist on steroids”

The project is part of a national effort involving eight other institutional teams, funded by the Defense Advanced Research Projects Agency (DARPA) Perceptually-enabled Task Guidance (PTG) program. With the support of a $5 million DARPA contract, the NYU group aims to develop AI technologies to help people perform complex tasks while making these users more versatile by expanding their skillset — and more proficient by reducing their errors.

The NYU group – including investigators from NYU Tandon’s Department of Computer Science and Engineering, the NYU Center for Data Science (CDS) and the Music and Audio Research Laboratory (MARL) – has been performing fundamental research on knowledge transfer, perceptual grounding, perceptual attention and user modeling to create a dynamic intelligent agent that engages with the user, responding not only to circumstances but also to the user’s emotional state, location, surrounding conditions and more.

Dubbing it a “checklist on steroids,” Silva says that the project aims to develop the Transparent, Interpretable, and Multimodal Personal Assistant (TIM), a system that can “see” and “hear” what users see and hear, interpret spatiotemporal contexts and provide feedback through speech, sound and graphics.

While the initial use cases chosen for evaluation focus on military applications such as assisting medics and helicopter pilots, countless other scenarios could benefit from this research: effectively any physical task.

“The vision is that when someone is performing a certain operation, this intelligent agent would not only guide them through the procedural steps for the task at hand, but also be able to automatically track the process, and sense both what is happening in the environment, and the cognitive state of the user, while being as unobtrusive as possible,” said Silva.

The project brings together a team of researchers from across computing, including visualization, human-computer interaction, augmented reality, graphics, computer vision, natural language processing, and machine listening. It includes 14 NYU faculty and students, with co-PIs Juan Bello, professor of computer science and engineering at NYU Tandon; Kyunghyun Cho and He He, associate and assistant professors (respectively) of computer science and data science at NYU Courant and CDS; and Qi Sun, assistant professor of computer science and engineering at NYU Tandon and a member of the Center for Urban Science + Progress.

The project uses the Microsoft Hololens 2 augmented reality system as the hardware platform testbed. Silva said that, because of its array of cameras, microphones, lidar scanners, and inertial measurement unit (IMU) sensors, the Hololens 2 headset is an ideal experimental platform for Tandon’s proposed TIM system.

“Integrating Hololens will allow us to deliver massive amounts of input data to the intelligent agent we are developing, allowing it to ‘understand’ the static and dynamic environment,” explained Silva, adding that the volume of data generated by the Hololens’ sensor array requires a remote AI system, which in turn demands a very high-speed, ultra-low-latency wireless connection between the headset and remote cloud computing.

To hone TIM’s capabilities, Silva’s team will train it on a process that is at once mundane and highly dependent on the correct, step-by-step performance of discrete tasks: cooking. A critical element in this video-based training process is to “teach” the system to locate, through interpretation of video frames, the starting and ending points of each action in the demonstration process.
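To make the idea of locating action boundaries concrete, here is a minimal sketch in Python. It is not the TIM team’s method: it simply assumes that some upstream model has already assigned one action label to each video frame, and it collapses runs of identical labels into segments with start and end times. The function name `segment_actions`, the label strings, and the frame rate are all hypothetical.

```python
from itertools import groupby


def segment_actions(frame_labels, fps=30.0):
    """Collapse per-frame action labels into (label, start_s, end_s) segments.

    frame_labels: one label per video frame (assumed output of some
    hypothetical per-frame classifier, not the actual TIM pipeline).
    fps: frames per second of the video (assumed value).
    """
    segments = []
    index = 0  # index of the first frame in the current run
    for label, run in groupby(frame_labels):
        length = len(list(run))
        start_s = index / fps
        end_s = (index + length) / fps  # end is exclusive: start of next run
        segments.append((label, start_s, end_s))
        index += length
    return segments


if __name__ == "__main__":
    # Toy example at 1 frame per second: chop for 3 s, stir for 2 s, chop again.
    labels = ["chop"] * 3 + ["stir"] * 2 + ["chop"]
    for label, start, end in segment_actions(labels, fps=1.0):
        print(f"{label}: {start:.1f}s to {end:.1f}s")
```

A real system would also have to cope with noisy per-frame predictions, for example by smoothing labels over a short window before segmenting, which this sketch leaves out.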

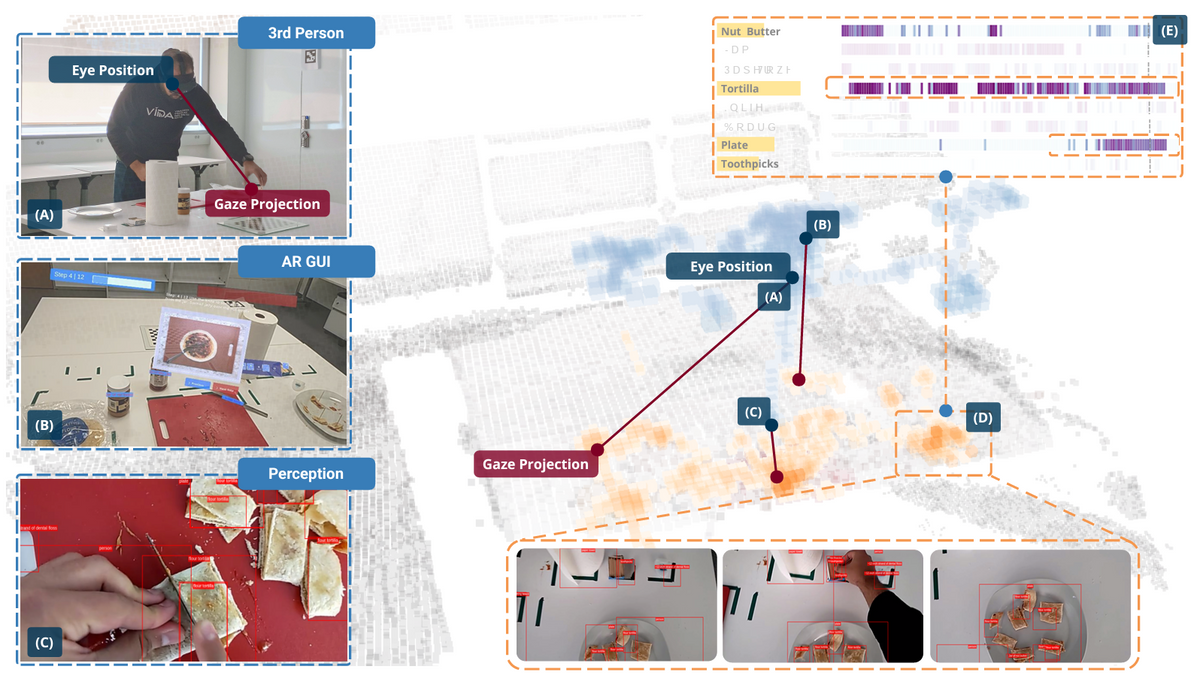

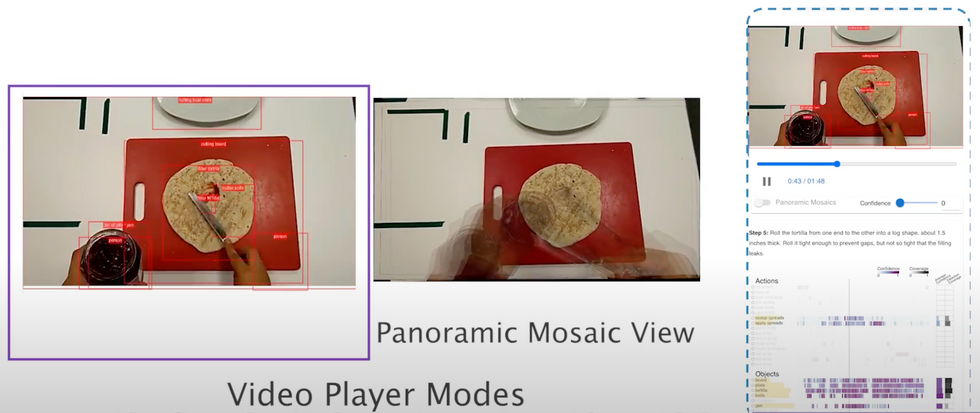

The team is already making substantial progress. Their first major paper, “ARGUS: Visualization of AI-Assisted Task Guidance in AR,” won a Best Paper Honorable Mention Award at IEEE VIS 2023. The paper proposes a visual analytics system they call ARGUS to support the development of intelligent AR assistants.

The system was designed as part of a multiyear collaboration between visualization researchers and ML and AR experts. It allows for online visualization of object, action, and step detection as well as offline analysis of previously recorded AR sessions. It visualizes not only the multimodal sensor data streams but also the output of the ML models. This allows developers to gain insights into the performer’s activities as well as the ML models, helping them troubleshoot, improve, and fine-tune the components of the AR assistant.

“It’s conceivable that in five to ten years these ideas will be integrated into almost everything we do.”

Where all things data science and visualization happen

Silva notes that the DARPA project, focused as it is on human-centered and data-intensive computing, is right at the center of what VIDA does: utilize advanced data analysis and visualization techniques to illuminate the underlying factors influencing a host of areas of critical societal importance.

“Most of our current projects have an AI component and we tend to build systems — such as the ARt Image Exploration Space (ARIES) in collaboration with the Frick Collection, the VisTrails data exploration system, or the OpenSpace project for astrographics, which is deployed at planetariums around the world. What we make is really designed for real-world applications, systems for people to use, rather than as theoretical exercises,” said Silva.

VIDA comprises nine full-time faculty members focused on applying the latest advances in computing and data science to solve varied data-related issues, including quality, efficiency, reproducibility, and legal and ethical implications. The faculty, along with their researchers and students, are helping to provide key insights into myriad challenges where big data can inform better future decision-making.

What separates VIDA from other groups of data scientists is that its members work with data along the entire pipeline, from collection to processing to analysis to real-world impact. They use their data in different ways (improving public health outcomes, analyzing urban congestion, identifying biases in AI models), but all of their work is rooted in this comprehensive view of data science.

The center has dedicated facilities for building sensors, processing massive data sets, and running controlled experiments with prototypes and AI models, among other needs. Other researchers at the school, sometimes blessed with data sets and models too big and complex to handle themselves, come to the center for help dealing with it all.

The VIDA team is growing, continuing to attract exceptional students and publishing data science papers and presentations at a rapid clip. But they’re still focused on their core goal: using data science to effect real-world change, from the most contained problems to the most socially destructive.
