If you believe the hype, AI will someday generate Hollywood films, cure cancer, and make driving a historical oddity. But at CES 2024, it’s tackling some more immediate and achievable goals.

LG and Samsung touted AI features that tune your television’s picture quality. Nvidia showed how its GPUs can find what you want in hundreds of documents. And dozens of startups pitched AI assistants, avatars, coaches, and tutors.

LG and Samsung become AI hub hopefuls

LG and Samsung positioned their new televisions as AI powerhouses. That’s not entirely novel: Both have used AI for years to upscale low-resolution content to 4K. For 2024, though, LG hopes its televisions can become assistants.

“We want to help people find the settings they need,” says David Park, head of customer enablement at LG. “This isn’t just a television throwing up a bunch of words at random. It’s much more conversational.”

LG demonstrated a chatbot interface users can converse with to find settings, optimize image quality, or troubleshoot problems. It’s not unlike the search functions on many devices today. But the chatbot, unlike search, can help you find a setting with a name you can’t remember or recommend settings based on what you’re trying to achieve.

Samsung went a step further, branding its new televisions as “AI screens.” Many features that fall under this umbrella are meant to improve image quality or motion clarity, but others target ease of use and accessibility. The company demonstrated an on-device, AI-powered optical character recognition (OCR) technology that can serve as a “voice guide” for subtitles. It also showed a mode called Relumino Together, which uses AI to enhance the image for those with low vision.

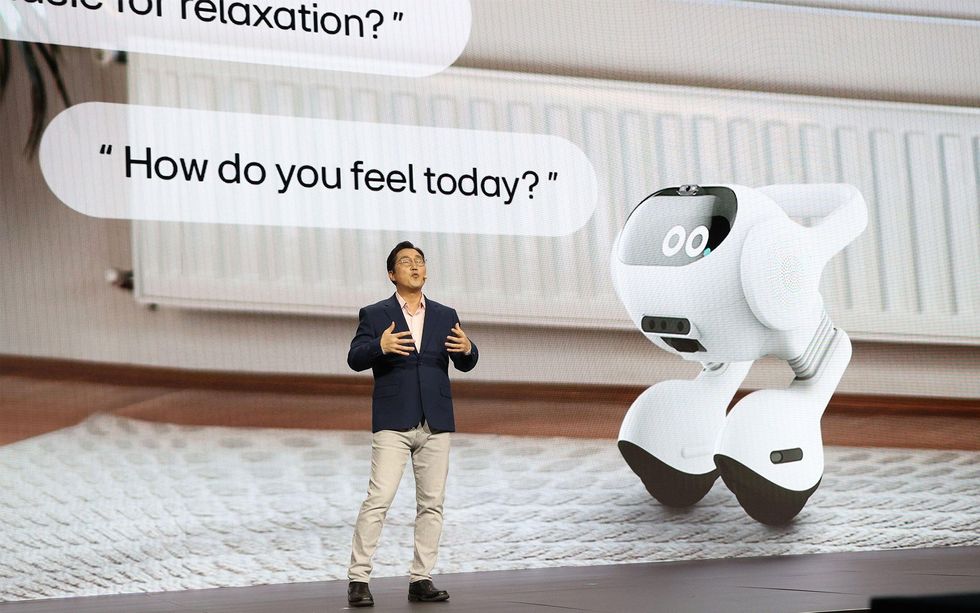

Both companies also came to the show with AI robots for the home. Samsung’s is a new iteration of Ballie, first shown at CES 2020, while LG touted its “two-wheeled AI agent.” The dream is to embody the AI services people might access on a TV, computer, or phone. Ballie even has a built-in projector that lets you bring whatever you’re viewing on a television or laptop with you.

But these robots also outline the limits of AI at CES 2024. They’re just prototypes for now, and it’s unclear when (or if) they’ll see release.

Startup makes AI your instruction manual

Of course, it’s not just tech giants looking to get in on the AI buzz. Eureka Park, a hub for startups on the CES show floor, was bursting with AI-powered assistants.

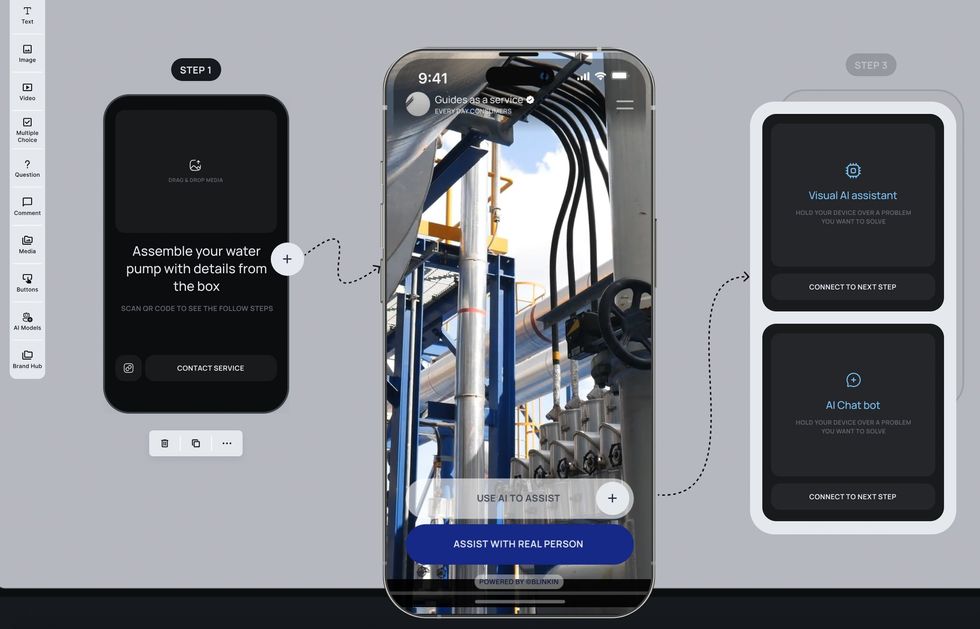

Among these was BlinkIn, a German startup building a multimodal “companion experience” called Houston, which is accessed through a smartphone app. Its purpose is similar to the chatbot on LG’s TV, but BlinkIn wants to offer advice for everything in your home, from your coffee maker to your washing machine. You can snap a photo of your device and ask a question about it.

This is a task that OpenAI’s ChatGPT, which has multimodal capabilities including image and audio prompts, can already tackle. But ChatGPT can prove an unreliable assistant. If I ask it how to clean my coffee maker, for example, it usually provides a meandering, long-winded answer with only some details relevant to my particular appliance.

“We want to help you not get a wall of text,” says Akshay Joshi, senior applied AI scientist at BlinkIn. Joshi told me the company is using open-source multimodal models, such as MiniGPT-4 (which is not affiliated with OpenAI), to tune the app’s replies, although it will use ChatGPT as a fallback.

BlinkIn also hopes to fill the trust gap with a community-driven approach. Users who feel helpful can provide answers that its AI model will use as training data, and Houston will surface and cite these answers (complete with user profiles). Josef Suess, CEO of BlinkIn, says this will add a communal feel similar to Reddit or YouTube. “Who do you trust more as a user? A content creator, or a brand?” he asks. “We want to build a platform where it’s about helping each other.”

Nvidia shows off encyclopedia GPU

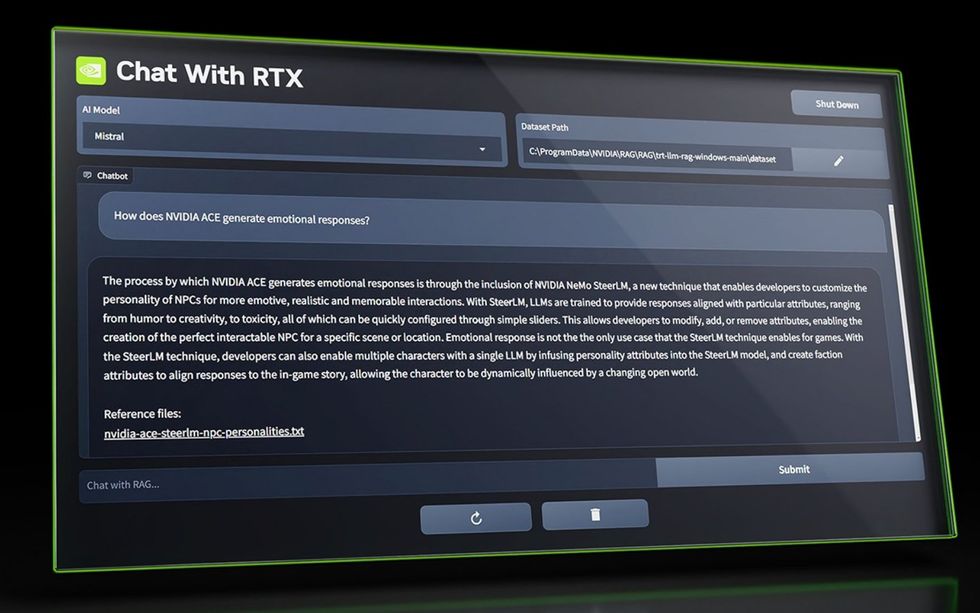

Everyone knows Nvidia’s hardware is great for AI (and, in case you’d forgotten, it came to CES 2024 touting the AI power of three new RTX Super desktop graphics cards). Yet when it comes to running AI yourself on a home PC, putting an Nvidia GPU to work often requires a tour through GitHub or Hugging Face and several hours of effort.

Nvidia brought a solution to CES 2024: Chat with RTX. It’s a Windows application that lets you prompt a large language model with questions about a set of documents provided to the app. Chat with RTX currently supports two large language models: Meta’s Llama 2 and Mistral AI’s Mistral. The models load on your PC, and all AI inference occurs on your local Nvidia GPU, which means conversations in the app are kept private.
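
Nvidia did not detail the app’s internals at the show, but the general idea of asking a model about your own files can be illustrated with a toy example. The Python sketch below is not Nvidia’s implementation: it assumes a simple word-overlap score to pick the most relevant documents, then folds them into a prompt. Every name in it (`tokenize`, `score`, `top_documents`, `build_prompt`) is hypothetical, and a real system would likely use a far more capable retrieval method and hand the prompt to a locally loaded model.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(question: str, document: str) -> int:
    """Sum the document's occurrences of each distinct word in the question."""
    doc_counts = Counter(tokenize(document))
    return sum(doc_counts[word] for word in set(tokenize(question)))


def top_documents(question: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of up to k documents with a nonzero score, best first."""
    scores = {name: score(question, text) for name, text in documents.items()}
    ranked = sorted(scores, key=lambda name: scores[name], reverse=True)
    return [name for name in ranked if scores[name] > 0][:k]


def build_prompt(question: str, documents: dict[str, str], k: int = 2) -> str:
    """Assemble a prompt that places the selected documents ahead of the question."""
    selected = top_documents(question, documents, k)
    context = "\n\n".join(f"[{name}]\n{documents[name]}" for name in selected)
    return f"Answer using only these documents:\n\n{context}\n\nQuestion: {question}"
```

Given a few short text files and a question, `build_prompt` returns a single string that a language model could answer from. Because the whole step is plain local code, nothing in it needs to leave the PC, which is the privacy property the app claims for itself.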

I had a chance to use Chat with RTX at the show, and I was immediately struck by its speed. The app responded to my questions with no noticeable delay. I was also able to quickly switch between different sets of documents (the app can read Word documents, PDFs, text files, and XML). It’s a solution I’m eager to try at home, as I have several piles of PDF documents that are a bear to search.

Chat with RTX, like all the AI demos I saw at CES, still needs work. The interface is bare-bones and its capabilities are limited to specific situations. Still, CES made clear there’s no shortage of interest in custom-tailored AI assistants built to achieve particular goals. Expect to see more refined iterations of these ideas appear through 2024.

Matthew S. Smith is a freelance consumer technology journalist with 17 years of experience and the former Lead Reviews Editor at Digital Trends. An IEEE Spectrum Contributing Editor, he covers consumer tech with a focus on display innovations, artificial intelligence, and augmented reality. A vintage computing enthusiast, Matthew covers retro computers and computer games on his YouTube channel, Computer Gaming Yesterday.