Imagine being able to see fast-moving objects under poor lighting conditions – all with a wider angle of view. We humans will have to make do with the vision system we’ve evolved, but computer vision is always reaching new limits. In a recent advancement, a research team in South Korea has combined two different types of cameras in order to better track fast-moving objects and create 3D maps of challenging environments.

The first type of camera used in this new design is an event-based camera, which excels at capturing fast-moving objects. The second is an omni-directional (or fisheye) camera, which captures very wide angles.

Kuk-Jin Yoon, an associate professor at the Visual Intelligence Lab at the Korea Advanced Institute of Science and Technology, notes that both camera types offer advantages that are desirable for computer vision. “The event camera has much less latency, less motion blur, much higher dynamic range, and excellent power efficiency,” he says. “On the other hand, omni-directional cameras (cameras with fisheye lenses) allow us to get visual information from much wider views.”

His team sought to combine these approaches in a new design called event-based omnidirectional multi-view stereo (EOMVS). In terms of hardware, this means incorporating a fisheye lens with an event-based camera.

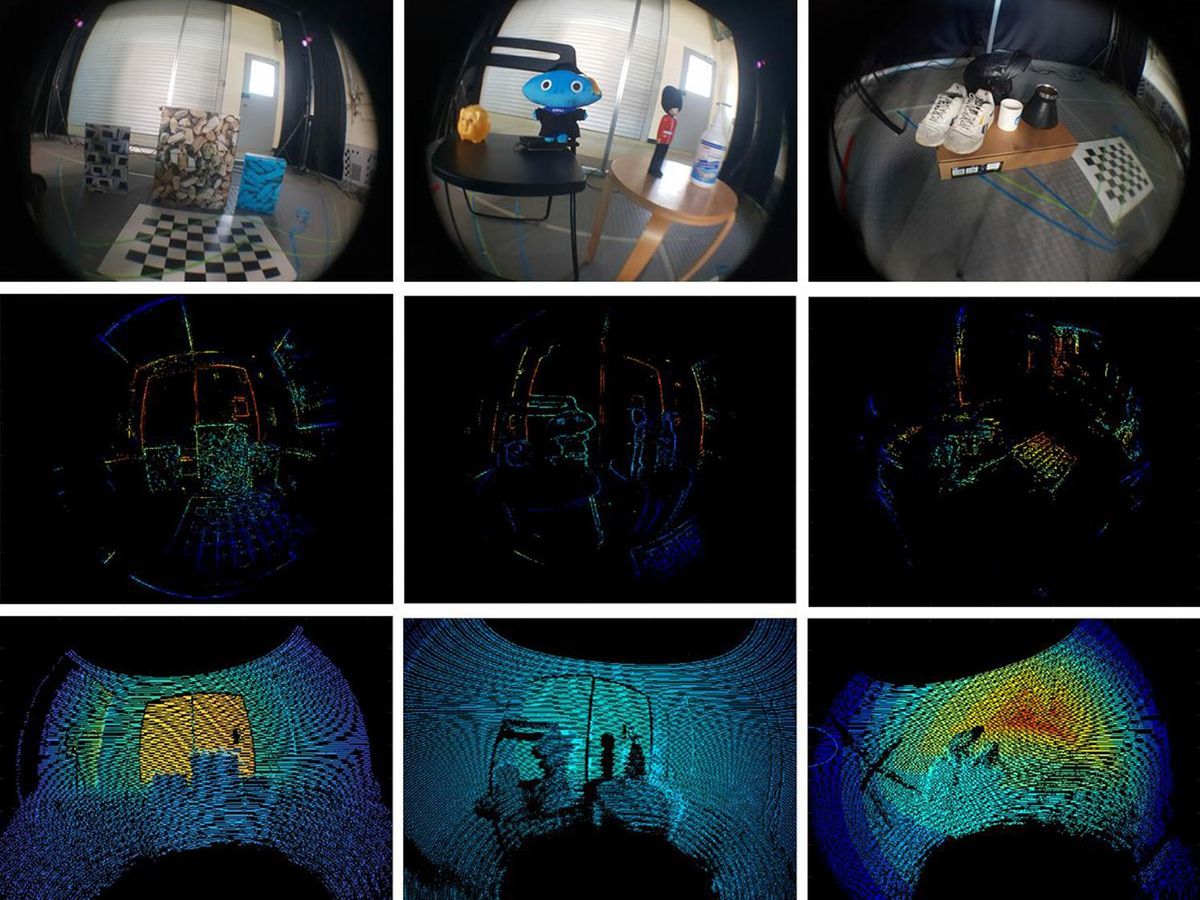

Next, software is needed to reconstruct 3D scenes with high accuracy. A common approach is to take multiple images from different camera angles and recover 3D information from them. Yoon and his colleagues used a similar approach, but rather than using images, the new EOMVS approach reconstructs 3D spaces using event data captured by the modified event camera.
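To make the idea of reconstructing from event data more concrete, here is a minimal sketch of one step such a pipeline needs: turning the pixel location of a single event into a viewing ray through a fisheye lens. It is an illustration only, not the researchers' code. The `Event` type, the `event_to_ray` function, and the equidistant lens model (image radius = focal length × angle from the optical axis) are assumptions made for this example; the article does not say which lens model EOMVS uses.

```python
import math
from typing import NamedTuple, Tuple


class Event(NamedTuple):
    """One event from an event camera (hypothetical layout for this sketch)."""
    x: float       # pixel column
    y: float       # pixel row
    t: float       # timestamp in seconds
    polarity: int  # +1 for brightness increase, -1 for decrease


def event_to_ray(ev: Event, cx: float, cy: float, f: float) -> Tuple[float, float, float]:
    """Back-project an event pixel to a unit viewing ray.

    Assumes an equidistant fisheye model, r = f * theta, where r is the
    distance from the image centre (cx, cy) in pixels and theta is the
    angle between the ray and the optical axis (the +z direction).
    """
    dx = ev.x - cx
    dy = ev.y - cy
    r = math.hypot(dx, dy)
    if r == 0.0:
        # An event at the image centre looks straight along the optical axis.
        return (0.0, 0.0, 1.0)
    theta = r / f
    scale = math.sin(theta) / r
    return (dx * scale, dy * scale, math.cos(theta))


# Usage: with f = 100 pixels, an event about 157 pixels right of centre
# corresponds to a ray 90 degrees off-axis, i.e. the edge of a 180° view.
centre_ray = event_to_ray(Event(320.0, 240.0, 0.0, 1), cx=320.0, cy=240.0, f=100.0)
edge_ray = event_to_ray(Event(320.0 + 100.0 * math.pi / 2, 240.0, 0.001, -1),
                        cx=320.0, cy=240.0, f=100.0)
```

With rays like these and a known camera position for each event, a multi-view method can look for points in space where rays from different viewpoints agree.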

The researchers tested their design against LiDAR measurements, which are known to be highly accurate for mapping out 3D spaces. The results were published July 9 in IEEE Robotics and Automation Letters. In their paper, the authors note that they believe this work is the first attempt to set up and calibrate an omnidirectional event camera and use it to solve a vision task. They tested the system with the field of view set at 145°, 160°, and 180°.

The endeavor was largely a success. Yoon notes that the EOMVS system was very accurate at mapping out 3D spaces, with an error rate of 3 percent. The approach also delivered the advantages expected from combining the two camera types. “[Using EOMVS] we can detect and track very fast-moving objects under very severe illumination without losing them in the field of view,” says Yoon. “In that sense, 3D mapping with drones can be the most promising real-world application of the EOMVS.”

He says his team is planning to commercialize this new design, as well as build upon it. The current EOMVS design requires knowledge of where the camera is positioned in order to piece together and analyze the data. But the researchers are interested in devising a more flexible design, where the exact position of the camera does not need to be known beforehand. To achieve this, they aim to incorporate an algorithm that estimates the positions of the camera as it moves.

Michelle Hampson is a freelance writer based in Halifax. She frequently contributes to Spectrum's Journal Watch coverage, which highlights newsworthy studies published in IEEE journals.