Inspired by the eyes of extinct trilobites, researchers have created a miniature camera with a record-setting depth of field—the distance over which a camera can produce sharp images in a single photo. Their new study reveals that with the aid of artificial intelligence, their device can simultaneously image objects as near as 3 centimeters and as far away as 1.7 kilometers.

Five hundred million years ago, the oceans teemed with horseshoe-crab-like trilobites. Among the most successful of all early animals, these armored invertebrates lived on Earth for roughly 270 million years before going extinct.

Like insects, trilobites possessed compound eyes. One trilobite species, Dalmanitina socialis, possessed a unique kind of compound eye never seen before or since. Each of its eyes was mounted on a stalk and possessed two sets of lenses that bent light at different angles, acting like bifocals. These eyes helped this species see both near and far at the same time.

Scientists from several labs in China and at the National Institute of Standards and Technology (NIST) in Gaithersburg, Md., used the eyes of D. socialis to help them develop a new light-field camera. These cameras attempt to capture the field of light rays that might travel from every point and in every direction within a scene.

Previous light-field cameras often used arrays of tiny lenses to capture light rays from a variety of directions. However, these prior devices often had to trade off resolution against depth of field.

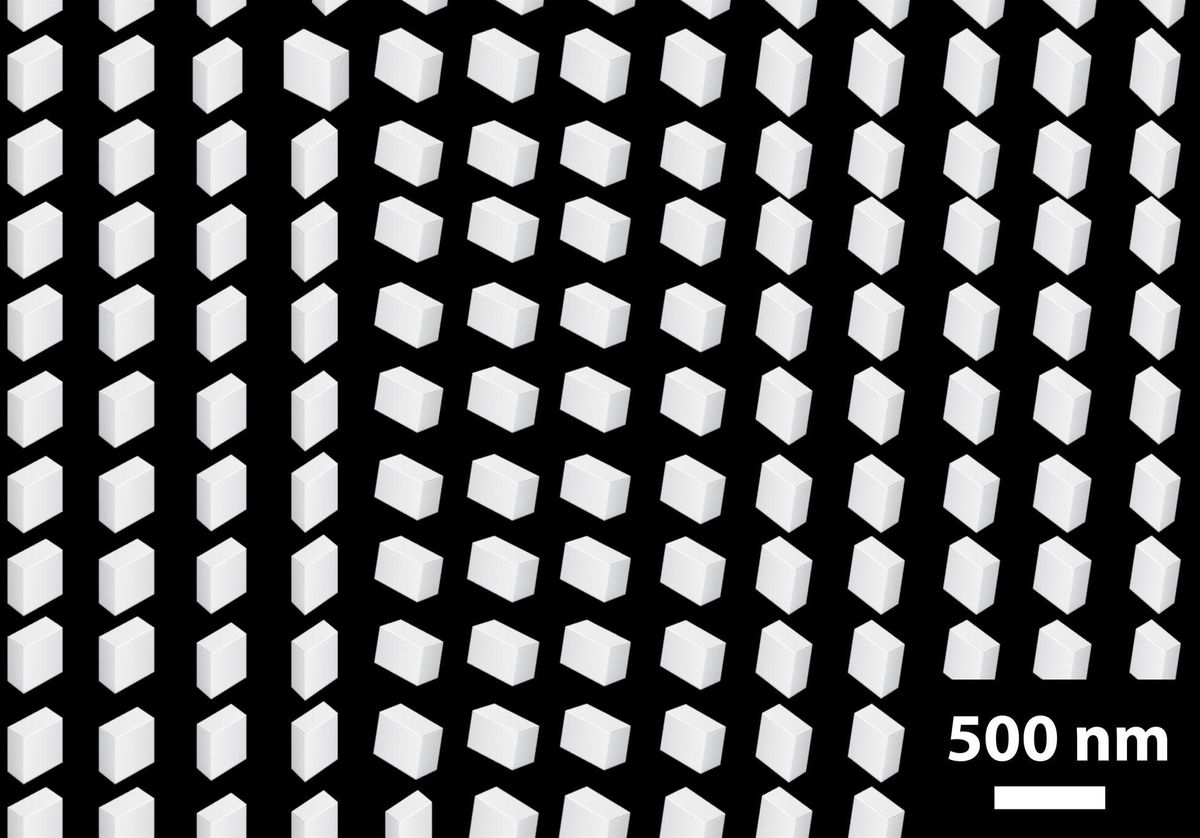

In the new study, the researchers experimented with metasurfaces, which are surfaces covered with forests of microscopic pillars. By tuning the spacing between these pillars—typically less than a wavelength of light—as well as their size and shape, metasurfaces can manipulate light in a variety of ways.

The scientists fabricated metasurface lenses made of flat panes of glass studded with millions of rectangular nanometer-scale pillars. The light these metalenses captured from a scene can be divided into two equal parts: right-handed circularly polarized light waves, whose electric fields rotate clockwise, and left-handed circularly polarized light waves, whose electric fields rotate counterclockwise.

The nanopillars were designed so that each one bent right-handed and left-handed circularly polarized light by different amounts. Right-handed circularly polarized light had to travel through the longer part of each rectangular nanopillar, whereas left-handed circularly polarized light flowed through the shorter part. Light traveling over a longer path had to pass through more material and experienced greater bending.

Light that is bent by different amounts is brought to a different focus. The greater the bending, the closer the light is focused. Because of this, right-handed circularly polarized light was focused on near objects, whereas left-handed circularly polarized light was focused on far objects.
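The link between bending strength and focus distance can be illustrated with the textbook thin-lens equation, 1/f = 1/d_o + 1/d_i. The sketch below is an idealization, not the researchers' design: the 50-millimeter lens-to-sensor distance is a hypothetical value chosen for illustration, and only the 3-centimeter and 1.7-kilometer object distances come from the reported results.

```python
# Thin-lens sketch: which focal length brings an object at a given
# distance into focus on a sensor at a fixed distance behind the lens?
# SENSOR_M is a hypothetical value, not a parameter of the real device.

SENSOR_M = 0.05   # assumed lens-to-sensor distance, in meters
NEAR_M = 0.03     # nearest object distance reported in the article
FAR_M = 1700.0    # farthest object distance reported in the article


def focal_length(object_m: float, sensor_m: float) -> float:
    """Solve 1/f = 1/d_o + 1/d_i for f (all distances in meters)."""
    return 1.0 / (1.0 / object_m + 1.0 / sensor_m)


f_near = focal_length(NEAR_M, SENSOR_M)  # channel focused on near objects
f_far = focal_length(FAR_M, SENSOR_M)    # channel focused on far objects

print(f"near-channel focal length: {f_near * 1000:.2f} mm")
print(f"far-channel focal length:  {f_far * 1000:.2f} mm")
```

Under these assumptions, the channel focused on near objects needs the shorter focal length, which corresponds to the stronger bending described above.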

“There is no equivalent bulk optical component that can do this function—that is, focus light close and far at the same time,” says study coauthor Amit Agrawal, an electrical engineer at NIST. “This is only enabled by our ability to engineer light-matter interactions at nanometer-length scales.”

All in all, this metalens could simultaneously act like a telephoto lens to focus on distant objects and a macro lens to focus on close ones. However, that would mean the lens by itself could not focus on objects at intermediate distances—for example, objects just a few meters from the camera.
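A rough way to see why intermediate distances fall out of focus is to estimate the geometric blur spot each focus produces on the sensor. The sketch below uses the thin-lens equation and similar triangles; the 50-millimeter sensor distance and 1-millimeter aperture are hypothetical values for illustration only, and the 3-meter test distance is simply an example of "a few meters."

```python
# Geometric blur sketch for a lens with two foci sharing one sensor.
# SENSOR_M and APERTURE_M are hypothetical, not taken from the study.

SENSOR_M = 0.05      # assumed lens-to-sensor distance, in meters
APERTURE_M = 0.001   # assumed aperture diameter, in meters


def focal_length(object_m: float, sensor_m: float) -> float:
    """Focal length that images an object at object_m onto the sensor."""
    return 1.0 / (1.0 / object_m + 1.0 / sensor_m)


def blur_diameter(object_m: float, focal_m: float) -> float:
    """Blur-spot diameter on the sensor for an object at object_m."""
    if object_m <= focal_m:
        raise ValueError("object must lie beyond the focal length")
    # Distance behind the lens where this object would be sharply imaged.
    image_m = 1.0 / (1.0 / focal_m - 1.0 / object_m)
    # Similar triangles: the light cone's width where it meets the sensor.
    return APERTURE_M * abs(image_m - SENSOR_M) / image_m


f_near = focal_length(0.03, SENSOR_M)    # focus tuned to 3 centimeters
f_far = focal_length(1700.0, SENSOR_M)   # focus tuned to 1.7 kilometers

blur_mid_near = blur_diameter(3.0, f_near)  # 3 m object, near channel
blur_mid_far = blur_diameter(3.0, f_far)    # 3 m object, far channel

print(f"3 m object, near channel blur: {blur_mid_near * 1e3:.3f} mm")
print(f"3 m object, far channel blur:  {blur_mid_far * 1e3:.3f} mm")
```

In this idealized model each channel gives essentially zero blur at its own design distance, while an object at 3 meters is blurred in both channels. That residual blur is the gap the article says the lens alone cannot close.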

To account for this middle space, the researchers used a convolutional neural network, a system that roughly mimics how the human brain processes visual data, to recognize and correct for defects such as blurriness. This helped the camera reconstruct light-field data over a large depth of field from a single shot. Because the network corrects aberrations, it also made the camera highly tolerant of potential defects in the metalens.

“The beauty of metasurface optics, and the large interest from the commercial sector, is that now one can envision making photonic components in a standard CMOS foundry platform,” Agrawal says. “That would scale up manufacturing, reduce cost, and so on.”

The researchers suggest their cameras might find use in consumer cameras, machine vision, optical microscopy, and more.

“I think some more work needs to be done where the processors in our cellphone or smart glasses can do this,” Agrawal says. “But from an optics perspective, I think there are no fundamental obstacles.”

The scientists detailed their findings online 19 April in the journal Nature Communications.

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.