Processing images to allow self-driving cars to see where they’re going could get easier thanks to a specially sculpted lens that does the work of a computer.

Dutch and American researchers say they can use a metasurface to passively detect the edges of objects in video. Computers can perform such edge detection for autonomous vehicles or virtual reality applications, but that uses power and is not instantaneous. “If you want to do that digitally, it takes time for the computer to compute,” says Andrea Cordaro, a PhD student at AMOLF, a scientific research institute in Amsterdam, the Netherlands.

In a paper in Nano Letters, Cordaro and colleagues, including Albert Polman, who heads the Light Management in New Photovoltaic Materials group at AMOLF, and Andrea Alù at the City University of New York, describe how their material performs the mathematical operations necessary for edge detection.

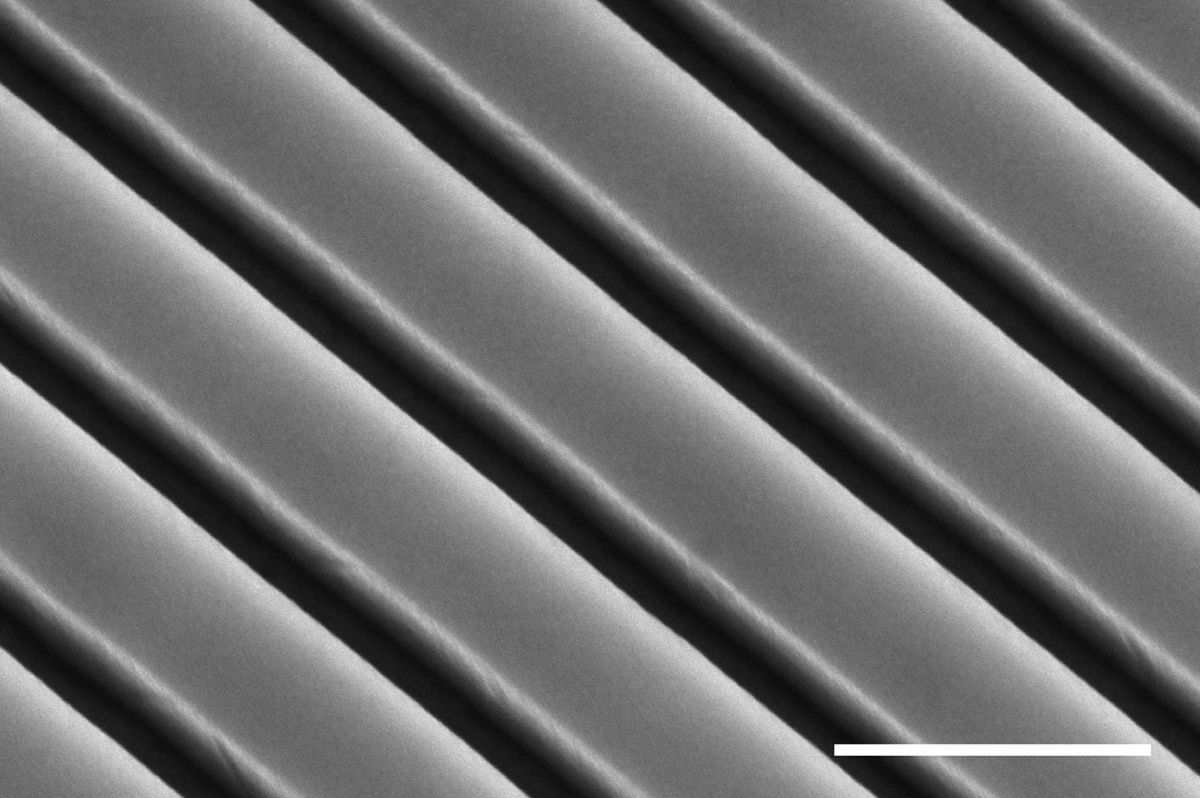

They built a metasurface, a surface studded with tiny pillars, each smaller than the wavelength of light, that can manipulate light in unusual ways depending on the pillars' size and arrangement. In this case, they started with a thin sheet of sapphire, less than half a millimeter thick, and added pillars of silicon that were 206 nm wide, 142 nm tall, and spaced 300 nm apart.

When placed on the surface of a standard CCD chip, the metasurface acts like a lens, passing light that strikes it at steeper angles but filtering out light that arrives nearly head-on. The features of an image are built from combinations of different light waves, and the waves that get filtered out carry the broad, slowly varying parts of the image, leaving only the sharper components, such as the edges of a person’s face compared to the whiteboard behind her.
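The digital counterpart of this kind of filtering can be sketched in a few lines. The example below is only an illustration, not the researchers' method or the metasurface's measured response: it assumes an idealized filter that blocks every spatial frequency below a hypothetical `cutoff` along one axis, and applies it to a made-up scene containing a single bright bar. The function name `high_pass_edges_1d` and all the numbers are assumptions chosen for the demonstration.

```python
import numpy as np

def high_pass_edges_1d(image, cutoff=0.05):
    """Suppress low spatial frequencies along the x axis (columns).

    `cutoff` is a hypothetical threshold in cycles per pixel; it is not
    a property of the metasurface described in the article.
    """
    # Spatial frequency of each FFT bin along x, in cycles per pixel.
    kx = np.fft.fftfreq(image.shape[1])
    # Idealized transfer function: block |kx| < cutoff, pass everything else.
    transfer = (np.abs(kx) >= cutoff).astype(float)
    # Filter each row in the frequency domain, then transform back.
    spectrum = np.fft.fft(image, axis=1)
    filtered = np.fft.ifft(spectrum * transfer, axis=1)
    return np.abs(filtered)

# Toy scene: a bright bar (columns 48 to 79) on a dark background.
scene = np.zeros((8, 128))
scene[:, 48:80] = 1.0

edges = high_pass_edges_1d(scene)
row = edges[0]

# The response should be strongest next to the bar's two vertical edges
# and weak inside the bar and far out in the background.
left_peak = int(np.argmax(row[:64]))
right_peak = 64 + int(np.argmax(row[64:]))
print("strongest response near columns", left_peak, "and", right_peak)
```

Because the filter acts along only one axis, it picks out vertical edges and ignores horizontal ones, which mirrors the one-dimensional behavior the article describes for the current design.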

Depending on the computer and the size of the image, it might take several milliseconds to process this information digitally. With the analog approach, the only limiting factor is the thickness of the metasurface. “It’s just the time light takes to travel 150 nm, which is basically nothing,” Cordaro says.
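To put "basically nothing" into numbers, here is a rough back-of-the-envelope calculation. It assumes the 150 nm figure from the quote and treats the light as crossing a uniform slab. The refractive index of 4.0 is an illustrative round value for silicon at visible wavelengths, not a figure from the paper, and `transit_time_s` is a helper invented for this sketch.

```python
# Speed of light in vacuum, in meters per second (exact by definition).
C_VACUUM_M_PER_S = 299_792_458.0

def transit_time_s(thickness_m, refractive_index=1.0):
    """Time for light to cross a uniform slab of the given thickness."""
    return thickness_m * refractive_index / C_VACUUM_M_PER_S

thickness_m = 150e-9  # the 150 nm quoted in the article

t_vacuum = transit_time_s(thickness_m)
# Assumed index of about 4.0 for silicon; an illustrative value only.
t_silicon = transit_time_s(thickness_m, refractive_index=4.0)

print(f"vacuum:  {t_vacuum * 1e15:.2f} fs")
print(f"silicon: {t_silicon * 1e15:.2f} fs")
# How many such transits fit into one millisecond of digital processing.
print(f"transits per millisecond: {1e-3 / t_silicon:.1e}")
```

Either way the delay comes out at the femtosecond scale, many orders of magnitude below the milliseconds cited for digital processing.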

It’s also a passive technique. “It’s just a piece of glass, so you don’t need to give it power,” he says. Of course, the digital camera and a computer would still have a role, but Cordaro says this hybrid approach should be more efficient.

The researchers would like to try other materials, such as titanium oxide or silicon nitride, to see if they can get even better results. And while this metasurface captures edges in one dimension, they’d like to try two-dimensional designs, so they can capture edges at different orientations.

This post was updated on 7 February 2020.

Neil Savage is a freelance science and technology writer based in Lowell, Mass., and a frequent contributor to IEEE Spectrum. His topics of interest include photonics, physics, computing, materials science, and semiconductors. His most recent article, “Tiny Satellites Could Distribute Quantum Keys,” describes an experiment in which cryptographic keys were distributed from satellites released from the International Space Station. He serves on the steering committee of New England Science Writers.