If you ask Meta, or its peers, whether the metaverse is possible, the answer is confident: Yes—it’s just a matter of time. The challenges are vast, but technology will overcome them. This may be true of many problems facing the metaverse: Better displays, more sensitive sensors, and quicker consumer hardware will prove key. But not all problems can be overcome with improvements to existing technology. The metaverse may find itself bound by technical barriers that aren’t easily scaled by piling dollars against them.

The vision of the metaverse pushed by Meta is a fully simulated “embodied internet” experienced through an avatar. This implies a realistic experience where users can move through space at will and pick up objects with ease. But the metaverse, as it exists today, falls far short. Movement is restricted and objects rarely react as expected, if at all.

Louis Rosenberg, CEO of Unanimous AI and someone with a long history in augmented reality work, says the reason is simple: You’re not really there, and you’re not really moving.

“We humans have bodies,” Rosenberg said in an email. “If we were just a pair of eyes on an adjustable neck, VR headsets would work great. But we do have bodies, and it causes a problem I describe as ‘perceptual inconsistency.’ ”

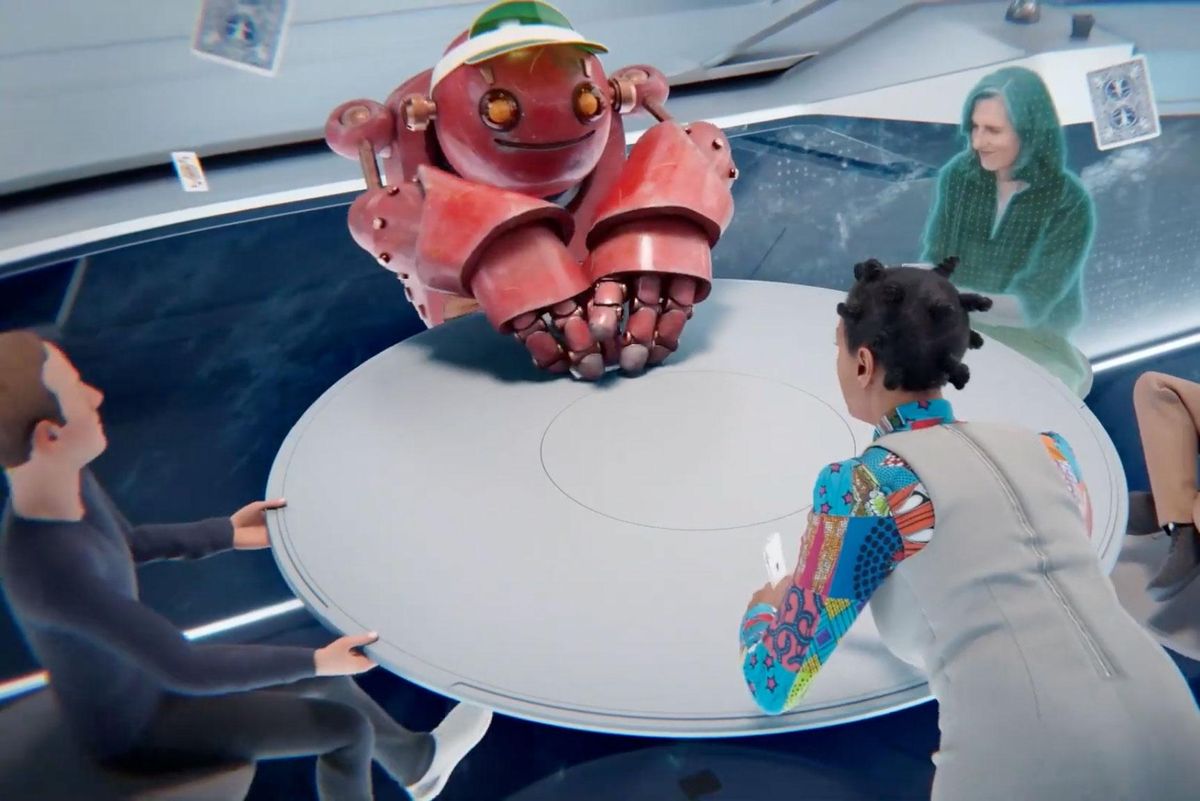

Meta frequently demos an example of this problem: friends surrounding a virtual table. The company’s press materials depict avatars fluidly moving around a table, standing up and sitting at a moment’s notice, interacting with the table and chairs as if they were real, physical objects.

“That can’t happen. The table is not there,” says Rosenberg. “In fact, if you tried to pretend to lean on the table, to make your avatar look like that, your hand would go right through it.”

Developers can attempt to fix the problem with collision detection that stops your hand from moving through the table. But remember—the table is not there. If your hand stops in the metaverse, but continues to move in reality, you may feel disoriented. It’s a bit like a prankster yanking a chair from beneath you moments before you sit down.
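To make the trade-off concrete, here is a minimal sketch of that kind of collision clamp, reduced to a single vertical axis. The names (`HandState`, `resolve_hand`, `mismatch_m`) and the table height are illustrative assumptions, not any platform's actual API. The point is only that the clamp keeps the avatar's hand on the table while the gap between the real and virtual hand grows.

```python
from dataclasses import dataclass


@dataclass
class HandState:
    real_height_m: float     # tracked height of the physical hand above the floor
    virtual_height_m: float  # height at which the avatar's hand is drawn


def resolve_hand(real_height_m: float, table_top_m: float) -> HandState:
    """Stop the avatar's hand at the table surface (hypothetical 1-D clamp).

    The real hand is free to keep moving down, because no table is there.
    """
    virtual_height_m = max(real_height_m, table_top_m)
    return HandState(real_height_m, virtual_height_m)


def mismatch_m(state: HandState) -> float:
    """Distance between where the hand is and where the user sees it."""
    return state.virtual_height_m - state.real_height_m


# Assumed values: a 0.75 m table, and a real hand that has dropped to 0.60 m.
state = resolve_hand(real_height_m=0.60, table_top_m=0.75)
print(f"avatar hand at {state.virtual_height_m:.2f} m, "
      f"real hand at {state.real_height_m:.2f} m, "
      f"mismatch {mismatch_m(state):.2f} m")
```

The larger that mismatch gets, the further what the user sees drifts from what the body feels, which is the disorientation described above.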

Meta is working on EEG and ECG biosensors which might let you move in the metaverse with a thought. This could improve range of movement and stop unwanted contact with real-world objects while moving in virtual space. However, even this can’t offer full immersion. The table still does not exist, and you still can’t feel its surface.

Rosenberg believes this will limit the potential of a VR metaverse to “short duration activities” like playing a game or shopping. He sees augmented reality as a more comfortable long-term solution. AR, unlike VR, augments the real world instead of creating a simulation, which sidesteps the problem of perceptual inconsistency. With AR, you’re interacting with a table that’s really there.

Figuring out how to translate our physical forms to virtual avatars is one hurdle, but even if that’s solved, the metaverse will face another issue: moving data between users thousands of miles apart with very low latency.

“To be truly immersive, the round trip between user action and simulation reaction must be imperceptible to the user,” Jerry Heinz, a member of the Ball Metaverse Index’s expert council, said in an email. “In some cases, ‘imperceptible’ is less than 15 milliseconds.”

Heinz, formerly the head of Nvidia’s enterprise cloud services, has first-hand experience with this problem. Nvidia’s GeForce Now service lets customers play games in real time on hardware located in a data center. This demands high bandwidth and low latency. According to Heinz, GeForce Now averages about 30 megabits per second down and 80 milliseconds round trip, with only a few dropped frames.
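A rough back-of-the-envelope calculation shows how far apart those two figures are. The sketch below assumes signals travel through optical fiber at about 200 kilometers per millisecond (roughly two-thirds the speed of light in a vacuum) and ignores routing, queuing, and rendering time unless a `processing_ms` allowance is passed in. The function names are illustrative, and the result is an upper bound, not a measurement.

```python
FIBER_KM_PER_MS = 200.0  # assumption: light in fiber covers ~200 km per millisecond


def max_one_way_km(round_trip_budget_ms: float, processing_ms: float = 0.0) -> float:
    """Farthest a server could be if the whole budget went to signal travel."""
    travel_ms = max(round_trip_budget_ms - processing_ms, 0.0)
    return travel_ms / 2 * FIBER_KM_PER_MS


def over_budget_factor(measured_ms: float, budget_ms: float) -> float:
    """How many times larger the measured round trip is than the budget."""
    return measured_ms / budget_ms


# Figures quoted in the article: a 15 ms threshold and an 80 ms average round trip.
print(max_one_way_km(15.0))                   # 1500.0 km, with zero processing time
print(max_one_way_km(15.0, processing_ms=5))  # 1000.0 km if 5 ms goes to compute
print(round(over_budget_factor(80.0, 15.0), 1))
```

Under these assumptions, a 15-millisecond round trip caps the distance to the server at about 1,500 kilometers even before any time is spent simulating or rendering, and the 80-millisecond average is more than five times that budget.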

Modern cloud services like GeForce Now handle user load through content delivery networks, which host content in data centers close to users. When you connect to a game via GeForce Now, data is not delivered from a central data center used by all players but instead from the closest data center available.
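The routing idea can be sketched in a few lines. This is a simplified illustration, not how GeForce Now is actually implemented: it assumes the client has already measured a round-trip time to each candidate site, and the site names and numbers are hypothetical.

```python
from typing import Optional


def pick_data_center(rtt_ms_by_site: dict[str, Optional[float]]) -> str:
    """Return the reachable site with the lowest measured round-trip time.

    A value of None marks a site that did not respond to the probe.
    """
    reachable = {site: rtt for site, rtt in rtt_ms_by_site.items() if rtt is not None}
    if not reachable:
        raise ValueError("no data center is reachable")
    return min(reachable, key=reachable.get)


# Hypothetical probe results for one player, in milliseconds.
probes = {"us-west": 18.0, "us-east": 72.0, "eu-central": None}
print(pick_data_center(probes))  # us-west
```

This works when each player only needs a fast path to one nearby server. It does not help when two players served by different sites need a fast path to each other.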

The metaverse throws a wrench in the works. Users may exist anywhere in the world and the path data travels between users may not be under the platform’s control. To solve this, metaverse platforms need more than scale. They need network infrastructure that spans many clusters of servers working together across multiple data centers.

“The interconnects between clusters and servers would need to change versus the loose affinity they have today,” said Heinz. “To further reduce latency, service providers may well need to offer rendering and compute at their edge, while backhauling state data to central servers.”

The problems of perceptual inconsistency and network infrastructure may be solvable but, even so, they’ll require many years of work and huge sums of money. Meta’s Reality Labs lost over US $20 billion in the past three years. That, it seems, is just the tip of the iceberg.

Matthew S. Smith is a freelance consumer technology journalist with 17 years of experience and the former Lead Reviews Editor at Digital Trends. An IEEE Spectrum Contributing Editor, he covers consumer tech with a focus on display innovations, artificial intelligence, and augmented reality. A vintage computing enthusiast, Matthew covers retro computers and computer games on his YouTube channel, Computer Gaming Yesterday.