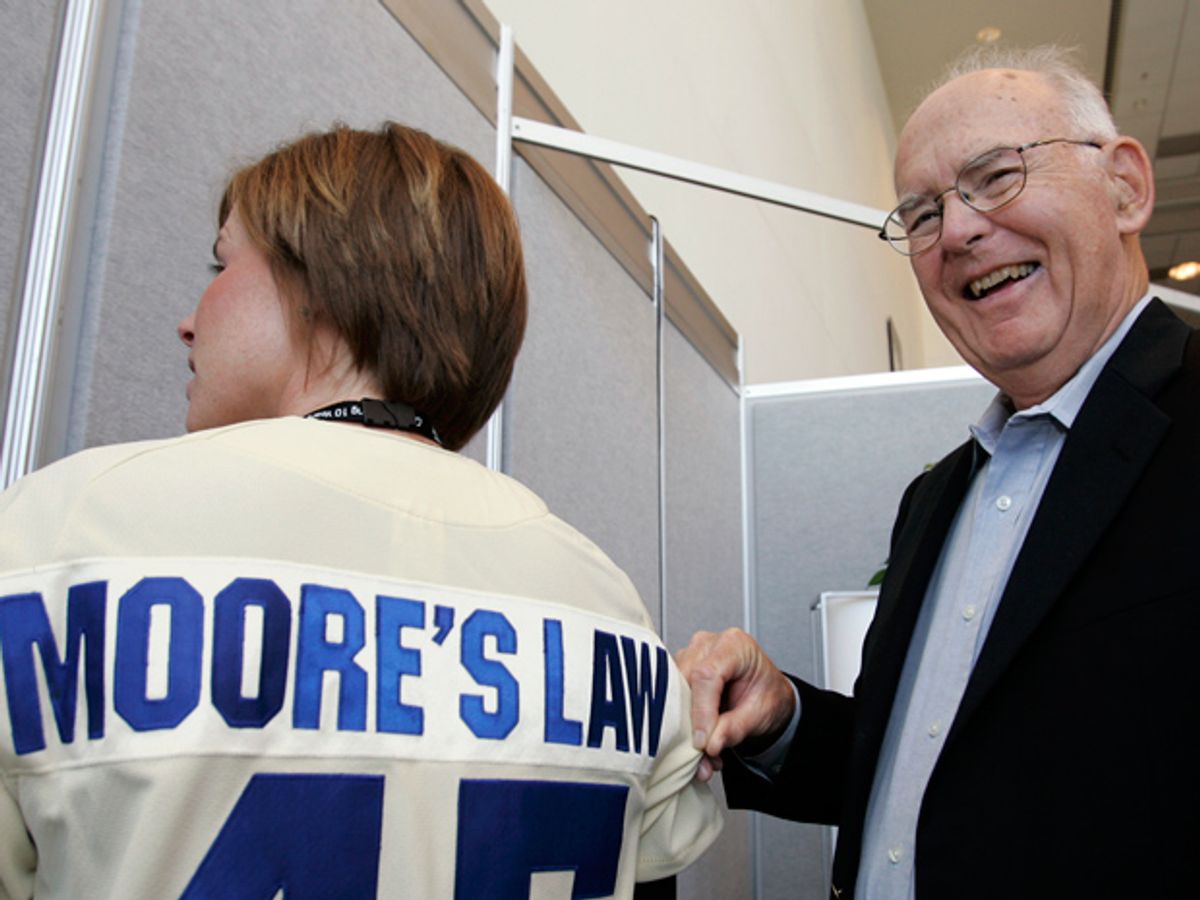

In the 50 years since Gordon Moore published his prediction about the future of the integrated circuit, the term “Moore’s Law” has become a household name. It’s constantly paraphrased, not always correctly. Sometimes it’s used to describe modern technological progress as a whole.

As IEEE Spectrum put together its special report celebrating the semicentennial, I started a list of key facts that are often overlooked when Moore’s Law is discussed. Here they are (sans animated GIFs):

1. Moore’s forecast changed over time. Gordon Moore originally predicted the complexity of integrated circuits—and so the number of components on them—would double every year. In 1975, he revised his prediction to a doubling every two years.

2. It’s not just about smaller, faster transistors. At its core, Moore’s prediction was about the economics of chipmaking, building ever-more sophisticated chips while driving down the manufacturing cost per transistor. Miniaturization has played a big role in this, but smaller doesn’t necessarily mean less expensive—an issue we’re beginning to run into now.

3. At first, it wasn’t just about transistors. Moore’s 1965 paper discussed components, a category that includes not just transistors, but other electronic components, such as resistors, capacitors, and diodes. As lithographer Chris Mack notes, some early circuits had more resistors than transistors.

4. The origin of the term “Moore’s Law” is a bit murky. Carver Mead is widely credited with coining it, but it’s unclear where the phrase came from and when it was first used.

5. Moore’s Law made Moore’s Law. Silicon is a pretty unique material, but maintaining Moore’s Law for decades was hard work, and it’s getting harder. As historian Cyrus Mody argues, the idea of Moore’s Law kept Moore’s Law going: it has long been a coordinating concept and common goal for the widely distributed efforts of the semiconductor industry.
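
To get a feel for how much the revision in item 1 matters, here is a rough back-of-the-envelope sketch in Python. It compares a doubling every year with a doubling every two years; the ten-year horizon and the function name `growth_factor` are illustrative assumptions, not figures or terms from Moore’s papers.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times larger a quantity is after `years`
    if it doubles once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Illustrative horizon only (an assumption made for this example).
decade = 10

yearly = growth_factor(decade, 1)    # original forecast: doubling every year
biennial = growth_factor(decade, 2)  # 1975 revision: doubling every two years

print(f"Doubling every year for {decade} years: {yearly:.0f}x")
print(f"Doubling every two years for {decade} years: {biennial:.0f}x")
```

Under these assumptions, a decade of yearly doubling gives a 1,024-fold increase, while doubling every two years gives a 32-fold increase over the same span.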

Rachel Courtland, an unabashed astronomy aficionado, is a former senior associate editor at Spectrum. She now works in the editorial department at Nature. At Spectrum, she wrote about a variety of engineering efforts, including the quest for energy-producing fusion at the National Ignition Facility and the hunt for dark matter using an ultraquiet radio receiver. In 2014, she received a Neal Award for her feature on shrinking transistors and how the semiconductor industry talks about the challenge.