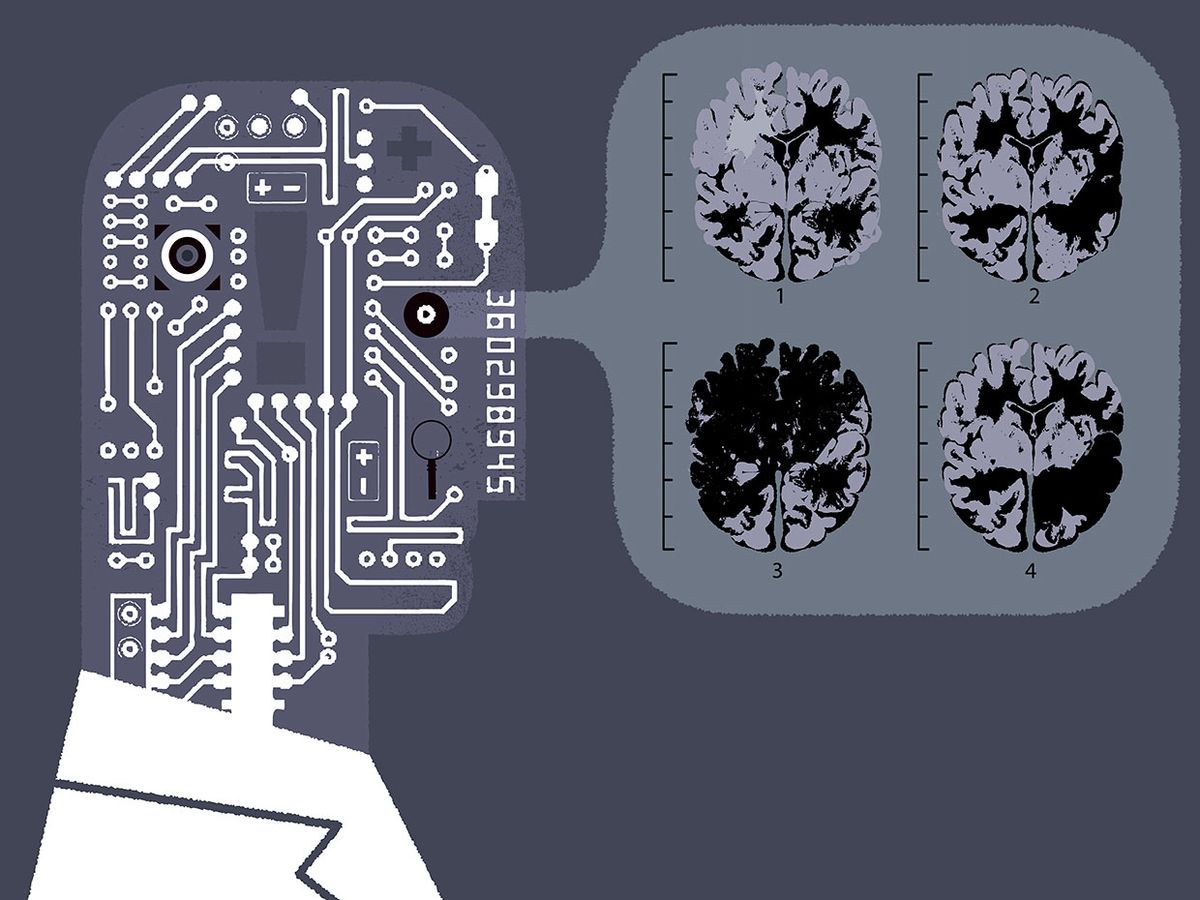

Last June, a team at Harvard Medical School and MIT showed that it’s pretty darn easy to fool an artificial intelligence system analyzing medical images. Researchers modified a few pixels in eye images, skin photos and chest X-rays to trick deep learning systems into confidently classifying perfectly benign images as malignant.

These so-called “adversarial attacks” introduce small, carefully designed changes to data (in this case, pixel changes imperceptible to human vision) to nudge an algorithm into making a mistake.
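To show the mechanics, here is a minimal sketch of one widely used way to craft such a perturbation, the fast gradient sign method (FGSM). It is not the code from the Harvard and MIT study. The classifier is a hypothetical toy logistic model standing in for a deep network, and the pixel values, weights and the `epsilon` budget are invented for illustration. Each pixel moves by at most 3 percent of its range, but because every change pushes in the direction the model is most sensitive to, the small nudges add up across 1,000 pixels and flip the verdict.

```python
import math

# Hypothetical toy "image": 1,000 pixel intensities in [0, 1].
# A logistic model stands in for a deep network (illustrative assumption).
N_PIXELS = 1000
WEIGHTS = [0.05 if i % 2 == 0 else -0.05 for i in range(N_PIXELS)]
BIAS = -1.0

def predict_malignant(x):
    """Return the toy model's probability that image x is malignant."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return +1, -1 or 0 according to the sign of v."""
    return (v > 0) - (v < 0)

def fgsm_targeted(x, target, epsilon):
    """Targeted fast gradient sign method.

    Moves every pixel by at most epsilon in the direction that lowers the
    cross-entropy loss for `target`, then clips back into [0, 1].
    """
    p = predict_malignant(x)
    # For logistic regression, d(loss)/d(x_i) = (p - target) * w_i.
    grad = [(p - target) * w for w in WEIGHTS]
    # Step against the gradient to push the prediction toward `target`.
    return [min(1.0, max(0.0, xi - epsilon * sign(g))) for xi, g in zip(x, grad)]

benign = [0.5] * N_PIXELS
adversarial = fgsm_targeted(benign, target=1, epsilon=0.03)

print(f"before: {predict_malignant(benign):.2f}")       # 0.27 -> read as benign
print(f"after:  {predict_malignant(adversarial):.2f}")  # 0.62 -> read as malignant
print(f"largest pixel change: {max(abs(a - b) for a, b in zip(adversarial, benign)):.2f}")  # 0.03
```

Against a real deep network, the gradient would come from backpropagation rather than the closed-form expression used here, but the principle of many tiny, aligned changes is the same.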

That’s not great news at a time when medical AI systems are just reaching the clinic, with the first AI-based medical device approved in April and AI systems besting doctors at diagnosis across healthcare sectors.

Now, in collaboration with a Harvard lawyer and ethicist, the same team is out with an article in the journal Science to offer suggestions about when and how the medical industry might intervene against adversarial attacks.

And their take-home message is—wait, but be ready to defend.

Adversarial attacks against medical AI systems are very likely for two reasons. First, there are “enormous incentives” for doctors and insurers to carry out such attacks, as IEEE reported last June. Second, it’s easy to do, as demonstrated by MIT undergraduates at LabSix. In fact, even just tilting the angle of a camera when taking a picture of a mole can alter an algorithm’s diagnosis from benign beauty mark to malignant skin cancer.

“Those types of things very well could be coming, but are still hypothetical,” emphasizes Samuel Finlayson, a graduate student at Harvard and MIT who co-authored the paper with Harvard biomedical informaticians Andrew Beam and Isaac Kohane.

So what’s to be done when the attacks do begin?

Jonathan Zittrain, cofounder of Harvard Law School’s Berkman Klein Center for Internet & Society and author of The Future of the Internet and How to Stop It, had similar questions when he read the team’s paper.

“I was reminded of the time in the early 2000s when cybersecurity vulnerabilities were readily apparent but not yet often exploited,” Zittrain tells IEEE Spectrum. He reached out to Beam, Kohane and Finlayson to discuss how the field might move forward when dealing with these sorts of attacks.

One option is the procrastination principle, a concept that suggests “we shouldn't rush to anticipate every terrible thing that can happen with a new technology, but rather release and iterate as we learn,” says Zittrain. Trying to anticipate and prevent all possible adversarial attacks on a medical AI system could cripple rollout, delaying the good a system might do, such as diagnosing patients in rural areas who lack access to disease specialists.

Instead of preemptively building expansive defenses, the field could initially set out best practices, such as testing for vulnerabilities before systems go live and hashing images at the moment of capture to detect any later tampering, and then defend against attacks as they arise.
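Hashing at capture can be sketched in a few lines. The example below is a minimal illustration, assuming SHA-256 from Python's standard `hashlib` module; the function names and the stand-in image bytes are hypothetical. A single flipped bit, far smaller than any change a radiologist could see, produces a different digest.

```python
import hashlib
import hmac

def fingerprint(image_bytes: bytes) -> str:
    """SHA-256 digest of the raw image bytes, computed at the moment of capture."""
    return hashlib.sha256(image_bytes).hexdigest()

def is_untampered(image_bytes: bytes, recorded_digest: str) -> bool:
    """Recompute the digest and compare it in constant time with the recorded one."""
    return hmac.compare_digest(fingerprint(image_bytes), recorded_digest)

# Hypothetical capture-time record (stand-in bytes, not a real image format).
captured = bytes(range(256)) * 4
recorded = fingerprint(captured)

# Later, before the image reaches the model, someone flips one bit.
tampered = bytearray(captured)
tampered[100] ^= 0x01

print(is_untampered(captured, recorded))         # True
print(is_untampered(bytes(tampered), recorded))  # False
```

A hash only proves integrity if the recorded digest is itself protected, for example by storing it separately from the image or signing it, since an attacker who can alter the image might otherwise alter an unprotected digest too.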

Zittrain offers additional steps that can be taken: “Consistent auditing of results can be done, comparing the system's view to that of the people who originally trained it, to see if it's coming to strange conclusions,” he says. “As with software vulnerabilities, serious makers of ML-inclusive systems could put out bounties for those who can demonstrate exploits.”
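One simple form of the auditing Zittrain describes is to have clinicians re-read a sample of cases and track how often the model disagrees with them. The sketch below is hypothetical: the labels, the `audit` function and the 10 percent disagreement threshold are illustrative assumptions, not a procedure proposed in the paper.

```python
from dataclasses import dataclass

@dataclass
class AuditResult:
    n_cases: int
    disagreement_rate: float
    flagged: bool

def audit(model_labels, clinician_labels, threshold=0.10):
    """Compare model output with clinician reads on the same cases.

    Flags the batch if the model disagrees with clinicians more often than
    `threshold` (a hypothetical tolerance chosen for illustration).
    """
    if len(model_labels) != len(clinician_labels) or not model_labels:
        raise ValueError("need two equal-length, non-empty label lists")
    disagreements = sum(m != c for m, c in zip(model_labels, clinician_labels))
    rate = disagreements / len(model_labels)
    return AuditResult(len(model_labels), rate, rate > threshold)

# Hypothetical sample: clinicians see 2 malignant cases, the model calls 5.
clinicians = ["benign"] * 18 + ["malignant"] * 2
model = ["benign"] * 15 + ["malignant"] * 5

# 3 of 20 cases disagree, a rate of 0.15, so the batch is flagged for review.
print(audit(model, clinicians))
```

A sudden jump in the disagreement rate would not prove an attack, but it is the kind of “strange conclusion” that warrants a closer look.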

Overall, the authors believe it is plausible that healthcare could become a “ground zero” for real-world adversarial attacks (which are much studied at computer science conferences but not commonly detected in commercial products). Still, they argue that it is important to balance the potential of AI systems against their vulnerabilities, an approach that “builds the groundwork for resilience without crippling rollout.”

“We’re very positive about machine learning and artificial intelligence,” Finlayson told IEEE Spectrum. “We think it’s going to do a lot of good in the world, and we don’t want to stall that process.”

Megan is an award-winning freelance journalist based in Boston, Massachusetts, specializing in the life sciences and biotechnology. She was previously a health columnist for the Boston Globe and has contributed to Newsweek, Scientific American, and Nature, among others. She is the co-author of a college biology textbook, “Biology Now,” published by W.W. Norton. Megan received an M.S. from the Graduate Program in Science Writing at the Massachusetts Institute of Technology and a B.A. from Boston College, and worked as an educator at the Museum of Science, Boston.