The Internet is hurtling into a hurricane of AI-generated nonsense, and no one knows how to stop it.

That’s the sobering possibility presented in a pair of papers that examine AI models trained on AI-generated data. This possibly avoidable fate isn’t news for AI researchers. But these two new findings foreground some concrete results that detail the consequences of a feedback loop that trains a model on its own output. While the research couldn’t replicate the scale of the largest AI models, such as ChatGPT, the results still aren’t pretty. And they may be reasonably extrapolated to larger models.

“With the concept of data generation—and reusing data generation to retrain, or tune, or perfect machine-learning models—now you are entering a very dangerous game,” says Jennifer Prendki, CEO and founder of DataPrepOps company Alectio.

AI hurtles toward collapse

The two papers, both of which are preprints, approach the problem from slightly different angles. “The Curse of Recursion: Training on Generated Data Makes Models Forget” examines the potential effect on large language models (LLMs), such as those behind ChatGPT and Google Bard, as well as Gaussian mixture models (GMMs) and variational autoencoders (VAEs). The second paper, “Towards Understanding the Interplay of Generative Artificial Intelligence and the Internet,” examines the effect on diffusion models, such as those used by image generators like Stable Diffusion and DALL-E.

While the models discussed differ, the papers reach similar results. Both found that training a model on data generated by the model can lead to a failure known as model collapse.

“This is because when the first model fits the data, it has its own errors. And then the second model, that trains on the data produced by the first model that has errors inside, basically learns the set errors and adds its own errors on top of it,” says Ilia Shumailov, a University of Cambridge computer science Ph.D. candidate and coauthor of the “Recursion” paper. “Over time, those errors stack up. Then at one point in time, your data is basically dominated by the errors rather than the original data.”
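The compounding Shumailov describes can be seen in a toy setting far simpler than an LLM. The sketch below is an illustration only, not the experiment from either paper: it assumes the "model" is a single Gaussian, fits it to a small sample, then trains each later generation solely on data drawn from the previous fit. The function name and its parameters are hypothetical.

```python
import random
import statistics


def recursive_fit(n_samples=20, generations=500, seed=0):
    """Fit a Gaussian to samples drawn from the previous generation's fit.

    Returns a list of (mean, std) pairs, one per generation, starting with
    the "real" distribution at index 0.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # assumed ground truth: a standard normal
    history = [(mu, sigma)]
    for _ in range(generations):
        # The current model generates the next training set.
        data = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        # The next model sees only that generated data.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)  # maximum-likelihood estimate
        history.append((mu, sigma))
    return history


if __name__ == "__main__":
    history = recursive_fit()
    for generation in (0, 10, 100, 500):
        mean, std = history[generation]
        print(f"generation {generation}: mean={mean:.4f} std={std:.6f}")
```

Each fit carries a small sampling error, and because no fresh real data ever enters the loop, nothing corrects it. In this setup the estimated spread tends to drift toward zero, so later generations describe an ever narrower slice of the original distribution.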

And the errors stack quickly. Shumailov and his coauthors used OPT-125M, an open-source LLM introduced by researchers at Meta in 2022, and fine-tuned the model with the wikitext2 dataset. While early generations produced decent results, responses became nonsensical within ten generations. A response from the ninth generation repeated the phrase “tailed jackrabbits” and alternated through various colors—none of which relates to the initial prompt about the architecture of the Somerset towers, in England.

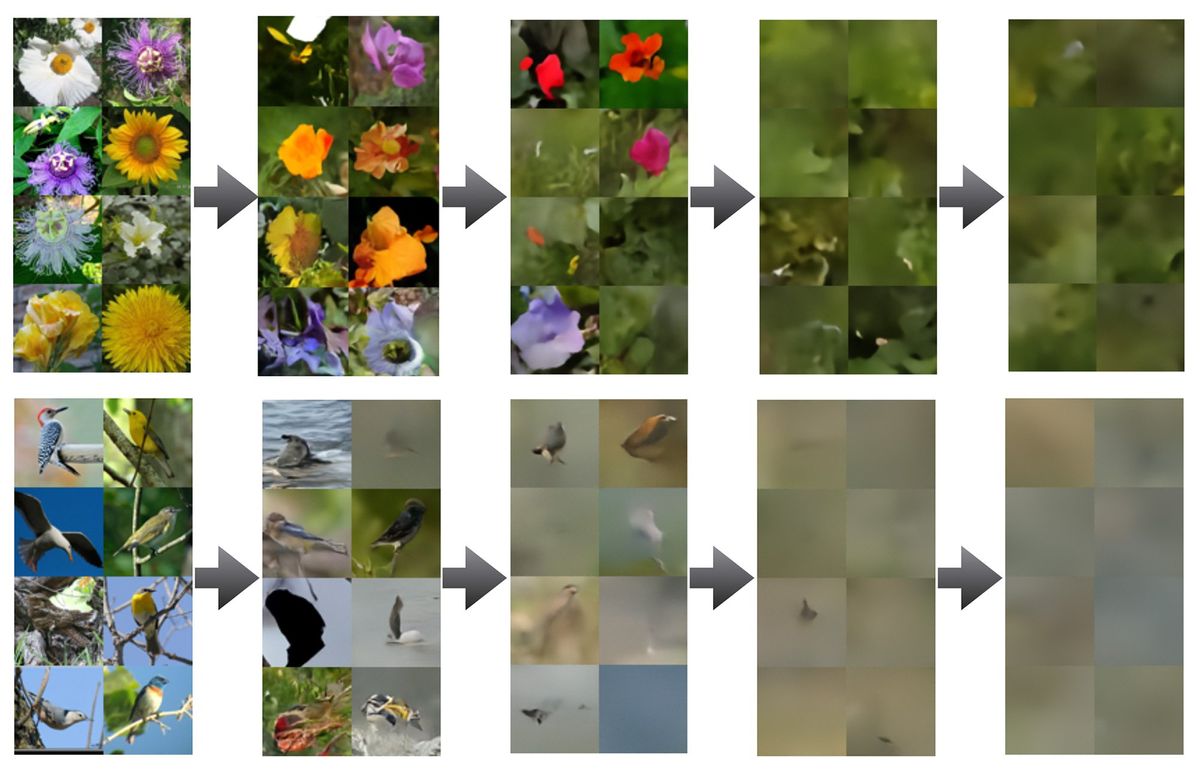

Diffusion models are just as susceptible. Rik Sarkar, coauthor of “Towards Understanding” and deputy director of the Laboratory for Foundations of Computer Science at the University of Edinburgh, says, “It seems that as soon as you have a reasonable volume of artificial data, it does degenerate.” The paper found that a simple diffusion model trained on a specific category of images, such as photos of birds and flowers, produced unusable results within two generations.

Sarkar cautions that the results are a worst-case scenario: the dataset was limited, and the results of each generation were fed directly back into the model. Still, the paper’s results show that model collapse can occur if a model’s training dataset includes too much AI-generated data.

AI training data represents a new frontier for cybersecurity

This isn’t a shock to those who closely study the interaction between AI models and the data used to train them. Prendki is an expert in the field of machine learning operations (MLOps) but also holds a doctorate in particle physics and views the problem through a more fundamental lens.

“It’s basically the concept of entropy, right? Data has entropy. The more entropy, the more information, right?” says Prendki. “But having twice as large a dataset absolutely does not guarantee twice as large an entropy. It’s like you put some sugar in a teacup, and then you add more water. You’re not increasing the amount of sugar.”
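One way to make the teacup analogy concrete is to measure the Shannon entropy of a dataset's empirical distribution. The sketch below is a simplified reading of Prendki's point, with made-up example data: duplicating a dataset doubles its size but leaves the entropy unchanged, while adding genuinely new samples raises it.

```python
import math
from collections import Counter


def shannon_entropy(items):
    """Shannon entropy, in bits, of the empirical distribution of items."""
    counts = Counter(items)
    total = len(items)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


original = ["cat", "dog", "bird", "cat"]

# Twice the data, but nothing new: the proportions are identical.
doubled = original * 2

# Twice the data, with samples the original never contained.
extended = original + ["fish", "frog", "newt", "mole"]

h_original = shannon_entropy(original)  # 1.5 bits
h_doubled = shannon_entropy(doubled)    # 1.5 bits
h_extended = shannon_entropy(extended)  # 2.75 bits
```

A model's own output behaves more like the `doubled` case than the `extended` one: it adds volume without adding information that was not already in the training data.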

Model collapse, when viewed from this perspective, seems an obvious problem with an obvious solution. Simply turn off the faucet and heap in another spoonful of sugar. That, however, is easier said than done. Pedro Reviriego, a coauthor of “Towards Understanding” and assistant professor of telecommunication at the Polytechnic University of Madrid, says that while methods for weeding out AI-generated data exist, the daily release of new AI models rapidly makes them obsolete. “It’s like [cyber]security,” says Reviriego. “You have to keep up running after something that is moving fast.”

Prendki concurs with Reviriego and takes the argument a step further. She says organizations and researchers training an AI model should view training data as a potential adversary that must be vetted to avoid degrading the model. “This is the next generation of cybersecurity problem that very few people are talking about,” says Prendki.

One solution might, in principle, solve the issue: watermarking. Images generated by OpenAI’s DALL-E include a specific pattern of colors by default as a watermark (though users have the option to remove it). Text produced by LLMs can carry watermarks as well, in the form of algorithmically detectable patterns that aren’t obvious to humans. A watermark provides an easy way to detect and exclude AI-generated data.
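How might a text watermark be "algorithmically detectable"? The article's sources do not describe a specific scheme, so the sketch below borrows one idea from the research literature in toy form: a keyed hash marks roughly half of all possible next words as "green," a watermarking generator prefers green words, and a detector checks whether a text contains more green words than chance would explain. Every name here, including `SECRET_KEY`, is hypothetical, and real schemes operate on model tokens and probabilities rather than whole words.

```python
import hashlib
import math
import random

SECRET_KEY = "demo-key"  # hypothetical secret shared by generator and detector


def is_green(prev_token, token, key=SECRET_KEY):
    """Keyed hash splits possible next tokens into green and red halves."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] % 2 == 0


def watermark_z_score(tokens, key=SECRET_KEY):
    """Standard deviations by which the green count exceeds chance (50%)."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    green = sum(is_green(prev, tok, key) for prev, tok in pairs)
    n = len(pairs)
    return (green - 0.5 * n) / math.sqrt(0.25 * n)


def generate(vocab, length, watermark, rng=None):
    """Stand-in for a text generator: picks words at random from vocab.

    With watermark=True it picks a green word whenever one is available.
    """
    rng = rng or random.Random(0)
    tokens = [rng.choice(vocab)]
    while len(tokens) < length:
        candidates = rng.sample(vocab, k=len(vocab))  # shuffled copy
        choice = candidates[0]
        if watermark:
            choice = next(
                (c for c in candidates if is_green(tokens[-1], c)), choice
            )
        tokens.append(choice)
    return tokens


def looks_watermarked(tokens, threshold=4.0):
    """Flag text whose green-word count is far above chance."""
    return watermark_z_score(tokens) > threshold
```

A data curator holding the key could run `looks_watermarked` over scraped text and drop whatever is flagged. The threshold of 4.0 is an arbitrary choice for this sketch, and the approach only works if generators actually apply the watermark.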

Effective watermarking requires some agreement on how it’s implemented, however, and a means of enforcement to prevent bad actors from distributing AI-generated data without a watermark. China has introduced a draft measure that would enforce a watermark on AI content (among other regulations), but it’s an unlikely template for Western democracies.

A few glimmers of hope remain. The models presented in both papers are small compared to the largest models used today, such as Stable Diffusion and GPT-4, and it’s possible that large models will prove more robust. It’s also possible that new methods of curating data will improve the quality of future datasets. Absent such solutions, however, Shumailov says the field could see a “first mover advantage,” as early models will have had better access to datasets untainted by AI-generated data.

“Once we have an ability to generate synthetic data with some error inside of it, and we have large-scale usage of such models, inevitably the data produced by these models is going to end up being used online,” says Shumailov. “If I want to build a company that provides a large language model as a service to somebody [today]. If I then go and scrape a year worth of data online and try and build a model, then my model is going to have model collapse inside of it.”

Matthew S. Smith is a freelance consumer technology journalist with 17 years of experience and the former Lead Reviews Editor at Digital Trends. An IEEE Spectrum Contributing Editor, he covers consumer tech with a focus on display innovations, artificial intelligence, and augmented reality. A vintage computing enthusiast, Matthew covers retro computers and computer games on his YouTube channel, Computer Gaming Yesterday.