A View to the Cloud

What really happens when your data is stored on far-off servers in distant data centers

We live in a world that’s awash in information. Way back in 2011, an IBM study estimated that nearly 3 quintillion—that’s a 3 with 18 zeros after it—bytes of data were being generated every single day. We’re well past that mark now, given the doubling in the number of Internet users since 2011, the powerful rise of social media and machine learning, and the explosive growth in mobile computing, streaming services, and Internet of Things devices. Indeed, according to the latest Cisco Global Cloud Index, some 220,000 quintillion bytes—or if you prefer, 220 zettabytes—were generated “by all people, machines, and things” in 2016, on track to reach nearly 850 ZB in 2021.

Much of that data is considered ephemeral, and so it isn’t stored. But even a tiny fraction of a huge number can still be impressively large. When it comes to data, Cisco estimates that 1.8 ZB was stored in 2016, a volume that will quadruple to 7.2 ZB in 2021.

Our brains can’t really comprehend something as large as a zettabyte, but maybe this mental image will help: If each megabyte occupied the space of the period at the end of this sentence, then 1.8 ZB would cover about 460 square kilometers, or an area about eight times the size of Manhattan.
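As a rough check on these figures, the unit conversions can be worked through in a few lines of Python. This is only a sketch. It assumes decimal (SI) prefixes and a land area for Manhattan of about 59 square kilometers, neither of which is stated in the article. The size of the period is simply what the article's own numbers imply.

```python
# Unit sanity check. Assumes SI decimal prefixes (1 ZB = 10**21 bytes).
QUINTILLION = 10**18
MEGABYTE = 10**6
ZETTABYTE = 10**21

# 220,000 quintillion bytes expressed in zettabytes.
generated_2016_zb = 220_000 * QUINTILLION / ZETTABYTE

# Stored data: 1.8 ZB in 2016, quadrupling by 2021.
stored_2016_zb = 1.8
stored_2021_zb = stored_2016_zb * 4

# One period per megabyte: how many periods are in 1.8 ZB?
periods = stored_2016_zb * ZETTABYTE / MEGABYTE

# Area each period must occupy for the total to come to 460 km^2.
AREA_KM2 = 460
MM2_PER_KM2 = 10**12
period_area_mm2 = AREA_KM2 * MM2_PER_KM2 / periods

# Assumed land area of Manhattan, about 59 km^2 (not from the article).
MANHATTAN_KM2 = 59
manhattans = AREA_KM2 / MANHATTAN_KM2

print(f"{generated_2016_zb:.0f} ZB generated in 2016")
print(f"{stored_2021_zb:.1f} ZB stored in 2021")
print(f"{period_area_mm2:.2f} mm^2 per period")
print(f"{manhattans:.1f} Manhattans")
```

Under these assumptions each period works out to roughly a quarter of a square millimeter, a dot about half a millimeter on a side, which is plausible for printed text.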

Of course, an actual zettabyte of data doesn’t occupy any space at all—data is an abstract concept. Storing data, on the other hand, does take space, as well as materials, energy, and sophisticated hardware and software. We need a reliable way to store those many 0s and 1s of data so that we can retrieve them later on, whether that’s an hour from now or five years. And if the information is in some way valuable—whether it’s a digitized family history of interest mainly to a small circle of people, or a film library of great cultural significance—the data may need to be archived more or less indefinitely.

Projected global data-center storage capacity, 2016 to 2021

The grand challenge of data storage was hard enough when the rate of accumulation was much lower and nearly all of the data was stored on our own devices. These days, however, we’re sending off more data to “the cloud”—that (forgive the pun) nebulous term for the remote data centers operated by the likes of Amazon Web Services, Google Cloud, IBM Cloud, and Microsoft Azure. Businesses and government agencies are increasingly transferring more of their workloads—not just peripheral functions but also mission-critical work—to the cloud. Consumers, who make up a growing segment of cloud users, are turning to the cloud because it allows them to access content and services on any device wherever they go.

And yet, despite our growing reliance on the cloud, how many of us have a clear picture of how the cloud operates or, perhaps more important, how our data is stored? Even if it isn’t your job to understand such things, the fact remains that your life, in more ways than you probably know, relies on the very basic process of storing 0s and 1s. The infographics below offer a step-by-step guide to storing data, both locally and remotely, as well as a more detailed look into the mechanics of cloud storage.

I. The Basics of Data Storage

Storing Data Locally on a Solid-State Drive

Step 1: Clicking on the “Save” icon in a program invokes firmware that locates where the data is to be stored on the drive.

Step 2: Inside the drive, data is physically located in different blocks. A quirk of the flash memory used in solid-state drives is that when data is being written, individual bits can only be changed from 1 to 0, never from 0 to 1. So when data is written to a block, all of the bits are first set to 1, erasing any previous data. Then the 0s are written, creating the correct pattern of 1s and 0s.

Step 3: Another quirk of flash memory is that it’s prone to corrupting stored bits, and this corruption tends to affect clusters of bits that are located close together. Error-correcting codes can compensate for only a certain number of corrupted bits per byte, so each bit in a byte of data is stored in a different block, to minimize the likelihood of multiple bits in a given byte being corrupted.

Step 4: Because erasing a block is slow, each time a portion of the data on a block is updated, the updated data is written to an empty part of the block, if possible, and the original data is marked as invalid. Eventually, though, the block must be erased to allow new data to be written to it.

Step 5: Reading back data always introduces errors, but error-correcting codes can locate the errors in a block and correct them, provided that the block has only one or two errors. If the block has more errors than that, the software can’t correct them, and the block is deemed unreadable. Errors tend to occur in bursts and may be caused by stray electromagnetic fields, such as those from a phone ringing or a motor turning on. Errors also arise from imperfections in the storage medium.
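Steps 3 and 5 work together, and a toy example may make the connection clearer. The sketch below uses a Hamming(7,4) code as a stand-in. The article does not name the code that drives use, and real drives typically rely on stronger codes such as BCH or LDPC. Hamming(7,4) protects 4 data bits with 3 parity bits and can fix only a single flipped bit per codeword. The seven "blocks" are plain Python lists, and all function names are illustrative.

```python
def encode(nibble):
    """Hamming(7,4): wrap 4 data bits in a 7-bit codeword."""
    d1, d2, d3, d4 = nibble
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p3 = d2 ^ d3 ^ d4
    # Codeword positions 1..7: p1 p2 d1 p3 d2 d3 d4
    return [p1, p2, d1, p3, d2, d3, d4]

def decode(codeword):
    """Correct at most one flipped bit, then return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3  # 1-based position of the bad bit, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]

def interleave(codewords):
    """Block i holds bit i of every codeword, so each codeword spans 7 blocks."""
    return [list(bits) for bits in zip(*codewords)]

def deinterleave(blocks):
    """Reassemble the codewords from the per-bit blocks."""
    return [list(bits) for bits in zip(*blocks)]

data = [[1, 0, 1, 1], [0, 1, 1, 0], [1, 1, 0, 0], [0, 0, 0, 1]]
codewords = [encode(nibble) for nibble in data]

# Case A: interleaved storage. A burst flips three neighboring bits in block 2,
# but each of those bits belongs to a different codeword.
blocks = interleave(codewords)
for i in range(3):
    blocks[2][i] ^= 1
recovered_interleaved = [decode(cw) for cw in deinterleave(blocks)]

# Case B: no interleaving. The same-sized burst hits three adjacent bits
# of a single codeword, which is more than the code can repair.
damaged = [list(cw) for cw in codewords]
for i in range(3):
    damaged[0][i] ^= 1
recovered_plain = [decode(cw) for cw in damaged]

print(recovered_interleaved == data)  # True
print(recovered_plain == data)        # False
```

The same three-bit burst is harmless when the bits of each codeword are spread across blocks and fatal when they sit side by side.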

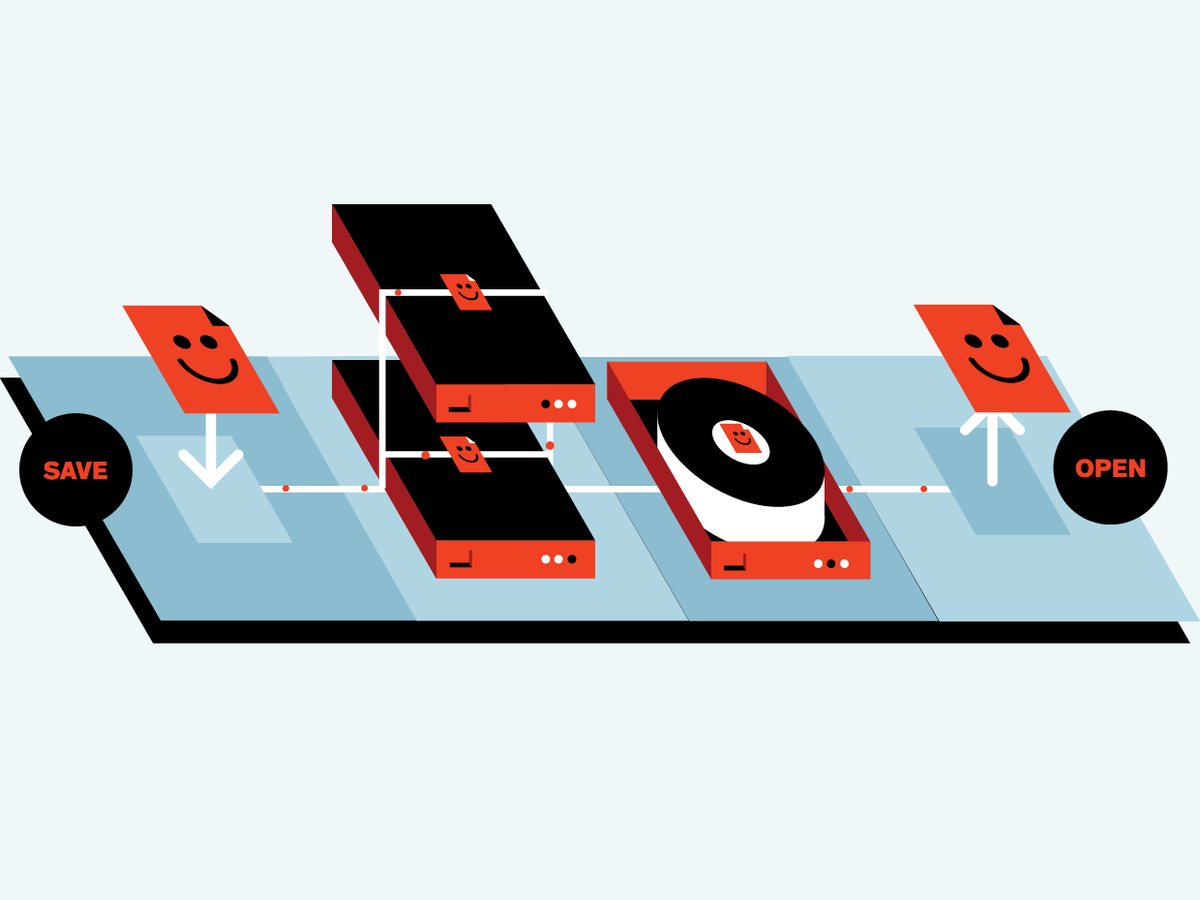
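The write behavior described in Steps 2 and 4 of the solid-state procedure can be modeled with a small toy class. This is a simplified sketch, not how any particular drive's firmware works. The class name `FlashBlock`, the page sizes, and the bookkeeping lists are all hypothetical.

```python
class FlashBlock:
    """Toy model of one flash block; sizes and names are illustrative only."""

    def __init__(self, pages=4, bits_per_page=8):
        self.bits_per_page = bits_per_page
        self.erase_count = 0
        self.pages = [[1] * bits_per_page for _ in range(pages)]
        self.written = [False] * pages  # page programmed since the last erase
        self.valid = [False] * pages    # page holds current, not superseded, data

    def erase(self):
        # Erasing is all-or-nothing: every bit in the block goes back to 1.
        self.pages = [[1] * self.bits_per_page for _ in self.pages]
        self.written = [False] * len(self.pages)
        self.valid = [False] * len(self.pages)
        self.erase_count += 1

    def program(self, bits):
        """Write bits to the next empty page; return its index, or None if full."""
        for i, used in enumerate(self.written):
            if not used:
                # Programming can only pull bits from 1 down to 0.
                self.pages[i] = [old & new for old, new in zip(self.pages[i], bits)]
                self.written[i] = True
                self.valid[i] = True
                return i
        return None

    def update(self, old_index, bits):
        """Out-of-place update: write the new copy, then invalidate the old one."""
        new_index = self.program(bits)
        if new_index is not None:
            self.valid[old_index] = False
        return new_index

block = FlashBlock(pages=3)
first = block.program([1, 0, 1, 1, 0, 0, 1, 0])
second = block.update(first, [1, 1, 1, 1, 0, 0, 0, 0])
third = block.update(second, [0, 0, 0, 0, 1, 1, 1, 1])
full = block.update(third, [0] * 8)   # no empty page left
valid_before_erase = list(block.valid)
print(first, second, third, full)     # 0 1 2 None
print(valid_before_erase)             # [False, False, True]

block.erase()                         # the slow step the drive tries to postpone
print(block.erase_count)              # 1
```

In this model a full block simply refuses further updates. A real drive controller would first copy the still-valid data elsewhere and then erase the block.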

Storing Data Remotely in the Cloud

Step 1: Data is saved locally, in the form of blocks.

Step 2: Data is transmitted over the Internet to a data center [see below, "A Deeper Dive Into the Cloud"].

Step 3: For redundancy, data is stored on at least two hard-disk drives or two solid-state drives (which may not be in the same data center), following the same basic method described above for local storage.

Step 4: Later that day, if the data center follows best practices, the data is backed up onto magnetic tape. However, not all data centers use tape backup.

Step 5: Reading back data always introduces errors, but error-correcting codes can locate the errors in a block and correct them, provided that the block has only one or two errors. If the block has more errors than that, the software can’t correct them, and the block is deemed unreadable. Errors tend to occur in bursts and may be caused by stray electromagnetic fields, such as those from a phone ringing or a motor turning on. Errors also arise from imperfections in the storage medium.
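The redundancy in Step 3 pays off when one copy turns out to be unreadable. The sketch below keeps two copies of each object and falls back to the second if the first fails a checksum. It rests on several assumptions: dictionaries stand in for physical drives, a CRC-32 checksum stands in for the drive's own error detection, and the `ReplicatedStore` class is hypothetical. Tape backup (Step 4) is not modeled.

```python
import zlib

class ReplicatedStore:
    """Toy two-copy store; each dictionary stands in for one physical drive."""

    def __init__(self, replicas=2):
        self.drives = [{} for _ in range(replicas)]

    def put(self, key, data):
        # Store the data alongside a checksum on every drive.
        record = (zlib.crc32(data), data)
        for drive in self.drives:
            drive[key] = record

    def get(self, key):
        # Return the first copy whose checksum still matches.
        for drive in self.drives:
            record = drive.get(key)
            if record is None:
                continue
            checksum, data = record
            if zlib.crc32(data) == checksum:
                return data
        raise IOError(f"no readable copy of {key!r}")

store = ReplicatedStore()
store.put("photo", b"family history")

# Simulate corruption of the copy on the first drive.
checksum, _ = store.drives[0]["photo"]
store.drives[0]["photo"] = (checksum, b"family hisXory")

result = store.get("photo")
print(result)  # b'family history'
```

If both copies were damaged, `get` would raise an error, which is the situation a separate backup is meant to cover.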

II. A Deeper Dive Into the Cloud

Though most data that gets stored is still retained locally, a growing number of people and devices are sending an ever-greater share of their data to remote data centers.

Data from multiple users moves over the Internet to a cloud data center, which is connected to the outside world via optical fiber or, in some cases, satellite gigabit-per-second links.

The cloud data center is basically a warehouse for data, with multiple racks of specialized storage computers called database servers.

There are three basic types of cloud data storage: hard-disk drives, solid-state drives, and magnetic tape cartridges, which have the following features:

| | Magnetic Tape | Hard-Disk Drive | Solid-State Drive |
|---|---|---|---|
| Access time, read/write | 10–60 seconds | 7 milliseconds | 50/1,000 nanoseconds |
| Capacity | 12 terabytes | 8 terabytes | 2 terabytes |
| Data persistence | 10–30 years | 3–6 years | 8–10 years |
| Read/write cycles | Indefinite | Indefinite | 1,000 |

Cloud security: Cloud data centers protect data using encryption and firewalls. Most centers offer multiple layers of each. Opting for the highest level of encryption and firewall protection will of course increase the amount of time it takes to store and retrieve the data.
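The comparison figures for tape, hard disks, and solid-state drives can be captured in a small lookup table, which makes the trade-offs easy to query. This is only an illustration. The field names and the `pick_media` rule are hypothetical, and the sketch conservatively uses the slowest access time and the shortest persistence quoted for each medium.

```python
# Figures from the comparison above; worst-case values are used for ranges.
MEDIA = {
    "magnetic tape": {
        "worst_access_s": 60,        # 10-60 seconds
        "capacity_tb": 12,
        "min_persistence_years": 10, # 10-30 years
        "write_cycles": None,        # indefinite
    },
    "hard-disk drive": {
        "worst_access_s": 7e-3,      # 7 milliseconds
        "capacity_tb": 8,
        "min_persistence_years": 3,  # 3-6 years
        "write_cycles": None,        # indefinite
    },
    "solid-state drive": {
        "worst_access_s": 1000e-9,   # 50 ns read, 1,000 ns write
        "capacity_tb": 2,
        "min_persistence_years": 8,  # 8-10 years
        "write_cycles": 1000,
    },
}

def pick_media(max_access_s, min_years):
    """Hypothetical rule: list media that are fast enough and last long enough."""
    return [
        name
        for name, spec in MEDIA.items()
        if spec["worst_access_s"] <= max_access_s
        and spec["min_persistence_years"] >= min_years
    ]

print(pick_media(max_access_s=0.01, min_years=5))   # ['solid-state drive']
print(pick_media(max_access_s=3600, min_years=10))  # ['magnetic tape']
print(pick_media(max_access_s=1, min_years=1))      # ['hard-disk drive', 'solid-state drive']
```

The second query shows why tape remains attractive for archives: it is the only medium listed whose quoted persistence reaches a decade even at the low end of its range.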

Magnetic Storage vs. Solid State

Hard-disk drives and magnetic tape store data by magnetizing particles that coat their surfaces. The amount of data that can be stored in a given space, known as the density, is a function of the size of the smallest magnetized area that the recording head can create. Perpendicular recording can store about 3 gigabits per square millimeter. Newer drives based on heat-assisted magnetic recording and microwave-assisted magnetic recording boast even higher densities.

Flash memory in solid-state drives uses a single transistor for each bit. Data centers are replacing HDDs with SSDs, which cost four to five times as much but are several orders of magnitude faster. On the outside, a solid-state drive may look similar to an HDD, but inside you’ll find a printed circuit board studded with flash memory chips.
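Two of the numbers quoted here can be checked against the access times and capacities listed earlier in this section. The sketch below is back-of-the-envelope arithmetic only. It assumes decimal terabytes and treats the quoted access times as directly comparable, which glosses over differences in how drives are benchmarked.

```python
import math

# Access times from the comparison earlier in this section.
HDD_ACCESS_S = 7e-3       # 7 milliseconds
SSD_READ_S = 50e-9        # 50 nanoseconds
SSD_WRITE_S = 1000e-9     # 1,000 nanoseconds

read_speedup = HDD_ACCESS_S / SSD_READ_S
write_speedup = HDD_ACCESS_S / SSD_WRITE_S
read_orders = math.log10(read_speedup)
write_orders = math.log10(write_speedup)

# Recording area needed for an 8-terabyte drive at 3 gigabits per mm^2.
DENSITY_BITS_PER_MM2 = 3e9
capacity_bits = 8e12 * 8  # assumes 1 TB = 10**12 bytes
area_mm2 = capacity_bits / DENSITY_BITS_PER_MM2
area_cm2 = area_mm2 / 100

print(f"reads:  {read_speedup:,.0f}x faster ({read_orders:.1f} orders of magnitude)")
print(f"writes: {write_speedup:,.0f}x faster ({write_orders:.1f} orders of magnitude)")
print(f"recording area for 8 TB: about {area_cm2:.0f} cm^2")
```

On these figures a solid-state drive is roughly five orders of magnitude faster for reads and nearly four for writes, and an 8-terabyte hard disk needs a little over 200 square centimeters of recording surface.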

About the Author

Barry M. Lunt is a professor of information technology at Brigham Young University, in Provo, Utah.