The University of Washington has announced that it will receive five years of funding—$18.5 million in total—from the National Science Foundation to establish a new center for neural engineering on the Seattle campus. San Diego State University and MIT, among others, will partner in a fresh push to give the human brain control over computers and robotic limbs.

Much of the foundation has already been laid with early animal research that looked at how the brain initiates movement in the muscles and then re-routed these intentions to external machines. But brain-computer interface (BCI) technology remains largely out of reach for the motor-impaired human patients who are the most likely to benefit.

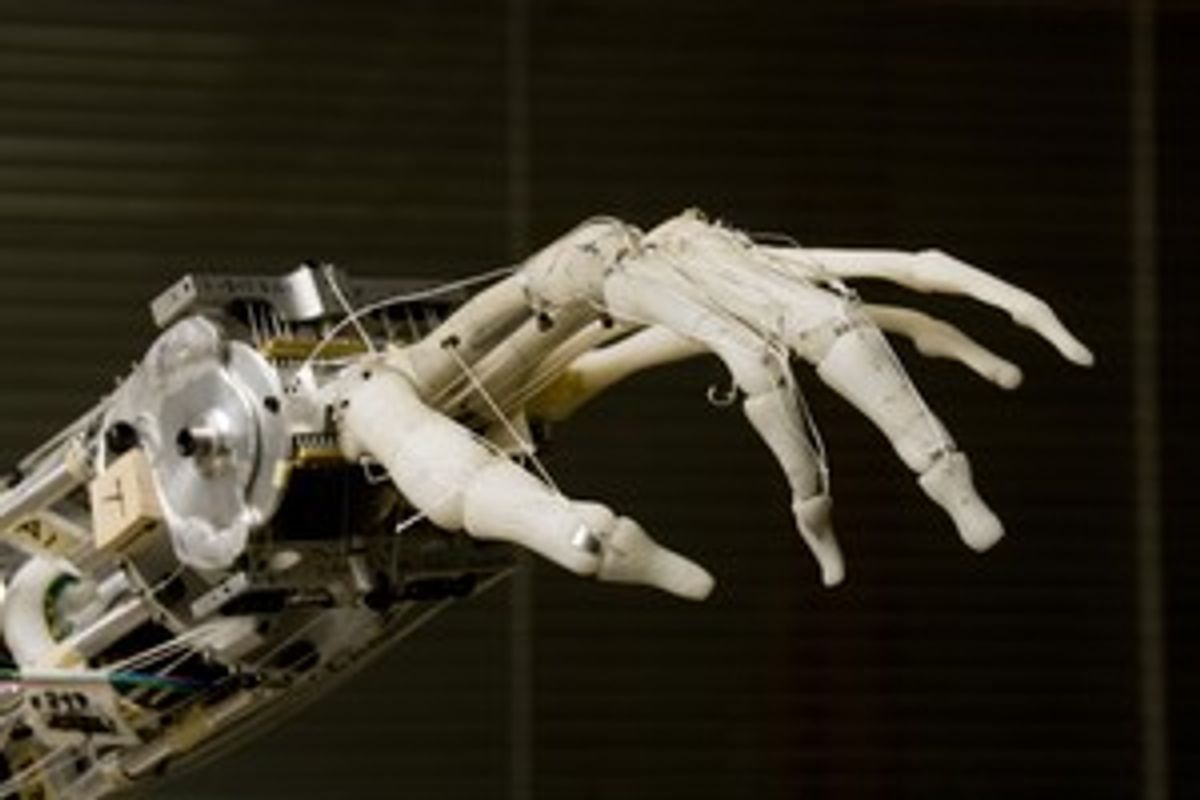

The director of the new facility, Yoky Matsuoka, is putting few limits on what kind of work will go on at the Engineering Research Center for Sensorimotor Neural Engineering, or CSNE, as they're calling it. Matsuoka, a 2007 MacArthur fellow and engineering professor at UW, has spent a large chunk of her career developing a robotic human hand prosthesis. In her work, she seeks to replicate human physiology as closely as possible.

But past work may provide few clues about what to expect from the new facility. Everything seems to be on the table: devices that use signals from the brain and nerves, whether collected non-invasively with electrodes on the scalp and muscles or recorded directly inside the brain from the chatter of single cells. I asked Matsuoka to comment on the future of neural prosthetics and to predict which techniques are likely to dominate. She was hesitant to rule anything out.

"Which way should we be going? It's not about what's going to be the ultimate one. I think all of them are going to have their place. It's like contact lenses versus glasses," she says.

Recording single cells provides the most precise information about the brain's intentions, but it also requires surgery, which may not be an option for some patients. Brain-computer interfaces have the potential to treat an incredible range of disorders. It may be wise to keep the solutions as diverse as the problems.

Image: A prosthetic hand developed by Yoky Matsuoka. Credit: University of Washington