A chip made by researchers at IMEC in Belgium uses brain-inspired circuits to compose melodies. The prototype neuromorphic chip learns the rules of musical composition by detecting patterns in the songs it’s exposed to. It then creates its own song in the same style. It’s an early demo from a project to develop low-power, general-purpose learning accelerators that could help tailor medical sensors to their wearers and enable personal electronics to learn their users’ patterns of behavior.

Today’s connected devices don’t have much smarts on board—instead they send data into the cloud for analysis by remote servers, where energy use and cooling costs are not at a premium, says Praveen Raghavan, who leads technology development for neuromorphic computation at IMEC. The IMEC team wants to change this. “The whole objective is to make artificial intelligence more compact, and bring it closer to the user,” he says. That means making compact, low-power dedicated learning chips. “We want to be as cost effective as possible,” he says.

The chip tune released by IMEC definitely sounds derivative. The composer has made a few odd note selections, but nothing that could be called avant-garde; it’s in the mold of a certain strain of Western classical music. Indeed, the 30-second tune evokes the simple melodies beginning musicians practice over and over. Two bars are very close to a riff on the chromatic scale; in the last bar, it resolves on a note that feels pleasing and expected.

For the chip to generate this tune, it was sequentially loaded with songs in the same time signature (which specifies how many beats are in each bar of music) and style. If exposed to a broad range of rhythms and styles, it wouldn’t have been able to discern the patterns at work. Raghavan says the prototype was taught using old Belgian and French flute minuets. Based on this, the chip learned the rules at play and then applied them. If the inputs are switched to a different time signature, it will start to learn that one, says Raghavan.

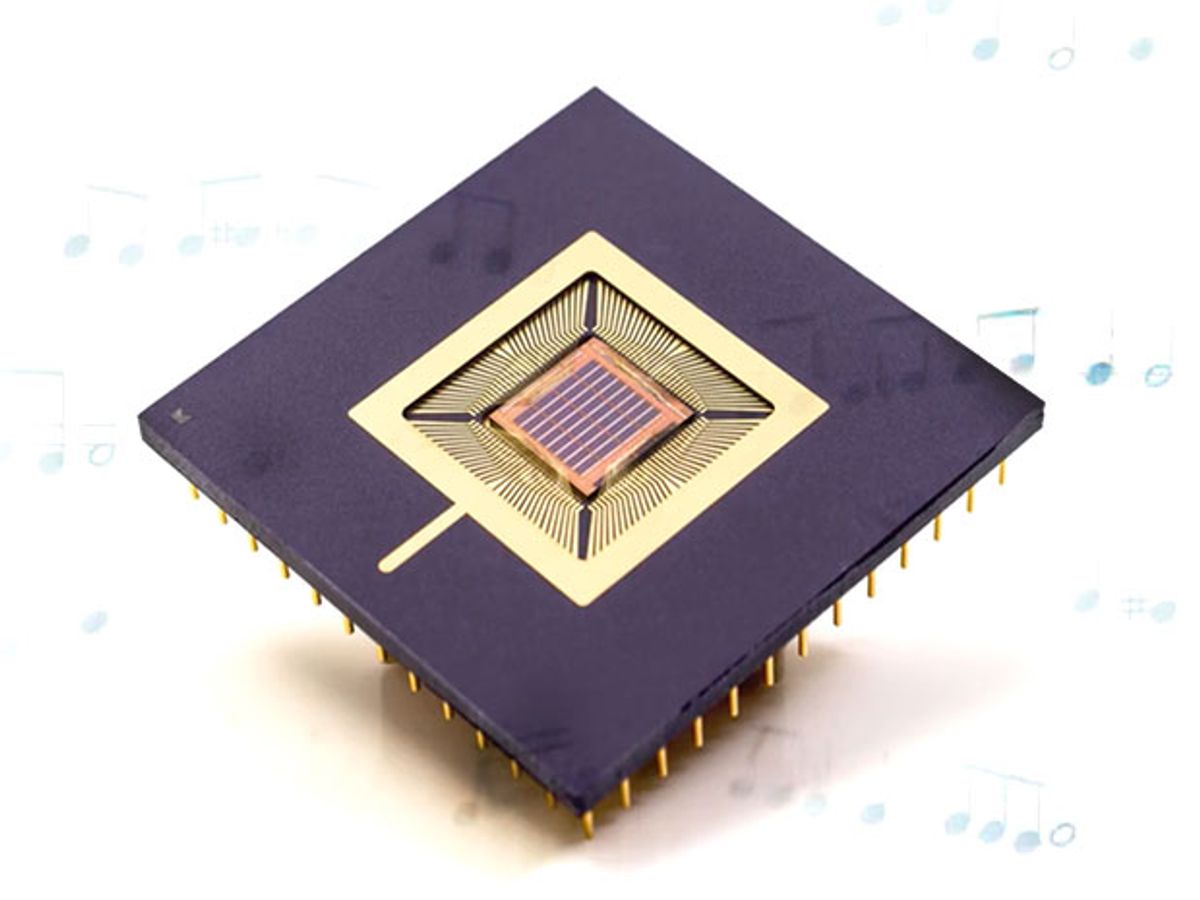

The prototype neuromorphic chip uses a one-megabit bank of resistive RAM built on top of a processor. The memory cells are filaments of an oxide material whose electrical resistance varies along a continuum of states in response to different applied voltages. When the chip “hears” two particular notes one after the other in multiple training songs, it detects a pattern. The more often the notes occur together, the stronger the association between them, and the stronger the connection between the two memory cells that store them. In turn, it becomes more likely the chip will put those two notes in sequence when it switches from learning to composing mode. This is analogous to the way connections between different neurons in the brain strengthen or weaken as we learn. The system is hierarchical, and can spot and learn broader patterns, too. “It starts to see patterns in the music in the short term and long term,” says Raghavan.
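
For readers who think in code, the learn-then-compose idea can be loosely imitated in software. The Python sketch below is only an analogy and not IMEC's design: it assumes a simple table of note-to-note counts standing in for the strength of connections between memory cells, and it ignores the hierarchical, longer-range patterns Raghavan describes. The function names `learn_transitions` and `compose`, and the toy training songs, are invented for illustration.

```python
import random
from collections import defaultdict


def learn_transitions(songs):
    """Count how often each note is directly followed by each other note.

    The counts play the role of connection strengths (an assumption made
    for this sketch; the chip stores them as analog resistance states).
    """
    weights = defaultdict(lambda: defaultdict(int))
    for song in songs:
        for current, following in zip(song, song[1:]):
            # Each repeated pair strengthens the link between the two notes.
            weights[current][following] += 1
    return weights


def compose(weights, start, length, seed=None):
    """Build a melody by repeatedly picking a next note.

    Stronger links are proportionally more likely to be followed.
    """
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = weights.get(melody[-1])
        if not options:
            break  # this note was never followed by anything in training
        notes = list(options)
        strengths = [options[note] for note in notes]
        melody.append(rng.choices(notes, weights=strengths, k=1)[0])
    return melody


# Toy training set (hypothetical, not the flute minuets used by IMEC).
training_songs = [
    ["C", "D", "E", "C"],
    ["C", "D", "E", "F", "G"],
    ["E", "F", "G", "E", "C"],
]

weights = learn_transitions(training_songs)
melody = compose(weights, start="C", length=8, seed=0)
print(melody)
```

Because C is always followed by D in this toy training set, every generated melody that starts on C continues with D, which mirrors how a frequently heard pair becomes the chip's likely choice when it switches to composing.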

Raghavan would not say how much power the prototype used—he says they overdesigned it so that it could take on a variety of tasks, building large memory cells that are responsive to a range of voltages. The music task doesn’t make use of the entire memory bank, either—but future, more complex tasks likely will. Now that they’ve studied the hardware’s capabilities, he says, they’ll optimize the design. Raghavan says it’s already flexible. The group has also trained the system with text, teaching it to autocomplete sentences.

Raghavan says these systems could be used as learning accelerators in personal electronics, or combined with other hardware designed to run neural networks. One application he has in mind is a heart-rate monitor for a smart watch that would be able to adjust to the user’s style to get more accurate readings. “Some people wear it tight, some loose, some higher or lower on the arm,” he says. All this can affect the accuracy of the measurements.

Katherine Bourzac is a freelance journalist based in San Francisco, Calif. She writes about materials science, nanotechnology, energy, computing, and medicine—and about how all these fields overlap. Bourzac is a contributing editor at Technology Review and a contributor at Chemical & Engineering News; her work can also be found in Nature and Scientific American. She serves on the board of the Northern California chapter of the Society of Professional Journalists.