These days, people reach for their smartphone after stumbling out of bed in the morning, but many see just a blurry mess instead of an alarm, messages, or pictures. A new vision-correcting display would pre-distort a digital screen so their imperfect vision renders it crystal clear—without glasses.

Fu-Chung Huang started working on vision-correcting displays in 2011 as a graduate student at the University of California, Berkeley (he's now at Microsoft Corp.). “Photoshop can deblur a photo,” he says, “so why can’t I correct the visual blur on a display?”

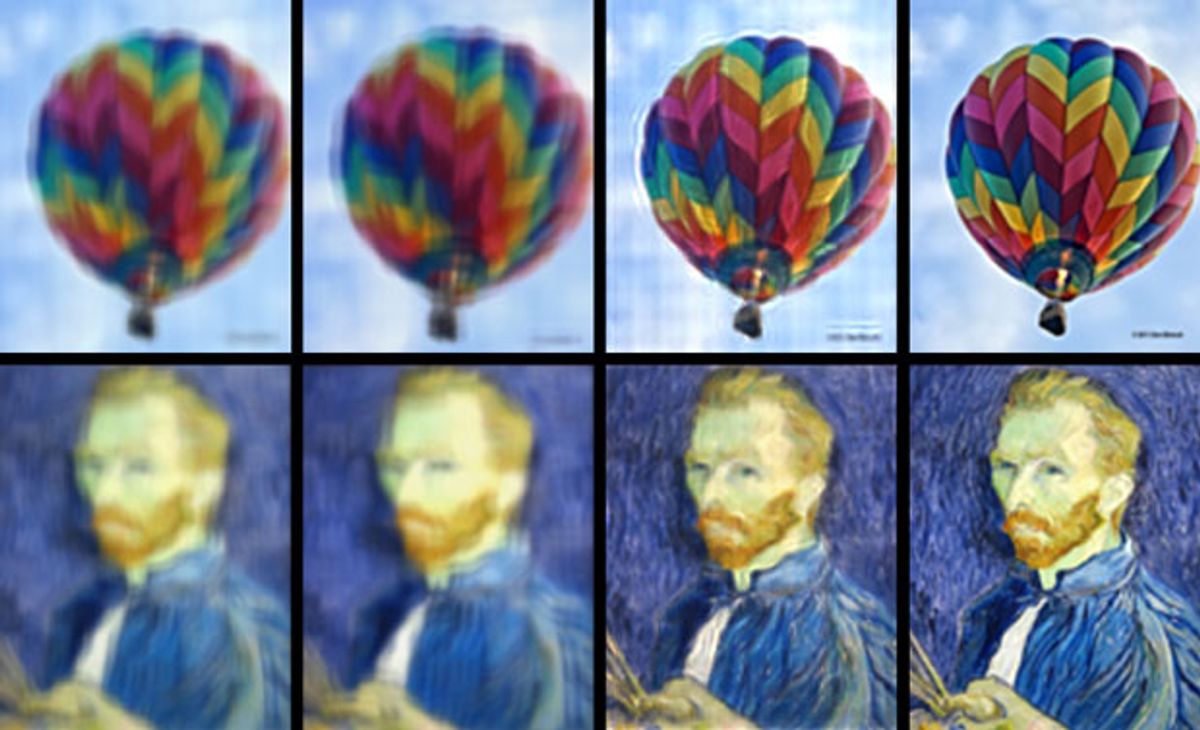

Earlier attempts at a vision-corrected view ran into quality problems. Running an image-processing algorithm on a normal 2-D screen, or layering two screens on top of each other, produced low image contrast, while a light-field display, which projects multiple images from different perspectives, suffered from low resolution. Huang and his collaborators at UC Berkeley and the Massachusetts Institute of Technology realized they’d have to fine-tune both a specialized display and an algorithm to make the system work.

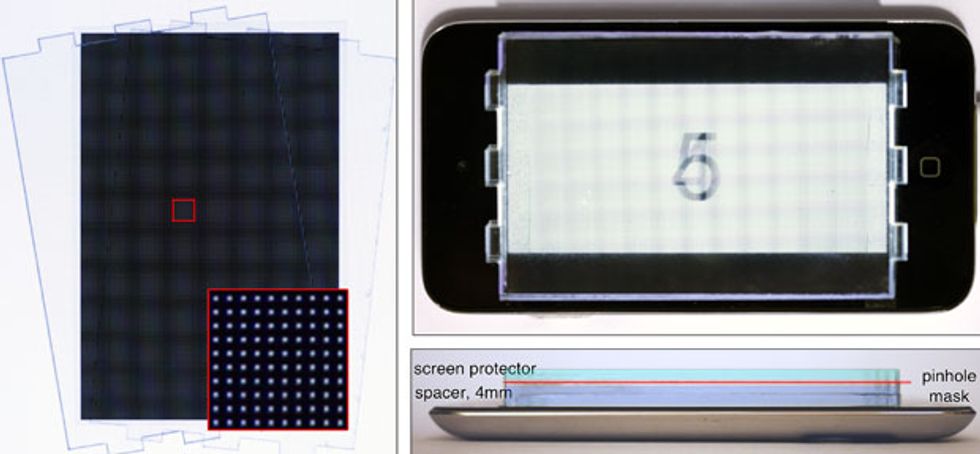

Huang constructed a simple prototype: an ordinary iPod whose screen was covered with a clear film sandwiching a thin grid of pinholes. From any given point in space, only some of the pixels are visible through the pinholes, so the algorithm can choose which pixels reach different parts of the eye by controlling where they appear on the screen. This can compensate for a viewer's incorrect focus, for instance by presenting pixels as if the screen were half the distance away for a nearsighted viewer, or by varying their distance if the viewer's field of vision is irregular. Similar technology is used to show a 3-D effect on displays like the Nintendo 3DS, where each eye sees a slightly different view.
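The pinhole geometry can be illustrated with a few lines of similar-triangle arithmetic. The sketch below is not the researchers' algorithm. It only shows why different points on the pupil see different pixels behind the same pinhole. The function names and all of the numbers (gap, viewing distance, pixel pitch) are hypothetical values chosen for illustration.

```python
import math


def visible_screen_position(pinhole_x_mm, pupil_x_mm, gap_mm, eye_distance_mm):
    """Return where on the screen a ray from a pupil point lands.

    Assumed (hypothetical) layout: the pinhole mask sits gap_mm in front of
    the screen, and the pupil sits eye_distance_mm in front of the mask.
    A straight ray from the pupil point through the pinhole continues on to
    the screen, so similar triangles give its landing position.
    """
    return pinhole_x_mm + (pinhole_x_mm - pupil_x_mm) * gap_mm / eye_distance_mm


def visible_pixel_index(screen_x_mm, pixel_pitch_mm):
    """Map a position on the screen to a pixel column index."""
    return math.floor(screen_x_mm / pixel_pitch_mm)


if __name__ == "__main__":
    # Illustrative numbers only, not measurements of the prototype.
    gap, distance, pitch = 5.0, 250.0, 0.08
    for pupil_x in (-2.0, 0.0, 2.0):  # three points across the pupil
        x = visible_screen_position(10.0, pupil_x, gap, distance)
        print(pupil_x, round(x, 3), visible_pixel_index(x, pitch))
```

Because each point on the pupil sees a different pixel through the same pinhole, software that controls which pixel shows which value can, in principle, control the light arriving at different parts of the eye.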

Future incarnations of the display might use tiny lenses or a more sophisticated barrier to make the image brighter and sharper, but for now the researchers chose to keep it simple with an array that could easily be added as a screen cover to existing devices.

“The overall cost is less than $10,” says Huang. “I can build the thing in a few minutes.” (He has also posted instructions online.)

To test the algorithm’s compensation for different eyesight problems, the researchers turned a DSLR camera (with a lens similar in shape to the human eye) on the display. Focusing the camera too far away simulated farsightedness, and the researchers could tell whether the display was working by examining the pictures. To test other, more complicated visual problems, the researchers ran simulations and found that their algorithm was able to make a clear picture even for irregular eye shapes that current glasses cannot correct.
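To get a feel for how much blur a misfocused camera introduces, a standard small-angle approximation from optics says the angular size of the blur disk is roughly the aperture diameter multiplied by the focus error in diopters. The sketch below applies that rule. It is a back-of-the-envelope illustration, not the researchers' test procedure, and the distances and aperture used are assumed values.

```python
def defocus_diopters(display_distance_m, focus_distance_m):
    """Focus error in diopters between where the display is and where
    the camera (or eye) is actually focused. Distances are in meters."""
    return abs(1.0 / display_distance_m - 1.0 / focus_distance_m)


def blur_angle_rad(aperture_diameter_m, display_distance_m, focus_distance_m):
    """Approximate angular diameter of the blur disk, in radians.

    Small-angle approximation: blur angle ~ aperture diameter x defocus.
    """
    return aperture_diameter_m * defocus_diopters(display_distance_m, focus_distance_m)


if __name__ == "__main__":
    # Assumed setup: display 0.4 m away, camera focused at 0.8 m, 6 mm aperture.
    print(defocus_diopters(0.4, 0.8))
    print(blur_angle_rad(0.006, 0.4, 0.8))
```

Focusing farther away than the display, as in this assumed setup, mimics an eye that cannot bring nearby objects into focus.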

Although the group didn't run a human study, Huang tested the algorithm out with his own nearsighted vision. “It requires precise calibration between the eye and the display,” says Huang, “and it took some time to find the sweet spot for my eye.” But with eye-tracking technology, like that on the new Amazon Fire Phone, the next version could compensate for the viewer’s movement and adjust the picture to stay in focus.

Gordon Wetzstein, one of the project's MIT collaborators, focuses his research on compression algorithms for 2-D and 3-D displays—which he believes are key to unlocking creative new uses for the technology.

“I think this is what people need to spend more effort on,” says Wetzstein. “Finding new applications like vision correction, new user interfaces, heads-up displays for augmented virtual reality—these kinds of things are very hot. Finding the right killer app is something nobody’s really solved yet.”
